Happy bloody new year.

In my last post, I mentioned that I was trying some new things. My long-gestating fan controller (Windy) finally taking physical form is exciting (if maybe a little slow), but I've got some other things on my plate that I'd like to share before they go too far out of mind.

Infosectacular!

For a while, I've been thinking about making a professional move from embedded engineering to information security. I've been in the field as a hobbyist since 1999 or so (I have a post brewing about my path that I'll publish later), and it's really where my heart lies. I have a lot of related experience, but I wanted to make sure that I'm up on the skills that would actually matter for a career.

I've been following a number of infosec folks on Twitter for the past year or so. One of them, Tony Robinson (aka @da_667), recently wrote a book (Building Virtual Machine Labs: A Hands-On Guide) on setting up a basic malware analysis lab with a variety of virtualization systems available to home users. I had some spare PC hardware and about 10 years of virtualization experience with VMware Fusion (easy) and Xen (uh... somewhat less easy). In addition, I recently set up a Fibre Channel SAN for the benefit of some of my bigger vintage machines (thanks, FreeNAS!) and figured this might be a good chance to really exercise it.

Some specifics

The hardware

To start with, I had most of a spare PC left over from a PC overhaul about a year ago: a Gigabyte GA-EX58-UD5 motherboard with a six-core Xeon W3690 and 24 GB of RAM. It's a little old, but the triple-channel memory on the LGA1366 platform really lets it haul, so I figured it would be sufficient. I threw it into a spare case and got started. I set an additional constraint for myself: any expansion cards must be low-profile so I could move to a 2U rack case later.

I also had a bunch of additional 4Gbps Fibre Channel cards left over from the SAN build; ever since 16Gbps Fibre Channel hit the market, it looks like datacenters have been dumping 4Gbps FC gear, so PCIe and PCI-X cards are about $5 each and switches are about $20 on eBay right now. Since I was (am?) still a bit of a Fibre Channel novice, I thought it might be a good chance to see how it interacts with modern, real-world situations rather than being mass storage for a bunch of aging SPARC and Alpha machines. And, bonus, they're low-profile (and spare low-profile brackets are readily available for cheap on eBay as well).

The big downside of the board was that there's no built-in video. It does, however, have two PCI slots that aren't likely to be used for much on the VM box, so I don't have to sacrifice a PCIe slot for video. I had a nice Matrox VGA card from 1997 (one of these), but that a) wasn't low-profile and b) seemed like a bit of a waste for something that was only ever going to provide a system console, so I got a low-profile Rage XL PCI card (a low-end adaptation of the old Rage 64 targeted at basic needs like servers). It didn't come with a low-profile bracket, but fortunately low-profile DE-socket brackets are easy to find, hopefully with the right alignment. I'll have to take care of that at some point.

NAS hardware

In case you're curious, my FreeNAS server was a dual Opteron 248 server (Tyan K8S motherboard, also 24 GB RAM) which served as my main server rig from about 2006 (when it was new) to about 2014. FreeNAS uses ZFS as a storage backend, even for Fibre Channel targets (the LUNs are just zvols), so a healthy chunk of RAM makes even a slower system run pretty nicely. It had 4 PCI-X slots, which let me populate it with two dual-port FC adapters (QLA2462) and two 8-port SATA controllers (Supermicro AOC-SAT2-MV8). I used SATA controllers instead of SAS because the best SAS cards available for PCI-X (LSI1068) have a hard addressing limit for SATA disks that only allows you to use the first 2 TB of a SATA drive, and since I had populated the array with 6 TB SATA drives, that was a non-starter; the Marvell chipset used in the Supermicro card allowed me to address the entire disk. It was not without problems, though (more later).
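To put numbers on that 2 TB ceiling: limits of that size usually fall out of 32-bit sector addresses multiplied by 512-byte sectors. The sketch below assumes that is the mechanism here (I haven't confirmed it against the LSI1068 firmware) and works out how much of a 6 TB drive would stay reachable.

```python
# Rough arithmetic behind a "2 TB" disk ceiling, ASSUMING the limit comes
# from 32-bit sector addresses and 512-byte logical sectors.
SECTOR_BYTES = 512  # assumed logical sector size
LBA_BITS = 32       # assumed width of the sector address


def addressable_bytes(lba_bits: int = LBA_BITS, sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest byte count reachable with lba_bits-wide sector numbers."""
    return (2 ** lba_bits) * sector_bytes


def usable_fraction(disk_bytes: int) -> float:
    """Share of a disk that stays reachable under the addressing limit."""
    return min(1.0, addressable_bytes() / disk_bytes)


if __name__ == "__main__":
    limit = addressable_bytes()
    print(limit)                     # 2199023255552
    print(limit / 2**40, "TiB")      # 2.0 TiB
    print(f"{limit / 1e12:.2f} TB")  # 2.20 TB
    six_tb = 6 * 10**12              # a "6 TB" drive in decimal bytes
    print(f"{usable_fraction(six_tb):.0%} of a 6 TB drive")  # 37% of a 6 TB drive
```

In other words, under that assumption roughly two thirds of each 6 TB drive would have been stranded.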

The software (and, subsequently, more hardware)

So much for the hardware. Having already used VMware Fusion extensively, and wanting to stretch my Fibre Channel muscles some, I decided this would be a good opportunity to learn how to use something heavier-duty. I have a fair amount of experience with Xen as well, and my main home server is running on Xen so I can spawn some VMs when I want to, but a) the book doesn't really talk much about Xen, b) it's pretty fiddly, and c) I wanted to learn something new. I decided to go with VMware ESXi, since it's pretty common in the industry and has a decent free tier.

The book did caution that ESXi is infamous for being picky about hardware, and it's not lying. The motherboard I wanted to use had two Realtek Ethernet interfaces, which are unsupported on ESXi 6.5. If you don't have a supported network interface, ESXi doesn't even install. There are ways of shoehorning the drivers into the installer, but I've had enough unfavorable experiences with Realtek chips locking up under load in the past (there's probably a reason they're no longer supported) that it seemed best to just Do The Right Thing and get a decent card. There's an x1 PCIe slot on the board with VERY little rear clearance, which was perfect for Intel's tiny i210-T1 card (which I could get for $20, delivered same-day from Amazon). That only gave one port, but that was perfect for the management port so I could install; I ordered a 4-port i350-T4 from eBay for about $50 for the VM networks and went forward with the install while I waited. Both cards are low-profile and came with the low-profile brackets.

Gettin' it done

The install

After a few false starts, I finally had a baseline hardware configuration that would work. I hooked the VM machine up to my FC switch, carved a 20 GB chunk (boot) and a 500 GB chunk (VMs) out of the SAN volume for VMware, and went to install from USB.
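Since the FreeNAS LUNs are just zvols, carving out those two chunks amounts to creating two ZFS volumes. Here's a minimal sketch that builds (and, only if asked, runs) the equivalent `zfs create -V` commands. The pool and dataset names are hypothetical, and exporting the zvols as Fibre Channel LUNs is a separate step in FreeNAS's target configuration that this doesn't cover.

```python
import shlex
import subprocess


def zvol_create_cmd(dataset: str, size: str, sparse: bool = False) -> list:
    """Build a `zfs create -V` command for a zvol (dataset names are hypothetical)."""
    cmd = ["zfs", "create"]
    if sparse:
        cmd.append("-s")  # thin-provisioned: don't reserve the full size up front
    cmd += ["-V", size, dataset]
    return cmd


def create_zvol(dataset: str, size: str, sparse: bool = False, dry_run: bool = True) -> list:
    """Print the command by default; only execute it when dry_run is False."""
    cmd = zvol_create_cmd(dataset, size, sparse)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)  # needs root on a ZFS host
    return cmd


if __name__ == "__main__":
    create_zvol("tank/esxi-boot", "20G")              # zfs create -V 20G tank/esxi-boot
    create_zvol("tank/esxi-vms", "500G", sparse=True)  # zfs create -s -V 500G tank/esxi-vms
```

Whether to make the big VM volume sparse is a judgment call: thin provisioning saves space up front, but the pool can then be overcommitted.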

Burning the ESXi installer to a USB drive wasn't a simple dd operation like most Linux distros use now; I needed to use unetbootin on OS X (Rufus should also have worked on Windows) to make the USB drive bootable. After discovering the above-mentioned restriction on Ethernet and throwing the Intel card into the machine, I was able to get it installed on the 20 GB LUN on the SAN. It looked like everything was ready to go!

Trouble

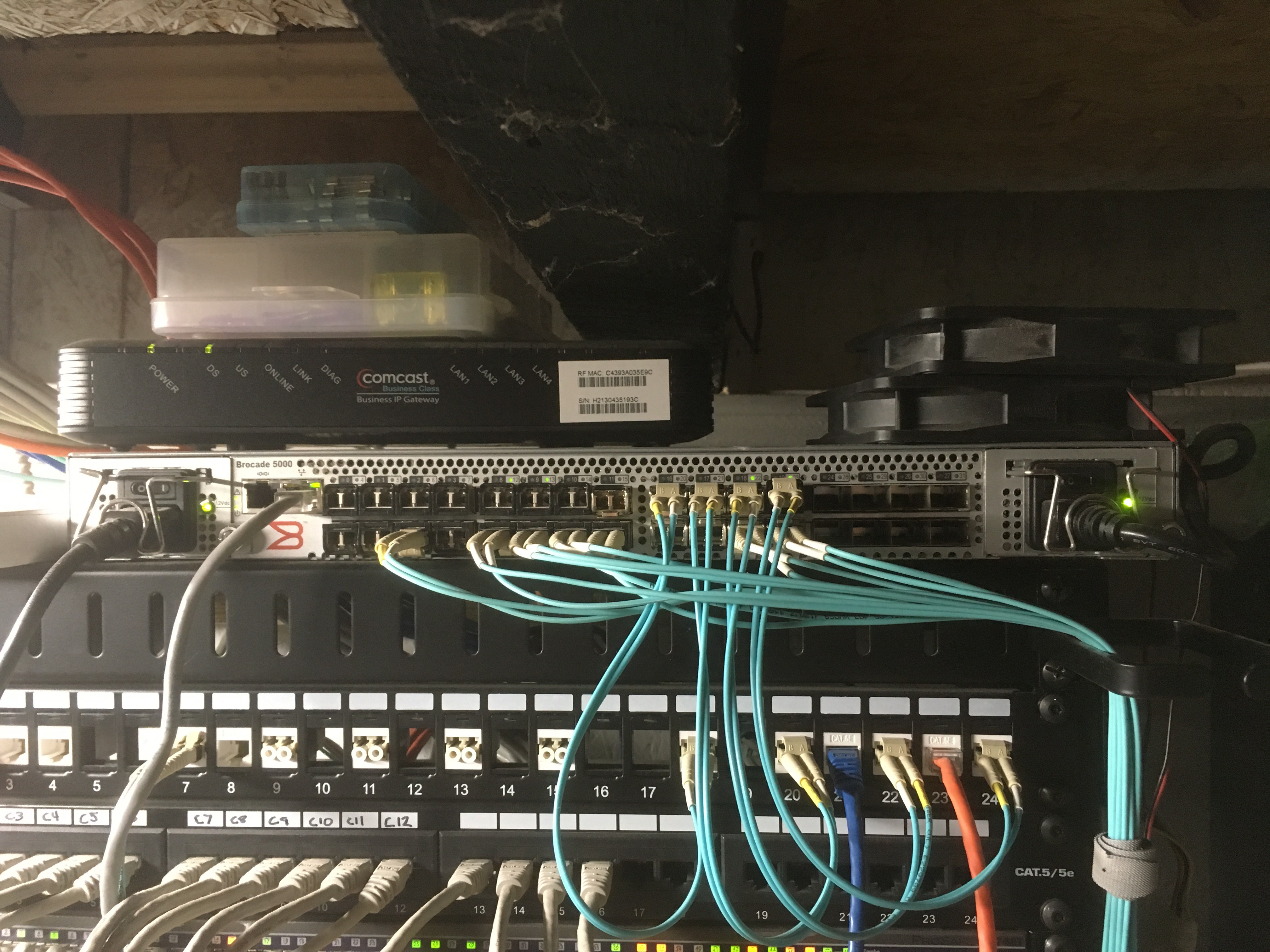

Then I tried to boot the installed drive. I discovered some... things... about Fibre Channel. First, it turns out the way FreeNAS registered itself on the FC network (the details of which are really hairy, but involve an actual name server, hosted by the switch fabric, that translates 24-bit port IDs to 64-bit World-Wide Names, the FC equivalent of a MAC address) was not the One True Way. Higher-level operating systems like Windows and the ESXi installer could see the targets, but I couldn't get the boot code for any of my QLogic adapters (whether BIOS, EFI, OpenBoot on SPARC, or the Alpha firmware console) to see them properly. This was a problem that had plagued me for years, and I always figured I was misconfiguring something on the switch, so I learned how to do zoning on my Brocade switch (something I needed to learn anyway).
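To make the addressing a bit more concrete: a fabric-assigned 24-bit port ID splits into domain, area and port bytes, the name server maps those IDs to 64-bit WWNs, and WWN-based zoning limits which ports a given initiator gets told about. The toy model below is a simplified sketch of that idea, not how a Brocade switch actually implements it, and every WWN and port ID in it is made up.

```python
"""Toy model of Fibre Channel fabric addressing and WWN-based zoning.

Simplified sketch: real fabrics do this in the switch's name server,
and every WWN and port ID below is made up for illustration.
"""
from __future__ import annotations


def split_fc_id(fc_id: int) -> tuple[int, int, int]:
    """Split a 24-bit FC port ID into its (domain, area, port) bytes."""
    if not 0 <= fc_id <= 0xFFFFFF:
        raise ValueError("FC port IDs are 24 bits wide")
    return (fc_id >> 16) & 0xFF, (fc_id >> 8) & 0xFF, fc_id & 0xFF


def format_wwn(wwn: int) -> str:
    """Render a 64-bit World-Wide Name in the usual colon-separated hex."""
    if not 0 <= wwn < 1 << 64:
        raise ValueError("WWNs are 64 bits wide")
    return ":".join(f"{b:02x}" for b in wwn.to_bytes(8, "big"))


class ToyNameServer:
    """Maps fabric port IDs to WWPNs and filters lookups by zone membership."""

    def __init__(self) -> None:
        self.registry: dict[int, int] = {}    # 24-bit port ID -> 64-bit WWPN
        self.zones: dict[str, set[int]] = {}  # zone name -> member WWPNs

    def register(self, fc_id: int, wwpn: int) -> None:
        """Record a port logging in to the fabric."""
        split_fc_id(fc_id)  # validates the 24-bit range
        self.registry[fc_id] = wwpn

    def add_zone(self, name: str, *members: int) -> None:
        self.zones[name] = set(members)

    def visible_to(self, initiator: int) -> dict[int, int]:
        """Return only the ports that share at least one zone with the initiator."""
        peers: set[int] = set()
        for members in self.zones.values():
            if initiator in members:
                peers |= members
        peers.discard(initiator)
        return {fid: w for fid, w in self.registry.items() if w in peers}


if __name__ == "__main__":
    ns = ToyNameServer()
    host, nas, other = 0x2100001B32000001, 0x2100001B32000002, 0x2100001B32000003
    ns.register(0x010100, host)
    ns.register(0x010200, nas)
    ns.register(0x010300, other)
    ns.add_zone("esxi_to_nas", host, nas)
    for fid, wwpn in ns.visible_to(host).items():
        print(split_fc_id(fid), format_wwn(wwpn))  # (1, 2, 0) 21:00:00:1b:32:00:00:02
```

In this toy, a port that isn't in any zone sees nothing at all; real switches let you choose the default-zoning behavior.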

That didn't do the trick, but while playing around, I found that I could boot if the ESXi machine was plugged directly into one of the ports on the FreeNAS box. A bit of googling turned up a somewhat-recent FreeBSD forum post from someone who had hit a similar problem and gotten it fixed, and it turned out that the fix had made it into FreeNAS 11.1. My FreeNAS install was still running 9.10.

To make the long story behind the weird versioning short: FreeNAS 9 was based on FreeBSD 9, and FreeNAS 10 was based on FreeBSD 10, but FreeNAS 10 also made a lot of changes to the entire system that were Not Great, so they pretty much threw it out, rebased the 9.x system onto FreeBSD 10, and called it 9.10. Now FreeNAS 11 is 9.10, but on FreeBSD 11.

Anyway, the update to 11.1 fixed the problem with Fibre Channel switches (well, mostly; I still haven't been able to make my Solaris boxes recognize it, and I haven't tried with the Alphas yet), but introduced a new problem: under any kind of significant load, the system would just completely go out to lunch. I took a look at the console and saw that a bunch of the drivers were barfing in a way that made it look like they were losing interrupts. The maintainers labeled it WONTFIX (I don't blame them; there's no commercial incentive to keep supporting 12-year-old hardware), so I accelerated my nearly-complete plan to upgrade to a (slightly) newer motherboard/SAS controller combo (Tyan S7012/SAS9211, if you're curious). After a long comedy of errors that was ultimately solved by a BIOS update, I'm stable again.

Well.

My SAN is.

Install Part 2: Electric Boogaloo

So I finished the install well before I had the SAN stabilized, but hadn't yet realized it was being flaky. It's worth noting that while ESXi sort of holds on to guests' I/O transactions when the backing store goes down, most guest OSes don't like it when their I/O doesn't complete within a minute or two, and Bad Things happen. 3/10, would not recommend. Have a stable disk before you start running things.
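On a Linux guest, one place to see how long the kernel will wait on a stalled disk before it starts failing I/O is the per-device SCSI timeout in sysfs (commonly 30 seconds by default). The sketch below only reads those values. It assumes a Linux guest with the standard `/sys/block/<dev>/device/timeout` files, and the 60-second threshold is an arbitrary example, not a recommendation. Raising a timeout is a matter of writing a larger number back to the same file as root.

```python
from __future__ import annotations

import os


def scsi_disk_timeouts(sys_block: str = "/sys/block") -> dict[str, int]:
    """Map each block device that has a SCSI timeout file to its timeout in seconds."""
    timeouts: dict[str, int] = {}
    for dev in sorted(os.listdir(sys_block)):
        path = os.path.join(sys_block, dev, "device", "timeout")
        if os.path.isfile(path):  # loop/ram devices have no such file, so skip them
            with open(path) as f:
                timeouts[dev] = int(f.read().strip())
    return timeouts


def short_timeouts(timeouts: dict[str, int], minimum: int = 60) -> list[str]:
    """Devices whose timeout is below `minimum` seconds (threshold is arbitrary)."""
    return [dev for dev, secs in timeouts.items() if secs < minimum]


if __name__ == "__main__":
    if os.path.isdir("/sys/block"):
        found = scsi_disk_timeouts()
        for dev, secs in found.items():
            print(f"{dev}: {secs}s")
        print("below threshold:", short_timeouts(found))
```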

Once ESXi was installed, I followed the book's advice for setting up the virtual networking to keep things nicely sandboxed. ESXi has a decently flexible networking model based on virtual switches and port groups. Some of the decisions (why are port groups separate from the switches?) make a lot more sense when you consider its purpose as an enterprise-grade, migratable, cluster-ready piece of software; I could see some of the networking being a little cumbersome for a home user, but I appreciated the long-term prospects.
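A rough way to picture ESXi's standard networking model: physical NICs are uplinks on a virtual switch, port groups hang off a vSwitch (optionally with a VLAN ID), and each VM NIC plugs into a port group. A vSwitch with no uplinks is what keeps a sandbox network from ever touching the physical LAN. The dataclasses below are a simplified, hypothetical model of that layering (not VMware's API), and the switch, port group and VM names are made up.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VSwitch:
    name: str
    uplinks: list[str] = field(default_factory=list)  # physical NICs, e.g. "vmnic0"


@dataclass
class PortGroup:
    name: str
    vswitch: VSwitch
    vlan_id: int = 0  # 0 = untagged in this sketch


@dataclass
class VirtualNic:
    vm: str
    port_group: PortGroup


def uplinks_for(nic: VirtualNic) -> list[str]:
    """Physical NICs this VM NIC's traffic could leave through."""
    return nic.port_group.vswitch.uplinks


def share_l2(a: VirtualNic, b: VirtualNic) -> bool:
    """Same vSwitch and same VLAN means the two NICs share a broadcast domain."""
    return (a.port_group.vswitch is b.port_group.vswitch
            and a.port_group.vlan_id == b.port_group.vlan_id)


if __name__ == "__main__":
    outside = VSwitch("vSwitch0", ["vmnic0"])  # has an uplink to the real network
    lab = VSwitch("vSwitch1")                  # no uplinks: internal only
    fw_wan = VirtualNic("firewall", PortGroup("WAN", outside))
    sandbox = PortGroup("Sandbox", lab)
    fw_lan = VirtualNic("firewall", sandbox)
    victim = VirtualNic("winxp", sandbox)
    print(uplinks_for(victim), share_l2(fw_lan, victim), share_l2(fw_wan, victim))
```

The only path out of the sandbox in this model is through the firewall VM, which has a NIC on both switches; that is the point of separating port groups from switches.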

Setting up the guest OSes was pretty standard for VMs. I have to say that the process made me really understand why people shell out good money for VMware; not only is the built-in web client pretty good (looks like I got lucky and started right when they finally nailed it), but the web-based remote console is also excellent (and doesn't require Java or Flash or an external VNC client, like a lot of remote KVM stuff), and it worked absolutely seamlessly with VMware Fusion, which I already had on my Macs. Seriously, using Fusion as a remote console for this stuff is like a dream. Having come from Xen, where even getting text consoles and the like to play nicely is a chore, I'd say it's easily worth the price of admission.

The Thrilling Denouement

I did a few things differently than the book recommended, partly because I wanted a challenge, and partly because I'm picky and ornery. Below are details about where I've deviated.

The Great Firewall

I prefer OpenBSD over the recommended pfSense (which is FreeBSD-based) for firewalls. For one thing, pf (the packet filter that FreeBSD also uses nowadays) is a core part of OpenBSD, where it was developed; FreeBSD's port has lagged behind in features. OpenBSD is (generally) quite secure by default, though I'm quite sure that pfSense does a good job of locking things down as well. FreeBSD is in general a much better performer than OpenBSD, especially when it comes to networking; if I were making decisions about a production system that had to handle tens or hundreds of Gb/s of traffic, I might think twice, but for my own sandbox, it's not a big issue. Most importantly, though, I'm more comfortable with OpenBSD, since I've been using it for my own router for well over a decade now (starting with one of these beasties, which I still have around here somewhere).

OpenBSD is an interesting operating system to use under virtualization. Its developers have had a, er, cantankerous relationship with... well, anything new for a long time. Virtualization is no exception. In recent years, though, they've started moving along; I think they finally got proper support for Xen blkfront disk devices in 6.1, which is pretty recent. Support for VMware paravirtualized devices has been there for longer, but it's not perfect; even the 64-bit version reports itself as 32-bit FreeBSD, for example, and the paravirtualized disk driver seems to drop the first write after boot, which is not great. This means that when installing, you need to "prime" it with a simple write to your target volume before it tries to write the boot block and partition table, or it won't work; if you have to do an fsck after boot because, say, your SAN went out to lunch, you need to run it twice. Everything else (including the paravirtualized network) seems to work great.

I set up the firewall without too much trouble after I figured out the quirks. To add to my misery, I decided to also add IPv6 to the networking setup suggested in the book. I already had IPv6 set up on my main network (my VM box is set up on its own port on the router to further sandbox things), so subnetting the /48 block I got through Hurricane Electric's free tunnel service was easy enough. I'm still working on getting DHCPv6 DUIDs to work just right, which isn't strictly necessary unless you want to be able to assign static addresses from a central server (which makes administration a lot easier than updating static addresses on each box).
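Carving a /48 into per-network /64s is exactly the kind of thing Python's standard `ipaddress` module handles well. For static DHCPv6 assignments, a DUID-LL (the DUID type built from a link-layer address: type 3, hardware type 1 for Ethernet, then the MAC) is a predictable identifier to key on. The sketch below shows both. `2001:db8::/48` is the IPv6 documentation prefix standing in for a real tunnel allocation, the MAC is made up, and whether a given DHCPv6 client actually sends a DUID-LL depends on its configuration (some default to other types, such as DUID-LLT, which embeds a timestamp).

```python
from __future__ import annotations

import ipaddress
from itertools import islice


def lab_subnets(prefix: str, count: int) -> list[ipaddress.IPv6Network]:
    """Return the first `count` /64 networks carved out of a larger prefix."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen > 64:
        raise ValueError("need a prefix of /64 or shorter")
    return list(islice(net.subnets(new_prefix=64), count))


def slash64_count(prefix: str) -> int:
    """How many /64 networks fit in the given prefix."""
    return 2 ** (64 - ipaddress.IPv6Network(prefix).prefixlen)


def duid_ll(mac: str) -> str:
    """Build a DUID-LL (type 3, hardware type 1 = Ethernet) from a MAC address."""
    octets = bytes(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 6-octet Ethernet MAC")
    duid = (3).to_bytes(2, "big") + (1).to_bytes(2, "big") + octets
    return ":".join(f"{b:02x}" for b in duid)


if __name__ == "__main__":
    for subnet in lab_subnets("2001:db8::/48", 3):
        print(subnet)                        # 2001:db8::/64, 2001:db8:0:1::/64, ...
    print(slash64_count("2001:db8::/48"))    # 65536
    print(duid_ll("00:0c:29:12:34:56"))      # 00:03:00:01:00:0c:29:12:34:56
```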

Just About Everything Else

Everything else aside from the Windows VMs was pretty easy. All the other VMs in the lab book are Linux-based, which means they enjoy widespread support and it's a lot easier to find posts about what's wrong if something is wrong. About the only thing I've had trouble with, actually, was figuring out how to make Ubuntu behave properly with DHCPv6 when it's set up post-install (since I didn't have IPv6 set up before installing the first few). Other than that, everything pretty much works as intended. And everything running on the SAN runs wicked fast because the SAN server is backed by ZFS, which means that once the fairly substantial caches have warmed up, most reads come straight out of RAM or an SSD and onto the Fibre Channel.

Windows XP

This actually isn't in the book, but because I've been wanting to run through the labs in Practical Malware Analysis, which expects Windows XP, I installed that. It was... fun. Ish.

I decided I would start with SP3, since none of the book's labs use malware that needs a particularly vulnerable version; if I need to test something that requires SP2, I have an older disc which needs to be imaged, but I was feeling lazy. Installing SP3 wasn't too hard in and of itself, but updating it turned out to be a task.

First, I took a snapshot of the initial install so I could later clone a fresh, unpatched SP3 install from it if I wanted. I took snapshots after each reboot in the install process (there were at least four, possibly five) so I could have different stages available later. It never hurts to be able to clone a vulnerable version.

Then I tried to run Windows Update. No dice. The web page errored out, and I wish I had taken some screenshots at the time to document the process, but suffice it to say that a 10-year-old update process (and that's the new one) doesn't work flawlessly anymore. I ended up having to manually download the updated updater and install it before it would even work.

After that, the updates went fine, if possibly a little tedious. It is to Microsoft's credit that the update process even still works, since they don't have much commercial reason to do so (though I'm sure there are plenty of still-extant support contracts that require it, so there's that). After 4 or 5 reboots, I finally had a system that is as up-to-date as Windows XP can possibly be. I'll detail some of my lab experiences later.

What Now?

Well, I use the system. I'm working on instrumenting my personal server(s) with proper log and metric aggregation so I can actually get a handle on all my logs (I've been shamefully bad about that lately); at the moment, I've settled on Graylog (a good opportunity to learn docker-compose) for logs and am working on firing up InfluxDB/Grafana for metrics such as sensor data, server load and the like. That'll let me pull logs from the VM systems as well, but I need to make sure I keep decent security barriers so I'm not allowing nefarious syslog traffic into my main server. At the moment, everything except a select few services (syslog not among them) gets dropped on ingress at my main router.
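For the metrics side, InfluxDB ingests points in its text "line protocol": a measurement name, optional comma-separated tags, a space, comma-separated fields, and an optional nanosecond timestamp. A minimal formatter for sensor readings might look like the sketch below. It handles only float fields and the basic backslash-escaping of commas, spaces and equals signs, and the measurement, tag and host names are hypothetical.

```python
from __future__ import annotations

from typing import Optional


def _escape(text: str, chars: str) -> str:
    """Backslash-escape each character in `chars`, as line protocol requires."""
    for ch in chars:
        text = text.replace(ch, "\\" + ch)
    return text


def to_line_protocol(measurement: str, tags: dict[str, str],
                     fields: dict[str, float],
                     timestamp_ns: Optional[int] = None) -> str:
    """Format one point; float fields only, tags sorted by key for stable output."""
    if not fields:
        raise ValueError("line protocol needs at least one field")
    parts = [_escape(measurement, ", ")]
    for key, value in sorted(tags.items()):
        parts.append(f"{_escape(key, ', =')}={_escape(value, ', =')}")
    field_str = ",".join(f"{_escape(k, ', =')}={float(v)!r}" for k, v in fields.items())
    line = ",".join(parts) + " " + field_str
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


if __name__ == "__main__":
    print(to_line_protocol("temperature", {"sensor": "cpu 0", "host": "nas"},
                           {"value": 45.5}, 1514764800000000000))
    # temperature,host=nas,sensor=cpu\ 0 value=45.5 1514764800000000000
```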

If anyone has recommendations for log aggregators or SIEMs that are open-source and aren't as cumbersome as Graylog (i.e. no dependencies like MongoDB/Elasticsearch and the effing JVM), I'd be grateful; I really like InfluxDB's model (no dependencies, native code via Go), but I haven't seen anything similar yet for log gathering that's reasonably powerful.

As things move along, I'll be sure to share any interesting experiences or insights I have with the VM system. Hopefully the SAN stays stable for the foreseeable future.

Oh, And The Book.

The book is great! You should buy it! It gives an excellent, in-depth walkthrough for each major VM environment offered (Hyper-V, VirtualBox, VMware [Fusion|Workstation|ESXi]) to get the main VMs set up, then redirects you to common chapters for the more detailed setups of things like the SIEM and IPS. It's written in a pretty friendly manner, and it's full of really useful information, especially if you've never done virtualization before (and even if you have, it's great for learning to scale up).

I'd love to see it cover Xen or KVM, but a) that would be decidedly beginner-unfriendly (Xen is a harsh mistress) and b) probably would require its own book. I don't see lack of Xen as a fault here, and it doesn't detract from the book at all.

Buy it. You know you want to.

Dave
