-

Ryzen overclocking adventure

11/09/2018 at 05:49 • 0 comments

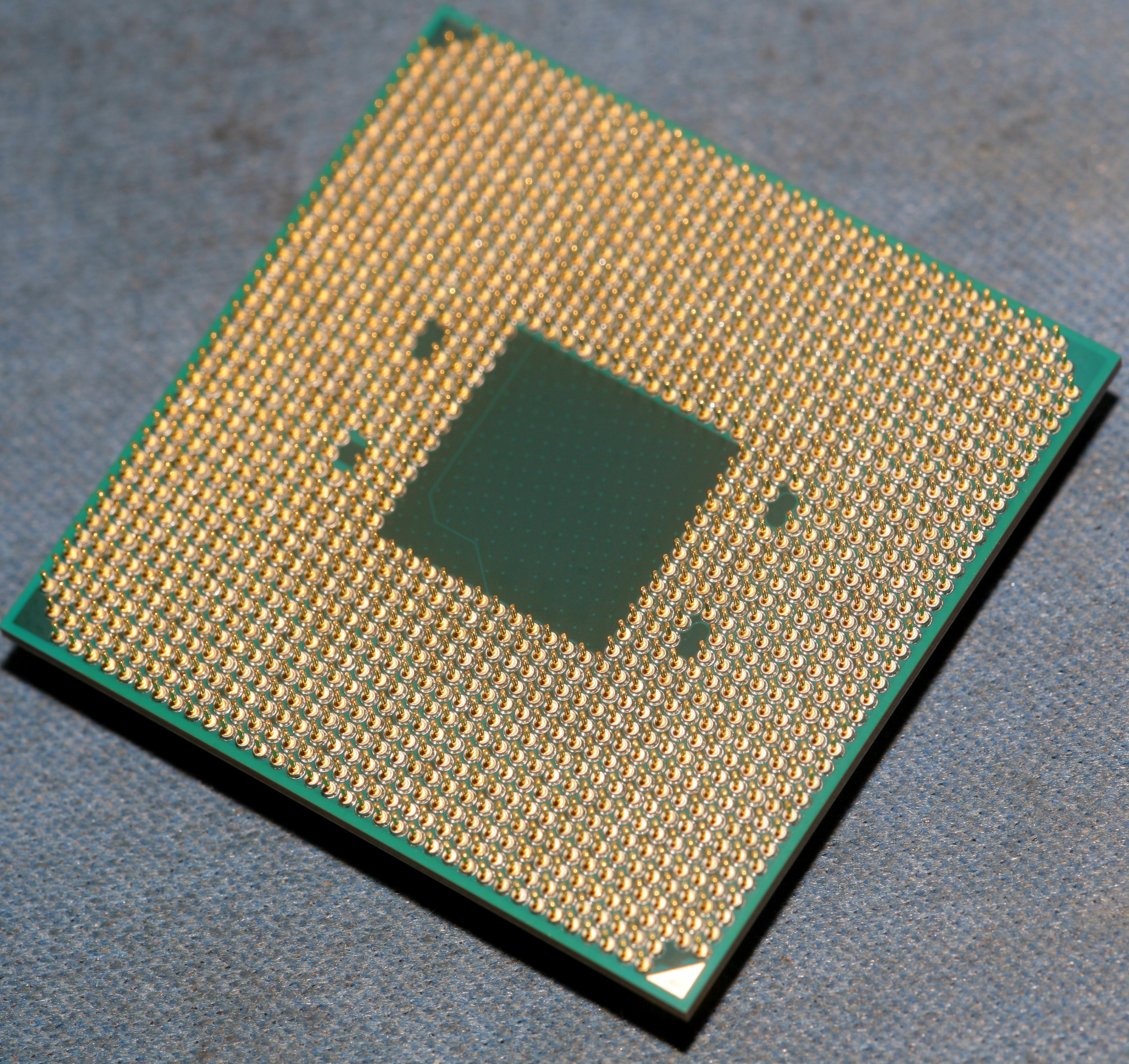

That was the most expensive CPU, motherboard, memory setup the lion kingdom ever got. Getting a speed boost over what was $400 in 2010 took a lot more money than it did back then, but it will hopefully last another 8 years.

The #1 trap for young players: The BIOS uses the mouse to navigate, except when adjusting the CPU core ratio. That greyed out box needs to be adjusted with the +/- keys.

Accessing higher memory frequencies requires stepping up the CPU clockspeed. For a Ryzen 2700X, 2 x G.Skill 3600MHz 16GB sticks really hit 3600MHz only after increasing the CPU clockspeed to 4.2GHz. At 3.7GHz, the RAM would die beyond 3333MHz.

The side firing SATA header is a pain.

The ugly plastic thing covering the ports doesn't come off without 1st removing the heatsink over the MOSFETs. Maybe you can spray paint it.

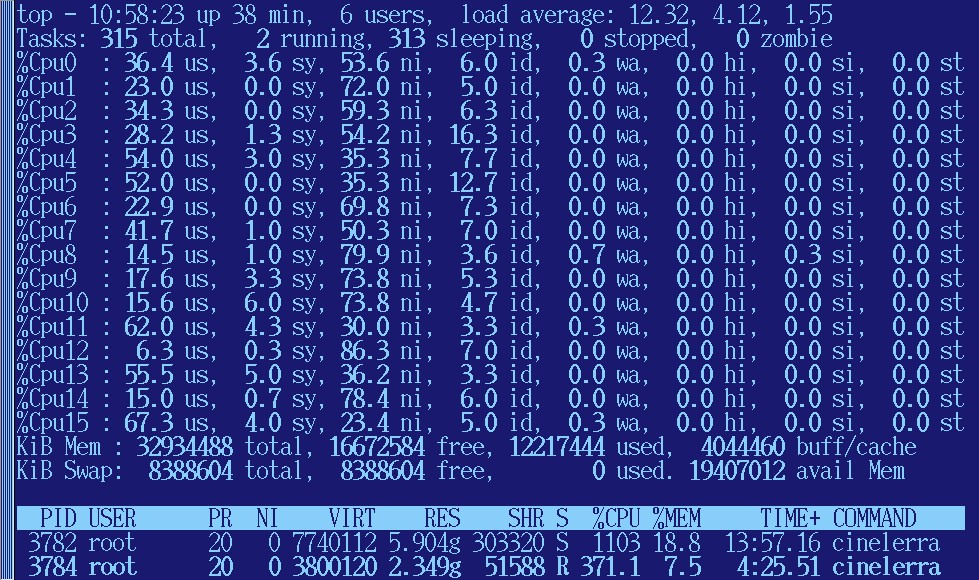

At 4.2GHz, it plays an H.265 8192x4096 138megabit video in a 2048x1024 window at a stuttering 24fps. It definitely can't play 8k video on an 8k monitor but might do 4k on a 4k monitor.


Transcoding 8k video to a faster codec, it maxes out all 16 hyperthreads at 4.2GHz & uses 8 of 32gig of RAM. Not out of memory or taking all day, as it would have been a week ago. Rendering speed of the NASA video was 1/3 realtime.

The faster codec reduced NASA's 139megabits to gootube quality & played back at roughly 70fps. Still constrained to a 2k window.

-

Fixing junctions in Eagle

11/05/2018 at 01:27 • 0 comments

It was such a long fought battle for such an obvious solution, the video had to be made.

-

How to make kids interested in programming

09/25/2018 at 16:28 • 0 comments

Absolutely hated any higher level language than assembly as a kid. Programming was only a means to draw fast graphics, rather than an end or a way to make money as taught nowadays. The outcome of the act was the key.

Assembly language was the only motivational language because it unlocked the maximum performance of the machine & it was determined by raw physics rather than contrived. Languages like Swift are heavily dictated by bureaucracy. Don't think kids are motivated by a desire to navigate bureaucracy the way middle management seeking 20 year olds are, although they are somewhat motivated by money.

8 Bit Guy always hypes the addition of graphics commands to newer BASICs & criticizes the lack of graphics commands on the C64. It's a very modern reasoning. We had BASIC graphics commands by 1985, on the x86, but they didn't motivate programming. The results were slower than assembly language on the 6502 at 1MHz.

The lion kingdom will never get back the feeling of turning on the 1st pixel with the 1st poke, clearing the screen in BASIC, then doing the same for the 1st time in assembly language, marveling at how fast it was.

-

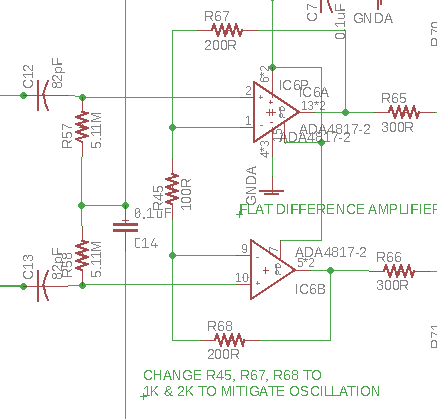

HOW TO FIX OP-AMP OSCILLATION

09/20/2018 at 08:10 • 0 comments

It dogged the lion kingdom forever. First, it was the ADA4817 making an 80MHz sine wave. The ADA4817 appeared to have a capacitive load & responded to higher feedback resistors. Higher R went against internet wisdom, but detuned it by lowering the frequency response, lions suppose.

It happened again with the ADA4807 making a 13MHz sine wave. It appeared to be delays from long traces feeding the op-amp inputs. Truly didn't want to spin another board with shorter traces or go to a slower op-amp. Higher R & lowpass filters didn't work. Then came

https://e2e.ti.com/blogs_/archives/b/thesignal/archive/2012/05/30/taming-the-oscillating-op-amp

Putting C in parallel with the feedback resistor was brilliant. Never would have thought of it. The internet just recommended low pass filters. Whatever magic allows it to work, the idea seems to be making the time constant of the feedback R equal to the time constant of the delay line. It behaved like an op-amp should, all the way up to the required 2.5MHz.
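The time constant matching can be sketched numerically. This is only a minimal sketch, assuming the delay behaves like a single RC pole formed by the input side resistance & the stray capacitance at the inverting input. The component values & the function name are hypothetical, not taken from the board.

```python
def feedback_cap(r_feedback_ohms, r_input_ohms, c_stray_farads):
    """Return the feedback capacitor that makes Rf*Cf equal to Rin*Cstray.

    Assumption: the unwanted delay is modeled as 1 RC pole formed by the
    input side resistance & the stray capacitance at the inverting input.
    """
    tau_delay = r_input_ohms * c_stray_farads   # time constant of the delay
    return tau_delay / r_feedback_ohms          # Cf such that Rf*Cf == tau_delay


# Hypothetical values: 1k input resistor, 2k feedback resistor, 5pF of stray
# capacitance from long traces & the op-amp input.
c_f = feedback_cap(r_feedback_ohms=2000.0, r_input_ohms=1000.0,
                   c_stray_farads=5e-12)
print(f"feedback capacitor: {c_f * 1e12:.2f} pF")
```

The real stray capacitance is rarely known, so the computed value is at best a starting point for trying capacitors on the bench.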

-

Storefronts for subscription services

08/31/2018 at 07:13 • 0 comments

We're probably near the end of keyboard passwords. Alphabetic, alpha + number, alpha + number + punctuation passwords were all fully exploited in short order. The future will be 8 pictures picked from a dictionary of pictures. Everyone gets a unique dictionary.

The task of setting up a subscription based storefront has made lions study security more. 20 years ago, all the credit cards were stored on a computer in the office & charged by dialing into a modem pool, after much stalling & negotiating with customers who were just minutes away from funding their credit cards. This manually executed monthly task funded everyone's paycheck. Other manually run scripts increased the balances in the accounts, shut down unpaid accounts, sent out warning emails.

Nowadays, secure credit card storage is a monumental task. All known cyphers can be cracked in milliseconds. Small companies need to outsource the credit card storage & even the subscription management. It's too easy for someone to get in & change their balance.

-

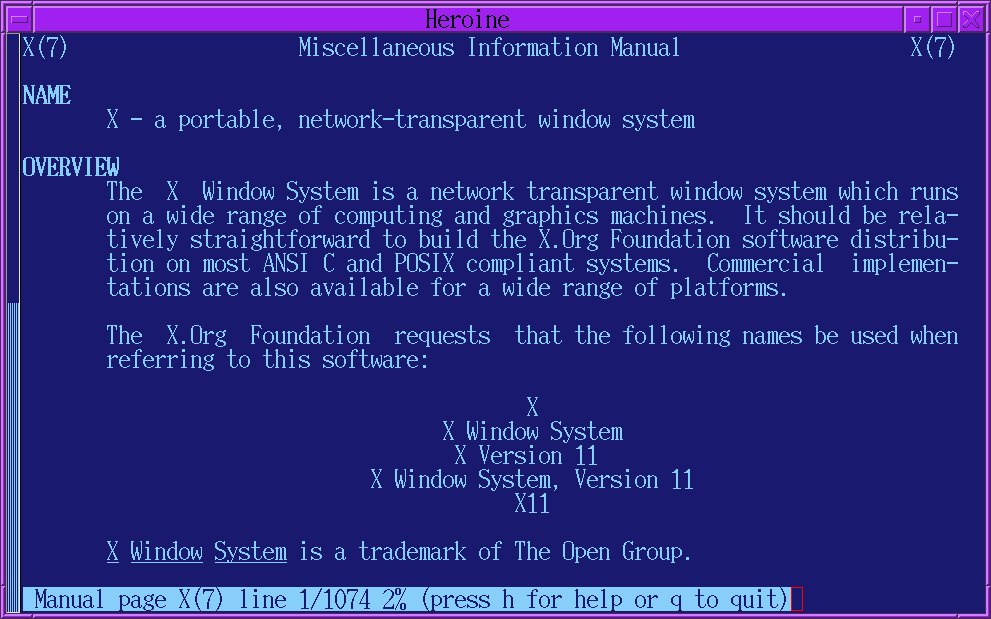

Why did the world pick VNC over X11?

08/10/2018 at 20:34 • 0 comments

VNC is so bad, it's god's joke against computer users. Apple's implementation of the VNC server is a joke upon a joke. Every MacOS release rounds the same circle of bugs, 1 release having broken security, the next release locking up during fullscreen blits, the next release having broken security again, none of them trapping all of the frame buffer changes. The mane problem with VNC, besides Apple's incompetent hiring practices, is it tries to passively detect frame buffer changes rather than being in the drawing pipeline.

It can compare every pixel of the frame buffer in certain time intervals or try to sniff drawing commands by checking CPU usage. It seems to rely on sniffing drawing commands, most of the time. Lions don't know because MacOS is closed source. It never works, no matter how fast the network is. It misses most frame buffer changes, severely delays the ones it catches, or just crashes.
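The pixel comparison approach can be sketched as follows. This is a minimal sketch of passive change detection in general, not Apple's or any real VNC server's code. The tile size, the frame representation (lists of rows of pixel values) & the function name are assumptions for illustration.

```python
def dirty_tiles(prev_frame, cur_frame, tile=16):
    """Return (x, y) origins of tiles whose pixels differ between 2 frames.

    Frames are lists of rows, each row a list of pixel values. A passive
    server would run this on a timer & send only the tiles returned.
    """
    height = len(cur_frame)
    width = len(cur_frame[0]) if height else 0
    changed = []
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            for y in range(y0, min(y0 + tile, height)):
                # Compare 1 row slice of the tile at a time.
                if prev_frame[y][x0:x0 + tile] != cur_frame[y][x0:x0 + tile]:
                    changed.append((x0, y0))
                    break
    return changed


# Hypothetical 32x32 frame with 1 pixel changed in the lower right tile.
before = [[0] * 32 for _ in range(32)]
after = [row[:] for row in before]
after[20][25] = 1
print(dirty_tiles(before, after))
```

Anything drawn & erased between 2 polls never shows up in the comparison, which is 1 way such a scheme can miss changes.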

Little of the internet knows that before VNC, there was already a fully functional, superb protocol known as X11. That forced every drawing command through a single network socket. Running programs over a high speed network was like running them locally. It never missed a drawing command & responded instantly. By 2001, X11 had been extended so the same programs without any changes could run on a local display, without the network layer.

The world picked VNC because of its extremely short memory & the desire to reinvent the wheel to make money. VNC is the corporate desire to replace i = 0; i < 5; i++ on Monday with i in 0 ..< 5 on Tuesday, then i in range(0, 5, 1) on Wednesday & use each one as an excuse to split your stock. It's a real problem of human nature. Humans exist because of luck more than intelligence.

-

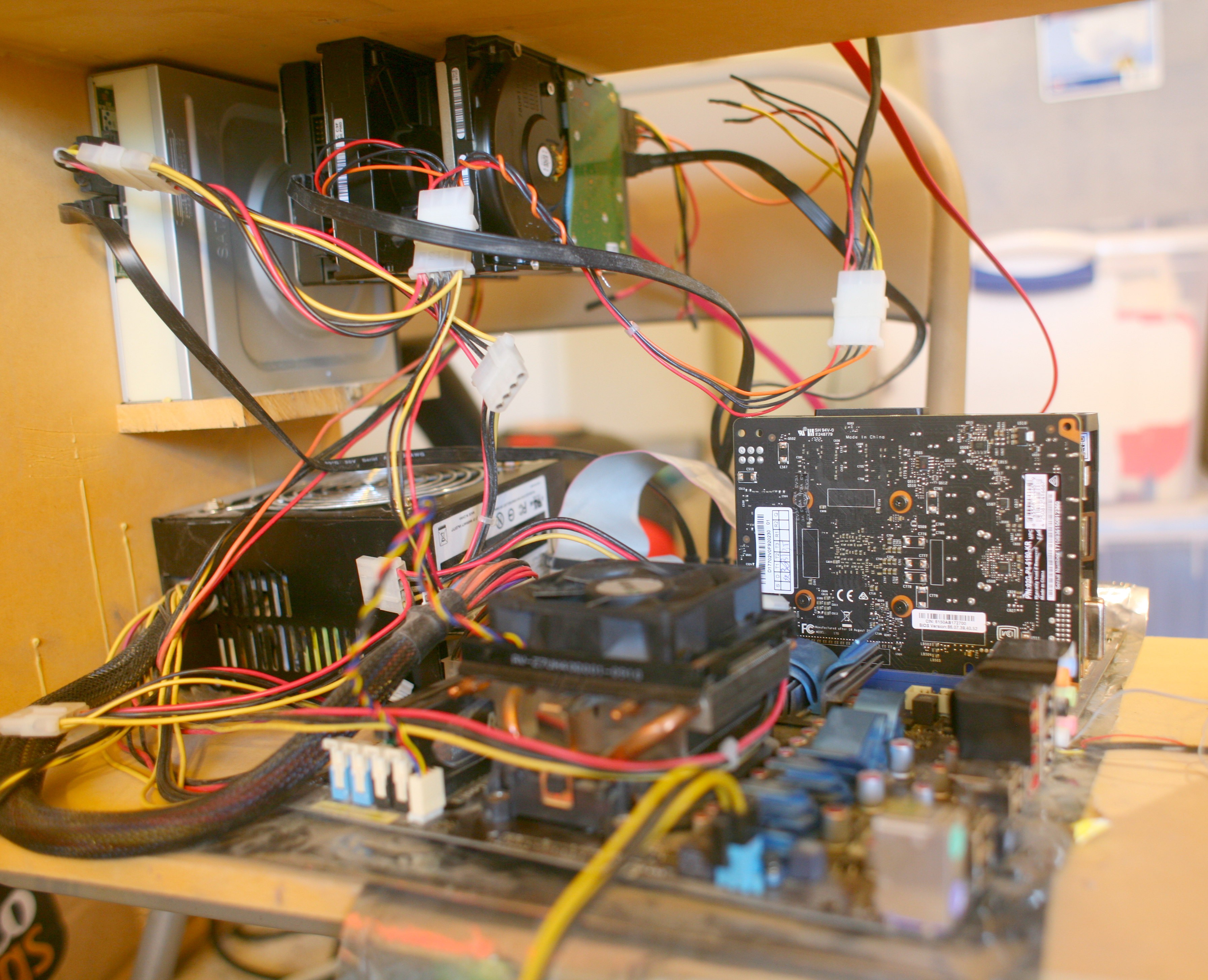

Using a "backup drive" as /dev/root

06/24/2018 at 23:20 • 0 comments

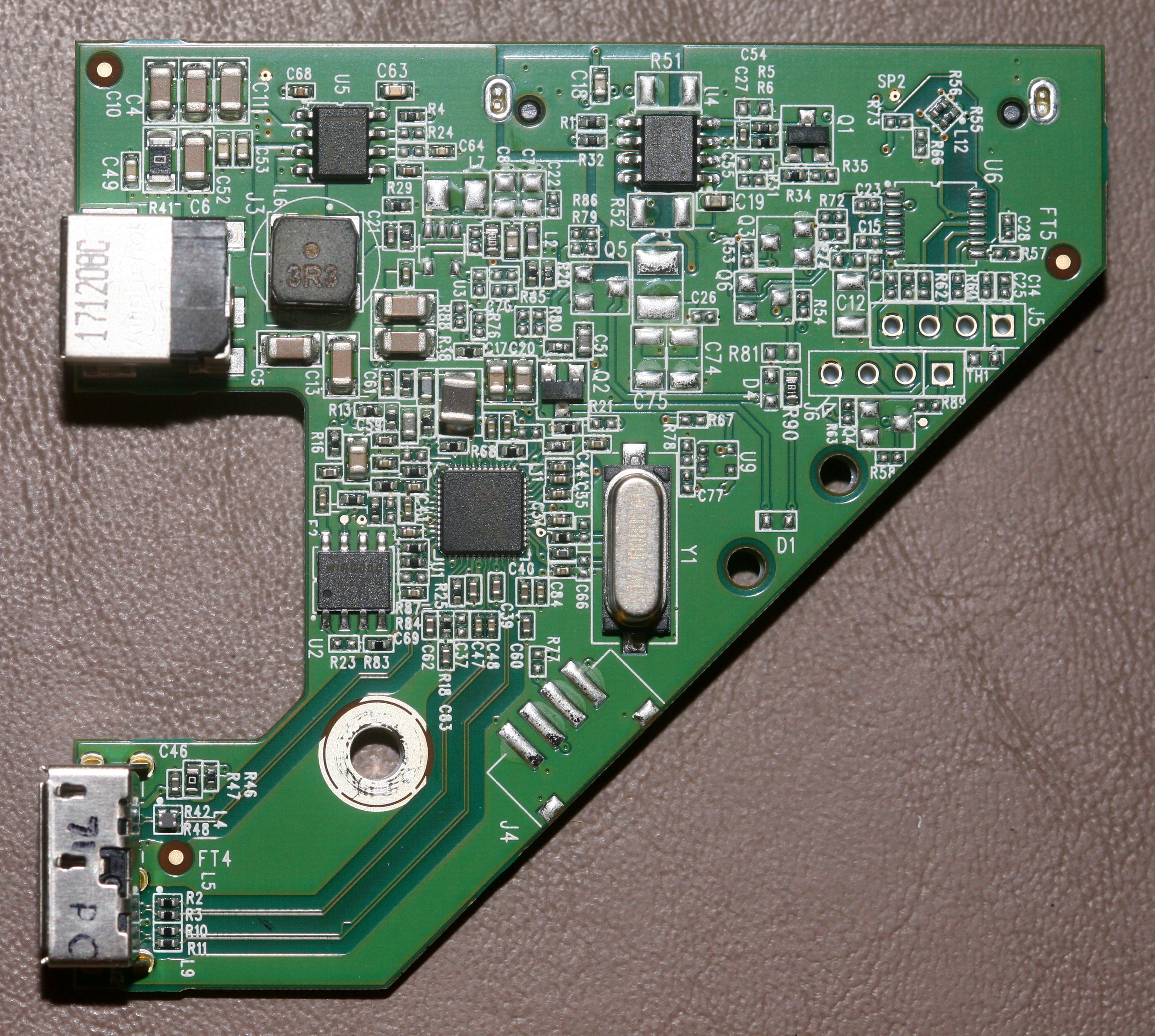

The answer is yes, the obscenely cheap, giant drives marketed only for backup use can still be used as internal drives. Once you don't mind losing the warranty, they can be torn down & an ordinary SATA drive extracted. The lion kingdom risked $170 + $17 tax in an act of desperation because for the 1st time in 30 years of owning desktop hard drives, the hot weather seems to have damaged one. It was a good time to start moving the entire optical storage collection of 20 years to a hard drive. The lion kingdom had 2 other hard drive failures in Hitachi micro drives, but not root filesystems. Japanese hard drives have proven the least reliable.


After much destruction, the goods slid out from the bottom to the top, in their own plastic assembly. The assembly was locked inside by tabs & had rubber shock absorbers.


The teardown also liberated a USB to SATA adapter & 12V 1.5A power brick. The USB adapter does the magic trick of spinning it down when inactive, without disconnecting it.


All USB drives are much cheaper than what are being sold as internal drives. Suspect the support & warranty costs are much higher when people install the bare drives.

The lion kingdom got into the habit of tacking on 2nd paw, small hard drives as needed. This creates a lot of heat & uses a lot of power. The 8TB should eventually replace all of them.

-

The 6th language

05/09/2018 at 04:52 • 0 comments

The lion kingdom's 1st language was C64 BASIC, then 6502 assembly, then Logo, then Applesoft BASIC, then GW-BASIC, & many years later, C, the 6th language. It was extraordinary to finally be able to express the machine's native instructions in a higher level language. The compilers were scarce & expensive, so lions would write source code at home in a text editor & compile it at the day job, the next day. The lion kingdom's 1st trouble spot was "invalid lvalue" for using = instead of == in an if statement. It took forever to figure that one out.

Most of SOMA has never heard of C & probably only vaguely has heard of assembly language.

-

Lions vs Ben Heck

05/01/2018 at 20:45 • 0 comments

The fight to make a CAD model leaves the lion kingdom feeling a bit under accomplished, compared to Ben Heck. Although his show only covers baby projects, he obviously is very skilled in CAD tools, electronic design tools, FPGA programming tools, embedded programming tools, & machine tools.

1 week, he'll have a very high proficiency in programming an FPGA. The next week he'll design something in Fusion 360. The next week, he'll design a circuit board. There's no way he could fake a high proficiency in each tool in the time between episodes. Just learning & getting good results from a circuit board editor takes lions a lot longer.

He could just as easily push a large aerospace project as make useless raspberry pi enclosures. The key is learning many tools rather than developing everything from scratch & always trying the latest method instead of doing what's tried & true for decades. Retro computing works best when applied to the device under test, not the tools for making the device.


The mane disappointment is how he ballooned over the last 8 years. Anyways, back to using a 30 year old X11 interface to make mobile apps.

-

Cheating your way into space

04/04/2018 at 18:58 • 0 comments

With the US still over 1 year away from being able to launch humans into space again, what a story it would be if someone stowed away in a cargo mission. It would be like a Doolittle raid, but a raid against bureaucracy & management. It would be riskier than Alan Shepard's 1st flight, because the cargo modules have no launch abort capability. Beyond that, it would be the same as that 1st flight, despite all the concern about minutiae in the flight termination system, life support system, & micro meteoroids. Indeed, much of the last 8 years of pain has been micro meteoroid shielding standards.

The stowaway would be a man, of course. He would have a food supply & a bathroom hidden somewhere. Once on the space station, they would have the issue of feeding him, not having enough room in the Soyuzes to evacuate the station, the life support system encountering loads not seen in 8 years.

The real drama would be the consequences of stowing away. Would he be shot for treason? Would NASA give up on human spaceflight entirely because they couldn't enforce enough meaningless regulations? Would private space programs tolerate more risk?

-

retro computing & lions

04/02/2018 at 00:21 • 0 comments

Ready Player One might be the peak of retro computing fever or at least the peak of attention to it. FVWM was written in 1993. XTerm was written in 1984. NEdit was written in 1992. X11 began in 1984. So lions have manely used the same tools since 1995 & those tools date back to the Commodore 64.

Lions were into retro computing long before retro computing was a fad, but manely in a UNIX sense rather than an 8 bit PC sense. There was always a fascination with the simplicity of these tools compared to the massive marketing gimmicks that modern interfaces have become. X11 is still the only thing which actually works over a network.

After the decline of early 8 bit PCs, early UNIX workstations were the only computers that interested lions. They were more expensive & capable than what any private individual could afford, yet since the introduction of Linux, could be recreated on any PC hardware.

The retro computing fad has sorely overlooked early UNIX workstations from the same time period. Millennials know what Koala paint was, but not what XTerm was. It's based on what consumers could afford. Lions have been lucky to be able to base most of their income on using retro tools, though it may not be the most productive way to go.

-

Stopping an op-amp from oscillating

03/30/2018 at 22:25 • 0 comments

How quickly 1 project to make an op-amp oscillate turned to another project to stop an op-amp from oscillating. The internet says op-amp oscillations are caused by very high frequency op-amps with slight delays between the output & feedback. Capacitance on the output & feedback terminals & higher feedback resistors cause delays just like an RC circuit. They recommend lower feedback resistors & a resistive load for the output.

100 & 200 were the lowest reasonable feedback resistors, but this caused the ADA4817 to oscillate at 80MHz. Increasing to 1k & 2k actually seemed to eliminate the oscillation. The output of each op-amp is very noisy, but when combined in a difference amplifier, it's relatively clean. The only explanation is if physics experiments never work, pick what doesn't work to make them work. Perhaps having the output impedance too low slowed down its response rate & caused it to oscillate.

-

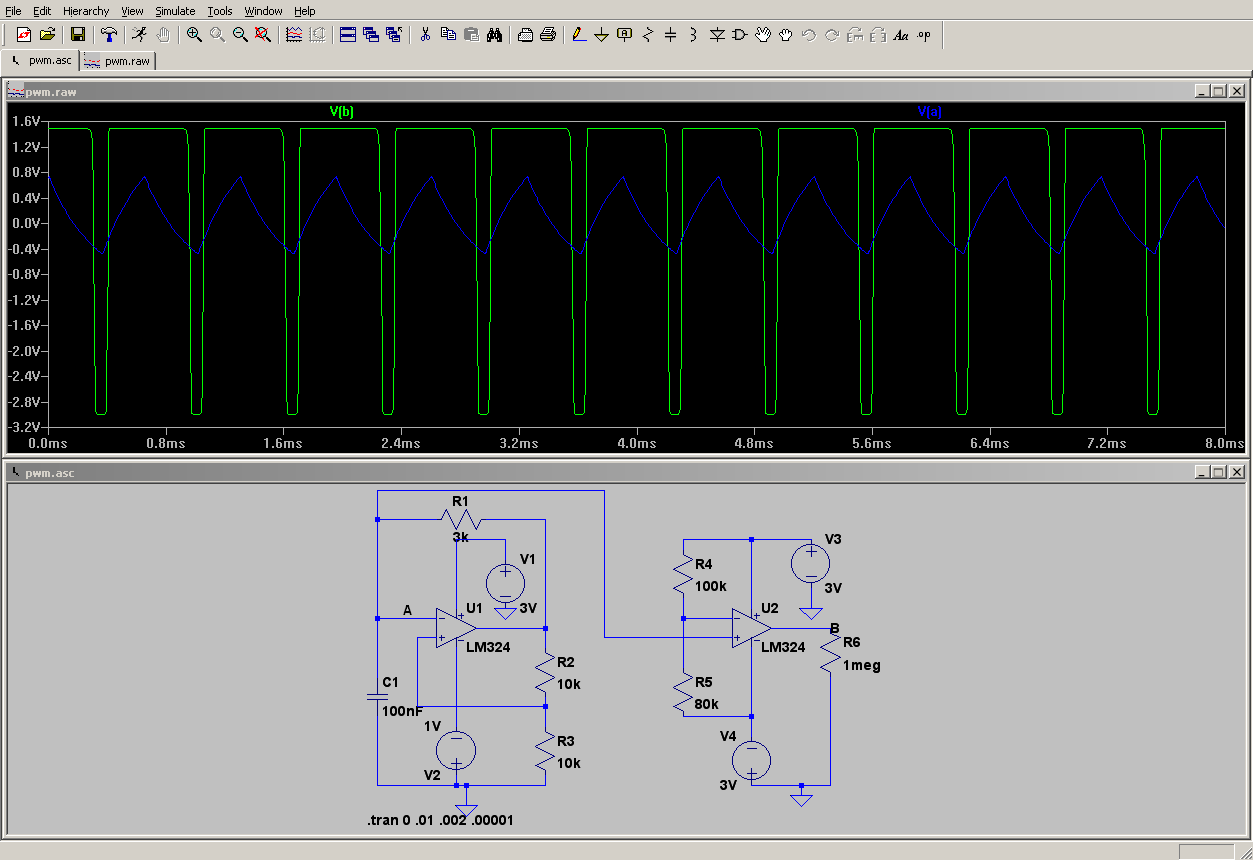

PWM from an op-amp

03/25/2018 at 08:56 • 0 comments

Worthless in any practical sense, but nifty & maybe useful if you have absolutely no microcontroller but do have a bag of LM324's. 1 op amp generates a triangle wave. The other op amp is a comparator that chops the triangle wave into PWM. R1 & C1 change the frequency. The R4 & R5 ratio changes the duty cycle.

Simulating it in LTSpice required a dual power supply, though a real circuit probably works on a single supply. The simulator probably needs A starting on a power rail.
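The triangle & comparator idea can be checked numerically without a circuit simulator. This is only a minimal sketch of the principle, not a model of the LM324 circuit. The normalized threshold stands in for whatever voltage the R4 & R5 divider sets, & the period, sample count & function names are assumptions.

```python
def triangle(t, period):
    """Triangle wave between 0 & 1 with the given period."""
    phase = (t % period) / period
    return 2 * phase if phase < 0.5 else 2 * (1 - phase)


def pwm_duty(threshold, period=1e-3, samples=10000):
    """Fraction of 1 period where the comparator output is high.

    The comparator goes high whenever the triangle is above the threshold.
    """
    high = 0
    for i in range(samples):
        t = i * period / samples
        if triangle(t, period) > threshold:
            high += 1
    return high / samples


# Hypothetical thresholds as a fraction of the triangle amplitude.
for threshold in (0.25, 0.5, 0.75):
    print(threshold, round(pwm_duty(threshold), 3))
```

Raising the threshold shrinks the part of each period where the triangle is above it, so the duty cycle falls as the threshold rises.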

The LM324 is actually fast enough to hit 1kHz, so it can drive LED lighting or a servo. Higher speeds require a higher slew rate op-amp. A microcontroller uses far fewer components & is a lot easier to tune.

-

Bringing up an STM32H7 with openocd & Linux

03/20/2018 at 19:35 • 2 comments

It's crazy, but can be done by those who like to suffer. The easiest way is an officially supported Windows IDE. The hardest way is OpenOCD on Linux. Both methods require an STM32 demo board. All the STM32 demo boards have an STLink programmer with an SWD header for external boards. There's no reason to use the full JTAG interface. All you need are the SWDIO & SWDCLK pins.

Dev boards for the highest end STM32's now cost little more than the STM32 chip itself, so it's not worth building your own JTAG programmer with a custom driver like the lion kingdom did in 2012, back when there was a severe price difference. The SWD header on the Nucleo-F767 & probably most other boards is

pin 1: VDD, SWDCLK, GND, SWDIO, NRST, SWO

On the Nucleo, it's connected to the dev board via jumpers on the back. You have to remove the jumpers. Connect SWDIO, SWDCLK, & GND to your board. The command for running openocd for the STM32H7 is

openocd -f interface/stlink.cfg -c "adapter_khz 1000; transport select hla_swd" -f target/stm32h7x.cfg

In another console:

telnet localhost 4444

Normally, the journey begins with a reset halt command. This currently doesn't work & neither does soft_reset_halt. You have to invoke the reset & halt in separate commands. To write the flash:

halt;flash write_image erase program.bin 0x8000000;reset

This normally has to be run once with an error. Then the chip has to be power cycled & the line run again. At this point, it's left running a program in SRAM for writing the flash. You have to power cycle it again to boot into the flash. The BOOT pin has to be grounded. The line does something which causes the 1st power cycle to not boot into flash but write successfully & the second power cycle to boot into the flash but not write successfully.

Since reset halt doesn't work, breakpoints & stepping are useless. You have to put in infinite loops to iron out those 1st bugs. After power cycling it, issue a halt command, which gives the wrong program counter. Step it a few times through the loop to get the real program counter. The lion kingdom used just the program counter & the symbol table from nm|sort, but a sane human might use gdb.

The 1st task of your program is configuring the clock. As an extra bonus, the way the STM32H7 calculates its clock speed from a crystal is

(crystal freq) / PLL_M * PLL_N / PLL_P
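The formula is easy to sanity check before touching the registers. This is a minimal sketch that just applies the divide, multiply, divide order above. The 8MHz crystal & the divider values are hypothetical examples, not values from the lion kingdom's board, & they still have to be checked against the limits in the datasheet.

```python
def pll_output_hz(crystal_hz, pll_m, pll_n, pll_p):
    """Apply (crystal freq) / PLL_M * PLL_N / PLL_P in that order."""
    ref_hz = crystal_hz / pll_m      # divided reference going into the PLL
    vco_hz = ref_hz * pll_n          # multiplied up by the PLL
    return vco_hz / pll_p            # divided down to the system clock


# Hypothetical example: 8MHz crystal, PLL_M = 4, PLL_N = 400, PLL_P = 2.
sysclk_hz = pll_output_hz(8_000_000, 4, 400, 2)
print(f"{sysclk_hz / 1e6:.0f} MHz")
```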

Once at 400MHz, the current consumption rises 30mA. This is the easiest way to see if your program is running, if you have a bench power supply which the lion kingdom didn't originally have.

The STM32H7 also has a ROM bootloader which allows it to be programmed with only a UART & no SWD pins at all. It seemed pretty hard to invoke & only applicable to 1 chip. You need a way to debug it, anyway.

-

Maximizing BLE speed on the NRF52

03/10/2018 at 06:47 • 0 comments

Might be the last lion in the world to realize this or it might have been a change from SDK 8 to SDK 14, but the NRF52 softdevice has 4 buffers for transmitting to the phone. You have to fill all 4 buffers by calling sd_ble_gatts_hvx with separate ble_gatts_hvx_params_t structs to hit the maximum speed & only then start waiting for BLE_GATTS_EVT_HVN_TX_COMPLETE events to keep the buffers warm. min_conn_interval & max_conn_interval also have to be 0.

With the NRF_SDH_BLE_GATT_MAX_MTU_SIZE at 247, the notification size was 244 & it fired 154 times per second, giving 300kbits/sec. Not sure what the speed from IOS to the NRF is, but there was no evidence corebluetooth was firing nearly as fast. CBPeripheral.writeValue could be called 3 times between waits for didWriteValueFor:characteristic, but it wasn't nearly as fast.
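The numbers above can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures quoted here, plus the 3 byte ATT notification header (1 byte opcode, 2 byte handle) which accounts for 247 becoming 244. The constant & function names are made up for illustration.

```python
ATT_NOTIFY_HEADER_BYTES = 3  # 1 byte opcode + 2 byte attribute handle


def notify_payload_bytes(mtu_bytes):
    """Largest notification payload that fits in 1 ATT MTU."""
    return mtu_bytes - ATT_NOTIFY_HEADER_BYTES


def throughput_bits_per_sec(payload_bytes, notifications_per_sec):
    """Payload bits delivered per second, ignoring all protocol overhead."""
    return payload_bytes * 8 * notifications_per_sec


payload = notify_payload_bytes(247)
bits = throughput_bits_per_sec(payload, 154)
print(payload, bits)
```

244 bytes at 154 notifications per second works out to 300608 bits/sec, which matches the 300kbits/sec measured.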

CBPeripheral.writeValue is only critical for firmware updates, which are rare & don't have to be fast. The critical point is going from the device to the phone.

-

Nordick notes

03/09/2018 at 07:12 • 0 comments

For the unseen masses who are able to get an NRF52 SDK14 peripheral to connect to Android but not IOS, the solution was to copy the calls to nrf_ble_gatt_init, nrf_ble_gatt_att_mtu_periph_set, nrf_ble_gatt_data_length_set, sd_ble_opt_set from

examples/ble_central_and_peripheral/experimental/ble_app_att_mtu_throughput/main.c

Initializing these bits became required, somewhere in the SDK versions between 8 & 14. Someone porting from SDK version 8 to version 14 would have a long search, indeed. Norwegian abstraction layer writers haven't discovered abstraction.

There's no reason to set the NRF_SDH_BLE_GATT_MAX_MTU_SIZE any smaller than 247. The packet size can be changed dynamically by setting attr_md.vlen = 1; in the ble_gatts_attr_t struct. They also set the max_len in the ble_gatts_attr_t struct to NRF_SDH_BLE_GATT_MAX_MTU_SIZE.

-

The best product Apple ever made

03/04/2018 at 06:33 • 0 comments

As ineffective as Tim Cook is, lions would consider the 12" ipad the best product Apple ever made, from a hardware standpoint. The software is shit of course. What works in our favor is when it's basically an 8.5x11 sheet of paper with video or a game on it. It's the absolute best portable gaming platform ever made, with the best portable sound & the most immersive portable video ever achieved. It's the science fiction world of our childhood made real.

It's disappointing that tablets are now experiencing the same declining sales that PC's were once famous for & the interface has never made any meaningful advances since Steve Jobless's death. The problem of compressing a full sized keyboard & desktop into the 8.5x11 form factor was never overcome. Lions certainly tried thinking of ways around it, but it couldn't go beyond video & games.

-

When the launches didn't end after 2 minutes

02/10/2018 at 09:28 • 0 comments

For all our lives, we turned off the video after the 1st 2 minutes of liftoff. The boosters separated & then it was back to late night talkshows, football, or taking a piss, for all but the hardest spacefans.

Nowadays, we all keep watching until the landing. It's still a surreal sight to see everyone around the world, no matter how fascinated by space, still glued to the screen or the sky minutes after the liftoff, waiting for the landing burns to start & the triple sonic booms. For every historic leap in spaceflight, we all hear the same voices of John Insprucker & friends taking us there.


Lions didn't expect it to lift off or keep going. Neither did mission control as they make calls, struggling to keep up with the unexpected events, expecting it to blow up at any moment. Clearing the tower, max Q, booster separation are all preceded by expectations of failure yet proceed exactly as they were envisioned or always did on previous launches.

Then 2 different announcers take turns announcing the side core & center core landing events. The familiar computer graphic of rocket trajectories comes on, but now so does David Bowie & not 1 but 3 boosters returning to Earth. Then, the car is revealed, exactly as planned but never expected. Mission control erupts into cheering. As the dust settles & the grid fins deploy, the lion announcer finally regains composure & says "wow. Did you guys see that.".

Musk himself said it was the most exciting thing he ever saw.

-

How to get -5V from a 3.3V square wave

02/02/2018 at 23:48 • 0 comments

A Cockcroft-Walton generator configured for going down instead of up. Unlike a charge pump, it only requires a single input.

-

2018 Lunar eclipse

01/31/2018 at 20:21 • 0 comments

The light from millions of sunsets & sunrises in countries all around the world. If only there was a way to point a camera back at Earth from the moon. Flip flopped on making another timelapse or even waking up to get a still photo.

-

A mobile app revolution for EDA software?

01/31/2018 at 00:34 • 0 comments

Amazed by how much electronics design still is done on paper because the software tools don't exist. The datasheets are incomplete & the PCB editors don't have something as simple as a ruler. Units are still in inches & you have to draw a lot of sketches to figure out where a pad should go or what a missing dimension is from the datasheet.

It's an industry which was passed up by the software revolution of the last 25 years. The average game does billions more calculations than the average EDA program does. It could experience a monumental change from just bringing the software up to where photoshop was 20 years ago. There was a huge boom in EDA investments in 1997, but it was like the self driving car investments of 2015. A lot of money went chasing it, but there were no results.

-

9 years of etching

01/06/2018 at 23:30 • 0 comments

The CuCl left from 9 years of board etching. Still highly reactive. A bottle of FeCl bought locally is now non existent, outside China.


-

Meltdown/spectre notes

01/05/2018 at 22:23 • 0 comments

So basically, AWS automatically protected different customers from breaching other customers' instances, but protecting different programs on a single instance from breaching other programs on the same instance requires a yum update kernel. If you're not running customer programs & everything you do run is known to not have an exploit, it's not necessary in the short term, but you could accidentally run a program in the future which does exploit it.

All our instances were automatically drop kicked 2 days ago for the press release, but the kernels were not automatically updated. It must have been a last minute panic in Seattle. Suspect additional kernel updates will come along, as they figure out how to defeat SPECTRE & find better solutions. Not sure why AWS doesn't automatically update their AMI images, since they don't keep any persistent data on an instance.

It's an interesting bug, taking advantage of the fact that multiple instructions can be executed on privileged memory before causing a segmentation fault & resetting the results of the instructions. 1 instruction reads the privileged memory. Another instruction uses the value of the privileged memory to compute another address to read, which is cached. Then, the CPU throws the segmentation fault & resets the reads, but does not clear the cache. By measuring the latency of accessing the addresses which are cached based on the values of the privileged memory, you can get the value of the privileged memory.
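The steps in that paragraph can be walked through with a toy model. This is not an exploit & does no real timing. It is a minimal sketch where a Python set stands in for the CPU cache & made up latency numbers stand in for measured access times. The names, the 4096 byte spacing & the secret value are all assumptions for illustration.

```python
PAGE = 4096  # spacing between probe slots so each value gets its own slot


def speculative_read(secret_byte, cache):
    """Model of the transient instructions: the probe address derived from
    the secret gets cached, then the fault discards the register results
    but leaves the cache alone."""
    cache.add(secret_byte * PAGE)


def access_latency(address, cache):
    """Pretend latency in arbitrary units: cached reads are fast."""
    return 1 if address in cache else 100


def recover_byte(cache):
    """Time every possible probe slot & return the value whose slot is fast."""
    latencies = [access_latency(value * PAGE, cache) for value in range(256)]
    return latencies.index(min(latencies))


cache = set()                  # starts flushed
speculative_read(0x5A, cache)  # 0x5A is a made up secret
print(hex(recover_byte(cache)))
```

The attacker never sees the secret directly, only which of the 256 probe slots became fast.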

Every CPU in stock today is basically worthless. It's a good time to upgrade, if you just care about rendering videos but aren't doing any banking on it.

-

Growing up in silicon valley

01/03/2018 at 21:46 • 0 comments

A scratch pad of random notes to be updated.

Silicon Valley is everywhere during the booms & nowhere during the busts.

The very 1st memories of electronics projects were audio projects, manely a well loved crystal radio done on springy kit which was then left 90% unused. Spent many nights listening to KNBR 68 on that kit, although we lived next to the KGO 810 transmitter. 68 had the music. It was the 1st inspiration to make a hifi receiver from scratch. How much more could be involved besides a crystal radio?

The other early project was an LED blinker. It came together by randomly patching one of those kits. It was amazing to see an inanimate bunch of wires seeming to make decisions on its own. It only worked briefly & I could never get it to work again. In old age, it appears it might have been a multivibrator.

Many areas have come & gone, but audio is still a therapeutic & rewarding area. It's an attempt to trick one of the most sensitive inputs of the human body into thinking a human or an instrument exists which doesn't. No matter how good electronics get, it's still real hard to trick the human body into thinking something is really there, but that is the ultimate goal of many areas.

As a kid, it's very important to have a mentor, be exposed but not forced into electronics, & not pursue something you hate because it pays more. The internet is not a convincing alternative to a human mentor, because we had magazines in the old days & they weren't very effective. Media is driven by the economy & the economy is pretty bad at predicting what people need, in the short term. A mentor can tell what you need, rather than what the price of Enron or Juicero stock says the world needs.

Science shows on KQED were another source of inspiration, but they must have done a lousy job, because perpetual motion machines & anti gravity machines were a fascination for a long time. Quad copters, LED displays were another fascination, but after the early electronics kits, they remaned conceptual rather than built. It wouldn't be possible to economically build a quad copter or an LED display for many more decades. Thus began a lifelong desire for stuff that the economy couldn't provide until after many fits & starts by people with more money than brains, only to finally produce a commercially viable product long after I had moved on.

The 1st computer was a Commodore 64 which arrived at age 9. The electronics hobby manely disappeared for another 10 years. The 1st programs were character graphics animations using just print & it was quite an obsession. Programming graphics & sound in 6502 assembly was an utter fascination for a few years, but didn't produce anything practical. We had a Logo interpreter, but its terrible slowness & limited capabilities killed it. Programming eventually lost its luster after age 13.

Living in silicon valley didn't encourage engineering as a career. The industry had severe downturns, was entirely men, & required extremely high grades to get into. My grades & math skills were not good enough to attempt any kind of engineering degree. Eventually, that boom/bust cycle expanded to the entire economy. It ended up easier to get jobs in engineering than my degree. While an ivy league engineering degree did prove essential for getting into large companies, it was still possible to get into mom & pop startups.

-

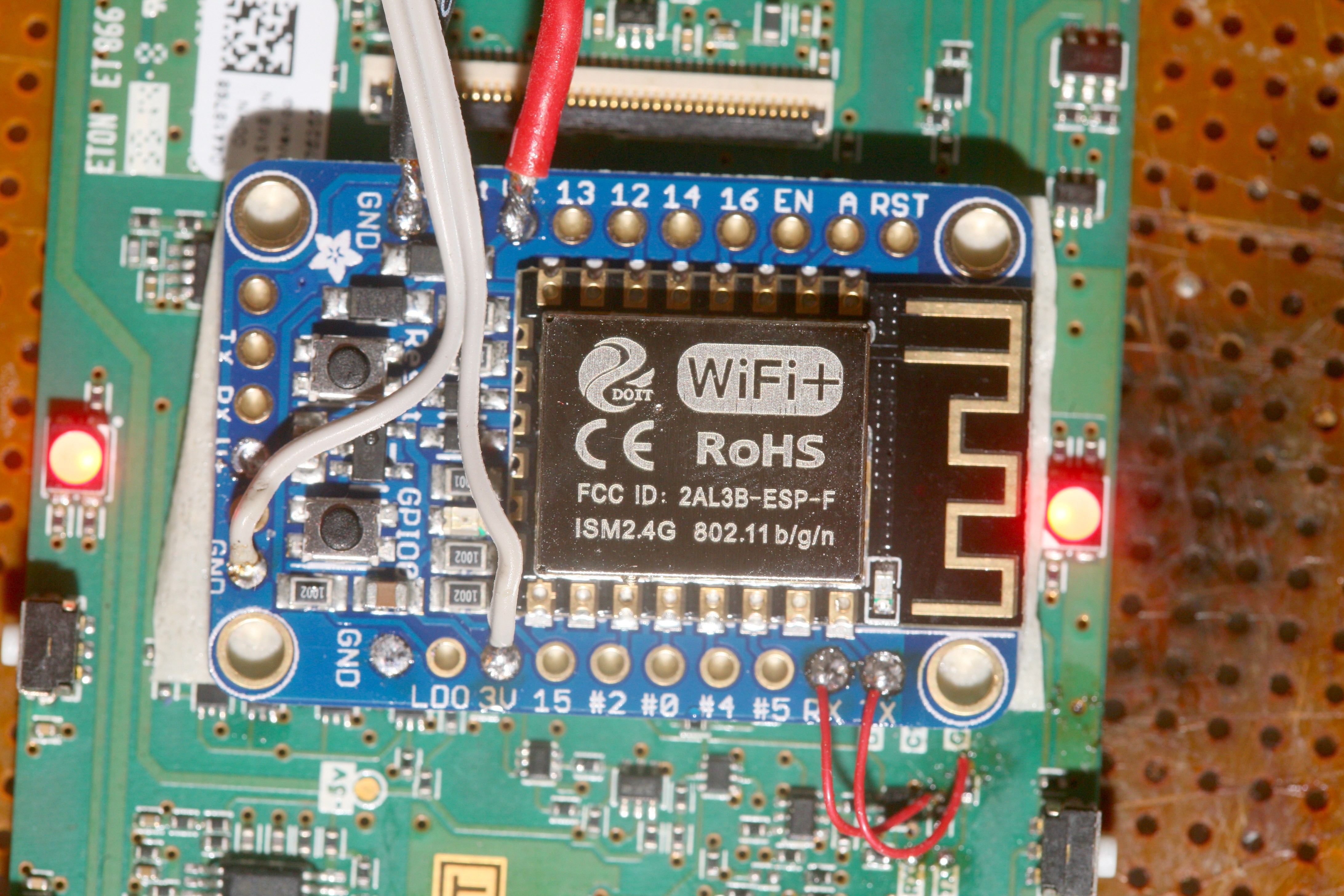

ESP8266 notes

12/14/2017 at 01:02 • 0 comments

Lions are now experts in using the ESP8266 as a modem with access point. To get the highest throughput, you have to program it with the Arduino IDE, copy bits of the library source code from github to enable UART interrupts, allocate a 16384 byte buffer, set the clockspeed to 160MHz.

This application only sends 148608 bits/sec, but occasionally overflows. Although rumors abound of it sending 7 megabits in bursts, it's very slow at continuously sending data from the UART to wifi. A raspberry pi with USB dongle can go much faster, but costs more & takes more space.
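A quick bit of arithmetic shows how much slack the buffer gives. This is a minimal sketch, assuming the 148608 bits/sec counts payload bits & that wifi sends nothing at all during a stall. The function name is made up.

```python
def stall_seconds(buffer_bytes, uart_bits_per_sec):
    """Seconds of incoming UART data the buffer can hold with nothing
    draining it."""
    return buffer_bytes * 8 / uart_bits_per_sec


headroom = stall_seconds(16384, 148608)
print(f"{headroom:.2f} s")
```

Under those assumptions, the 16384 byte buffer covers a bit under 1 second of wifi stalling before it overflows.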
