Hardware

The hardware requirements are a sufficiently powerful computer with a camera, a speaker and some input device to control it. An Android phone seems like the obvious choice, since it has all of these, is portable and is relatively inexpensive.

Software

The core image processing will be implemented using OpenCV. The Android platform will provide text-to-speech and the user interface components, which saves a lot of time on the UI and lets me focus on the image processing algorithms.

User interface

The UI should be as simple as possible, as the main focus is on using the appliance. The first screen will ask the user to choose a profile customized for the particular display to be read. After that, the app should be mostly hands-free: it speaks any information shown on the display, and speaks again whenever the display updates. Tapping the middle of the screen should read out the complete contents of the display. There should also be a control to turn off automatic speaking.
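A minimal sketch of the speaking behaviour described above, assuming the image processing delivers each new interpretation of the display as a string. `SpeechController` and its methods are hypothetical names for illustration only; `speak` stands in for the platform text-to-speech call (Android's `TextToSpeech` on the phone), so the logic can be exercised without a device.

```python
from typing import Callable, List, Optional


class SpeechController:
    """Hypothetical controller for the hands-free speaking behaviour."""

    def __init__(self, speak: Callable[[str], None], auto_speak: bool = True) -> None:
        self.speak = speak              # stand-in for the platform text-to-speech call
        self.auto_speak = auto_speak    # the "turn off automatic speaking" control
        self.last_reading: Optional[str] = None

    def on_reading(self, reading: str) -> None:
        """Called each time image processing produces a reading of the display."""
        changed = reading != self.last_reading
        self.last_reading = reading
        # Speak only when the display content changes, and only if auto-speak is on.
        if changed and self.auto_speak:
            self.speak(reading)

    def on_tap(self) -> None:
        """Tapping the middle of the screen reads the complete current contents."""
        if self.last_reading is not None:
            self.speak(self.last_reading)

    def set_auto_speak(self, enabled: bool) -> None:
        self.auto_speak = enabled


# Usage: collect spoken text in a list instead of calling a real speech engine.
spoken: List[str] = []
ui = SpeechController(spoken.append)
ui.on_reading("180 degrees")
ui.on_reading("180 degrees")   # unchanged, so not repeated
ui.set_auto_speak(False)
ui.on_reading("200 degrees")   # auto-speak is off, so stays silent
ui.on_tap()                    # a tap still reads the latest reading
print(spoken)                  # ['180 degrees', '200 degrees']
```

Real camera readings will probably flicker between frames, so in practice a reading might need to stay the same for a few frames before it is treated as an update; that debounce is left out here for brevity.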

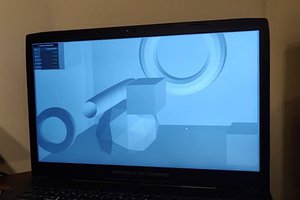

Image Processing

This is the main and most challenging part of the project. The app needs to find the display in the camera image and then determine which segments are lit. To make the implementation simpler, and hopefully more accurate, I intend to have a profile for each device. A profile will contain information such as where each segment is located and its meaning, the colour of the display and its surround, the shape of the display, etc.
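One way a profile could be represented, as a sketch only: the class and field names, and the choice of storing each segment as a box in fractions of the rectified display's width and height, are assumptions rather than a settled format. Storing fractions means the same profile works at whatever resolution the display image is warped to.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

RGB = Tuple[int, int, int]


@dataclass
class Segment:
    meaning: str  # what a lit segment means, e.g. "digit1.a" or "indicator.timer"
    # Box in the rectified display image, as fractions of its width and height.
    x: float
    y: float
    w: float
    h: float


@dataclass
class DisplayProfile:
    name: str
    display_colour: RGB    # colour of the display itself
    surround_colour: RGB   # colour of the panel around it, to help locate the display
    aspect_ratio: float    # display width / height, describing its shape
    segments: List[Segment] = field(default_factory=list)

    def segment_box(self, seg: Segment, width: int, height: int) -> Tuple[int, int, int, int]:
        """Pixel box (x0, y0, x1, y1) of a segment in a width x height rectified image."""
        return (round(seg.x * width), round(seg.y * height),
                round((seg.x + seg.w) * width), round((seg.y + seg.h) * height))


# Usage with made-up values for an imaginary oven display.
oven = DisplayProfile(
    name="Oven",
    display_colour=(20, 20, 20),
    surround_colour=(200, 200, 200),
    aspect_ratio=3.0,
    segments=[
        Segment("digit1.a", 0.05, 0.10, 0.15, 0.05),
        Segment("indicator.timer", 0.80, 0.10, 0.10, 0.10),
    ],
)
print(oven.segment_box(oven.segments[0], 300, 100))  # (15, 10, 60, 15)
```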

OpenCV provides various tools for finding the area of interest within an image, which I have yet to experiment with. If the four corners of the display can be identified accurately, the image can also be transformed so that it contains just the display, even if the camera isn't held perfectly straight.
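The corner-based correction is a perspective (homography) transform. OpenCV already provides `cv2.getPerspectiveTransform` and `cv2.warpPerspective` for this; the dependency-free sketch below only illustrates what the first of these computes, by solving the eight linear equations that four corner correspondences give for the eight unknowns of a 3x3 matrix whose bottom-right entry is fixed at 1. The function names are hypothetical, and the corners must be listed in the same order for source and destination.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corners (e.g. three are collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def perspective_transform(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 matrix H (with H[2][2] = 1) mapping four src corners onto four dst corners.

    Each pair gives u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1) and likewise for v,
    which rearranges into two equations that are linear in h0..h7.
    """
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_transform(h: Matrix, p: Point) -> Point:
    """Map one point through H, dividing by the homogeneous coordinate."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Usage: corners of a display photographed at an angle (top-left, top-right,
# bottom-right, bottom-left), mapped onto an upright 300 x 100 rectangle.
seen = [(102.0, 80.0), (410.0, 95.0), (400.0, 230.0), (95.0, 210.0)]
upright = [(0.0, 0.0), (300.0, 0.0), (300.0, 100.0), (0.0, 100.0)]
H = perspective_transform(seen, upright)
# Should reproduce the upright corners, up to floating-point rounding.
print([apply_transform(H, p) for p in seen])
```

To build the rectified image, each destination pixel is mapped back into the camera image with the inverse transform and sampled there; `cv2.warpPerspective` handles that inversion and the interpolation.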

Once the image contains only the display area, it should be straightforward to tell which segments are lit, since the profile gives the position of each segment.
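A minimal sketch of that step, assuming the display has already been warped to a grayscale image (a list of rows of 0 to 255 values here, to keep it dependency-free) and that the profile supplies a pixel box for each of the seven segments of a digit. A segment counts as lit when its mean brightness crosses a fixed threshold; the threshold value, the `lit_is_bright` flag for dark-on-light LCDs, and all function names are illustrative assumptions. The lit set is then looked up in the standard seven-segment table.

```python
from typing import Dict, FrozenSet, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # x0, y0, x1, y1 (x1 and y1 exclusive)

# Standard segment names: a top, b upper right, c lower right, d bottom,
# e lower left, f upper left, g middle.
DIGITS: Dict[FrozenSet[str], str] = {
    frozenset("abcdef"): "0", frozenset("bc"): "1", frozenset("abdeg"): "2",
    frozenset("abcdg"): "3", frozenset("bcfg"): "4", frozenset("acdfg"): "5",
    frozenset("acdefg"): "6", frozenset("abc"): "7", frozenset("abcdefg"): "8",
    frozenset("abcdfg"): "9",
}


def mean_brightness(img: Sequence[Sequence[int]], box: Box) -> float:
    x0, y0, x1, y1 = box
    values = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    return sum(values) / len(values)


def lit_segments(img: Sequence[Sequence[int]], boxes: Dict[str, Box],
                 threshold: float, lit_is_bright: bool = True) -> FrozenSet[str]:
    """Names of segments whose mean brightness is on the 'lit' side of threshold."""
    return frozenset(name for name, box in boxes.items()
                     if (mean_brightness(img, box) > threshold) == lit_is_bright)


def decode_digit(lit: FrozenSet[str]) -> str:
    """Map a set of lit segments to a digit, or '?' if the pattern is unknown."""
    return DIGITS.get(lit, "?")


# Usage: a synthetic 20 x 30 dark image with segments b and c painted bright.
BOXES: Dict[str, Box] = {
    "a": (4, 0, 16, 3), "b": (16, 3, 20, 14), "c": (16, 16, 20, 27),
    "d": (4, 27, 16, 30), "e": (0, 16, 4, 27), "f": (0, 3, 4, 14),
    "g": (4, 14, 16, 16),
}
img: List[List[int]] = [[0] * 20 for _ in range(30)]
for name in "bc":
    x0, y0, x1, y1 = BOXES[name]
    for y in range(y0, y1):
        for x in range(x0, x1):
            img[y][x] = 255
print(decode_digit(lit_segments(img, BOXES, threshold=128)))  # 1
```

Some devices draw 6, 7 and 9 with an extra or missing segment, so a profile may need to override the digit table. A fixed threshold is the simplest choice; an adaptive one such as Otsu's method (available through `cv2.threshold` with `cv2.THRESH_OTSU`) would likely cope better with changing lighting.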

Andrew Kadis
