With the basic wander / avoid / backup behaviors in place, it's time to expand the virtual drones' behaviors so that they can co-operate with each other. As these machines must, at least in theory, be realizable as physical devices, this needs to be done within the confines of the subsumption framework. (It would not do to assume the existence of some overarching algorithm with global access to every drone that modifies their behavior from the outside. Such an approach would not be practical for a physical swarm.)

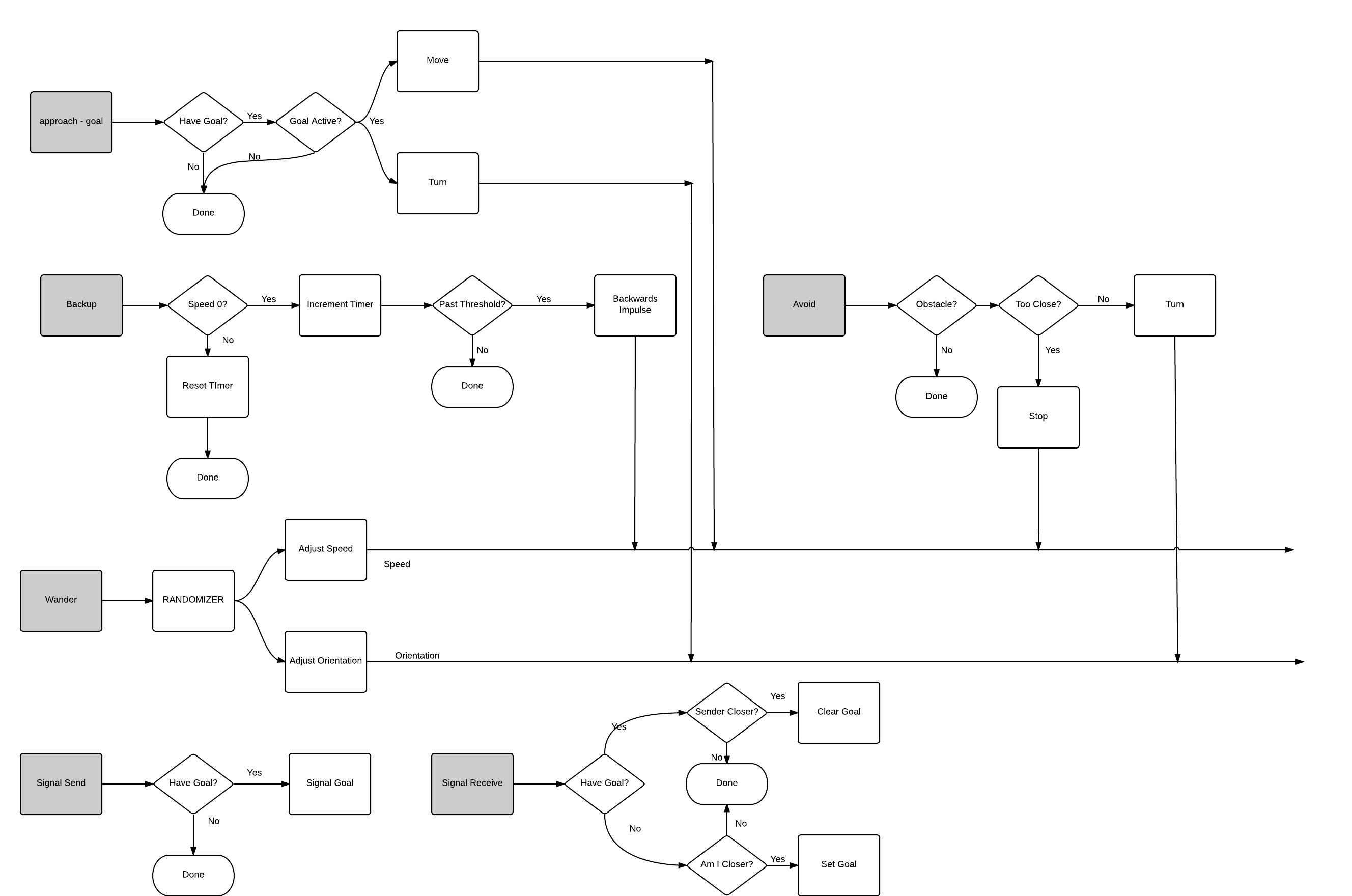

Co-ordination is achieved using three new behaviors, which I have added to the updated subsumption architecture shown in the attached diagram.

approach - goal: This requires the ability to store a goal state. The goal state is assumed to be held in memory shared between all modules. If the system currently has a goal state, and that goal still shows up in the sensory input, this behavior moves the individual machine towards the goal.
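To make the approach - goal rule concrete, here is a minimal Python sketch. It is not the simulation's actual code: `SharedMemory`, `approach_goal`, the 2D coordinates, and the `speed` parameter are all hypothetical names and assumptions chosen for illustration, and the shared memory is assumed to be local to a single drone.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class SharedMemory:
    """Hypothetical per-drone memory that every behavior module can read."""
    goal_id: Optional[str] = None


def approach_goal(memory: SharedMemory,
                  position: Point,
                  visible_goals: Dict[str, Point],
                  speed: float = 1.0) -> Optional[Point]:
    """Return a (dx, dy) step towards the stored goal.

    Returns None when the behavior should not fire: either no goal is
    stored, or the stored goal no longer shows up in the sensory input.
    """
    if memory.goal_id is None:
        return None
    target = visible_goals.get(memory.goal_id)
    if target is None:
        return None  # goal is stored but not currently sensed
    dx = target[0] - position[0]
    dy = target[1] - position[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # already on top of the goal
    step = min(speed, dist)  # do not overshoot the goal
    return (dx / dist * step, dy / dist * step)
```

Returning `None` rather than a zero step lets lower layers such as wander keep control whenever this behavior has nothing to contribute.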

signal send: This is a simple decision behavior: if there is a goal in the shared memory, send a signal to nearby swarm mates that the individual is pursuing it.
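As a rough sketch, the signal send decision could look like the following in Python. The `GoalSignal` record and its fields are hypothetical. In particular, including the sender's distance to the goal in the message is an assumption, made so that receivers have something to compare against.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GoalSignal:
    """Hypothetical broadcast message: who is pursuing what, and from how far."""
    sender_id: str
    goal_id: str
    distance: float  # assumption: the sender reports its own distance to the goal


def signal_send(drone_id: str,
                goal_id: Optional[str],
                distance_to_goal: float) -> Optional[GoalSignal]:
    """Build the signal to broadcast, or None if there is no goal in memory."""
    if goal_id is None:
        return None  # nothing to announce
    return GoalSignal(sender_id=drone_id, goal_id=goal_id,
                      distance=distance_to_goal)
```

How the message actually reaches nearby swarm mates (radio, light, simulated range check) is left out here on purpose.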

signal receive: This is the key to how these devices will co-operate. When signals are received, each individual compares them against its own current goal. If it has a current goal, but a local swarm mate has signaled that it is closer to that goal, the individual forgets the goal. If it has no current goal, and it is closer to a detected goal than any of the signaled goal chasers are, it takes up the goal.
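The comparison in signal receive can be sketched as a small pure function. This is one possible reading of the rule, not the project's implementation: the function name, the `(goal_id, distance)` signal format, the per-goal comparison, the tie handling, and picking the nearest goal when several qualify are all assumptions.

```python
import math
from typing import Dict, List, Optional, Tuple


def signal_receive(current_goal: Optional[str],
                   detected: Dict[str, float],
                   signals: List[Tuple[str, float]]) -> Optional[str]:
    """Return the goal this drone should hold after processing signals.

    detected maps each goal this drone can sense to its own distance.
    signals holds (goal_id, distance) pairs reported by nearby swarm mates.
    """
    # Smallest distance claimed by any swarm mate, per goal.
    best_claim: Dict[str, float] = {}
    for goal_id, dist in signals:
        best_claim[goal_id] = min(dist, best_claim.get(goal_id, math.inf))

    if current_goal is not None:
        own = detected.get(current_goal, math.inf)
        if best_claim.get(current_goal, math.inf) < own:
            return None  # a swarm mate is closer: forget the goal
        return current_goal  # assumption: keep the goal on a tie

    # No current goal: consider goals where this drone beats every claimant.
    candidates = [(dist, goal_id) for goal_id, dist in detected.items()
                  if dist < best_claim.get(goal_id, math.inf)]
    if not candidates:
        return None
    return min(candidates)[1]  # assumption: prefer the nearest such goal
```

One consequence of this particular tie rule is that two drones at exactly the same distance would both keep the goal. A real swarm would probably need a tie-breaker such as a drone id.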

This should create a form of competition between the individual swarm mates, where the winner will be the device best suited to achieving the goal. Later on, when grapple, drop, tag, and map actions are added, behaviors can be added that subsume the signal send and signal receive behaviors to enforce prerequisites for certain tasks. For example, a grapple behavior could subsume the signal send behavior in cases where the individual is chasing a COLLECT goal while already carrying another object.
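The COLLECT example can be illustrated with a small Python sketch of one layer suppressing another's output. The grapple behavior does not exist yet, so everything here is hypothetical: the function names, the `"PURSUING"` message tuple, and the `carrying` flag are placeholders for whatever the later design ends up using.

```python
from typing import Optional, Tuple

Signal = Tuple[str, str]


def signal_send(goal_id: Optional[str]) -> Optional[Signal]:
    """Lower layer: announce the goal being pursued, if there is one."""
    if goal_id is None:
        return None
    return ("PURSUING", goal_id)


def grapple_suppresses_send(goal_kind: Optional[str], carrying: bool) -> bool:
    """Higher layer: a drone that is already carrying an object should not
    advertise itself as a contender for a COLLECT goal."""
    return goal_kind == "COLLECT" and carrying


def outgoing_signal(goal_id: Optional[str],
                    goal_kind: Optional[str],
                    carrying: bool) -> Optional[Signal]:
    """Combine the two layers: the grapple layer can block signal send."""
    if grapple_suppresses_send(goal_kind, carrying):
        return None  # signal send is subsumed, nothing is broadcast
    return signal_send(goal_id)
```

Because a silent drone never claims to be closer, its swarm mates remain free to take up the COLLECT goal themselves.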

My goal over the next few days is to update the current simulation with the new behaviors and test them out. Once they have been integrated and tested, I'll update the code on GitHub so that anyone interested can pull the code and play around with these methods themselves.

Gene Foxwell
