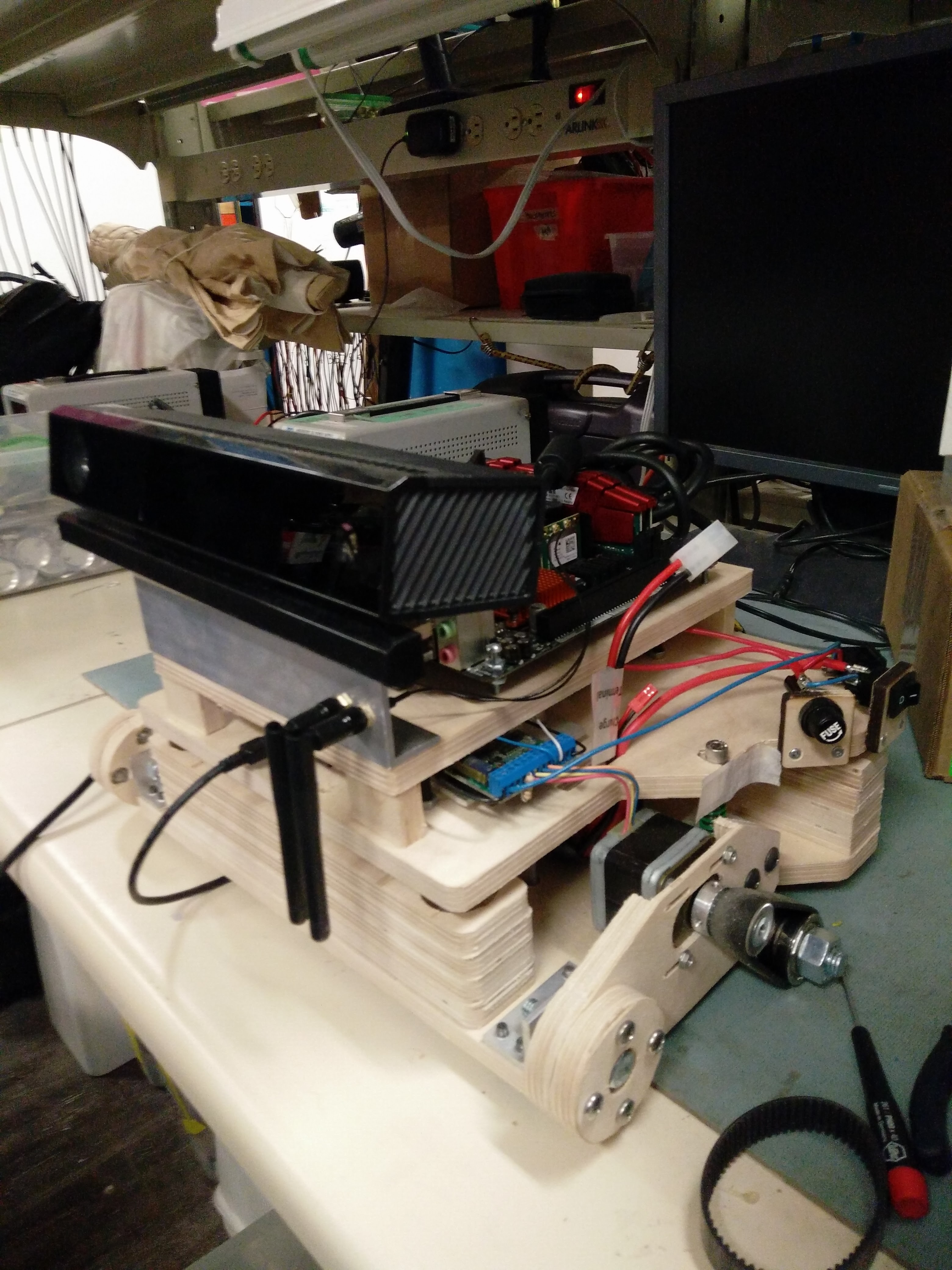

I've been experimenting with 3D depth SLAM using feature-based visual SLAM in ROS. The basic concept is to take each depth frame, extract visual features with a feature detector (SIFT, SURF, ORB, etc.), and determine the robot's movement (cyan) from the associations between feature points in successive frames.
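To make the "movement from feature associations" step concrete, here is a minimal sketch in plain Python. It is not the ROS package's implementation. It assumes the features have already been detected and matched, that each match carries a metric position from the depth data, and that the robot moves on a flat floor so the problem reduces to 2D. The function name `estimate_rigid_2d` and the sample points are made up for illustration.

```python
import math


def estimate_rigid_2d(prev_pts, curr_pts):
    """Least-squares rotation angle and translation mapping prev_pts onto curr_pts.

    Both arguments are equal-length lists of (x, y) tuples, where entry i in
    each list is the same physical feature seen in two successive frames.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n

    # Accumulate dot and cross products of the centered pairs; their ratio
    # gives the tangent of the best-fit rotation angle.
    s_cos = 0.0
    s_sin = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx

    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)

    # Translation that carries the rotated previous centroid onto the current one.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


# Hypothetical matched features: the same four points seen in two frames,
# with the second frame related to the first by a known rotation and shift.
frame_a = [(1.0, 2.0), (-0.5, 3.0), (2.0, 4.5), (0.0, 1.5)]
true_theta, true_tx, true_ty = 0.1, 0.5, -0.2
frame_b = [
    (
        math.cos(true_theta) * x - math.sin(true_theta) * y + true_tx,
        math.sin(true_theta) * x + math.cos(true_theta) * y + true_ty,
    )
    for x, y in frame_a
]

theta, tx, ty = estimate_rigid_2d(frame_a, frame_b)
```

The result is the transform of the observed points between frames; for a static scene, the robot's own motion is the inverse of that transform. A real pipeline would also have to reject bad matches (for example with RANSAC) before this step, since a few wrong associations can skew the estimate badly.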

This approach works pretty well when lighting is constant and the depth data is clean, although it's very computationally expensive. With a Kinect v2 the resulting maps are very usable, but with a RealSense R200 I get frequent erroneous loop closures. Improving the SLAM algorithm would take too much work, so I think I will opt for tried-and-true 2D LIDAR for room mapping instead.

Jack Qiao

