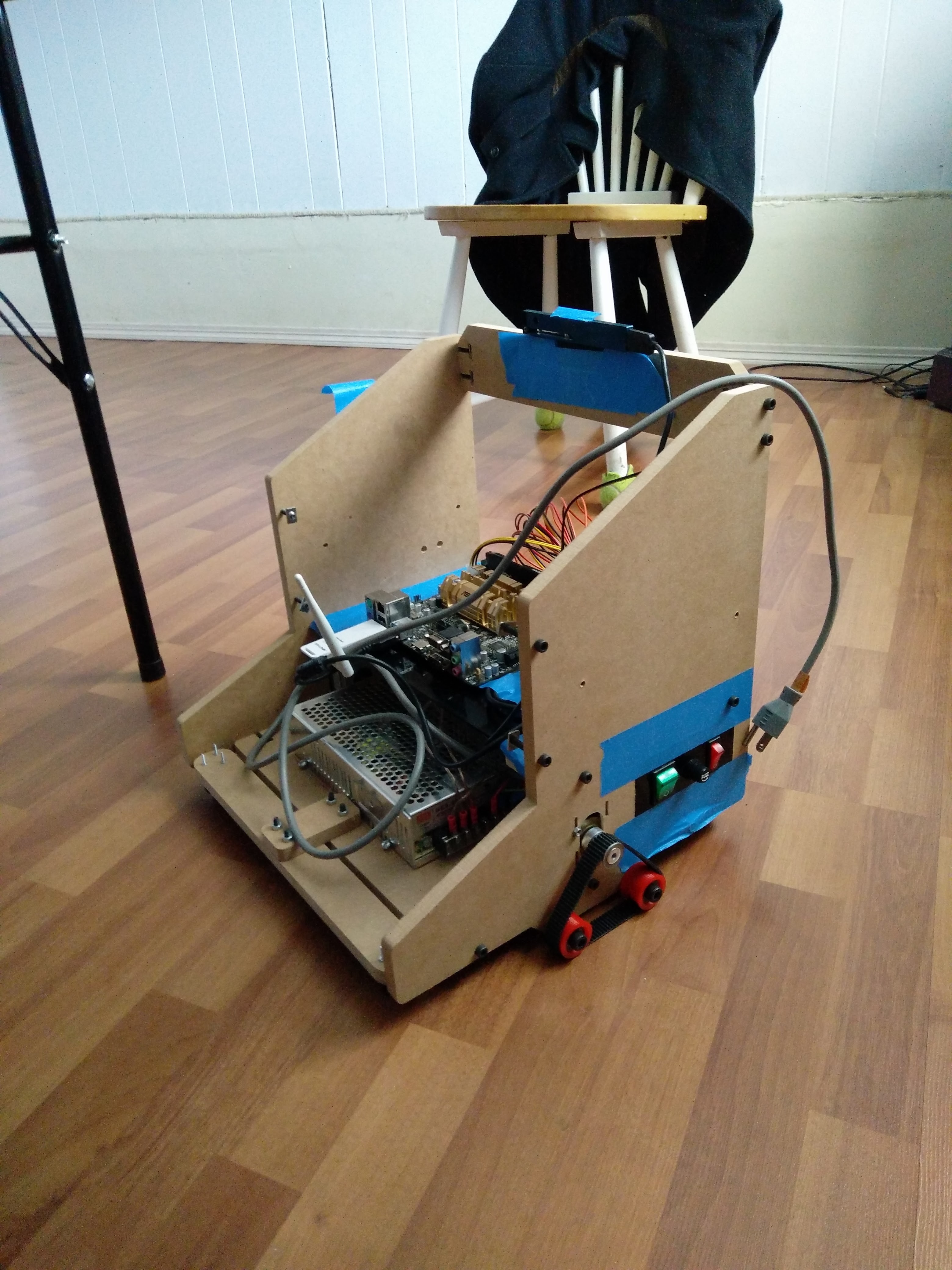

The first prototype had some issues, the biggest of which was that it had no on-board computer and had to be tethered to my laptop. Here I've made a DIY dev platform that runs a Mini-ITX board. It's still tethered by a power cord, though.

With this platform I've experimented with three different approaches to 2D SLAM:

1. A "Fake" lidar scan from depth-sensor data

2. Laser line projector and triangulation

3. A Neato XV-11 LIDAR

Method 1 is basically what the TurtleBot uses, and it works OK for the most part. The biggest problem with this method is that the depth sensor's field of view is rather limited, so there are many cases where you have no data to do SLAM with.
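To make the "fake" scan idea concrete, here is a minimal sketch of how one row of a depth image could be turned into lidar-style (angle, range) pairs. It is not the code running on the robot: the function name `depth_row_to_scan`, the pinhole camera model, and the 57 degree default field of view are all assumptions picked for illustration.

```python
import math

def depth_row_to_scan(depth_row, hfov_deg=57.0):
    """Convert one row of depth values into (angle, range) pairs.

    depth_row: depths in metres along the optical axis, one per pixel column.
    hfov_deg:  assumed horizontal field of view of the sensor (hypothetical default).
    """
    width = len(depth_row)
    cx = (width - 1) / 2.0  # assume the principal point is the row centre
    # Focal length in pixels from the pinhole model.
    fx = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

    scan = []
    for u, z in enumerate(depth_row):
        angle = math.atan2(u - cx, fx)  # bearing of this column, radians
        if z is None or z <= 0 or math.isnan(z):
            rng = math.inf              # no return for this bearing
        else:
            rng = z / math.cos(angle)   # axial depth -> distance along the ray
        scan.append((angle, rng))
    return scan
```

The angles only ever span `hfov_deg`, which is the limitation described above: a real lidar covers 360 degrees, while this scan covers a narrow wedge in front of the robot.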

Method 2 I saw on this Hackaday post. I used a 20 mW laser line projector, a 650 nm band-pass filter and a USB camera. The challenging part of this method is noise filtering: whenever the camera or an obstacle moves, it shows up in the inter-frame difference along with the laser line. While the band-pass optical filter limits the absolute level of the noise, the laser line also quickly drops to near the noise floor with distance because its power is spread across the fan. The 20 mW laser was only usable to about 2 meters. A very specific problem that I encountered was computer monitors: when the camera moves, a monitor in view produces a difference that looks exactly like a laser line. Overall I don't think this works particularly well for a moving robot.
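A rough sketch of the two steps involved, frame differencing and triangulation, might look like the following. It assumes the laser is toggled between frames, that frames are grayscale lists of rows, and that the laser plane is parallel to the optical axis at a fixed offset from the camera. The names `laser_rows` and `row_to_depth` and the threshold value are made up for this example.

```python
import math

def laser_rows(frame_on, frame_off, threshold=30):
    """For each column, find the row where (laser on - laser off) is brightest.

    Returns one row index per column, or None where no difference
    exceeds the threshold (line lost in the noise floor).
    """
    height = len(frame_on)
    width = len(frame_on[0])
    rows = []
    for u in range(width):
        best_row, best_diff = None, threshold
        for v in range(height):
            diff = frame_on[v][u] - frame_off[v][u]
            if diff > best_diff:
                best_row, best_diff = v, diff
        rows.append(best_row)
    return rows

def row_to_depth(v, cy, fy, baseline_m):
    """Triangulate depth from the image row of the laser line.

    Assumes the laser plane is parallel to the optical axis and offset
    by baseline_m, so depth = fy * baseline / pixel offset from cy.
    """
    offset = v - cy
    if offset <= 0:
        return math.inf  # line at or past the horizon: out of range
    return fy * baseline_m / offset
```

Because depth is inversely proportional to the pixel offset, far obstacles are squeezed into a few rows near `cy`, so a small detection error there becomes a large range error. Anything else that changes between the two frames, such as camera motion, passes the threshold just like the laser does.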

I've had much better results with the Neato LIDAR. Although it also measures distance by parallax, the data I'm getting is a lot more reliable at longer range.

Jack Qiao
