Where's My Keyboard?

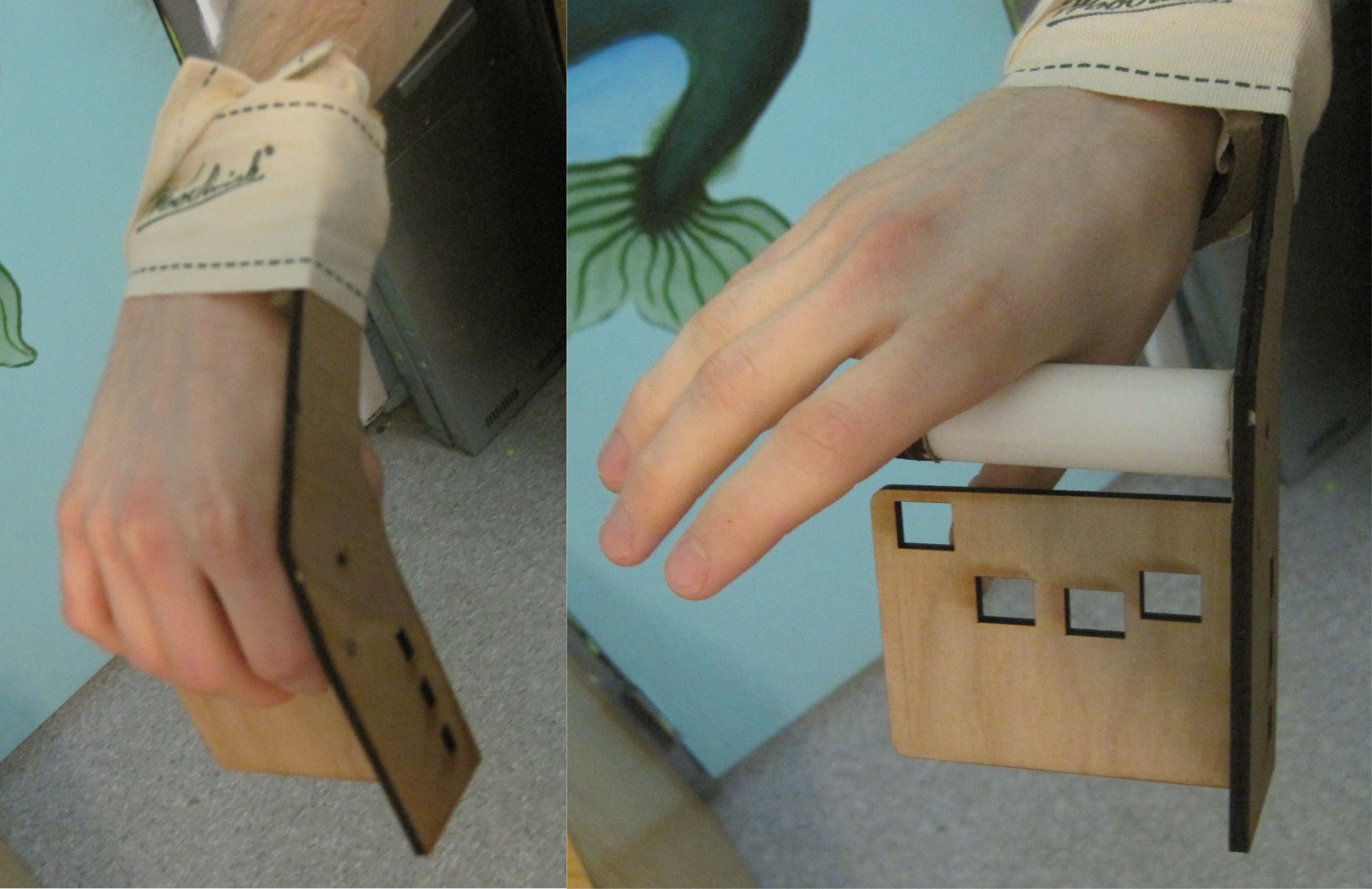

Once you've run into this problem it seems obvious: when you put on a head-mounted display, you still need your keyboard or mouse to start whatever VR environment you want to play with. But you can't see your keyboard and mouse anymore, because the display blocks your vision. What do you do? Most people grope around awkwardly for a bit before finding the right device.

Once a virtual environment is running, you are usually set. Either the experience does not require user input (it is movie-like), or some other input, such as head tracking, a Kinect, or voice control, takes over. However, the current selection of VR interaction modes is very limiting because it leaves out the interaction channel we are most accustomed to when working with computers: text. Leaving out text can be a wonderful benefit in virtual reality, letting us forget that we're just having our buttons pushed by a number-crunching machine, but it also rules out many interesting applications.

Motivating Example: Creating virtual worlds

Project Philosophy

This video is what started my mind churning on this project. As a programmer who's also an artist, I find that the possibilities for creative construction within virtual reality excite my imagination like nothing else in technology today. Usually when I'm making things, there's a hard wall between the work I do with my hands and the work I do with a computer. I'm forced to use the "physical knowledge" stored in my muscles in an entirely different context from the abstract knowledge I use to interact with computers, even though all of the work serves the same goal. Virtual reality offers a chance to break down that divide. It could let me design and build digital and physical objects with my hands and mind at the same time.

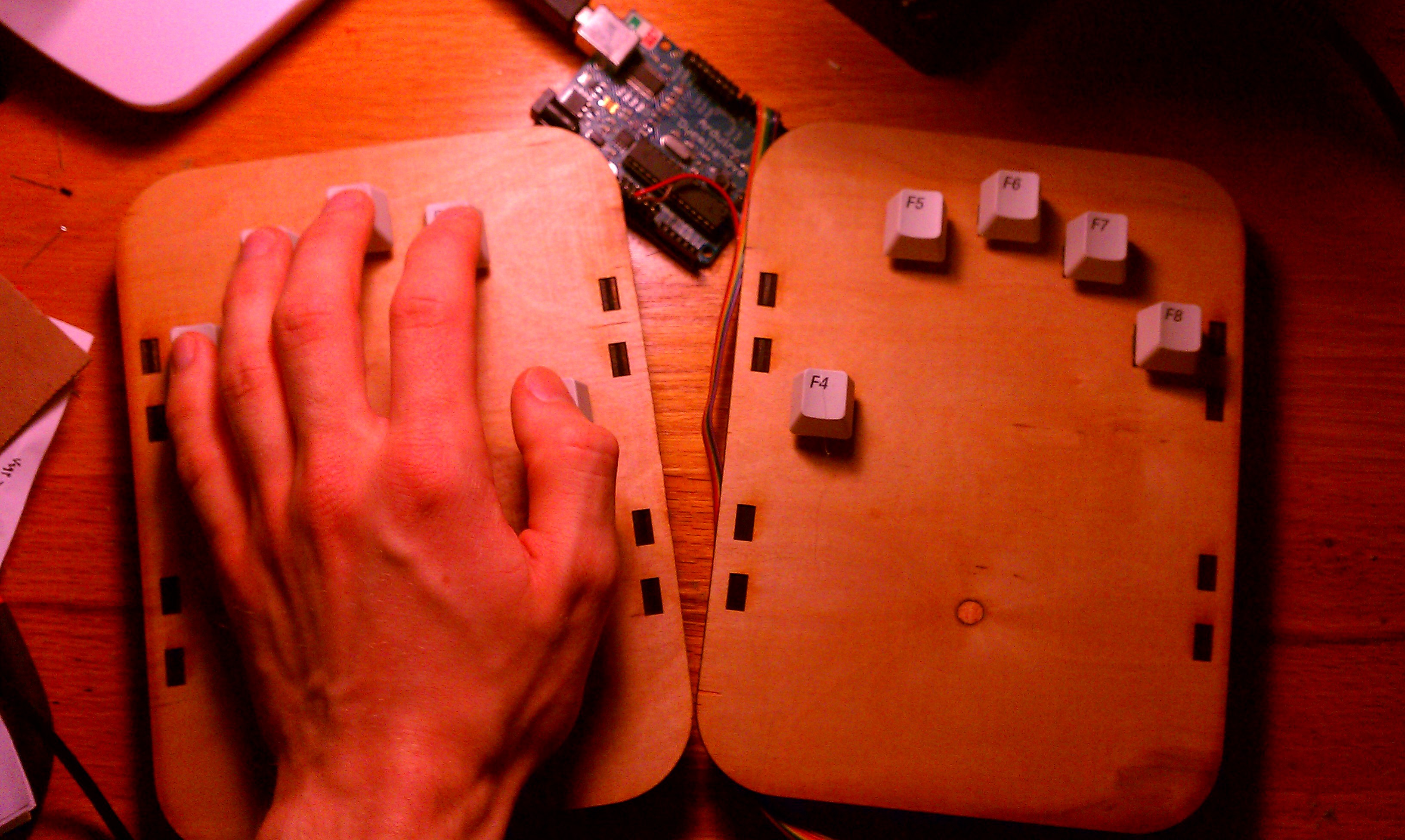

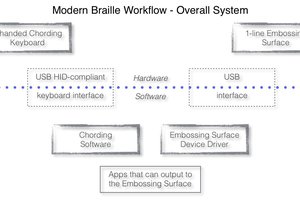

Once virtual reality masters both the interaction that is conventional in the physical world (body movement) and, thanks to projects like Keychange, the interaction that is conventional on computers (text), we will cross the threshold of a revolution in how humans use computers as tools. I'll be able to put on goggles, pick up Keychange, and sculpt something like a robot with my bare hands, using the interaction that is intuitive for that purpose. At any moment I'll be able to switch to modifying its behavior using the interaction that is intuitive for the computer: text. Then I'll immediately see how well it works and modify the design as needed. When I'm done, I'll have the computer send the designs to places all over the world, which will fabricate the parts, maybe assemble them, and ship the result back to me.

I think it's clear why this would be revolutionary. The design-build loop would shrink close to its limit (unless teleportation or matter compilers come along to revolutionize shipping), and the pace of technological progress could increase a thousandfold.

Owen Trueblood

Pamungkas Sumasta

Vijay

Paul_Beaudet

Danny Caudill

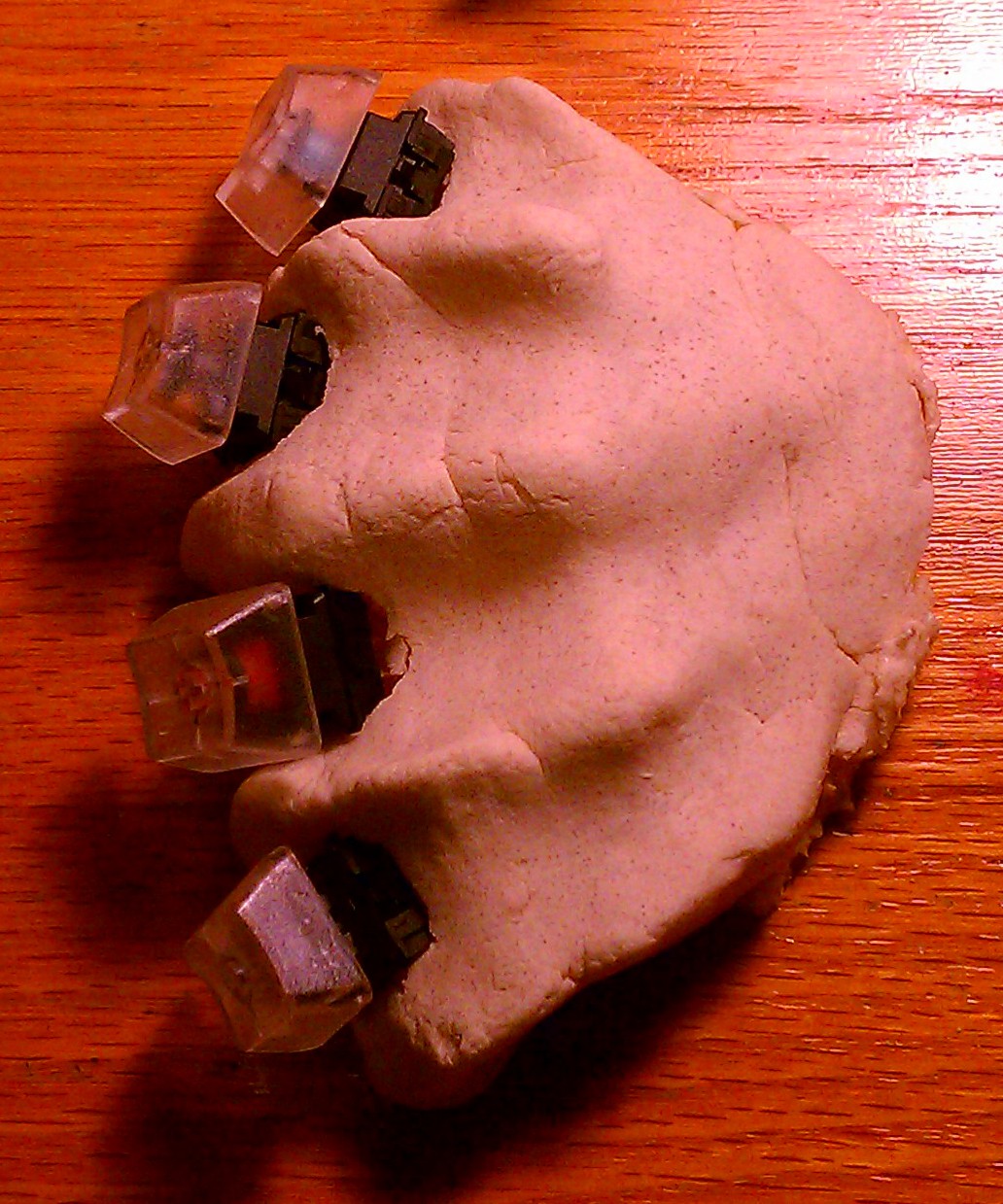

I can only find six keys in your picture. Where is the seventh? I like the simplicity of your mechanical layout (it looks easy to build and comfortable); I guess you should just make the pinky key somewhat more accessible. Btw, my keyboards are at http://www.jwwulf.de/en/kbd/intro.html