I think the skin now is near enough complete.

Just as well really; Mary Shelley would be proud lol. I'm used to monsters, but even I'm a little bothered by this.

Cybeardyne Systems T101 Battle Chassis.

"Hey, buddy. You got a dead cat in there?"

Hello Handsome. ;-)

Interfacing to the hardware

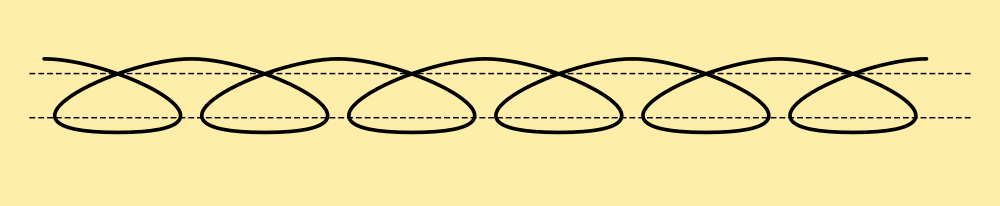

Interfacing to the Echo Dot, so that the Panda's eye replicates the light ring right round its top, proved to be a little interesting. I've solved this with waveguides: optical cable, glued to a transparent sleeve that fits the Echo, leads to a pixel ring in the eye made with epoxy poured into a mould. The eye also houses the ranging laser.

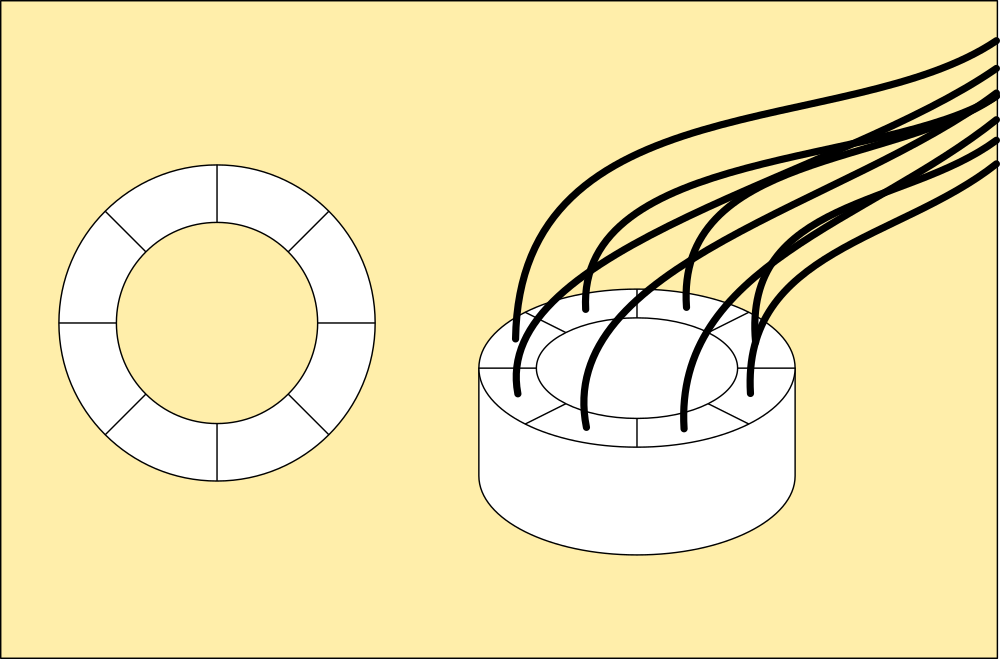

The ability to injure is something I've been thinking about a lot. This is a biometric sensor, made with a flat coil of magnet wire stitched to a strip of fabric. It connects via a large-value resistor to a pair of MCU pins, so it can be charged and discharged. The MCU can also time how long the coil takes to charge or discharge. Nearby capacitance slows that down, so the timing tells the MCU whether the sensor is close to a capacitive source. Usually foil patches are used as the antenna, for touch sensing.
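
To make the timing idea concrete, here is a minimal Python model of the RC charge curve, not firmware. The supply voltage, input threshold, resistor and capacitance values are all assumptions chosen for illustration, since the log doesn't give them. It shows why a body near the coil is measurable: extra capacitance stretches the time the pin takes to read high.

```python
import math

# Illustrative values only; the build log does not state any of these.
SUPPLY_VOLTS = 5.0      # assumed MCU supply voltage
THRESHOLD_VOLTS = 2.5   # assumed input-high threshold, roughly Vcc / 2


def charge_time(resistance_ohms: float, capacitance_farads: float) -> float:
    """Seconds for the sensor node to charge from 0 V up to the input threshold.

    V(t) = Vcc * (1 - exp(-t / RC)) rearranges to t = -RC * ln(1 - Vth / Vcc).
    """
    rc = resistance_ohms * capacitance_farads
    return -rc * math.log(1.0 - THRESHOLD_VOLTS / SUPPLY_VOLTS)


if __name__ == "__main__":
    r = 1e6          # hypothetical 1 Mohm "large-value" resistor
    coil = 20e-12    # hypothetical self-capacitance of the coil antenna, 20 pF
    body = 30e-12    # hypothetical extra capacitance coupled in by a nearby body
    idle = charge_time(r, coil)
    near = charge_time(r, coil + body)
    print(f"nothing nearby: {idle * 1e6:.2f} us, body nearby: {near * 1e6:.2f} us")
```

On the MCU, the equivalent is counting loop iterations until the receive pin reads high. Those counts are small and noisy, so in practice several readings would be averaged.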

However, touch isn't the only thing a Capsense sensor can detect: it can also detect the presence of a person at a distance, actually several feet away. It's used primarily for touch, though. Standard lamps turn on and off at the brush of skin, but not of clothing, just by measuring the strength of the field. Lamps, however, are not capable of deciding to touch you to find out whether they should switch on or off, and a robot is. At least, it is when wearing a bitwise grid of these antennas under its fur, or lining its airframe in all directions.
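
A lamp only needs one threshold. A robot with several antennas can use two: a small rise over baseline for "someone is near" and a large one for "skin contact". It can then compare antennas to get a rough direction. The sketch below assumes each antenna reports a charge-time reading with a per-antenna baseline calibrated at start-up. The threshold fractions and antenna names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Presence(Enum):
    NONE = 0
    PROXIMITY = 1   # something capacitive within range, not touching
    TOUCH = 2       # strong coupling, e.g. bare skin on the antenna


@dataclass
class Antenna:
    name: str        # hypothetical label such as "front" or "left"
    baseline: float  # charge time with nothing nearby, calibrated at start-up


# Hypothetical thresholds: fractional rise of the reading over its baseline.
PROXIMITY_RISE = 0.10
TOUCH_RISE = 1.00


def rise(antenna: Antenna, reading: float) -> float:
    """Fractional increase of a reading over that antenna's baseline."""
    return (reading - antenna.baseline) / antenna.baseline


def classify(antenna: Antenna, reading: float) -> Presence:
    r = rise(antenna, reading)
    if r >= TOUCH_RISE:
        return Presence.TOUCH
    if r >= PROXIMITY_RISE:
        return Presence.PROXIMITY
    return Presence.NONE


def strongest(antennas: List[Antenna],
              readings: List[float]) -> Optional[Tuple[str, Presence]]:
    """Name and presence level of the antenna seeing the biggest rise, or None."""
    best = max(zip(antennas, readings), key=lambda pair: rise(*pair), default=None)
    if best is None or classify(*best) is Presence.NONE:
        return None
    antenna, reading = best
    return antenna.name, classify(antenna, reading)
```

Using a relative rise rather than a raw count lets antennas of different sizes share one pair of thresholds.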

For the first time, as far as I am aware at any rate, a machine understands the difference between alive and dead by testing its hypothesis for itself using dedicated sensors. AIMos is keyed to movement, and understands that something moving is either alive or set in motion by life. AI systems like that then have to figure out the content of the image to decide what the moving object is, and infer from that whether it is alive. Biometric sensing cuts across that process, so the robot can pay attention to only living things.
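
One way to picture "cutting across" the vision step is a filter that only passes along movement lining up with an antenna currently reporting a live presence. This is a hypothetical illustration, not how AIMos is actually wired. The bearings, the event fields and the 20 degree tolerance are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MotionEvent:
    bearing_deg: float  # direction of the movement, from a motion tracker (assumed)
    label: str          # whatever the vision stage guessed it was (assumed)


def angular_gap(a: float, b: float) -> float:
    """Smallest angle between two bearings in degrees, handling wrap-around."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def living_targets(events: List[MotionEvent], live_bearings: List[float],
                   tolerance_deg: float = 20.0) -> List[MotionEvent]:
    """Keep only movement that lines up with an antenna reporting a live presence."""
    return [
        event for event in events
        if any(angular_gap(event.bearing_deg, b) <= tolerance_deg for b in live_bearings)
    ]
```

A curtain moving in a draught is dropped here without the vision stage ever having to work out what it is.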

This brings the exciting possibility of hard-coding Asimov's Three Laws into the system, again for the very first time. As defined, the Three Laws are rather abstract and are more directives governing entire behaviours. Pandelphi's behaviours are fairly simple: the drone reacts to the possibility of attack from pets while monitoring and recording the scene in front of it, monitors its batteries, avoids obstructions and, within those parameters, complies with instructions. Cub's behaviours are slightly more complex, but harness the same technologies to do the same job.

These are all programmed responses that I can hard-code in autonomically. Things like not laser-ranging a face by mistake, or not allowing a child's fingers near the duct ports on the drone, qualify as within the scope of the Three Laws, as does not crashing while avoiding a pet attack.
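
A minimal sketch of what "hard-coded" could mean in practice: a set of vetoes checked before any behaviour runs, layered over a simple priority list. The priority order follows the description of Pandelphi above (pets, then batteries, then instructions obeyed only within those limits) rather than Asimov's strict ordering. Every action name and field here is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical action names; nothing here is taken from the Pandelphi firmware.
FAN_ACTIONS = {"spin_up_fans", "evade", "return_to_dock"}


@dataclass
class Situation:
    face_in_laser_path: bool = False
    person_near_ducts: bool = False
    obstacle_ahead: bool = False
    pet_attacking: bool = False
    battery_low: bool = False
    instruction: Optional[str] = None   # e.g. "laser_range" or "spin_up_fans"


def allowed(action: str, s: Situation) -> bool:
    """Hard-coded vetoes, checked before any behaviour is allowed to run."""
    if action == "laser_range" and s.face_in_laser_path:
        return False                    # never range a face
    if action in FAN_ACTIONS and s.person_near_ducts:
        return False                    # keep fingers away from spinning ducts
    if action == "evade" and s.obstacle_ahead:
        return False                    # don't dodge a pet straight into something
    return True


def choose(s: Situation) -> str:
    """Pick the highest-priority candidate action that passes every veto."""
    candidates: List[str] = []
    if s.pet_attacking:
        candidates.append("evade")
    if s.battery_low:
        candidates.append("return_to_dock")
    if s.instruction:
        candidates.append(s.instruction)  # instructions only within the limits above
    for action in candidates:
        if allowed(action, s):
            return action
    return "idle"
```

Keeping the vetoes separate from the priorities means no behaviour, however urgent, can bypass them. For example, `choose(Situation(pet_attacking=True, person_near_ducts=True))` falls back to `"idle"` instead of spinning up the fans to dodge.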

Things could get hairy. ;-)

Morning.Star


Discussions


This is looking great. Now I don't feel quite so weird for having several sets of human-sized legs in my room, knowing that you have a life-size gutted robot panda hanging around, lol!


Haha, can I quote you on that? I do get called weird rather a lot. ;-)

Your legs are looking ever more spectacular; they stand and move really well, a lot stronger than I expected a print to be. Keeping a beady eye on them lol. ;-)

"Nerds" XD
