We built an FPV system for our quadcopter and, to really enjoy the scenery while avoiding the huge lag of servoed cameras, opted to gimbal the image in software instead. On our quad we have a stabilized GoPro and a video transmitter. At the ground station the video is received, captured to a laptop with a cheap USB stick, distortion corrected (remember, GoPro), virtually rotated to match the HMD orientation, augmented with virtual instrumentation and predistorted for the Oculus Rift. The resulting system has the minimal orientational lag possible for the Oculus Rift (perhaps 30 ms, dominated by the frame rate, buffering at both ends and the LCD response) and a totally acceptable, minimal sway. Naturally the lag of the actual video content from sky to eye is larger, but much less obvious.

While the FOV of the Rift is sweet indeed (bringing us back to the golden age of VR), the FOV of the GoPro is larger still. This is excellent, as we now have a source image that can completely fill the pilot's view. There is even some room to look around before noticing the edge of the camera view, or the virtual cockpit window, which it really is.

Alert readers have already noticed that we have only one camera onboard, yet we present a stereo view. The justification is that we intend to fly high, instead of skimming the surface or flying indoors, and the first-order estimate of the maximum distance the Rift can differentiate from infinity is around 30 m. Estimates of the maximum distance for human stereo vision vary from 5 m to 1 km (depending on which source you believe); beyond that everything is 'far'. The definition is very complicated, which is why the estimates differ so much. In practice, the image pair generated from the single video corresponds to the case where everything is comfortably distant. And if the objects are not distant in reality, the alternative interpretation of the virtual view is that the pilot is infinitely small, and indeed indoor scenes appear to be filmed in the houses of giants. Nevertheless, this latency-minimizing scheme is equally applicable to the two-camera case.
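
The text does not say how the 30 m figure was derived. One plausible first-order argument is that an object looks no different from infinity once its binocular disparity shrinks below one display pixel. The sketch below works that out. All the numbers are assumptions for illustration and are not taken from the text: a 64 mm interpupillary distance, 640 horizontal pixels per eye and roughly 90 degrees of horizontal FOV per eye.

```python
import math

def stereo_range_limit(ipd_m, fov_deg, pixels_per_eye):
    """Distance at which binocular disparity drops to one display pixel.

    First-order model: pixels are spread evenly over the field of view,
    and disparity for an object at distance d is about ipd / d radians.
    """
    pixel_angle = math.radians(fov_deg) / pixels_per_eye  # radians per pixel
    return ipd_m / math.tan(pixel_angle)

# Assumed, illustrative values (not from the text).
limit = stereo_range_limit(ipd_m=0.064, fov_deg=90.0, pixels_per_eye=640)
print(f"stereo range limit: about {limit:.0f} m")
```

With these assumed values the limit lands in the mid-twenties of metres, the same order of magnitude as the estimate quoted above. A narrower assumed FOV or a wider IPD pushes it further out.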

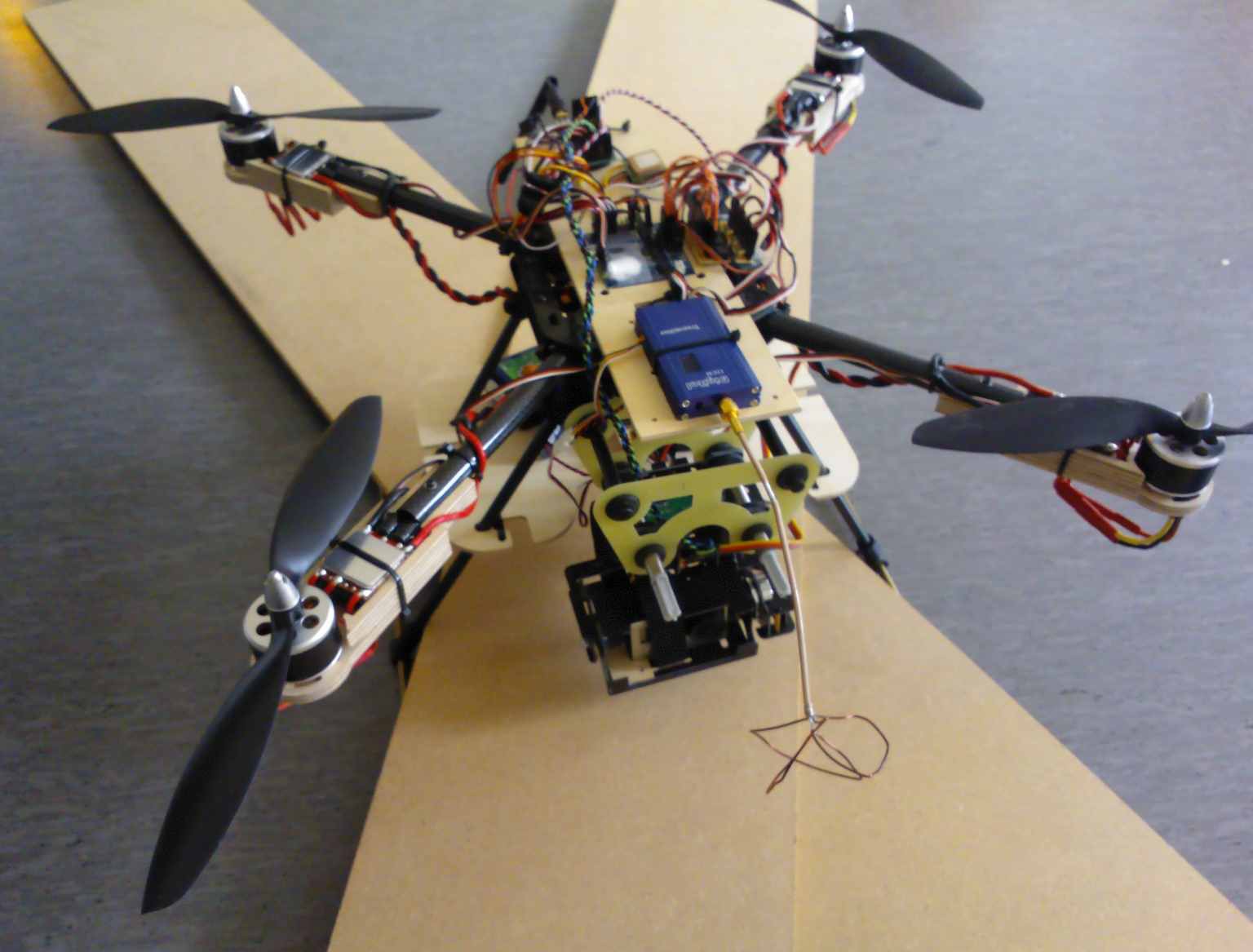

The basic framework of the quad was the Bumblebee from HK. As it turned out during several hours of first-time flying practice with a 3 DOF controller (it's very hard), the concept of using carbon fiber tubes and boards connected with fragile plastic joints is not for beginners. An accidental drop from 0.5 m always broke something. Slowly the parts got replaced with something more forgiving and also the amount of test electronics constantly increased, so the mound of rainbow spaghetti (figure 1) resembled the Bumblebee less and less.

Figure 1. The quad weighted down for magnetic deflection testing

The GoPro (Hero 3) was fixed to the X-Cam X100B gimbal, which was found to stabilize it very well. The original plan was to non-critically rotate the camera in the general direction of viewing, report the actual current direction in the telemetry data and then use this to draw the image at the laptop. It turned out that there was no documented way to read the direction, and the turning was extremely slow to begin with, so we decided to hold the camera in a fixed forward direction. The obvious solution would have been to rewrite the software of the gimbal, but that never got top priority. Maybe the FOV of the GoPro was already too good to motivate us. In hindsight, mounting the GoPro sideways and tilted down to cover both the front and below views could have given a still more enjoyable experience. We'll do that in the next version soon.
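
With the camera held in a fixed forward direction, the software gimbal reduces to a lookup: for each viewing ray in the headset frame, rotate it by the head orientation and find where that ray lands in the camera image. The following is a minimal sketch of that idea, not the actual implementation. It assumes an ideal equidistant fisheye model (image radius proportional to the angle off the optical axis), which only approximates a real GoPro lens. The axis convention (x right, y up, z forward), the rotation order, the function names and the focal length in pixels per radian are all hypothetical.

```python
import numpy as np

def head_rotation(yaw, pitch, roll):
    """Rotation from headset frame to camera frame (angles in radians).

    Assumed convention: x right, y up, z forward. Positive yaw looks
    right, positive pitch looks up, roll turns about the view axis.
    """
    c_yaw, s_yaw = np.cos(yaw), np.sin(yaw)
    c_pit, s_pit = np.cos(pitch), np.sin(pitch)
    c_rol, s_rol = np.cos(roll), np.sin(roll)
    r_yaw = np.array([[c_yaw, 0.0, s_yaw], [0.0, 1.0, 0.0], [-s_yaw, 0.0, c_yaw]])
    r_pitch = np.array([[1.0, 0.0, 0.0], [0.0, c_pit, s_pit], [0.0, -s_pit, c_pit]])
    r_roll = np.array([[c_rol, -s_rol, 0.0], [s_rol, c_rol, 0.0], [0.0, 0.0, 1.0]])
    return r_yaw @ r_pitch @ r_roll

def source_pixel(ray_hmd, yaw, pitch, roll, focal_px, center):
    """Camera-image pixel seen along a headset-frame ray.

    Uses an equidistant fisheye projection: r = focal_px * theta,
    where theta is the angle between the ray and the optical axis.
    Image y grows downward, so 'up' in the scene lowers the v value.
    """
    v = head_rotation(yaw, pitch, roll) @ np.asarray(ray_hmd, dtype=float)
    rho = np.hypot(v[0], v[1])
    if rho == 0.0:
        return (float(center[0]), float(center[1]))  # ray on the optical axis
    theta = np.arctan2(rho, v[2])
    r = focal_px * theta
    return (center[0] + r * v[0] / rho, center[1] - r * v[1] / rho)

# Hypothetical camera: 300 px per radian, image centre at (640, 360).
print(source_pixel((0.0, 0.0, 1.0), np.radians(30.0), 0.0, 0.0, 300.0, (640.0, 360.0)))
```

Because only this lookup depends on the head orientation, it can be re-evaluated with the freshest tracker reading every display frame, independently of how old the video frame is. That is what keeps the orientational lag separate from the sky-to-eye video lag.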

The video link was a 1 W 2.4 GHz Tx/Rx pair from HK, the operation of which improved significantly after replacing the original whip antennas with very rough handmade cloverleaf ones. The tutorial here was crucially important. My co-worker even measured them and found them very, very good (she said, and she's a pro). At the receiving end there was a Targa USB video grabber (from the local Lidl, EUR 20) connected to a Lenovo X220 laptop.

The laptop proved to be a slight bottleneck in the system as it has no real dedicated graphics hardware. As mentioned, the grabbed video needs to be undistorted from the GoPro signal and rendered to the Oculus view(s), then some 2D and 3D diagnostic graphics are added and finally the Oculus image is antidistorted thrice for geometric and chromatic perfection. The shaders provided by Oculus do not work correctly on the X220 (a lot of RGB sprinkles topped the image), so the distortion was done by warping the rendering geometry instead. If the DirectX9/hardware is behaving at all decently, this should be one of the fastest ways, too. In practice, the laptop fan goes crazy as soon as the program starts, and occasionally the frame rate drops to 30 fps.
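
As an illustration of distortion by geometry warping, the sketch below builds a tessellated grid whose vertices are pulled inward by the inverse of a radial polynomial, once per colour channel. Drawing the flat rendered image on such a grid three times, each pass writing only one colour channel, would give a geometric and chromatic predistortion without a per-pixel shader. This is one possible reading of the approach, not the original code. The polynomial coefficients and per-channel scales are uncalibrated placeholders, and all names are hypothetical.

```python
import numpy as np

# Placeholder values for illustration only, not calibrated for any headset.
K = (1.0, 0.22, 0.24)                                # radial polynomial
CHANNEL_SCALE = {"r": 0.996, "g": 1.0, "b": 1.014}   # chromatic tweak

def distort_radius(r, k=K):
    """Shader-style mapping: screen radius -> source-image radius."""
    r2 = r * r
    return r * (k[0] + k[1] * r2 + k[2] * r2 * r2)

def undistort_radius(r_src, k=K, iterations=20):
    """Invert distort_radius with Newton iteration (monotonic for k > 0)."""
    r_src = np.asarray(r_src, dtype=float)
    r = r_src.copy()
    for _ in range(iterations):
        r2 = r * r
        f = r * (k[0] + k[1] * r2 + k[2] * r2 * r2) - r_src
        df = k[0] + 3.0 * k[1] * r2 + 5.0 * k[2] * r2 * r2
        r = r - f / df
    return r

def warped_grid(n=33, channel="g"):
    """Screen positions for an n x n mesh covering the source image.

    Texture coordinates stay on the regular grid; only the vertex
    positions move, so the warp is carried by the geometry itself.
    """
    axis = np.linspace(-1.0, 1.0, n)
    x, y = np.meshgrid(axis, axis)
    r_src = np.hypot(x, y)
    r_out = undistort_radius(r_src / CHANNEL_SCALE[channel])
    safe_r = np.where(r_src > 0.0, r_src, 1.0)        # avoid 0/0 at the centre
    scale = np.where(r_src > 0.0, r_out / safe_r, 1.0)
    return np.stack([x * scale, y * scale], axis=-1)

green = warped_grid(33, "g")
print(green[0, 0], green[16, 16])  # a corner vertex and the centre vertex
```

The grid is computed once at start-up, so the per-frame cost is just drawing a few thousand textured triangles per pass, which suits modest integrated graphics.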

Karri Palovuori
