So here is the problem:

My PyBoard real-time clock runs slower than an ideal clock. Since I'm doing data logging, my idea is this:

- Sync the PyBoard and my computer before the logging period.

- After logging is done (in a month), compare the PyBoard time with the PC time.

- From the difference and the duration of logging, calculate a "lag" coefficient.
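The three steps above can be sketched in Python as post-processing on the PC. This is a minimal sketch assuming both clocks are read as elapsed seconds since the sync; the function names and the 30-day numbers are hypothetical, chosen only for illustration.

```python
# Minimal sketch: one-off lag correction from a sync at the start and a
# comparison at the end of the logging period. All numbers are hypothetical.

def lag_coefficient(pyb_elapsed_s, pc_elapsed_s):
    """Ratio of true (PC) elapsed time to PyBoard elapsed time since the sync."""
    return pc_elapsed_s / pyb_elapsed_s


def corrected_elapsed(pyb_elapsed_s, k):
    """Estimate the true elapsed time for a log entry stamped by the PyBoard."""
    return pyb_elapsed_s * k


# Hypothetical end-of-month comparison: 30 days of PC time, while the
# PyBoard clock has fallen 900 s (15 min) behind.
pc_total = 30 * 24 * 3600          # 2,592,000 s
pyb_total = pc_total - 900         # 2,591,100 s
k = lag_coefficient(pyb_total, pc_total)

# A log entry stamped halfway through the PyBoard's elapsed time maps back
# to halfway through the true elapsed time.
entry_true = corrected_elapsed(pyb_total / 2, k)
print(f"k = {k:.9f}, corrected entry time = {entry_true:.1f} s")
```

A single coefficient like this only holds if the PyBoard lags at a constant rate over the whole month.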

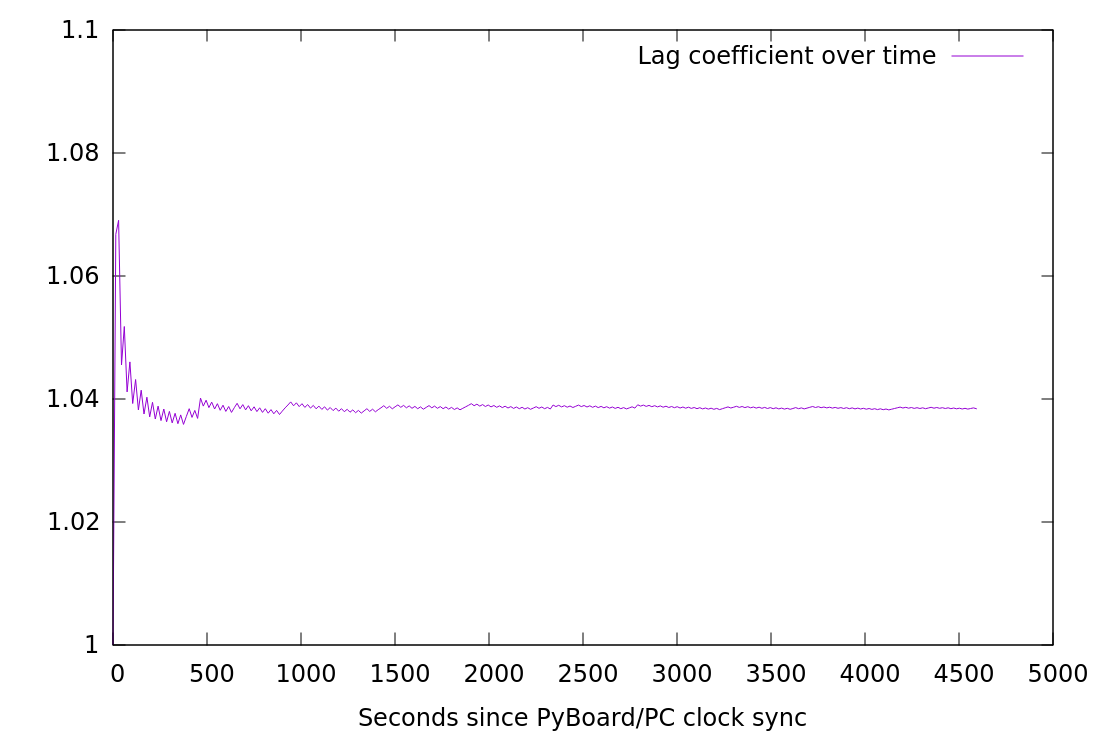

That would work nicely if the PyBoard clock lagged consistently at the same rate. However, that's not the case. I've measured the "lag" coefficient over a period of 1.5 hours since syncing the PyBoard and PC clocks, and here's what I got:

So it's not constant. At first sight it appears to converge to some average value. However, the duration of my measurement is also increasing, so the more important question is: does the absolute error (the difference between the correct clock value and the value predicted from the lagging PyBoard time multiplied by the average lag coefficient) converge? At the end of the day, I would like my measurements to have a time resolution of 5 min or better; 1 min would be great.
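One way to see why the running coefficient can appear to converge even when the absolute error does not: write the PC elapsed time as a constant-rate part plus an error term. The symbols below ($T_{\mathrm{PC}}$, $T_{\mathrm{PyB}}$, $k^\ast$, $\varepsilon$) are introduced here for illustration, with $k^\ast$ an assumed constant "true" lag coefficient.

$$
T_{\mathrm{PC}}(t) = k^\ast\,T_{\mathrm{PyB}}(t) + \varepsilon(t)
\quad\Longrightarrow\quad
k(t) = \frac{T_{\mathrm{PC}}(t)}{T_{\mathrm{PyB}}(t)} = k^\ast + \frac{\varepsilon(t)}{T_{\mathrm{PyB}}(t)}
$$

Even if $|\varepsilon(t)|$ stays around a fixed size, the second term shrinks roughly like $1/t$ as the measurement gets longer. So a converging $k(t)$ on its own says little about whether $\varepsilon(t)$, the absolute error that matters for a 1 to 5 min target, stays bounded.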

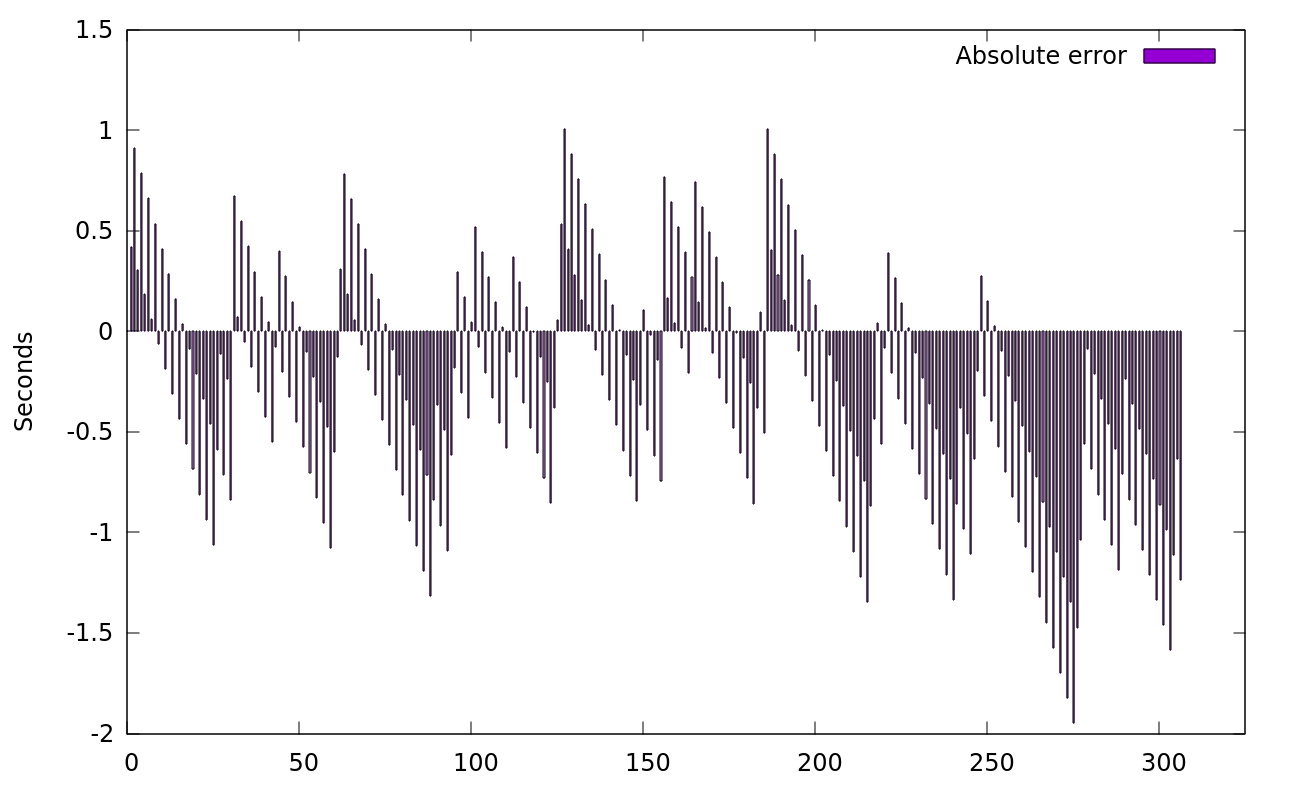

Let's take a look:

(Here the x-axis is the number of measurements taken. Multiply it by 15, the interval in seconds between measurements, to get the time since clock synchronization, as in the previous plot.)

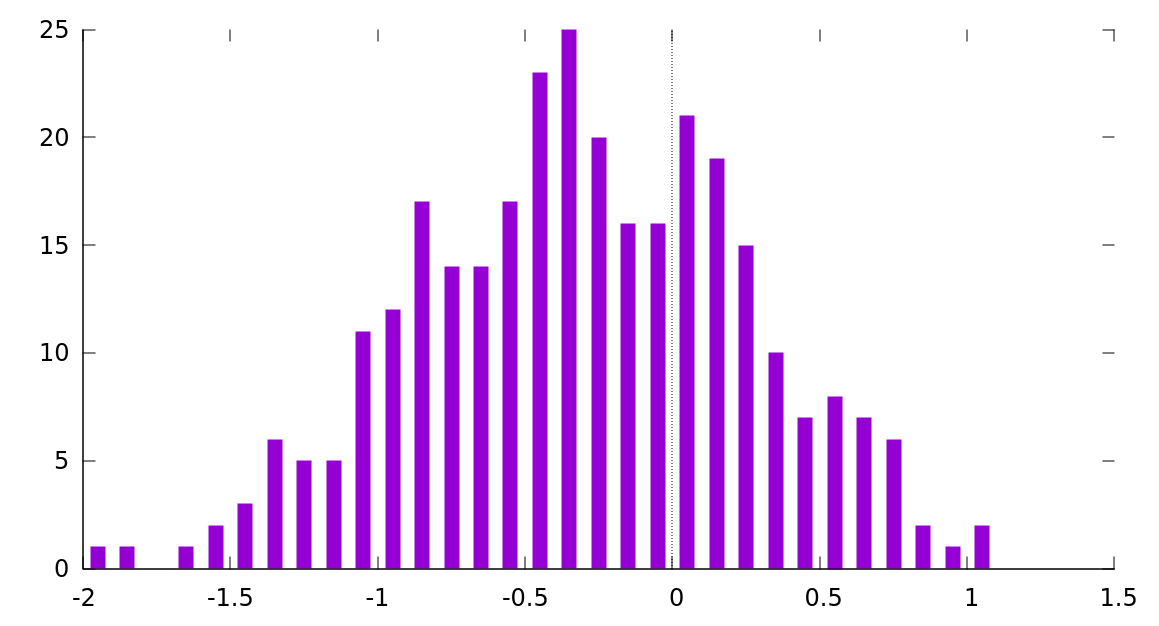

Below is the distribution of residuals (errors between the PC time and the time predicted from the average lag coefficient and the PyBoard time):

This distribution appears to be normal. One thing I don't understand, though, is that the mean is -0.3. Shouldn't the mean of all errors be zero?
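One possible explanation for the nonzero mean, assuming the average lag coefficient is the mean of the per-sample ratios (PC elapsed / PyBoard elapsed): nothing forces residuals around that estimate to average zero. An ordinary least-squares fit with an intercept does guarantee zero-mean residuals; its slope plays the role of the lag coefficient, and the intercept absorbs any offset left over from the sync. This is a different estimator from averaging ratios. The sketch uses synthetic data (a hypothetical 0.5 s sync offset, a lag coefficient of 1.0005, and a small deterministic wiggle standing in for noise), not the measurements plotted above.

```python
import math

# Synthetic data: one sample every 15 s for 1.5 h (360 samples), in seconds.
# pyb = PyBoard elapsed time, pc = PC elapsed time.
# Hypothetical: 0.5 s offset left over from the sync, lag coefficient 1.0005.
pyb = [15.0 * i for i in range(1, 361)]
pc = [0.5 + 1.0005 * x + 0.3 * math.sin(i) for i, x in enumerate(pyb, start=1)]


def mean(values):
    return sum(values) / len(values)


def fit_line(xs, ys):
    """Ordinary least squares ys ~ a + b*xs; returns (intercept a, slope b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


# Approach 1: average of per-sample ratios, prediction pc = k_avg * pyb.
k_avg = mean([y / x for x, y in zip(pyb, pc)])
res_ratio = [y - k_avg * x for x, y in zip(pyb, pc)]

# Approach 2: least-squares line with intercept, prediction pc = a + b * pyb.
a, b = fit_line(pyb, pc)
res_ols = [y - (a + b * x) for x, y in zip(pyb, pc)]

print(f"mean of ratios: k = {k_avg:.7f}, mean residual = {mean(res_ratio):+.3f} s")
print(f"least squares:  b = {b:.7f}, a = {a:+.3f} s, mean residual = {mean(res_ols):+.2e} s")
```

In this synthetic case a positive sync offset pushes the mean-of-ratios residuals negative, because early samples (small PyBoard elapsed time) inflate the averaged ratio. Whether that is what produces the -0.3 in the real data can't be told from the plots alone.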

Please let me know if you can help me with the following:

- How much test data is enough to conclude that my absolute error is not growing (its mean stays around 0)? Running this test for a whole month would be good enough, but that's a bit too long.

- What is the right way to calculate the precision of my time resolution from the information above?
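For both questions, one standard approach is the prediction interval of a least-squares line. This is a sketch under stated assumptions: the readings are fitted as PC elapsed ≈ a + b × PyBoard elapsed, the residuals are independent and roughly normal, and the drift rate stays constant over the month (temperature changes, for example, would break that). The standard error of the predicted PC time at PyBoard time `x0` is `s * sqrt(1 + 1/n + (x0 - mean_x)^2 / Sxx)`, where `s` is the residual standard deviation and `Sxx` the spread of the sample times. The last term is the extrapolation penalty: when the test is much shorter than the month, it shrinks roughly as (test length)^(-3/2). One reasonable stopping rule is to keep testing until about 2 sigma at one month falls below the target (60 s or 300 s). The function names and the synthetic data are hypothetical.

```python
import math


def fit_stats(xs, ys):
    """OLS fit ys ~ a + b*xs; returns (a, b, s, n, mean_x, sxx)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    # Residual standard deviation with n - 2 degrees of freedom.
    s = math.sqrt(sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / (n - 2))
    return a, b, s, n, mx, sxx


def prediction_sigma(xs, ys, x0):
    """Standard error of the predicted PC time at PyBoard elapsed time x0."""
    _, _, s, n, mx, sxx = fit_stats(xs, ys)
    return s * math.sqrt(1 + 1 / n + (x0 - mx) ** 2 / sxx)


def synthetic_test(hours, interval_s=15.0):
    """Hypothetical test run standing in for real (PyBoard, PC) samples."""
    count = int(hours * 3600 / interval_s)
    xs = [interval_s * i for i in range(1, count + 1)]
    ys = [0.5 + 1.0005 * x + 0.3 * math.sin(i) for i, x in enumerate(xs, start=1)]
    return xs, ys


month_s = 30 * 24 * 3600
for hours in (1.5, 6.0, 24.0):
    xs, ys = synthetic_test(hours)
    two_sigma = 2 * prediction_sigma(xs, ys, month_s)
    print(f"{hours:5.1f} h test -> about +/-{two_sigma:.1f} s after one month (2 sigma)")
```

With real data, replace `synthetic_test` with the logged 15 s samples. Within the test window itself the resolution is roughly `2 * s`; the month-long figure is dominated by how well the slope (the lag coefficient) is pinned down.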

Max Kviatkouski