The famous trtexec program was in /usr/src/tensorrt/bin. It can supposedly convert a Caffe model directly to a TensorRT engine.

```shell
./trtexec --deploy=/root/openpose/models/pose/body_25/pose_deploy.prototxt --model=/root/openpose/models/pose/body_25/pose_iter_584000.caffemodel --fp16 --output=body25.engine
```

That just ends in a crash.

```
Error[3]: (Unnamed Layer* 22) [Constant]:constant weights has count 512 but 2 was expected
trtexec: ./parserHelper.h:74: nvinfer1::Dims3 parserhelper::getCHW(const Dims&): Assertion `d.nbDims >= 3' failed.
Aborted (core dumped)
```

NvCaffeParser.h says TensorRT is dropping support for Caffe, & the Caffe converter doesn't support dynamic input sizes anyway. The remaining route is to go through ONNX.

The command for converting from ONNX to TensorRT is:

```shell
/usr/src/tensorrt/bin/trtexec --onnx=body25_fixed.onnx --fp16 --saveEngine=body25.engine
```

Next, the goog turned up onnx-graphsurgeon, a tool for amending ONNX graphs without retraining.

https://github.com/NVIDIA/TensorRT/tree/main/tools/onnx-graphsurgeon

It's a Python library, so you have to use it inside a Python program:

```python
# fixonnx.py
# from https://github.com/NVIDIA/TensorRT/issues/1677
import onnx
import onnx_graphsurgeon as gs
import numpy as np

print("loading model")
graph = gs.import_onnx(onnx.load("body25.onnx"))

# Mark the batch dimension of the input as dynamic
tensors = graph.tensors()
tensors["input"].shape[0] = gs.Tensor.DYNAMIC

for node in graph.nodes:
    print("name=%s op=%s inputs=%s outputs=%s" % (node.name, node.op, str(node.inputs), str(node.outputs)))
    if node.op == "PRelu":
        # Make the slope tensor broadcastable: [C] -> [1, C, 1, 1]
        print("Fixing")
        slope_tensor = node.inputs[1]
        slope_tensor.values = np.expand_dims(slope_tensor.values, axis=(0, 2, 3))

onnx.save(gs.export_onnx(graph), "body25_fixed.onnx")
```

```shell
time python3 fixonnx.py
```

This takes 9 minutes.

The print statement in the script dumps the original offending operator:

```
name=prelu4_2 op=PRelu
inputs=[
Variable (conv4_2): (shape=[1, 512, 2, 2], dtype=float32),
Constant (prelu4_2_slope): (shape=[512], dtype=<class 'numpy.float32'>)
LazyValues (shape=[512], dtype=float32)]
outputs=[Variable (prelu4_2): (shape=[1, 512, 2, 2], dtype=float32)]
```

Then it dumped the fixed operator:

```
name=prelu4_2 op=PRelu
inputs=[
Variable (conv4_2): (shape=[1, 512, 2, 2], dtype=float32),
Constant (prelu4_2_slope): (shape=[1, 512, 1, 1], dtype=<class 'numpy.float32'>)
LazyValues (shape=[1, 512, 1, 1], dtype=float32)]
outputs=[Variable (prelu4_2): (shape=[1, 512, 2, 2], dtype=float32)]
```

This allowed trtexec to successfully convert it to a TensorRT engine.
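Why the [512] slope fails: the ONNX PRelu operator uses unidirectional, numpy-style broadcasting, which lines shapes up starting from the trailing axis. A [512] slope therefore gets matched against the width axis (2 at conv4_2) instead of the channel axis, which would explain the "count 512 but 2 was expected" message. A minimal numpy sketch of the two shapes, with random data standing in for the real weights:

```python
import numpy as np

def prelu(x, slope):
    """Reference PRelu: x where x >= 0, slope * x elsewhere (numpy broadcasting)."""
    return np.where(x >= 0, x, slope * x)

x = np.random.randn(1, 512, 2, 2).astype(np.float32)  # shape of conv4_2's output
slope = np.random.rand(512).astype(np.float32)         # original prelu4_2_slope shape

try:
    prelu(x, slope)
    broadcast_ok = True
except ValueError:
    # Trailing axes are compared first: 512 (slope) vs 2 (width) -> incompatible
    broadcast_ok = False

# The graphsurgeon fix: [512] -> [1, 512, 1, 1], so 512 lines up with the channel axis
fixed_slope = np.expand_dims(slope, axis=(0, 2, 3))
y = prelu(x, fixed_slope)

print(broadcast_ok, fixed_slope.shape, y.shape)
# prints: False (1, 512, 1, 1) (1, 512, 2, 2)
```

With the [1, 512, 1, 1] shape, each channel's 2x2 plane is scaled by its own slope value, which is the per-channel behavior PReLU is supposed to have.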

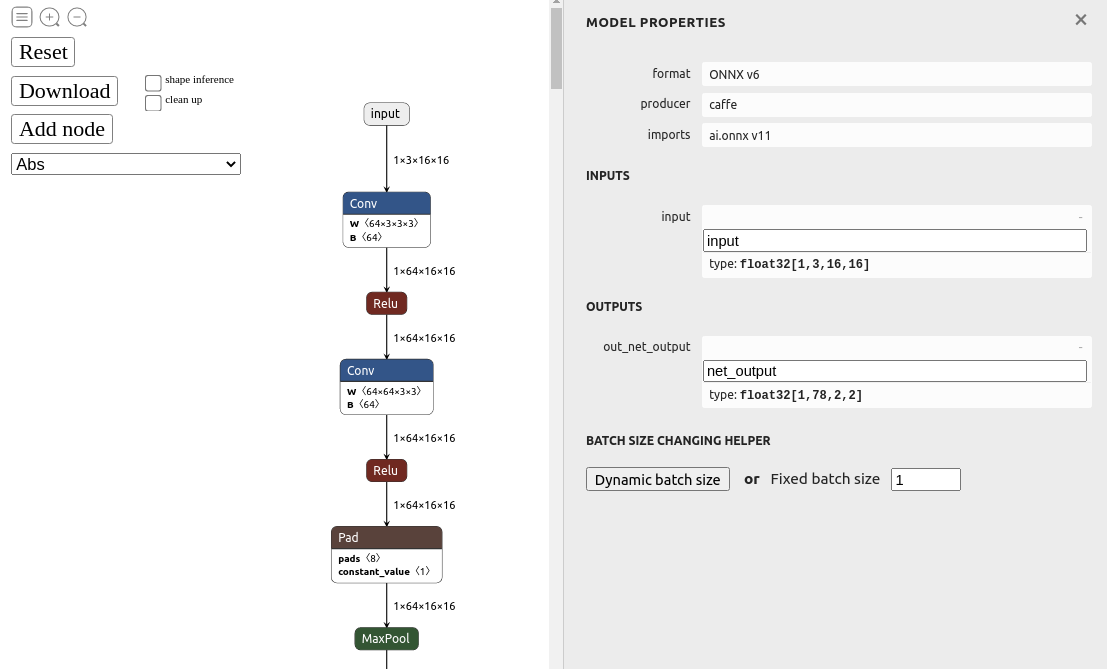

The inputs for body_25 are different from resnet18's. The exported body_25 has a 16x16 input image, and the 16x16 propagates many layers in.

```
name=conv1_1 op=Conv
inputs=[
Variable (input): (shape=[-1, 3, 16, 16], dtype=float32),
Constant (conv1_1_W): (shape=[64, 3, 3, 3], dtype=<class 'numpy.float32'>)
LazyValues (shape=[64, 3, 3, 3], dtype=float32),
Constant (conv1_1_b): (shape=[64], dtype=<class 'numpy.float32'>)
LazyValues (shape=[64], dtype=float32)]
outputs=[Variable (conv1_1): (shape=[1, 64, 16, 16], dtype=float32)]
```

The resnet18 had a 224x224 input image:

```
name=Conv_0 op=Conv
inputs=[
Variable (input_0): (shape=[1, 3, 224, 224], dtype=float32),
Constant (266): (shape=[64, 3, 7, 7], dtype=<class 'numpy.float32'>)
LazyValues (shape=[64, 3, 7, 7], dtype=float32),
Constant (267): (shape=[64], dtype=<class 'numpy.float32'>)
LazyValues (shape=[64], dtype=float32)]
outputs=[Variable (265): (shape=None, dtype=None)]
```
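To see how body_25's 16x16 input ends up as the 2x2 seen at conv4_2 in the PRelu dump, the usual output-size formula can be walked through the network's front end. A small sketch, assuming a VGG-19 style front end with three 2x2 stride-2 pools before conv4_2 and size-preserving 3x3 pad-1 convolutions; the layer list is a hypothetical reconstruction, not read from the prototxt:

```python
import math

def out_size(size, kernel, stride=1, pad=0, ceil_mode=False):
    """Spatial output size of a conv/pool layer along one axis."""
    span = size + 2 * pad - kernel
    steps = math.ceil(span / stride) if ceil_mode else span // stride
    return steps + 1

# Hypothetical VGG-19 style front end up to conv4_2:
# ("conv", 3, 1, 1) keeps the size, ("pool", 2, 2, 0) halves it.
FRONT_END = (
    [("conv", 3, 1, 1)] * 2 + [("pool", 2, 2, 0)]    # conv1_x, pool1
    + [("conv", 3, 1, 1)] * 2 + [("pool", 2, 2, 0)]  # conv2_x, pool2
    + [("conv", 3, 1, 1)] * 4 + [("pool", 2, 2, 0)]  # conv3_x, pool3
    + [("conv", 3, 1, 1)] * 2                        # conv4_1, conv4_2
)

def size_at_conv4_2(input_size):
    size = input_size
    for kind, kernel, stride, pad in FRONT_END:
        # Caffe rounds pooling output up; for even sizes this matches floor
        size = out_size(size, kernel, stride, pad, ceil_mode=(kind == "pool"))
    return size

for s in (16, 256):
    print(s, "->", size_at_conv4_2(s))
# prints:
# 16 -> 2
# 256 -> 32
```

Under these assumptions the baked-in 16x16 shrinks to 2x2 by conv4_2, which matches the [1, 512, 2, 2] shape in the dump, while a 256x256 frame would give 32x32 there.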

A note says the input dimensions have to be overridden at runtime. Caffe had a reshape function for doing this. The closest function in TensorRT is nvinfer1::IExecutionContext::setBindingDimensions.

Calling nvinfer1::IExecutionContext::setBindingDimensions fails with:

```
[executionContext.cpp::setBindingDimensions::944] Error Code 3: API Usage Error (Parameter check failed at: runtime/api/executionContext.cpp::setBindingDimensions::944, condition: profileMaxDims.d[i] >= dimensions.d[i]. Supplied binding dimension [1,3,256,256] for bindings[0] exceed min ~ max range at index 2, maximum dimension in profile is 16, minimum dimension in profile is 16, but supplied dimension is 256.
```
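The error is a per-dimension range check: every supplied dimension has to fall inside the [min, max] of the optimization profile stored in the engine, and this engine's profile has min = max = 16 for height. A pure-Python sketch of that condition, using a hypothetical helper that mimics the check quoted in the message (not TensorRT's actual code), and assuming the batch dimension was also pinned to 1 when the engine was built:

```python
def check_binding_dims(dims, profile_min, profile_max):
    """Return None if dims fit the profile, else a message naming the first bad axis."""
    if not (len(dims) == len(profile_min) == len(profile_max)):
        return "rank mismatch"
    for i, (d, lo, hi) in enumerate(zip(dims, profile_min, profile_max)):
        if not lo <= d <= hi:
            return f"dimension {d} at index {i} outside profile range [{lo}, {hi}]"
    return None

# Assumed profile of the engine built from body25_fixed.onnx: min = max = 1x3x16x16
profile = (1, 3, 16, 16)
print(check_binding_dims((1, 3, 256, 256), profile, profile))
print(check_binding_dims((1, 3, 16, 16), profile, profile))
# prints:
# dimension 256 at index 2 outside profile range [16, 16]
# None
```

So setBindingDimensions can only move within a range chosen when the engine is built; it can't widen it after the fact.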

One hit was a browser-based ONNX editor:

https://github.com/ZhangGe6/onnx-modifier

This doesn't show any min/max field or allow changing the dimensions. It only allows renaming layers.

Min/opt/max options appeared in a usage of buildSerializedNetwork in the sampleDynamicReshape sample. That sample mentions adding an input & a resize layer. It's believed that body_25 is supposed to act as a kernel operating on a larger input, rather than operating on a scaled-down frame.

https://github.com/NVIDIA/TensorRT/blob/main/samples/sampleDynamicReshape/README.md

Min/opt/max options appeared again in a usage of trtexec

https://github.com/NVIDIA/TensorRT/issues/1581

These options merely end in:

```
[03/01/2023-11:19:21] [W] [TRT] DLA requests all profiles have same min, max, and opt value. All dla layers are falling back to GPU
[03/01/2023-11:19:21] [E] Error[4]: [network.cpp::validate::2959] Error Code 4: Internal Error (input: for dimension number 2 in profile 0 does not match network definition (got min=16, opt=256, max=256), expected min=opt=max=16).)
```
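This time the check fails earlier, at build time: for any axis the network definition fixes (anything other than -1), the profile has to use exactly that value for min, opt and max. The ONNX input is still [-1, 3, 16, 16], consistent with fixonnx.py only marking shape[0] as dynamic, so a 16/256/256 profile on the height axis is rejected. A sketch of that rule, plus a helper that formats the name:AxBxCxD shape strings taken by trtexec's --minShapes/--optShapes/--maxShapes flags; both helpers are hypothetical, and the shapes are assumed from the numbers in the error rather than taken from the actual command:

```python
def shape_arg(name, dims):
    """Format a trtexec shape spec such as input:1x3x16x16."""
    return f"{name}:" + "x".join(str(d) for d in dims)

def validate_profile(network_dims, pmin, popt, pmax):
    """List errors for axes the network fixes but the profile varies."""
    errors = []
    for i, fixed in enumerate(network_dims):
        if fixed == -1:
            # Dynamic axis: any min <= opt <= max is acceptable
            if not pmin[i] <= popt[i] <= pmax[i]:
                errors.append(f"dimension {i}: need min <= opt <= max")
            continue
        if (pmin[i], popt[i], pmax[i]) != (fixed, fixed, fixed):
            errors.append(
                f"dimension {i}: got min={pmin[i]}, opt={popt[i]}, max={pmax[i]}; "
                f"expected min=opt=max={fixed}"
            )
    return errors  # TensorRT reports only the first; this lists all of them

network = (-1, 3, 16, 16)  # input shape from the graphsurgeon dump
pmin, popt, pmax = (1, 3, 16, 16), (1, 3, 256, 256), (1, 3, 256, 256)
for flag, dims in (("--minShapes", pmin), ("--optShapes", popt), ("--maxShapes", pmax)):
    print(f"{flag}={shape_arg('input', dims)}")
for err in validate_profile(network, pmin, popt, pmax):
    print(err)
```

Both the height and width axes are flagged, since the network definition pins each of them to 16.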

No tool has been found which can set min/max dimensions. Dynamic input size seems to be another caffe feature which was abandoned as usage of neural networks evolved.

lion mclionhead
