Hey guys,

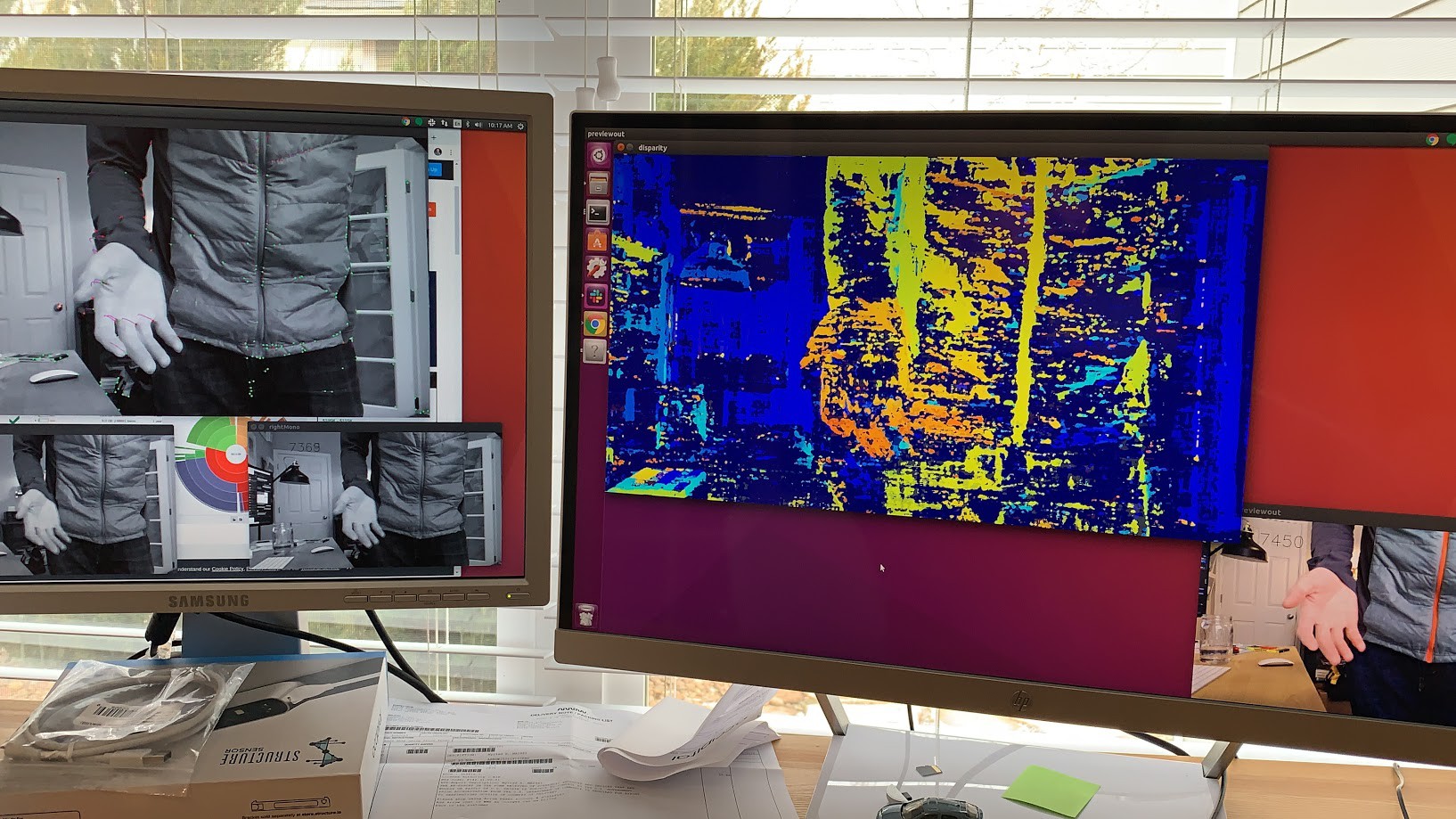

So we got hardware depth and video tracking working. The depth isn't calibrated yet, which is why it doesn't look so great: it's using an identity 3x3 homography matrix instead of a calibrated one. But it's working! (Caveat: it's still buggy and crashes on startup 9 times out of 10, but the 1 in 10 is so satisfying!)
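For anyone wondering what the identity (unity) homography means in practice, here's a rough sketch in plain Python. This is not our firmware code; `apply_homography` and the example matrices are made up for illustration, and it assumes the homography is used to remap pixel coordinates of one camera image before stereo matching. With the identity matrix every pixel maps to itself, so no correction happens at all, which is why the uncalibrated depth looks rough.

```python
# Illustrative only: hypothetical helper, not the actual Myriad X pipeline.

# Identity ("unity") 3x3 homography: leaves every pixel where it is.
IDENTITY_H = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

# A made-up example of a non-identity homography: shift 5 px to the right.
SHIFT_H = [
    [1.0, 0.0, 5.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]


def apply_homography(h, x, y):
    """Map pixel (x, y) through the 3x3 homography h."""
    # Multiply h by the homogeneous point (x, y, 1).
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    # Divide by w to get back to ordinary pixel coordinates.
    return xh / w, yh / w


if __name__ == "__main__":
    print(apply_homography(IDENTITY_H, 320.0, 240.0))
    print(apply_homography(SHIFT_H, 320.0, 240.0))
```

A calibrated setup would swap the identity matrix for one computed from the actual camera geometry.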

But to reiterate, all the computation shown in the video (depth calculation and feature tracking) is being done on the Myriad X. The host is doing nothing other than displaying the data that the Myriad X is streaming, and even that is optional.

The nice part is the Myriad X doesn't even get warm doing this. And that's with zero heatsink. Just the chip exposed to ambient air.

And for more info as to our end goals, check out:

aipi.io - a Raspberry Pi depth vision + AI carrier board, which is itself a product we thought would be useful to the world, and is an internal stepping stone to:

commuteguardian.com - the AI bike light to save lives

Brandon
