-

Last Chance to get 40% off OAK-D-Lite

10/15/2021 at 19:53 • 0 comments

Hi Everyone!

Our KickStarter campaign for OAK-D-Lite ends today! This is your last chance to buy OAK-D-Lite at 40% off of MSRP.

https://www.kickstarter.com/projects/opencv/opencv-ai-kit-oak-depth-camera-4k-cv-edge-object-detection

Things you can do with OAK-D-Lite:

Enable Kids to Learn Programming and Robotics By Doing

Provide NICU-Level Care to Any Baby, In Any Country

Make Food Production Safer, More Efficient - and Less Harmful to the Environment

Make Trains and Heavy Machinery Self-Checking and Safer

Make Drones Autonomous Life-Saving Search and Rescue Systems

Get ESPN-Level Analytics Anywhere for Any Game

VR Laser Tag for the Cost of a Breakfast in Silicon Valley

Don't miss your last chance to get all this, for just $89!

UPDATE: Hackaday.com just covered us. Thanks, Hackaday and Donald Papp!

https://hackaday.com/2021/10/15/oak-d-depth-sensing-ai-camera-gets-smaller-and-lighter/

-

OAK-D Lite KickStarter Launching Tomorrow!

09/14/2021 at 20:49 • 0 comments

Hi everyone,

We're super excited about this one, as it's the first time that this technology has been _really_ accessible. We'll be doing a $74 Super Early Bird for those who _really_ want this and can't break the bank. :-)

- Absolutely tiny

- Onboard 4k/30FPS video encoding

- 300,000 depth points at over 200FPS

- Real-time neural inference - including semantic depth

- Real-time 3D object position and 3D object pose.

- Capability to run Python3.9 scripts on-camera

- Built in edge detection, feature tracking, warp/de-warp, etc.

We can't wait to see what you all make with it!

-

Our OAK-D-Lite KickStarter is Launching Soon

09/06/2021 at 19:49 • 0 comments

The TLDR is that OAK-D was a hit. Folks love the capabilities, and it makes solving all sorts of problems WAY easier. It is the cheat mode for interacting with the world that we thought it would be.

But... it is too expensive for many (most?) applications.

So we started from the ground up to make something that had all the power of OAK-D, but was low-enough cost that folks no longer had to say "Man, I sure wish I could use that... but it's just too expensive".

https://www.kickstarter.com/projects/opencv/opencv-ai-kit-oak-depth-camera-4k-cv-edge-object-detection

We can't say how much it will cost just yet, but we can say that the first 74 backers will get a special deal. ;-)

Oh, and other applications were blocked by the size and weight of OAK-D, so it's also a bunch smaller and lighter (as the name suggests).

Teaser below:

![]()

My wife found a golf ball that said gold. So we used it as a reference, pointed at the gift box for OAK-D-Lite.

The gift box, with OAK-D-Lite tucked nicely inside, is actually smaller than OAK-D by itself:

![]()

And here are the gift boxes for OAK-D-Lite (front) compared to OAK-D (back):

![]()

Cheers,

Brandon and the Luxonis/OpenCV teams

-

Bluebox Co-Pilot AI Just Launched Based on DepthAI!

07/09/2021 at 17:49 • 0 comments

-

DepthAI API 2.6.0 Release

07/06/2021 at 02:28 • 0 comments

- Added EdgeDetector node, using 3x3 HW Sobel filter

- Added support for SpatialCalculator on RGB-depth aligned frames. Note: the RGB ISP and depth frames must have the same output resolution (e.g. 1280x720), and a maximum frame width of 1920 pixels is supported

- Added bilateral filter on depth frame

- Added median filter after stereo LR-check

- Added runtime configuration for stereo node: confidence threshold, LR-check threshold, bilateral sigma, median filter

- Added calibration mesh support for fisheye lenses

- Bumped C++ standard to C++14

Python: https://github.com/luxonis/depthai-python/pull/302

C++: https://github.com/luxonis/depthai-core/pull/168#event-4980363587
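For readers unfamiliar with what a 3x3 Sobel filter computes, here is a minimal host-side sketch in plain Python. It is not the DepthAI EdgeDetector API and makes no claim about how the hardware block is implemented: the kernel coefficients are the classic Sobel pair, and the `|Gx| + |Gy|` magnitude, the clipping to 255, and the zeroed border are assumptions chosen for illustration. The function name `sobel_magnitude` is hypothetical.

```python
def sobel_magnitude(image):
    """Approximate edge strength of a 2D grayscale image (list of rows).

    Uses the classic 3x3 Sobel kernels and returns |Gx| + |Gy| clipped
    to 255. Border pixels are left at 0 (an illustrative choice).
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = image[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * p
                    gy += ky[dy][dx] * p
            out[y][x] = min(255, abs(gx) + abs(gy))
    return out


# A vertical step edge: dark on the left, bright on the right.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
for row in edges:
    print(row)
```

Only the two columns straddling the step respond; flat regions stay at zero, which is the behavior an edge filter is expected to show.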

-

POE Models, Fun Edge Filter Hardware Block

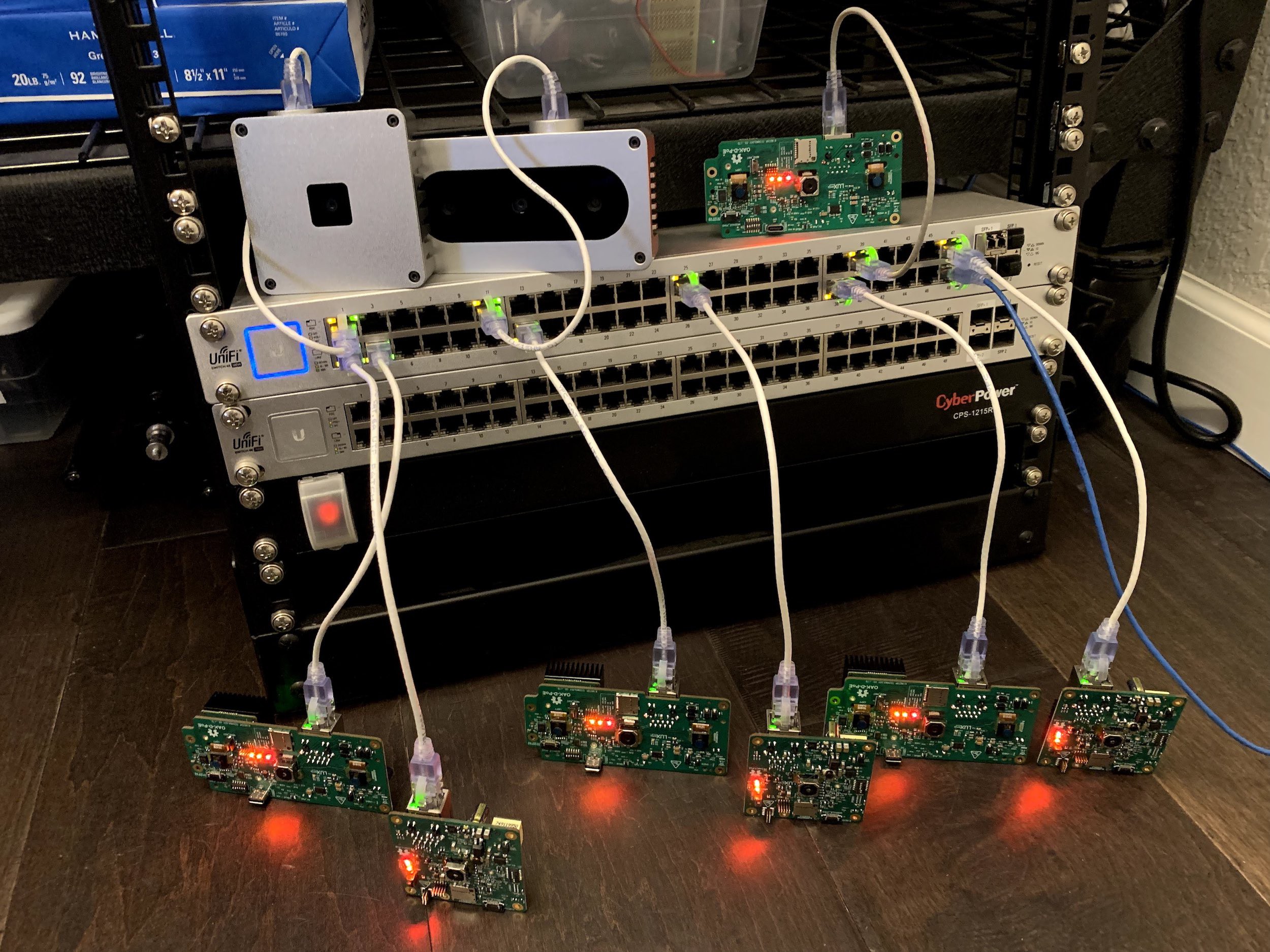

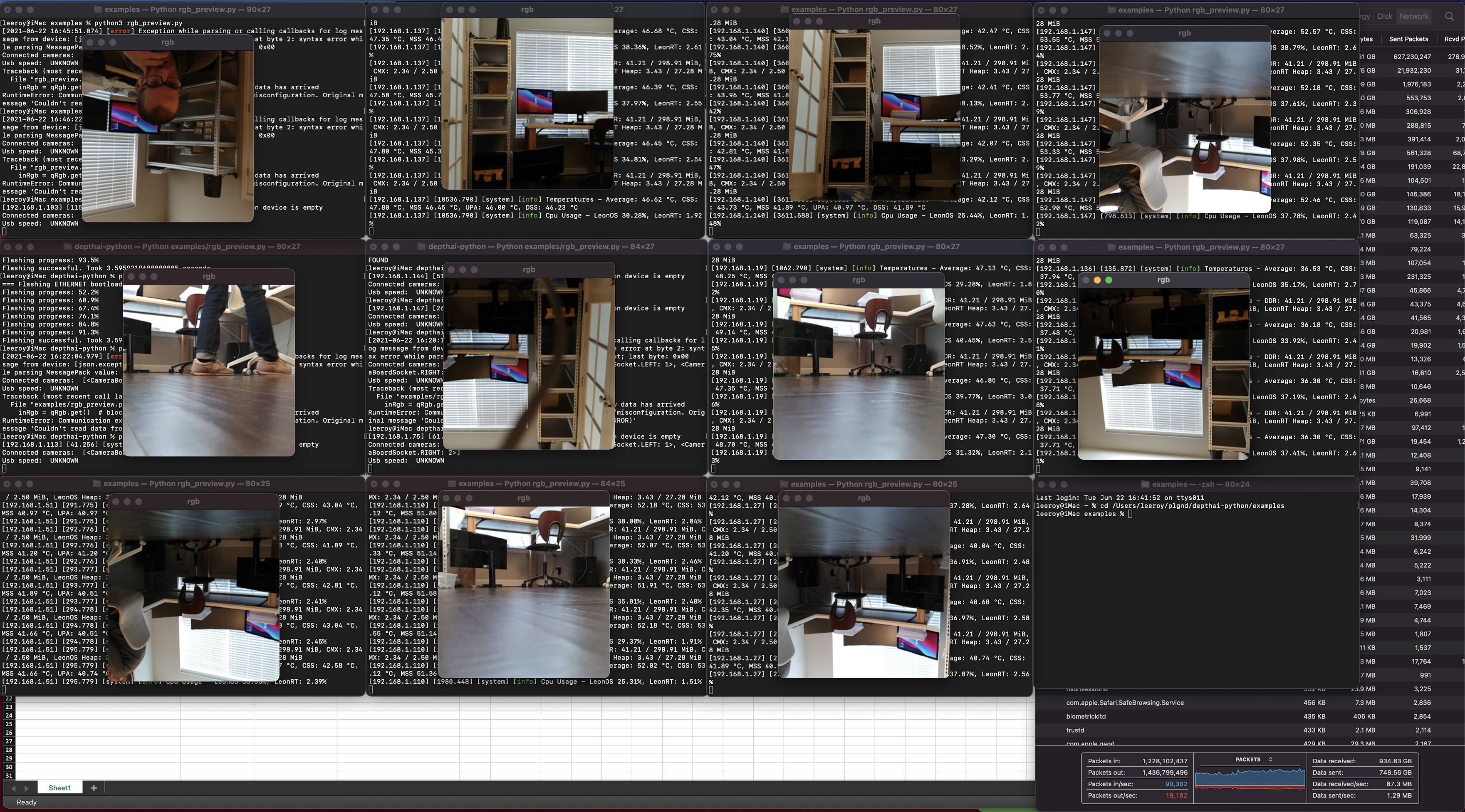

06/23/2021 at 15:48 • 0 comments

- We're about to release our POE variants of DepthAI.

- We have the first version of the Edge Filter hardware block running.

POE Models working:

![]()

![]()

Edge HW Filter:

Cool Community Projects:

- https://github.com/geaxgx/depthai_blazepose

- https://github.com/geaxgx/depthai_hand_tracker

- https://github.com/gespona/depthai-unity-plugin

- https://twitter.com/CorticTechnolo1/status/1379147719428558849?s=20

-

The Gen2 Version of Our API Is Out

03/02/2021 at 16:47 • 0 comments

Hi Everyone,

We just released Gen2.

First Gen2 release (2.0.0 and 2.0.0.0 for python bindings) is now online:

- Core: https://github.com/luxonis/depthai-core/releases/tag/v2.0.0

- Python: https://github.com/luxonis/depthai-python/releases/tag/v2.0.0.0

Core now supports `find_package(depthai)`, exposing the targets `depthai::core` and `depthai::opencv` (OpenCV support).

Python has docstrings built into the wheels, so `help()` should display reasonable information for each function.

Also, the documentation for Gen2 (API reference) includes both C++ and Python, fully documented with all available functions, classes, ...

The API reference is just an overview of everything - we are working on more written documentation for messages, nodes, ...

We also have our IP67 depthai POE prototypes in:

Design files are here: https://github.com/luxonis/depthai-hardware/tree/master/SJ2088POE_PoE_Board

And check out this sweet auto-following robot using DepthAI from Scott Horton:

Notes from him:

A bit of success using the OAK-D + Depthai ROS-2 sample with the ROS-2 Nav stack. The Nav stack is running the dynamic object following mode ('follow-point' behavior tree). Running on an RPI4 (the Intel camera and jetson are just along for the ride).

Join our Discord to see even more in action. It's really fun for the whole depthai team to see the things that are being actively made now.

We also have support for a huge 20MP, 1"-diagonal sensor coming:

![]()

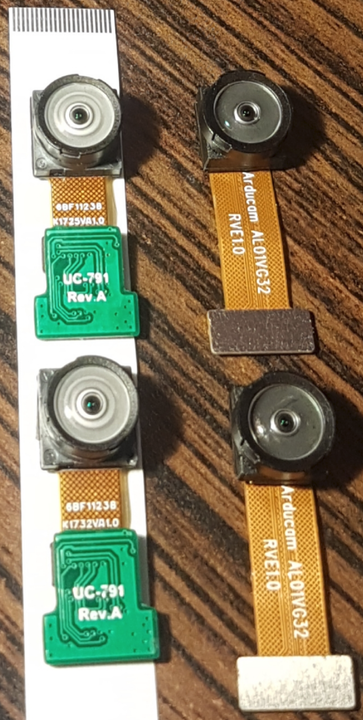

And wide angle support is now available via ArduCam as well. They made these specifically for DepthAI:

![]()

https://github.com/luxonis/depthai-hardware/issues/15

There's a TON more that we're not covering here (out of time), but join our Discord to learn more and discuss:

Cheers,

Brandon and the depthai team

-

A Whole Bunch of New DepthAI Capabilities

12/18/2020 at 04:34 • 0 comments

Hi DepthAI Backers!

Thanks again for all the continued support and interest in the platform.

So we've been hard at work adding a TON of DepthAI functionalities. You can track a lot of the progress in the following Github projects:

- Gen1 Feature-Complete: https://github.com/orgs/luxonis/projects/3

- Gen2 December-Delivery: https://github.com/orgs/luxonis/projects/2

- Gen2 2021 Efforts: (Some are even already in progress) https://github.com/orgs/luxonis/projects/4

As you can see, there are a TON of features we have released since the last update. Let's highlight a few below:

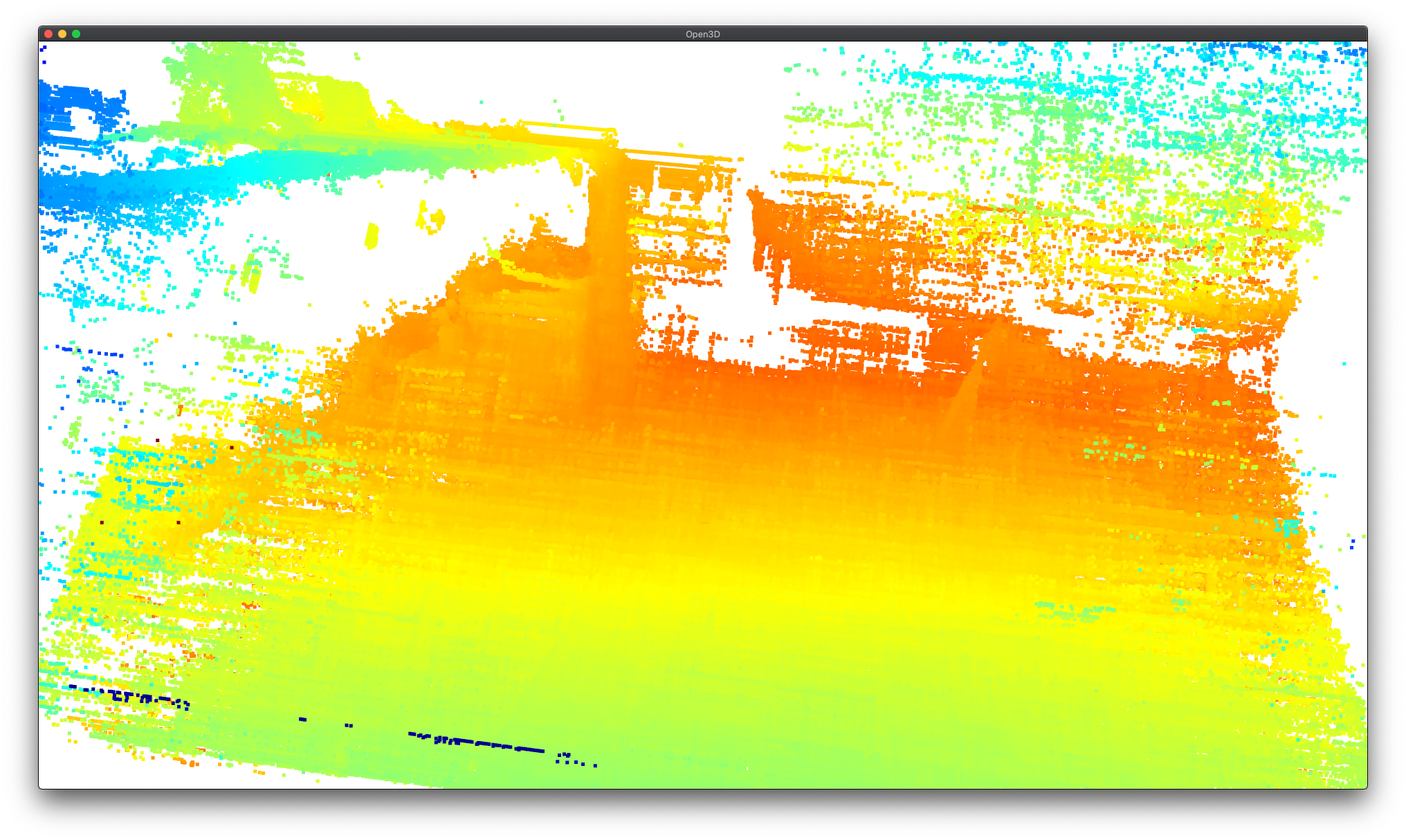

RGB-Depth Alignment

We have the calibration stage working now, and DepthAI units built after this writing will have RGB-right calibration performed. An example with semantic segmentation is shown below:

The `right` grayscale camera is shown on the right and the RGB is shown on the left. You can see the cameras have slightly different aspect ratios and fields of view, but the semantic segmentation is still properly applied. For more details on this, and to track progress, see our Github issue on this feature here: https://github.com/luxonis/depthai/issues/284

![]()

Subpixel Capability

DepthAI now supports subpixel disparity. To try it out yourself, use the example [here](https://github.com/luxonis/depthai-experiments#gen2-subpixel-and-lr-check-disparity-depth-here). And see below for a quick test of this at my desk:

![]()

![]()

![]()

![]()

Host Side Depth Capability

We also now allow performing depth estimation from images sent from the host. This is very convenient for test/validation - as stored images can be used. And along with this, we now support outputting the rectified-left and rectified-right, so they can be stored and later used with DepthAI's depth engine in various CV pipelines.
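To make "depth estimation from stored rectified images" a little more concrete, here is a toy sketch of disparity matching along a single rectified row, using a sum-of-absolute-differences (SAD) window. This is a generic textbook technique written for illustration, not DepthAI's on-device algorithm, whose internals are not described here. The function name `row_disparity`, the window size, and the sample data are all hypothetical.

```python
def row_disparity(left_row, right_row, max_disparity=96, half_window=1):
    """Per-pixel disparity for one rectified row using SAD block matching.

    Assumes a feature at column x in the left image appears at column
    x - d in the right image, and searches d in [0, max_disparity].
    """
    w = len(left_row)
    disp = [0] * w
    for x in range(half_window, w - half_window):
        best_cost, best_d = None, 0
        # Keep the right-image window inside the row.
        for d in range(0, min(max_disparity, x - half_window) + 1):
            cost = sum(
                abs(left_row[x + k] - right_row[x - d + k])
                for k in range(-half_window, half_window + 1)
            )
            if best_cost is None or cost < best_cost:
                best_cost, best_d = cost, d
        disp[x] = best_d
    return disp


# Synthetic pair: the left row is the right row shifted by 2 pixels.
right = [5, 9, 50, 120, 30, 7, 3, 8, 2, 6]
left = [0, 0] + right[:-2]
disp = row_disparity(left, right)
print(disp)
```

Because rectification puts matching points on the same row, the search is one-dimensional, which is why stored rectified-left and rectified-right images are enough to replay depth estimation offline.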

See [here](https://github.com/luxonis/depthai-experiments/tree/master/gen2-camera-demo#depth-from-rectified-host-images) on how to do this with your DepthAI model. And see some examples below from the MiddleBury stereo dataset:

The bad-looking areas are caused by objects being too close to the camera for the given baseline, which exceeds the 96-pixel maximum disparity search range (a StereoDepth engine constraint):

These areas will be improved with `extended = True`; however, Extended Disparity and Subpixel cannot both operate at the same time.
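The link between the disparity limit and the "too close" problem follows from the standard stereo relation depth = focal length (in pixels) × baseline / disparity. The sketch below works it through. The focal length and baseline values are assumptions picked for illustration rather than calibrated numbers for any specific unit, and treating extended mode as a doubling of the search range is also an assumption, not something stated above.

```python
def min_depth_m(focal_px, baseline_m, max_disparity_px):
    """Closest distance that still fits within the disparity search range.

    Standard pinhole stereo relation: depth = focal_px * baseline_m / disparity.
    The largest searchable disparity therefore sets the smallest depth.
    """
    return focal_px * baseline_m / max_disparity_px


FOCAL_PX = 880.0     # assumed focal length in pixels (illustrative)
BASELINE_M = 0.075   # assumed stereo baseline in meters (illustrative)

standard = min_depth_m(FOCAL_PX, BASELINE_M, 96)    # 96-pixel search limit
extended = min_depth_m(FOCAL_PX, BASELINE_M, 192)   # assumed doubled range

print(f"min depth, 96 px search:  {standard:.2f} m")
print(f"min depth, 192 px search: {extended:.2f} m")
```

With these assumed numbers, objects closer than roughly 0.69 m exceed the 96-pixel limit, and a doubled search range would bring that down to roughly 0.34 m. A wider baseline or a longer focal length pushes the minimum distance further out.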

![]()

![]()

![]()

RGB Focus, Exposure, and Sensitivity Control

We also added manual focus, exposure, and sensitivity controls, along with examples of how to use them. See [here](https://github.com/luxonis/depthai/pull/279) for how to use these controls. Here is an example of increasing the exposure time:

And here is setting it quite low:

It's actually fairly remarkable how well the neural network still detects me as a person even when the image is this dark.

![]()

![]()

Pure Embedded DepthAI

In our last update ([here](https://www.crowdsupply.com/luxonis/depthai/updates/pure-embedded-depthai-under-development)), we mentioned that we were making a pure-embedded DepthAI.

We made it. Here's the initial concept:

And here it is working!

And here it is on a wrist to give a reference of its size:

And [eProsima](https://www.eprosima.com/) even got microROS running on this with DepthAI, exporting results over WiFi back to RViz:

![]()

![]()

![]()

![]()

RPi Compute Module 4

We're quite excited about this one. We're fairly close to ordering it. Some initial views in Altium below:

![]()

![]()

There's a bunch more, but we'll leave you with our recent interview with Chris Gammel at the Amp Hour!

https://theamphour.com/517-depth-and-ai-with-brandon-gilles-and-brian-weinstein/

Cheers,

Brandon & The Luxonis Team

-

Announcing OpenCV AI Kit (OAK)

07/14/2020 at 13:48 • 0 comments

Today, our team is excited to release to you the OpenCV AI Kit, OAK, a modular, open-source ecosystem composed of MIT-licensed hardware, software, and AI training - that allows you to embed Spatial AI and CV super-powers into your product.

And best of all, you can buy this complete solution today and integrate it into your product tomorrow.

Back our campaign today!

https://www.kickstarter.com/projects/opencv/opencv-ai-kit?ref=card

-

megaAI CrowdSupply Campaign Production Batch Complete

07/09/2020 at 19:58 • 0 comments

Our production run of the megaAI CrowdSupply campaign is complete and now shipping to us:

![]()

We had 97% yield on the first round of testing and 99% yield after rework and retest of the 3% that had issues in the first round.

Brandon
