-

Pure Embedded Variant of DepthAI

07/02/2020 at 15:34 • 0 comments

Hi DepthAI Backers and Fans,

So we've proven out an SPI-only interface for DepthAI, and it's working well (the proof of concept was done with an MSP430 and a Raspberry Pi over SPI).

So to make it easier for engineers to leverage this power (and also for us internally to develop it), we're making a complete hardware and software/AI reference design for the ESP32, with the primary interface between DepthAI and the ESP32 being SPI.

The design will still have USB3C for DepthAI, which will allow you to see live high-bandwidth results/etc. on a computer while integrating/debugging communication to your ESP32 code (both running in parallel, which will be nice for debugging). Similarly, the ESP32 will have an onboard UART-USB converter and micro-USB connector for programming/interfacing w/ the ESP32 for easy development/debug.

For details and progress on the hardware effort see [here] and to check out the SPI support enhancement on DepthAI API see [here]

In short here's the concept:

![]()

And here's a first cut at the placement:

![]()

And please let us know if you have any thoughts/comments/questions on this design!

Best,

Brandon & The Luxonis Team

-

New Spatial AI Capabilities & Multi-Stage Inference

06/17/2020 at 19:53 • 0 comments

We have a super-interesting feature-set coming to DepthAI:

- 3D feature localization (e.g. finding facial features) in physical space

- Parallel-inference-based 3D object localization

- Two-stage neural inference support

And all of these are initially working (in this PR, [here](https://github.com/luxonis/depthai/pull/94#issuecomment-645416719)).

So to the details and how this works:

We are actually implementing a feature that allows you to run neural inference on either or both of the grayscale cameras.

This sort of flow is ideal for finding the 3D location of small objects, shiny objects, or objects for which disparity depth might struggle to resolve the distance (z-dimension), which is used to get the 3D position (XYZ). So this now means DepthAI can be used in two modalities:

1. As it's used now: The disparity depth results within a region of the object detector are used to re-project the xyz location of the center of the object.

2. Run the neural network in parallel on both left/right grayscale cameras, and the results are used to triangulate the location of features.
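The first modality can be sketched roughly as follows. This is a minimal illustration, not the DepthAI implementation: it assumes a depth map in metres where 0 marks a hole, a pinhole camera model, and intrinsics `fx`, `fy`, `cx`, `cy` from calibration. The function name and the choice of a median over the region are assumptions made for the example.

```python
import numpy as np

def roi_to_xyz(depth_m, bbox, fx, fy, cx, cy):
    """Estimate the XYZ position (metres) of a detected object.

    depth_m: 2D array of depth in metres; 0 means no valid disparity (a hole).
    bbox:    (xmin, ymin, xmax, ymax) of the detection, in pixels.
    fx, fy, cx, cy: pinhole intrinsics in pixels (assumed known from calibration).
    """
    xmin, ymin, xmax, ymax = bbox
    roi = depth_m[ymin:ymax, xmin:xmax]
    valid = roi[roi > 0]              # ignore holes in the depth map
    if valid.size == 0:
        return None                   # depth could not be resolved in this region
    z = float(np.median(valid))       # robust depth for the region (illustrative choice)
    u = (xmin + xmax) / 2.0           # pixel at the center of the bounding box
    v = (ymin + ymax) / 2.0
    x = (u - cx) * z / fx             # re-project through the pinhole model
    y = (v - cy) * z / fy
    return x, y, z
```

Note the `None` case: when the whole region is a hole, this approach has nothing to re-project, which is the failure the second modality is meant to avoid.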

An example where the second modality is extremely useful is finding the xyz positions of facial landmarks, such as eyes, nose, and corners of the mouth.

Why is this useful for facial features like this? For small features like this, the risk of disparity depth having a hole in the location goes up, and even worse, for faces with glasses, the reflection of the glasses may throw the disparity depth calculation off (and in fact it might 'properly' give the depth result for the reflected object).

When running the neural network in parallel, none of these issues exist, as the network finds the eyes, nose, and mouth corners per image. The disparity in pixel location of each feature between the right and left stream results then gives the z-dimension (depth is inversely proportional to disparity), and this is reprojected through the optics of the camera to get the full XYZ position of all of these features.
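As a rough sketch of that triangulation step, assuming a rectified stereo pair and a pinhole model: depth follows the standard relation Z = f · B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The function and parameter names below are hypothetical and are not part of the DepthAI API.

```python
def triangulate_landmark(left_px, right_px, focal_px, baseline_m, cx, cy):
    """Triangulate one landmark seen in both rectified grayscale images.

    left_px, right_px: (u, v) pixel location of the same landmark in the
                       left and right image, as reported by the network.
    focal_px:   focal length in pixels (assumed identical for both cameras).
    baseline_m: distance between the two cameras in metres.
    cx, cy:     principal point of the left camera in pixels.
    Returns (x, y, z) in metres in the left camera frame, or None.
    """
    u_left, v_left = left_px
    u_right, _ = right_px
    disparity = u_left - u_right          # in pixels; positive for a point in front
    if disparity <= 0:
        return None                       # mismatched or infinitely far landmark
    z = focal_px * baseline_m / disparity # depth is inversely proportional to disparity
    x = (u_left - cx) * z / focal_px      # re-project through the pinhole model
    y = (v_left - cy) * z / focal_px
    return x, y, z
```

Because only the landmark's pixel location in each image is used, a hole or reflection in the dense disparity map at that location does not affect the result.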

And as you can see below, it works fine even w/ my quite-reflective anti-glare glasses:

Thoughts?

Cheers,

Brandon and the Luxonis Team

-

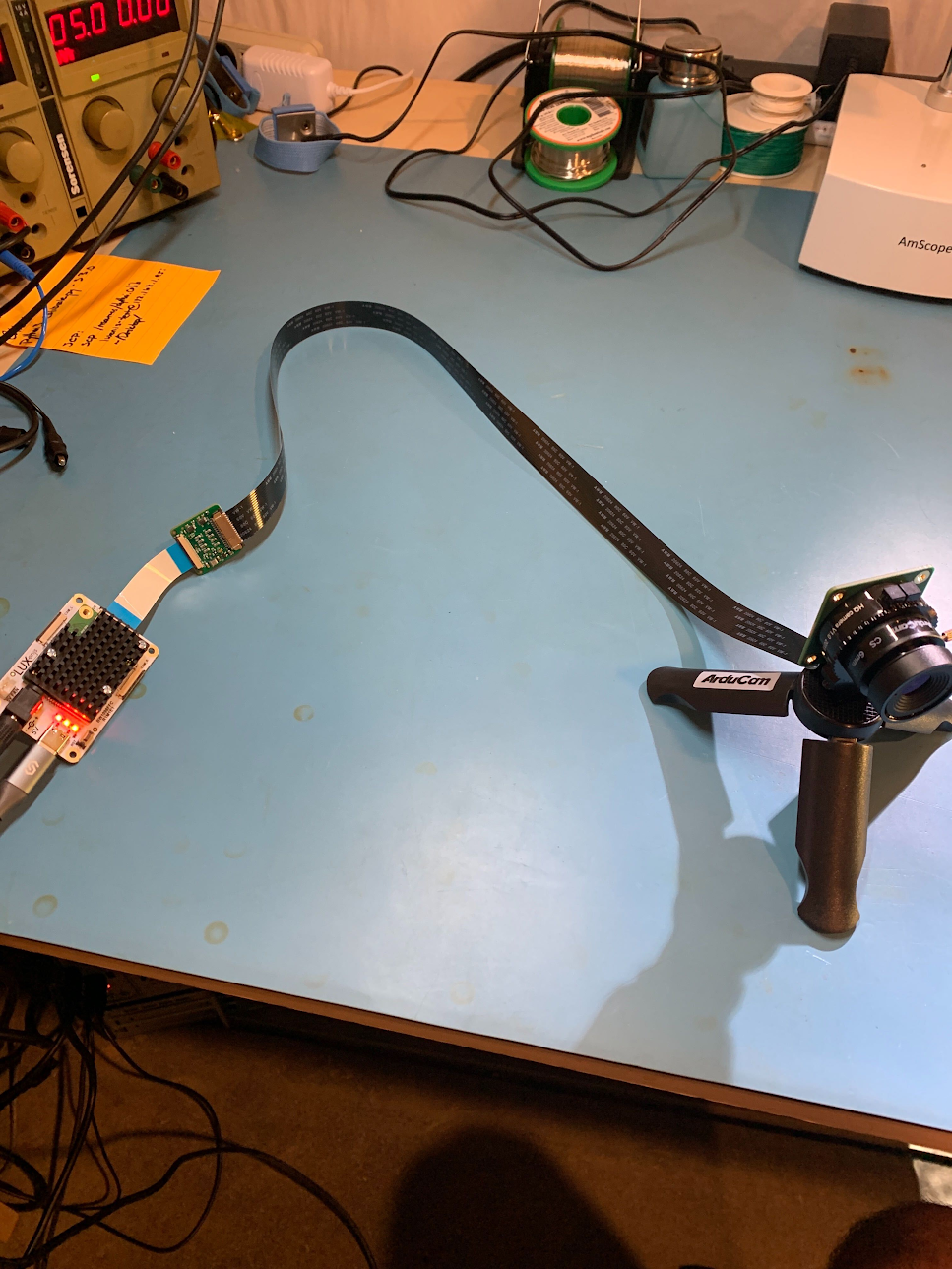

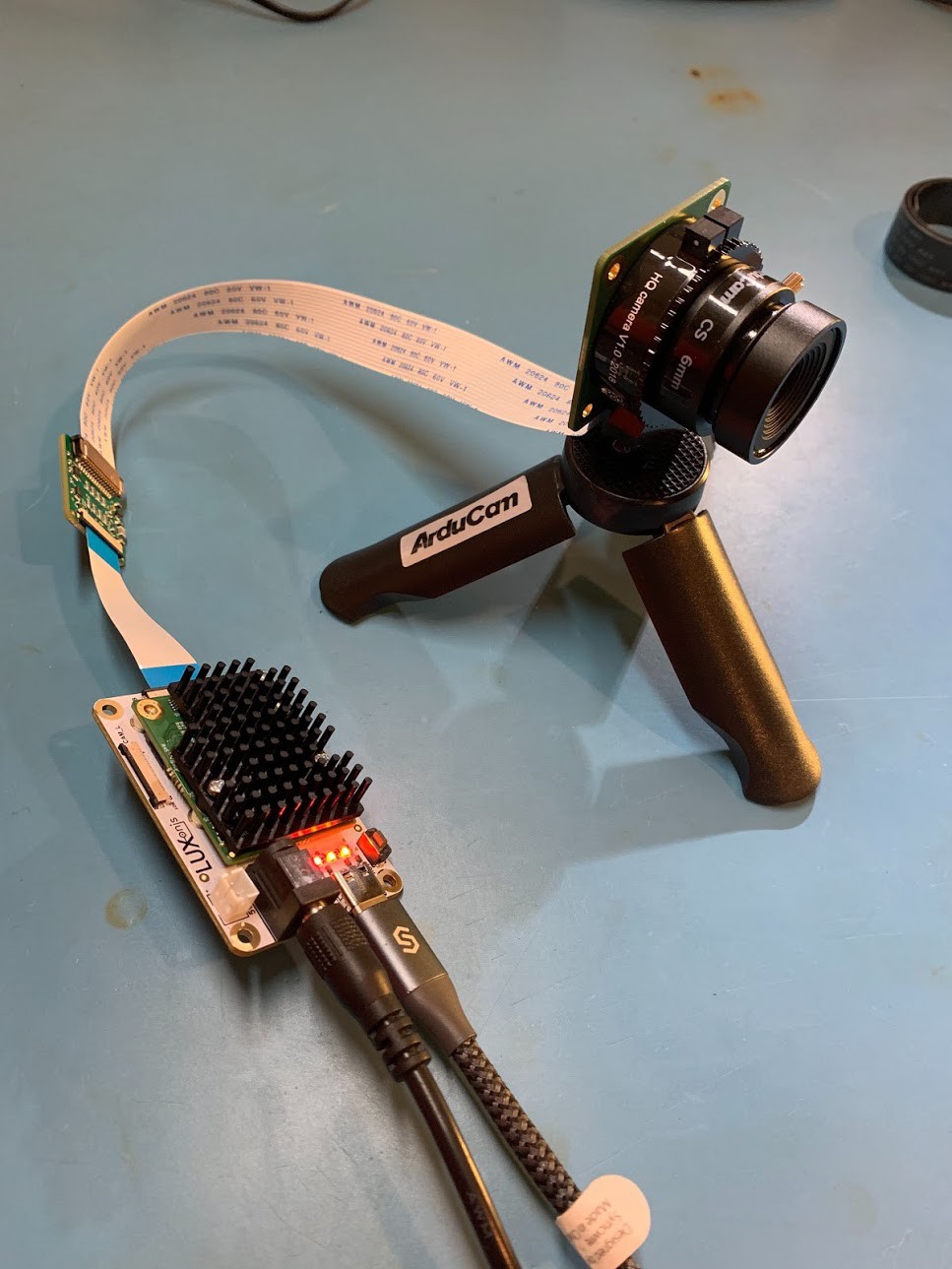

Raspberry Pi HQ Camera Works With DepthAI!

06/10/2020 at 04:07 • 0 comments

Hello everyone!

So we have exciting news! Over the weekend we wrote a driver for the IMX477 used in the Raspberry Pi HQ Camera.

So now you can use the awesome new Raspberry Pi HQ camera with DepthAI FFC (here). Below are some videos of it working right after we wrote the driver this weekend.

![]()

Notice that it even worked w/ an extra long FFC cable! ^

More details on how to use it are here. And remember DepthAI is open source, so you can even make your own adapter (or other DepthAI boards) from our Github here.

And you can buy the adapter here: https://shop.luxonis.com/products/rpi-hq-camera-imx477-adapter-kit

Cheers,

Brandon & the Luxonis team

-

IR-only DepthAI

06/03/2020 at 20:53 • 0 comments

Hi DepthAI (and megaAI) fans!

So we have a couple customers who are interested in IR-only variants of the global-shutter cameras used for Depth, so we made a quick variant of DepthAI with these.

We actually just made adapter boards which plug directly into the BW1097 (here) by unplugging the existing onboard cameras. We tested with this IR flashlight here.

It's a bit hard to see, but you can tell the room is relatively dark to visible light and the IR cameras pick up the IR light quite well.

Cheers,

The Luxonis Team

-

Fighting COVID-19!

05/26/2020 at 19:35 • 0 comments

Hello DepthAI Fans!

We're super excited to share that Luxonis DepthAI and megaAI are being used to help fight COVID-19!

![A doctor cleaning medical equipment]()

How?

To know where people are in relation to Violet, Akara's UV-cleaning robot. This allows Violet to know when people are present, and how far away they are, in real-time, so it can disable its UV light when people are present.
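The gating logic can be pictured with a small sketch like the one below. It is purely illustrative and is not Akara's or Luxonis's code: the `Detection` type, the `"person"` label, and the optional `safe_distance_m` threshold are all assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str    # class name from the object detector (hypothetical labels)
    z_m: float    # distance from the camera in metres

def uv_lamp_allowed(detections, safe_distance_m=None):
    """Return True only if the UV lamp may stay on.

    By default any detected person turns the lamp off. If a hypothetical
    safe_distance_m is given, the lamp stays on only while every detected
    person is farther away than that distance.
    """
    people = [d for d in detections if d.label == "person"]
    if safe_distance_m is None:
        return not people
    return all(d.z_m > safe_distance_m for d in people)
```

Running this check on every frame is what makes the spatial (distance) output useful here, rather than a plain 2D detection.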

Check out this article from Intel for more details about Violet, the UV-cleaning robot.

We're excited to continue developing this effort. Specifically, DepthAI can be used to map which surfaces were cleaned and how well (i.e. how much UV energy was deposited on each surface).

This would allow a full 3D map of what was cleaned, how clean it is, and what surfaces were missed.

So in cases where objects in the room are blocking other surfaces, DepthAI would allow a map of the room showing which surfaces were blocked and therefore not able to be cleaned.

Thanks,

The Luxonis Team

-

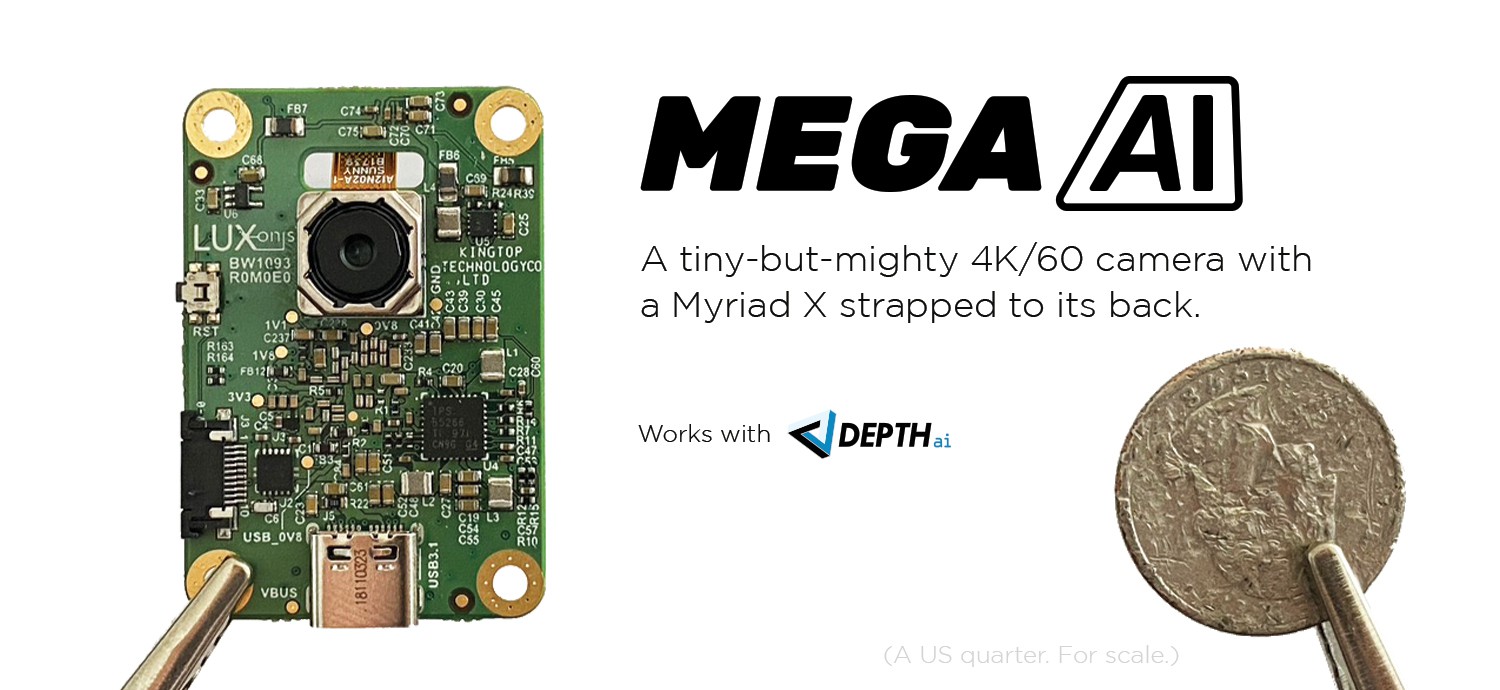

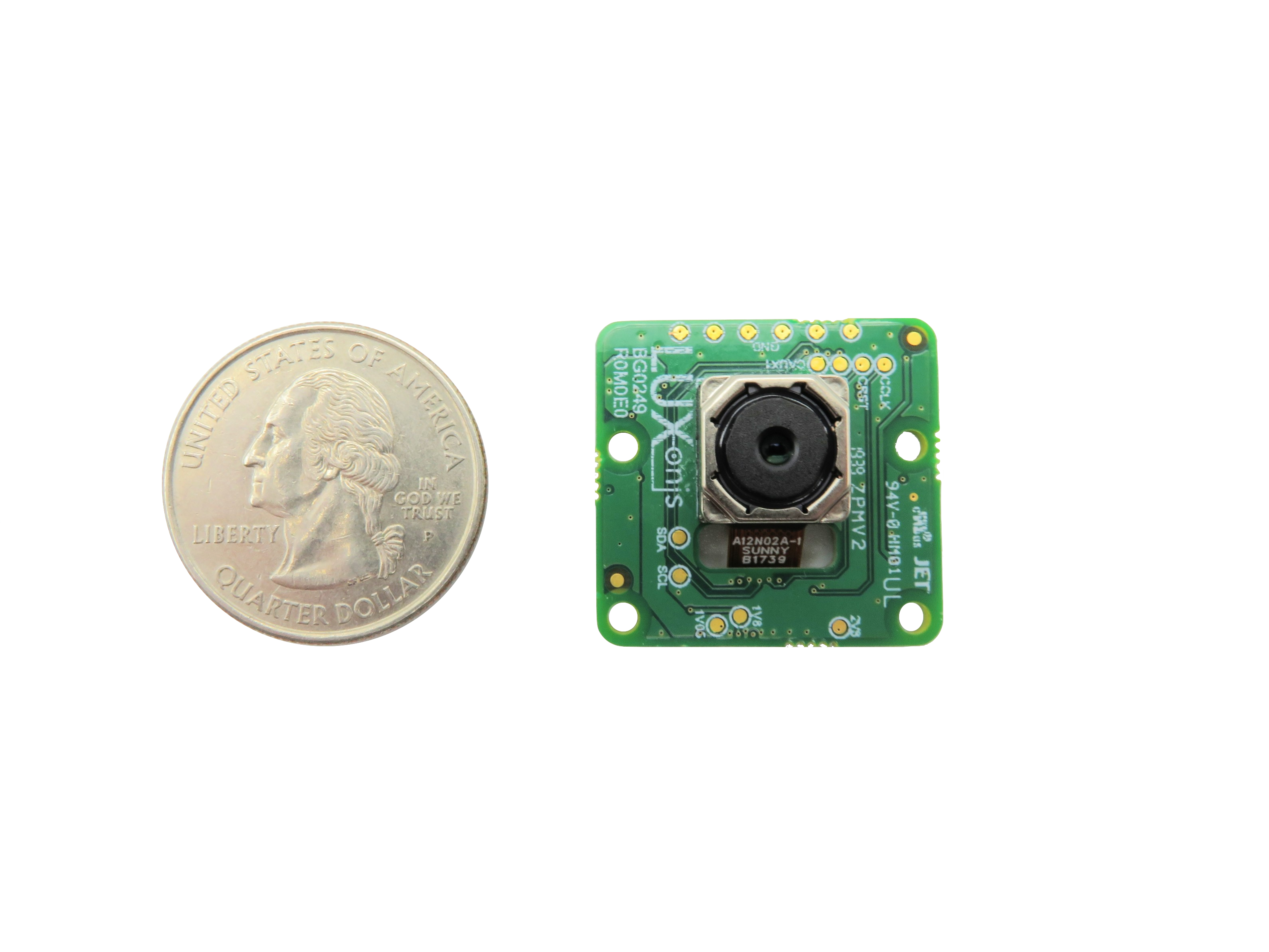

megaAI is Live

05/22/2020 at 16:23 • 0 comments

Greetings Potential Backer,

It is with great pleasure that we announce to you the immediate availability (for backing) of the brand-new Luxonis megaAI camera/AI board.

![]()

It’s super-tiny but super-strong, capable of crushing 4K H.265 video with up to 4 Trillion Operations Per Second of AI/CV power. This single-board powerhouse will come with a nice USB3 cable at all pledge levels that receive hardware. If you’re just interested in the project, or a good friend, you can pledge at the $20 level to get campaign updates and some other cool (but currently secret) stuff.

We hope you’ll join us over the campaign. If you want to be the first kid on your block to receive a megaAI unit, back at the Roadrunner pledge level and you’ll get shipped before anyone else.

https://www.crowdsupply.com/luxonis/megaai

Thank you!

- The Luxonis Team

-

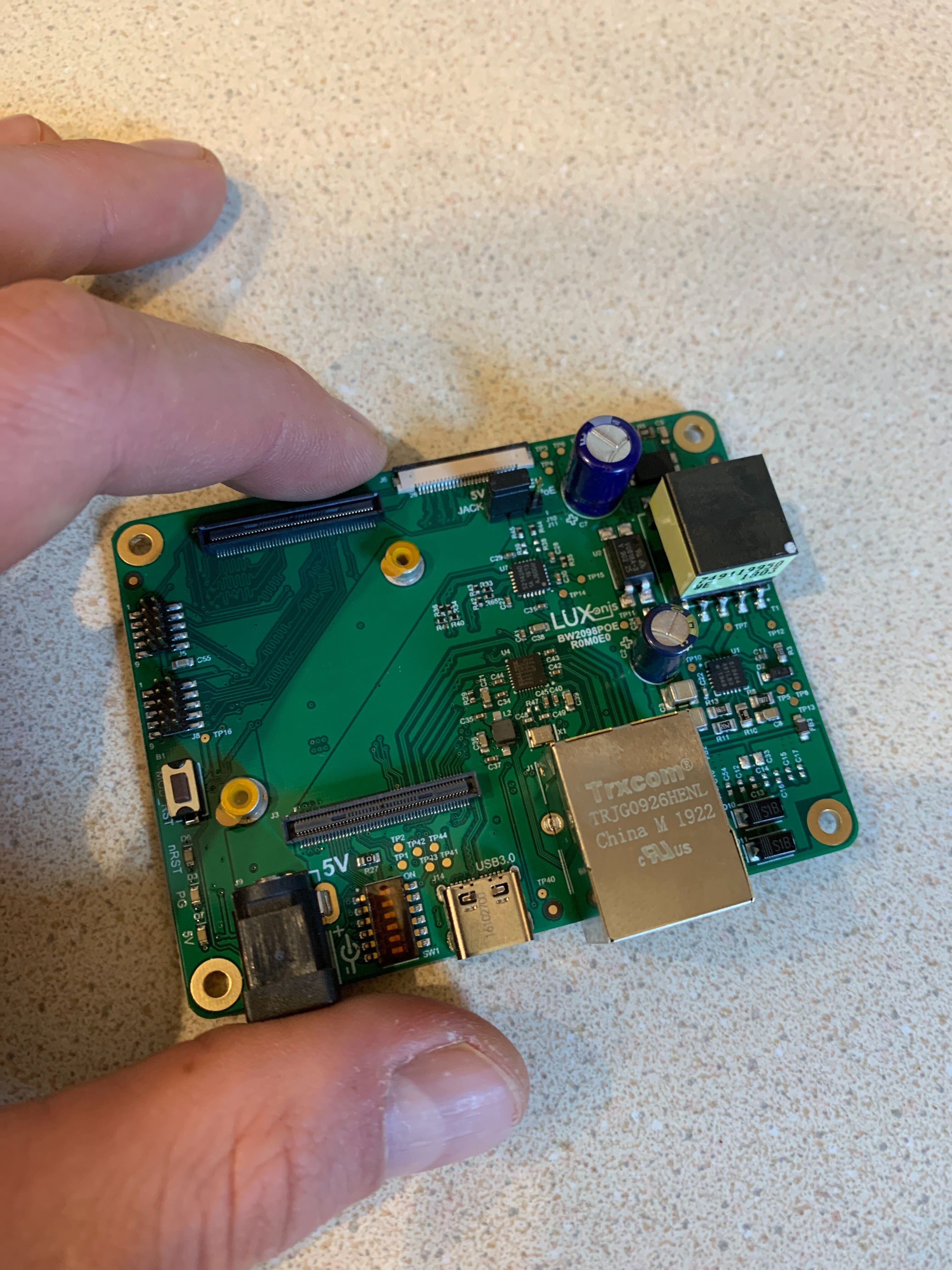

DepthAI Power Over Ethernet (PoE)

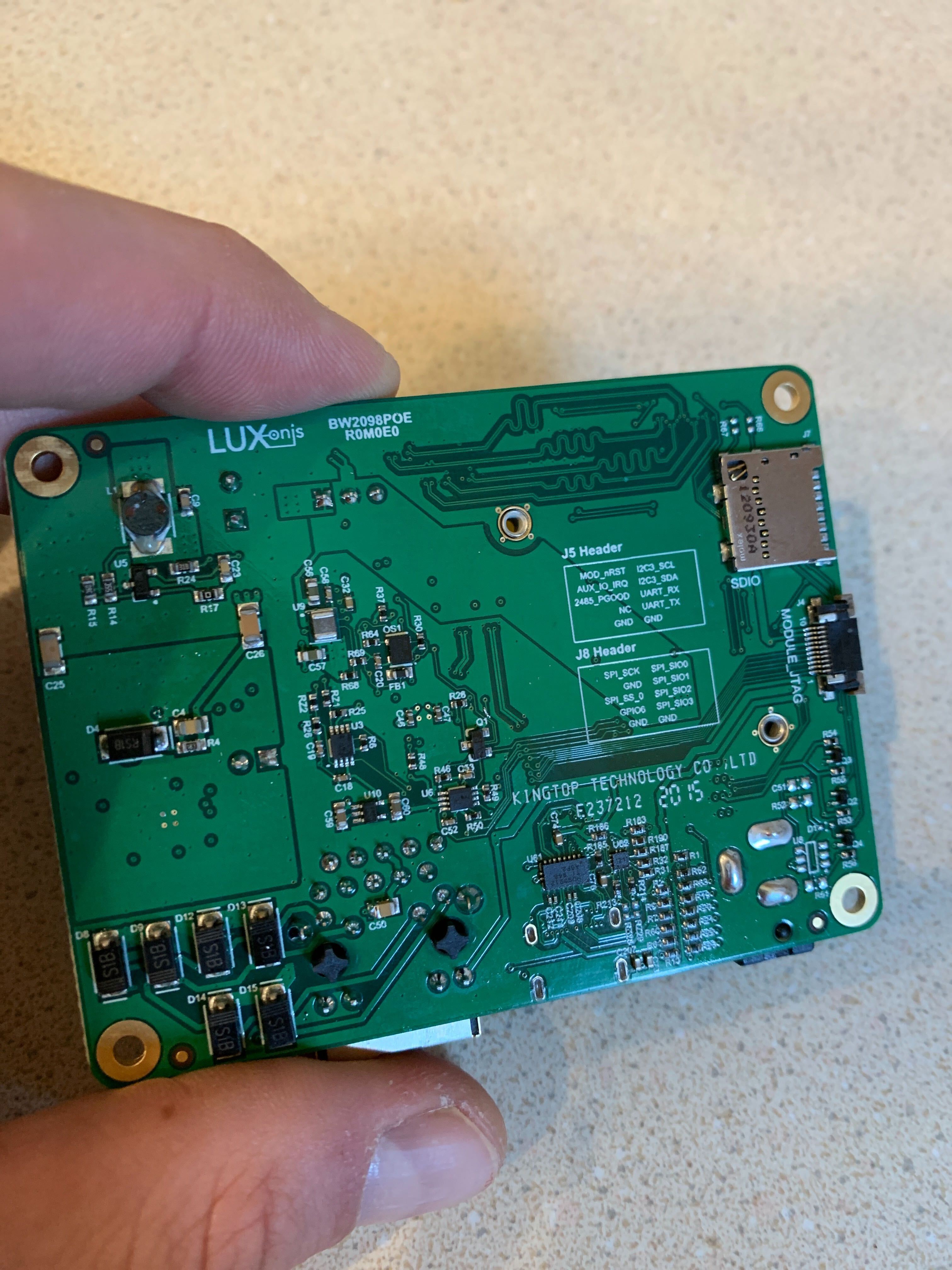

04/24/2020 at 22:52 • 2 comments

Our first cut at the Power over Ethernet Carrier Board for the new PCIE-capable DepthAI System on Module (SoM) just came in.

![]()

![]()

We haven't tested it yet, and the system on module (the BW2099) which powers these will arrive in ~3 weeks (because it's HDI, so it's slower to fabricate and assemble). We'll be testing this board standalone soon, while anxiously awaiting the new system on module.

So the new system on module has additional features which enable PoE (Gigabit Ethernet) applications and other niceties:

- PoE / Gigabit Ethernet

- On-board 16GB eMMC for h.264/h.265 video storage and JPEG/etc.

- uSD Slot

-

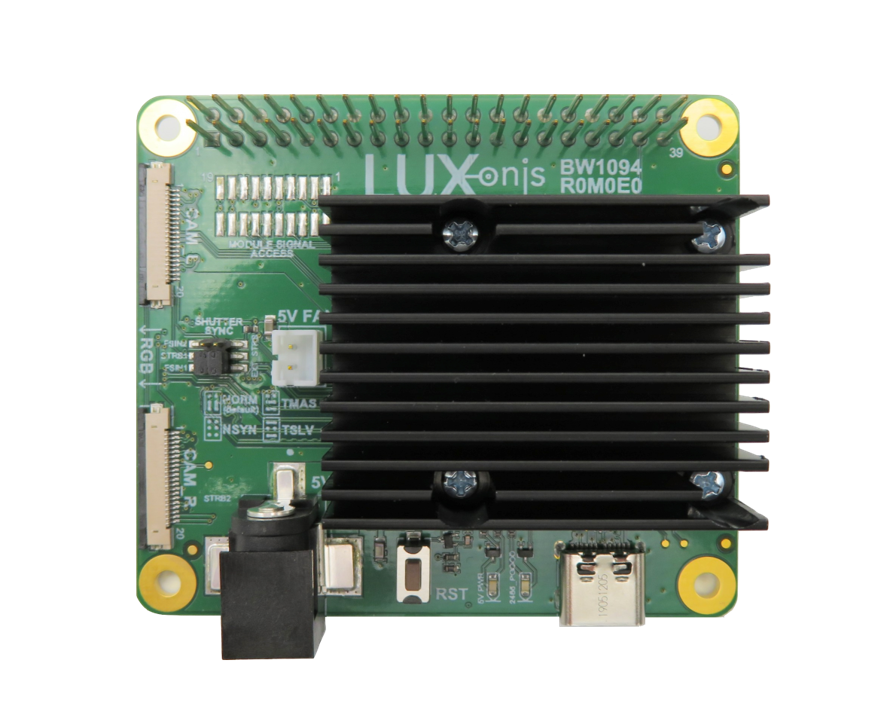

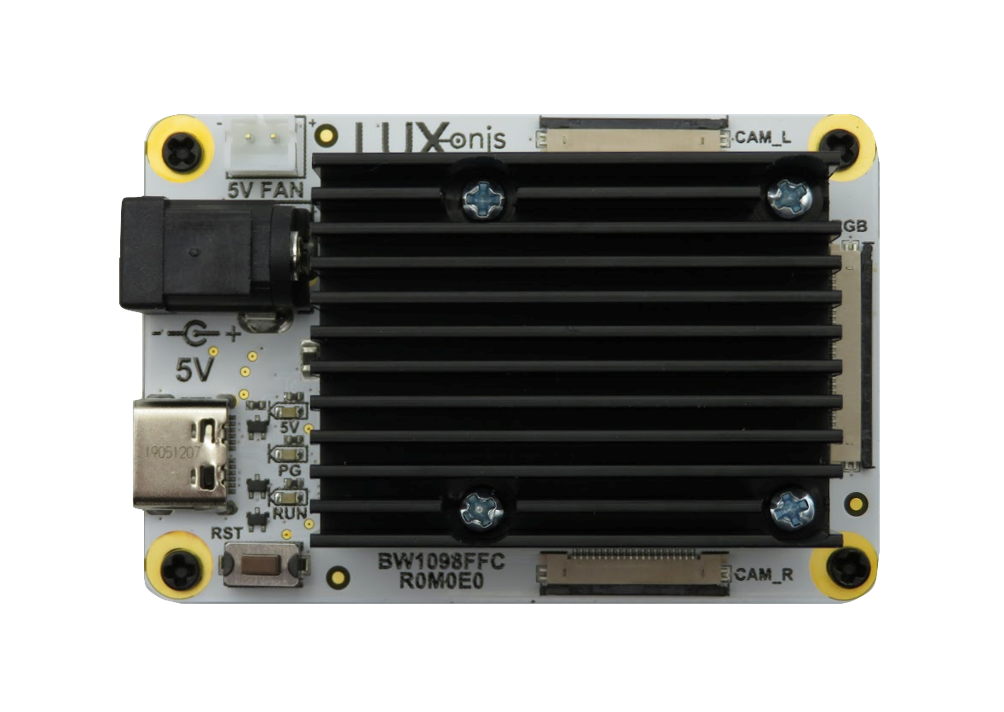

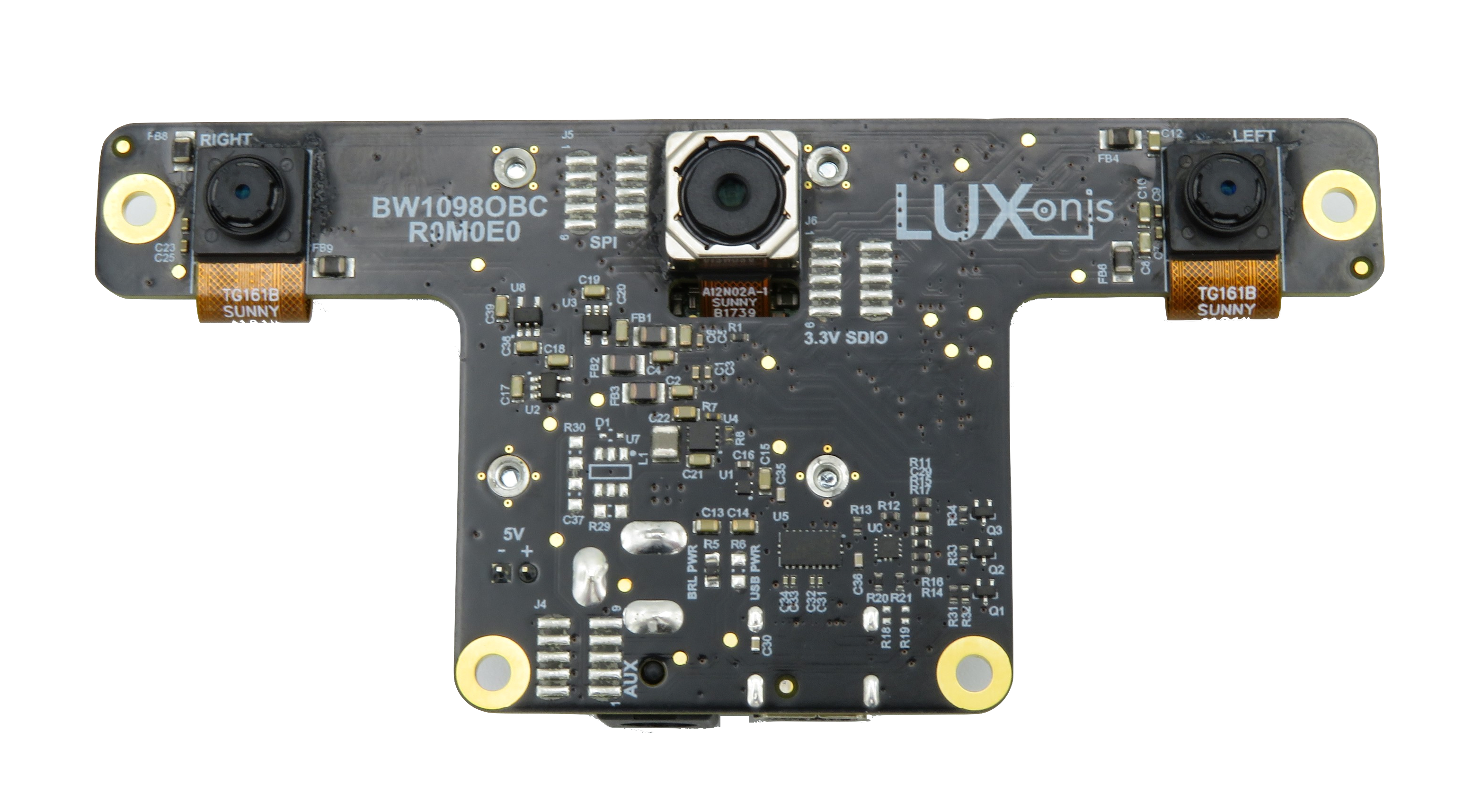

Open Source Spatial AI Hardware

04/22/2020 at 20:46 • 0 comments

Now you can integrate the power of DepthAI into your custom prototypes and products at the board level because...

We open sourced the carrier boards for the DepthAI System on Module (SoM). So now you can easily take this SoM and integrate it directly into your designs.

Check out our Github here:

https://github.com/luxonis/depthai-hardware

So this covers all the hardware below:

![]()

![]()

![]()

![]()

![]()

![]()

-

Mask (Good) and No-Mask DepthAI Training

04/21/2020 at 06:22 • 0 comments

We did a quick training of DepthAI over the weekend (using https://docs.luxonis.com/tutorials/object_det_mnssv2_training/) on Mask (Good) and No Mask (Bad). It seems pretty decent as long as the range isn't too far: https://photos.app.goo.gl/FhhUCLTsm6tqBgqL8

Running on (uncalibrated) DepthAI real-time:

-

MobileNet SSD v2 Training for DepthAI and uAI

04/13/2020 at 20:36 • 0 comments

Got training for MobileNetSSDv2 working: https://colab.research.google.com/drive/1n7uScOl8MoqZ1guQM6iaU1-BFbDGGWLG

You can label using this tool: https://github.com/tzutalin/labelImg
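labelImg saves annotations as Pascal VOC XML by default, so a quick sanity check of your labels before training might look like the sketch below. The function name, the sample file name, and the `mask` class are made up for illustration; the training notebook itself may read the files differently.

```python
import xml.etree.ElementTree as ET

def parse_voc_annotation(xml_text):
    """Return a list of (class_name, (xmin, ymin, xmax, ymax)) from VOC XML."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        bndbox = obj.find("bndbox")
        box = tuple(
            int(float(bndbox.findtext(tag)))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((name, box))
    return boxes

# Hypothetical annotation, trimmed to the fields used above.
EXAMPLE = """<annotation>
  <filename>img_0001.jpg</filename>
  <object>
    <name>mask</name>
    <bndbox><xmin>48</xmin><ymin>30</ymin><xmax>112</xmax><ymax>101</ymax></bndbox>
  </object>
</annotation>"""

boxes = parse_voc_annotation(EXAMPLE)
```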

Brandon
