-

Compute Module (BW1097) Second Revision

10/16/2019 at 04:37 • 0 comments

Hi Everyone,

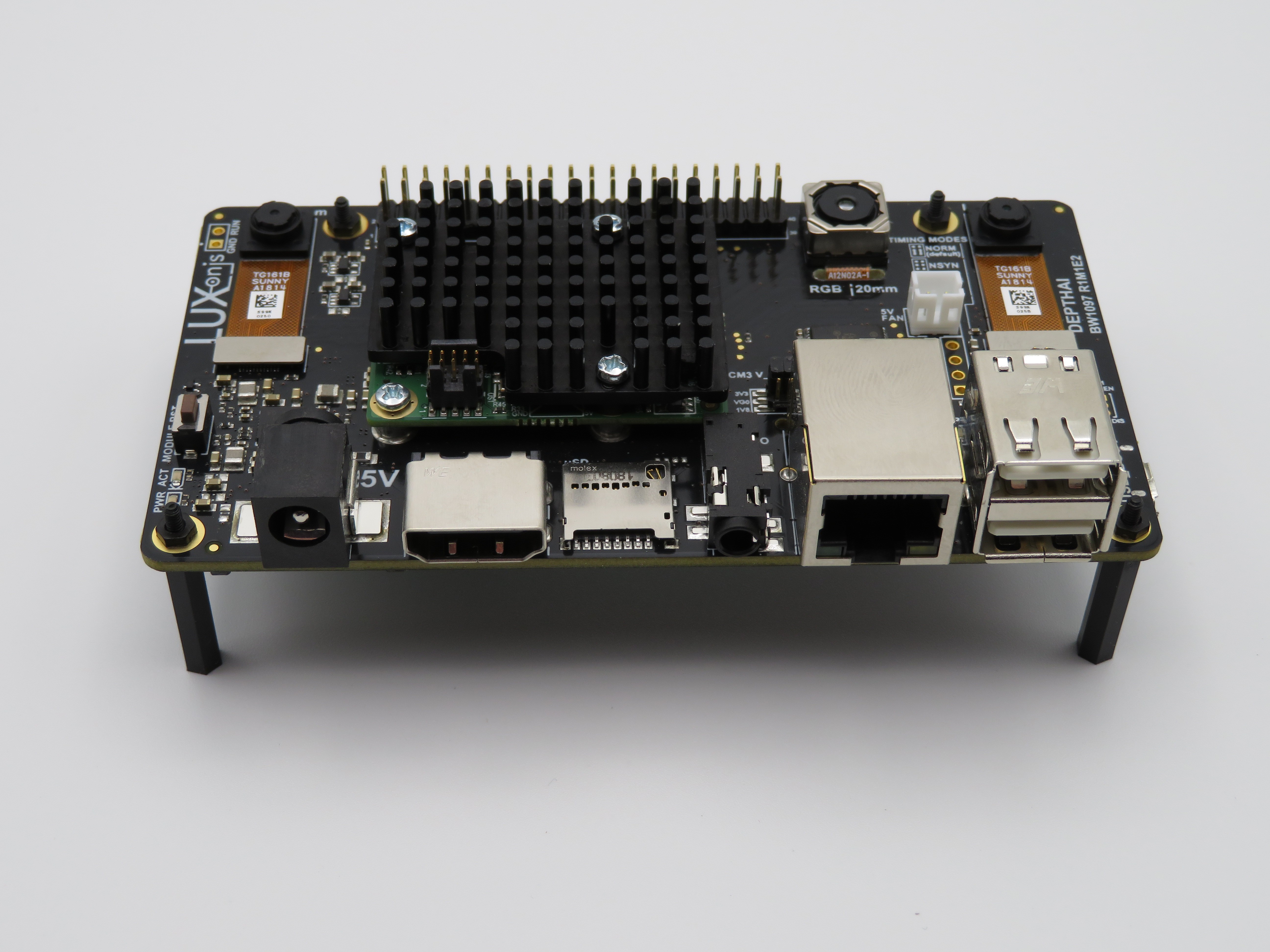

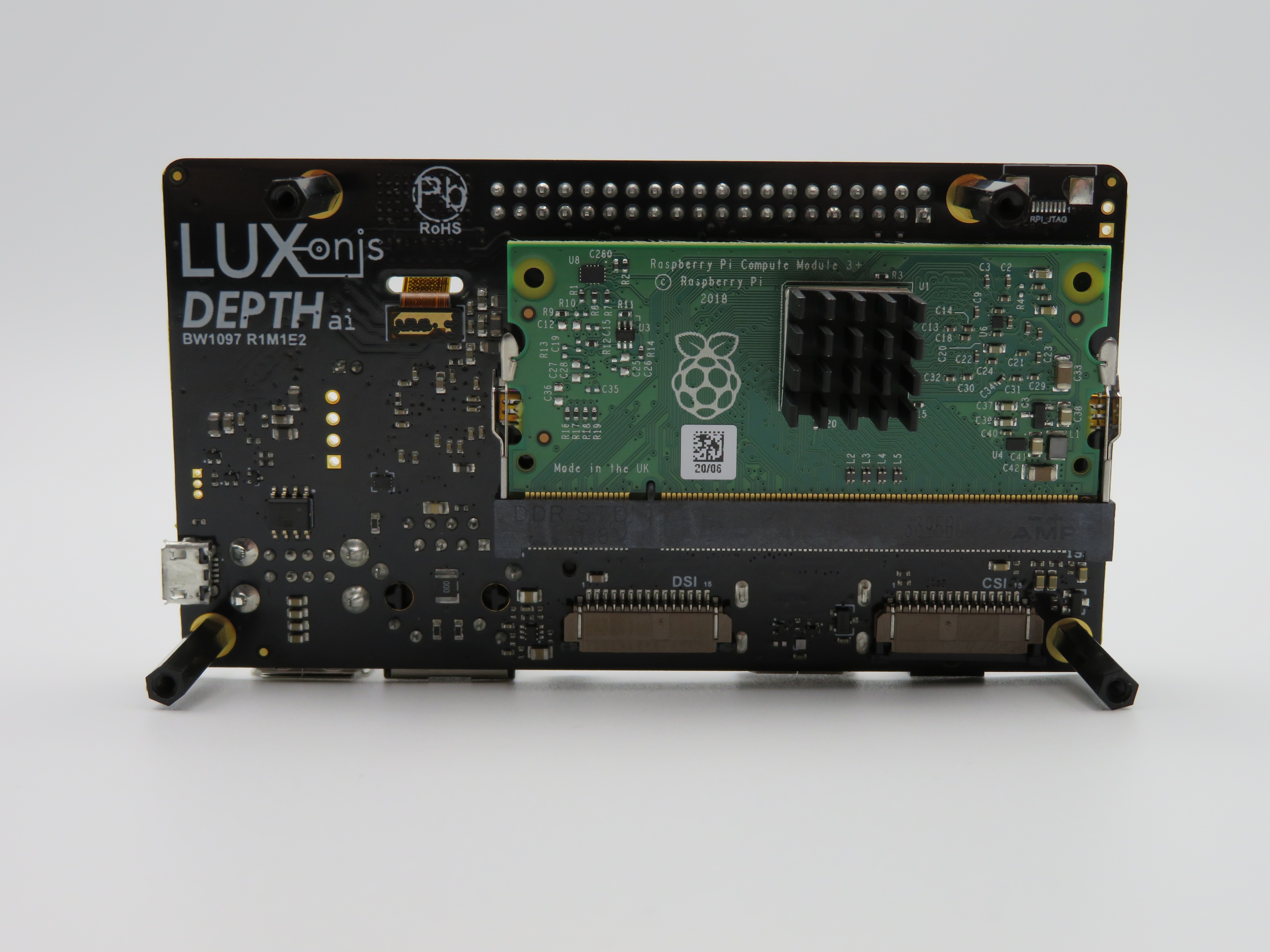

So we received the second revision of DepthAI for Raspberry Pi Compute Module, and everything works as hoped (and it looks a lot cooler because we made the design more compact and ordered it in black). Pictures to follow. :-)

We're continuing to integrate the depth portions we've written (here) with the direct-from-image-sensor neural inference (object detection, in this case) above.

Cheers!

The Luxonis Team

-

Power Use And Frame Rate Comparison | Jetson Nano, Edge TPU, NCS2

10/01/2019 at 17:09 • 0 comments

Hi everyone,

So we took a bit of time to see what our power use is now that we have neural inference (real-time object detection, in this case) running directly from image sensors on the Myriad X. So this was actually inspired by this great article by Alasdair Allan, here, and we use his charts below in the comparison as well.

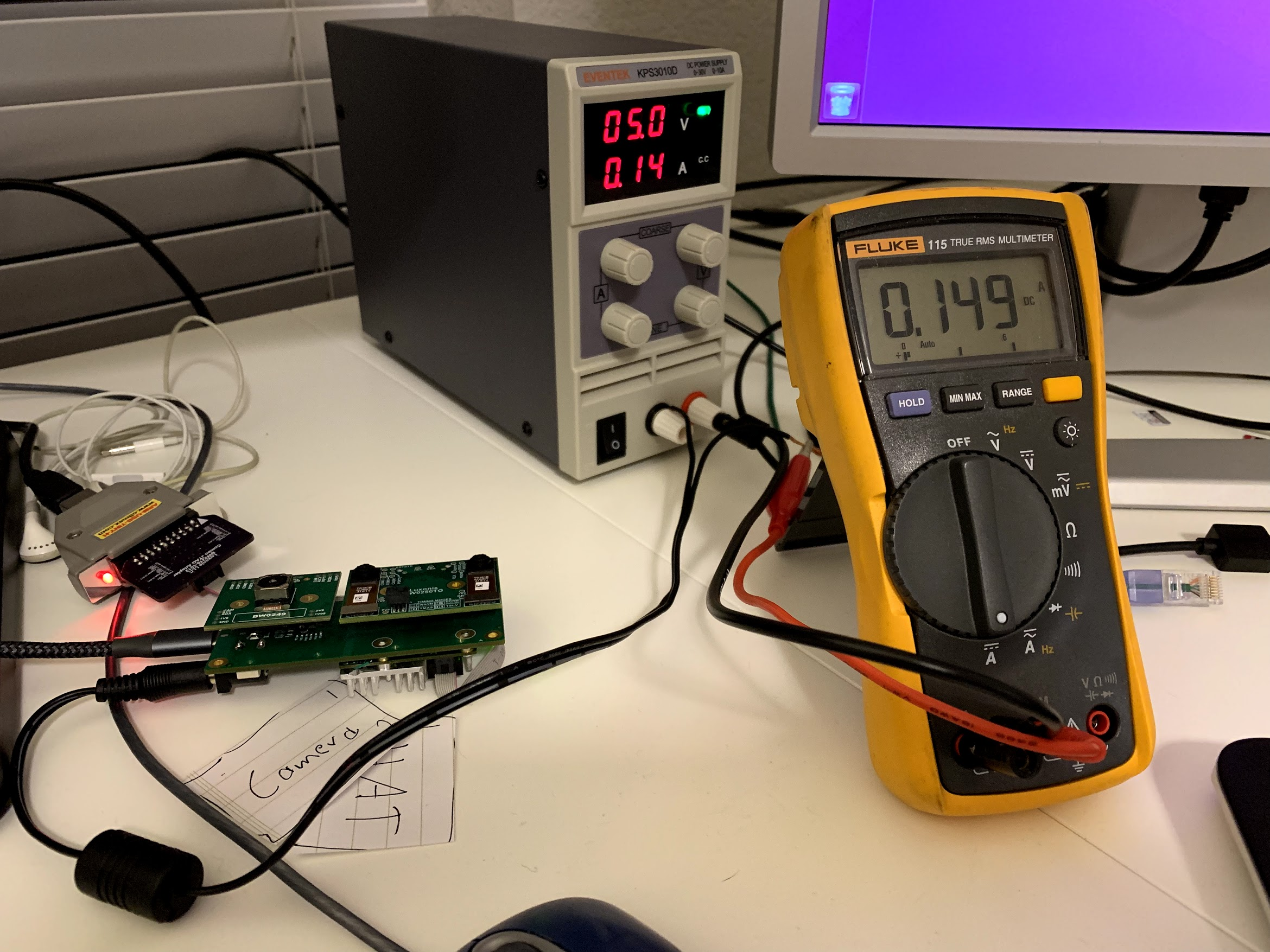

Idle Power:

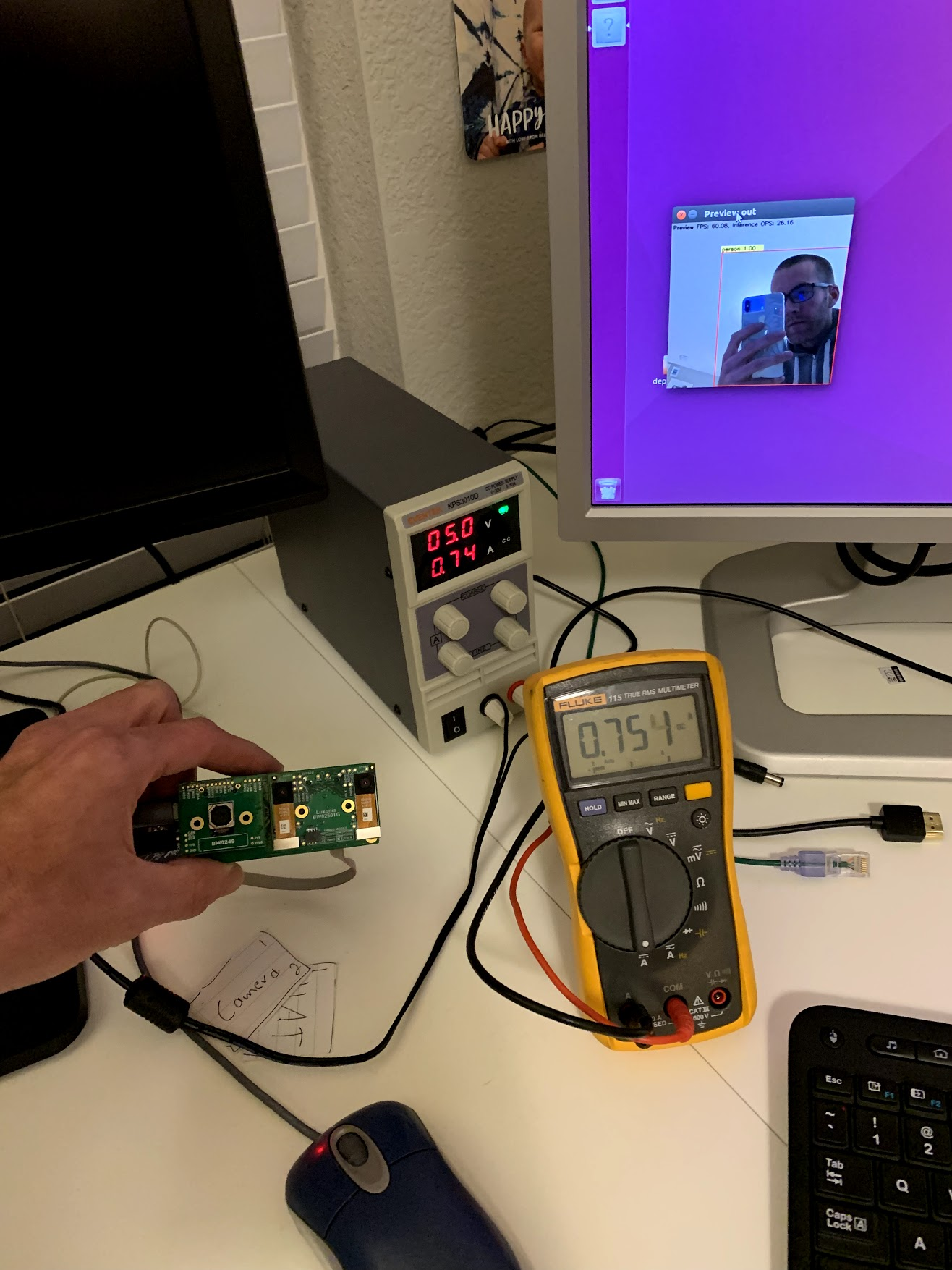

Active Power (MobileNet-SSD at ~25FPS):

So the DepthAI platform running MobileNet-SSD at 300x300:

Neural Inference time (ms): 40

Peak Current (mA): 760

Idle Current (mA): 150

So judging from Alasdair's handy comparison tables (here, and reproduced below), this isn't too shabby. In fact it means we're the second fastest of all embedded solutions tested, and we're the lowest power.
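As a rough sanity check on the numbers above, here is a minimal sketch that converts the inference time into a frame rate and the measured currents into power. The 5 V supply voltage is an assumption (the post only quotes current), and the helper names are made up for illustration.

```python
# Figures quoted in the post for MobileNet-SSD at 300x300 on DepthAI.
INFERENCE_TIME_MS = 40
PEAK_CURRENT_MA = 760
IDLE_CURRENT_MA = 150

# ASSUMPTION (not stated in the post): current was measured on a 5 V supply.
ASSUMED_SUPPLY_V = 5.0


def fps_from_latency(latency_ms: float) -> float:
    """Frames per second when each inference takes latency_ms milliseconds."""
    return 1000.0 / latency_ms


def power_watts(current_ma: float, supply_v: float = ASSUMED_SUPPLY_V) -> float:
    """Electrical power in watts for a current in mA drawn at supply_v volts."""
    return current_ma / 1000.0 * supply_v


if __name__ == "__main__":
    print(f"Inference rate: {fps_from_latency(INFERENCE_TIME_MS):.1f} FPS")
    print(f"Peak power:     {power_watts(PEAK_CURRENT_MA):.2f} W")
    print(f"Idle power:     {power_watts(IDLE_CURRENT_MA):.2f} W")
```

If the supply voltage differs from 5 V, pass it as `supply_v`; the frame-rate figure does not depend on it.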

What's not listed in these tables is the CPU use of the host (the Raspberry Pi). Here are our results on that (tested on Raspberry Pi 3B):

| | RPi + NCS2 | RPi + DepthAI |
|---|---|---|
| Video FPS | 30 | 60 |
| Neural Inference FPS | ~8 | 25 |
| RPi CPU Utilization | 220% | 35% |

So this allows way more headroom for your applications on the Raspberry Pi to use the CPU; the AI and computer vision tasks are much better offloaded to the Myriad X with DepthAI.

Thoughts?

Thanks,

Brandon

-

Outperforming the NCS2 by 3x

09/29/2019 at 05:58 • 6 comments

Hi everyone,

So last week we got object detection working direct from image sensor (over MIPI) on the Myriad X. MobileNet-SSD to be specific.

So how fast is it?

- 25FPS (40ms per frame)... when connected to a powerful desktop

According to 'The Big Benchmarking Roundup' (here) that's actually quite good.

But, this is connected to a big powerful computer... how fast is it when used with a Pi?

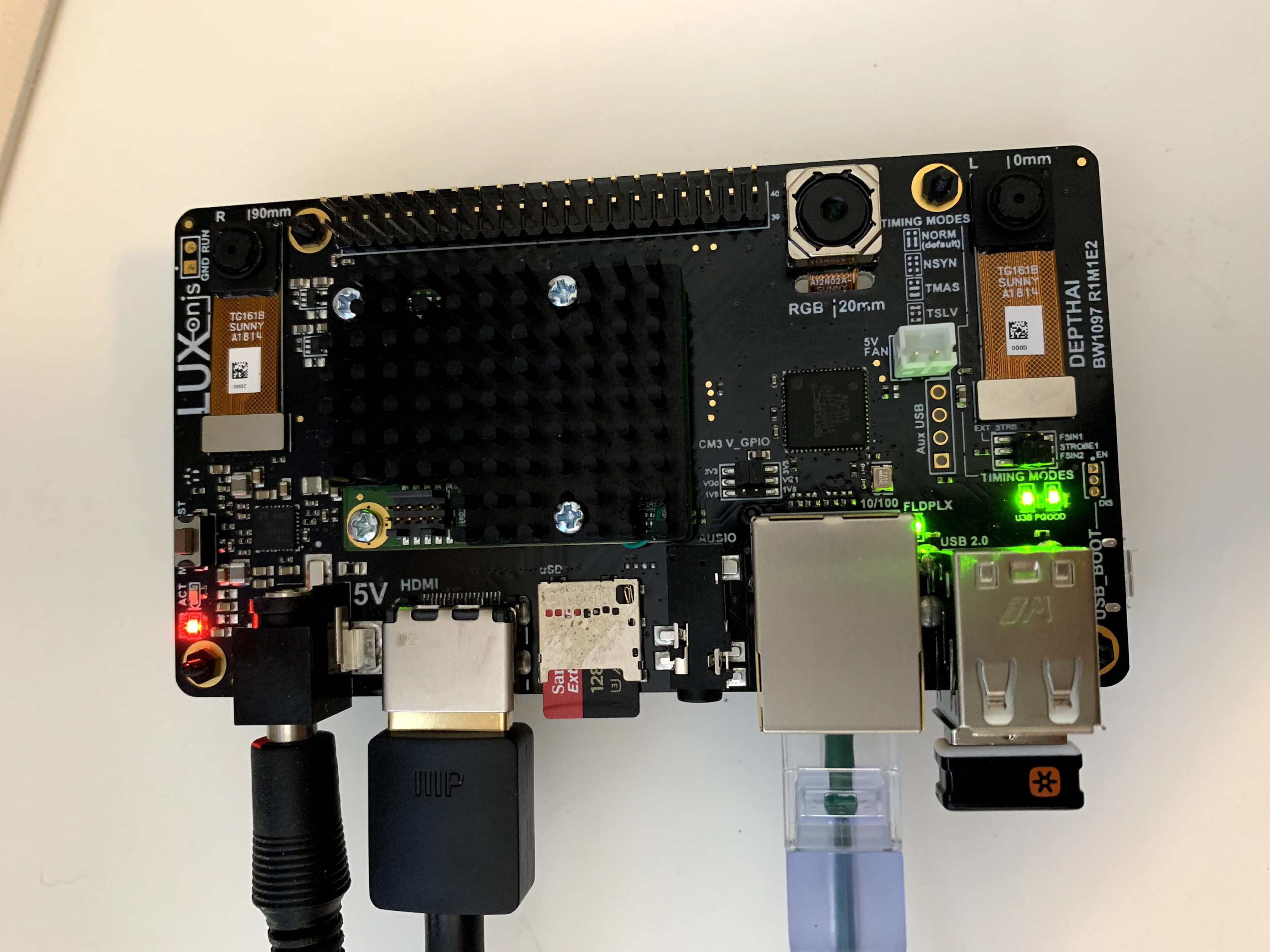

To find out, we ran it on our prototype of DepthAI for Raspberry Pi Compute Module:

And how did it fare?

- 25FPS (40ms per frame)... when connected to a Raspberry Pi Compute Module 3B+

Woohoo!

Unlike the NCS2, which sees a drastic drop in FPS when used with the Pi, DepthAI doesn't see any at all.

Why is this?

- The video path is flowing directly from the image sensor into the Myriad X, which then runs neural inference on the image data, and exports the video and neural network results to the Pi.

- So this means the Raspberry Pi isn't having to deal with the video stream; it's not having to resize video, to shuffle it from one interface to another, etc. - all of these tasks are done on the Myriad X.

In this way the Myriad X doesn't technically even need to export video to the Pi - it could simply output detected objects and positions over a serial connection (UART) for example.
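To make the serial-output idea concrete, here is a minimal sketch of what the host side could look like. The one-line-per-detection text format (`label,confidence,x,y`) is purely hypothetical, invented here for illustration; the post does not define any UART protocol.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # object class name, e.g. "person"
    confidence: float  # detector score in the range 0..1
    x: float           # normalized horizontal box center, 0..1
    y: float           # normalized vertical box center, 0..1


def parse_detection_line(line: str) -> Detection:
    """Parse one line of the HYPOTHETICAL format 'label,confidence,x,y'."""
    fields = line.strip().split(",")
    if len(fields) != 4:
        raise ValueError(f"expected 4 comma-separated fields, got {len(fields)}")
    label, confidence, x, y = fields
    return Detection(label, float(confidence), float(x), float(y))


if __name__ == "__main__":
    # A made-up sample line, as it might arrive over the serial link.
    print(parse_detection_line("person,0.87,0.42,0.55\n"))
```

On a Pi, lines like this could be read from a serial port (for example with pyserial's `Serial.readline()`) and decoded to text before parsing; the host then only handles a few bytes per detection rather than a video stream.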

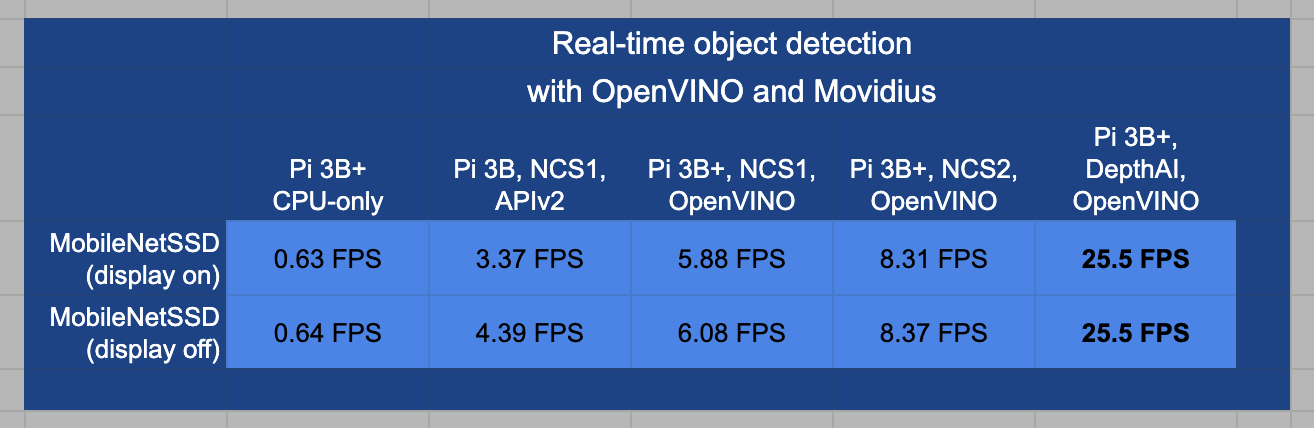

Let's compare this to results of the Myriad used with the Raspberry Pi 3B+ in its NCS form factor, thanks to the data and super-awesome/detailed post courtesy of PyImageSearch here. (Aside: PyImageSearch is the best thing that ever happened to the internet):

So this is a bump from 8.3 FPS with the Pi 3B+ and NCS2 to 25.5 FPS with the Pi 3B+ and DepthAI.

When we set out, we expected DepthAI to be 5x faster than the NCS2 when used with the Raspberry Pi 3B+, and it looks like we've hit roughly 3x (25.5 / 8.3 ≈ 3.1) instead... but we still think we can improve from here!

And it's important to note that when using the NCS2, the Pi CPU is at 220% to get the 8 FPS, and with DepthAI the Pi CPU is at 35% at 25 FPS. So this leaves WAY more room for your code on the Pi!
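The comparison above boils down to two ratios: how much faster inference runs, and how much host CPU each inferred frame costs. A small sketch using only the figures quoted in this post (the function names are illustrative):

```python
def speedup(new_fps: float, old_fps: float) -> float:
    """How many times faster new_fps is than old_fps."""
    return new_fps / old_fps


def cpu_percent_per_fps(cpu_percent: float, fps: float) -> float:
    """Host CPU utilization (in percent) spent per inferred frame per second."""
    return cpu_percent / fps


if __name__ == "__main__":
    # Measured frame rates: Pi 3B+ with NCS2 vs. Pi 3B+ with DepthAI.
    print(f"Speedup: {speedup(25.5, 8.3):.2f}x")

    # Host CPU cost, using the rounded figures from the text (8 and 25 FPS).
    ncs2_cost = cpu_percent_per_fps(220, 8)
    depthai_cost = cpu_percent_per_fps(35, 25)
    print(f"NCS2:    {ncs2_cost:.1f}% CPU per FPS")
    print(f"DepthAI: {depthai_cost:.1f}% CPU per FPS")
```

Looking at CPU cost per frame, rather than raw utilization, accounts for the fact that the two setups run at different frame rates.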

And as a note, this isn't using all of the resources of the Myriad X. We're leaving enough room in the Myriad X to perform all the additional calculations of disparity depth and 3D projection, in parallel to this object detection. So if there are folks who only want monocular object detection, we could probably bump the frame rate up further by dedicating more of the chip to the neural inference... but we need to investigate to be sure.

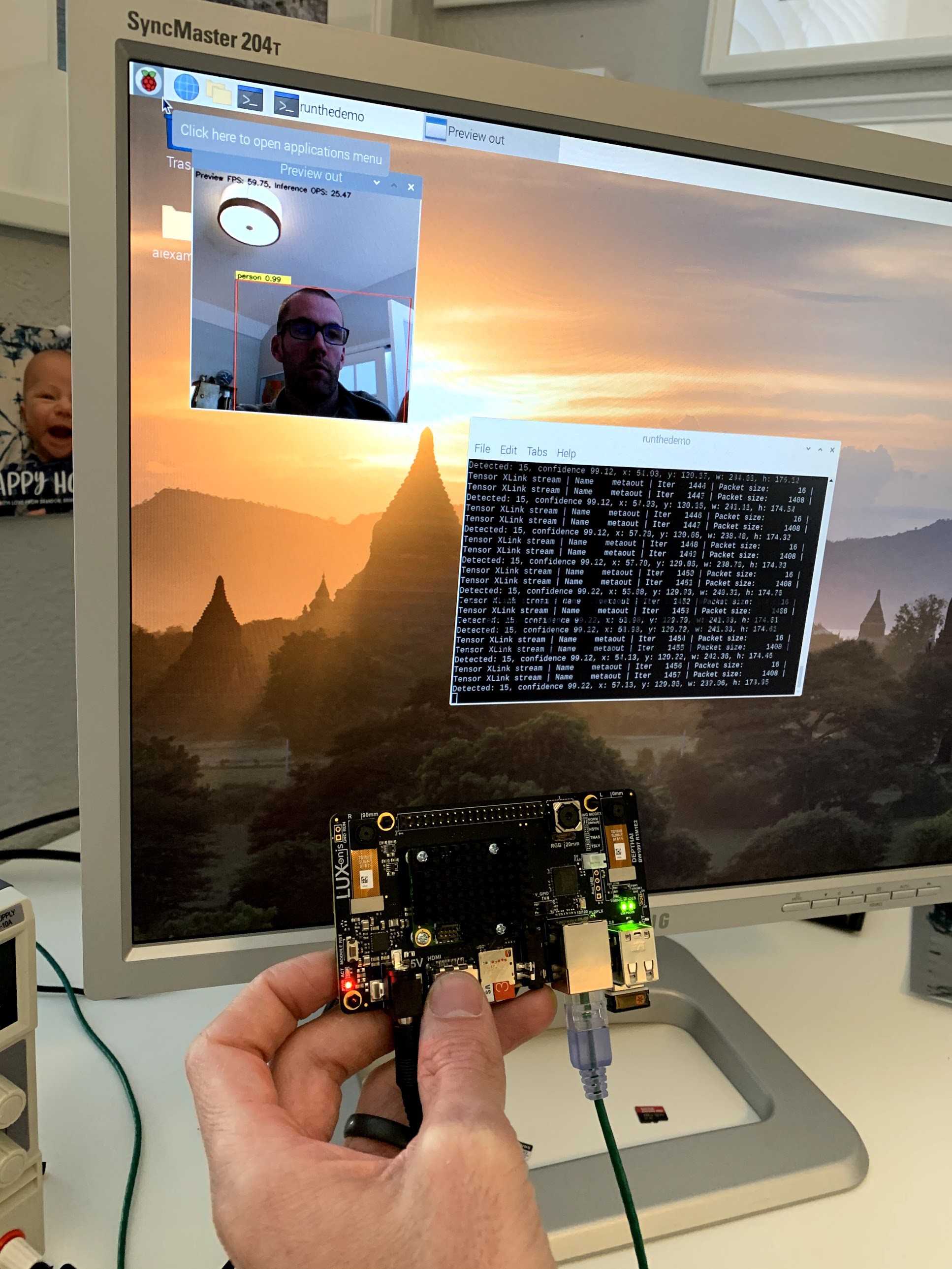

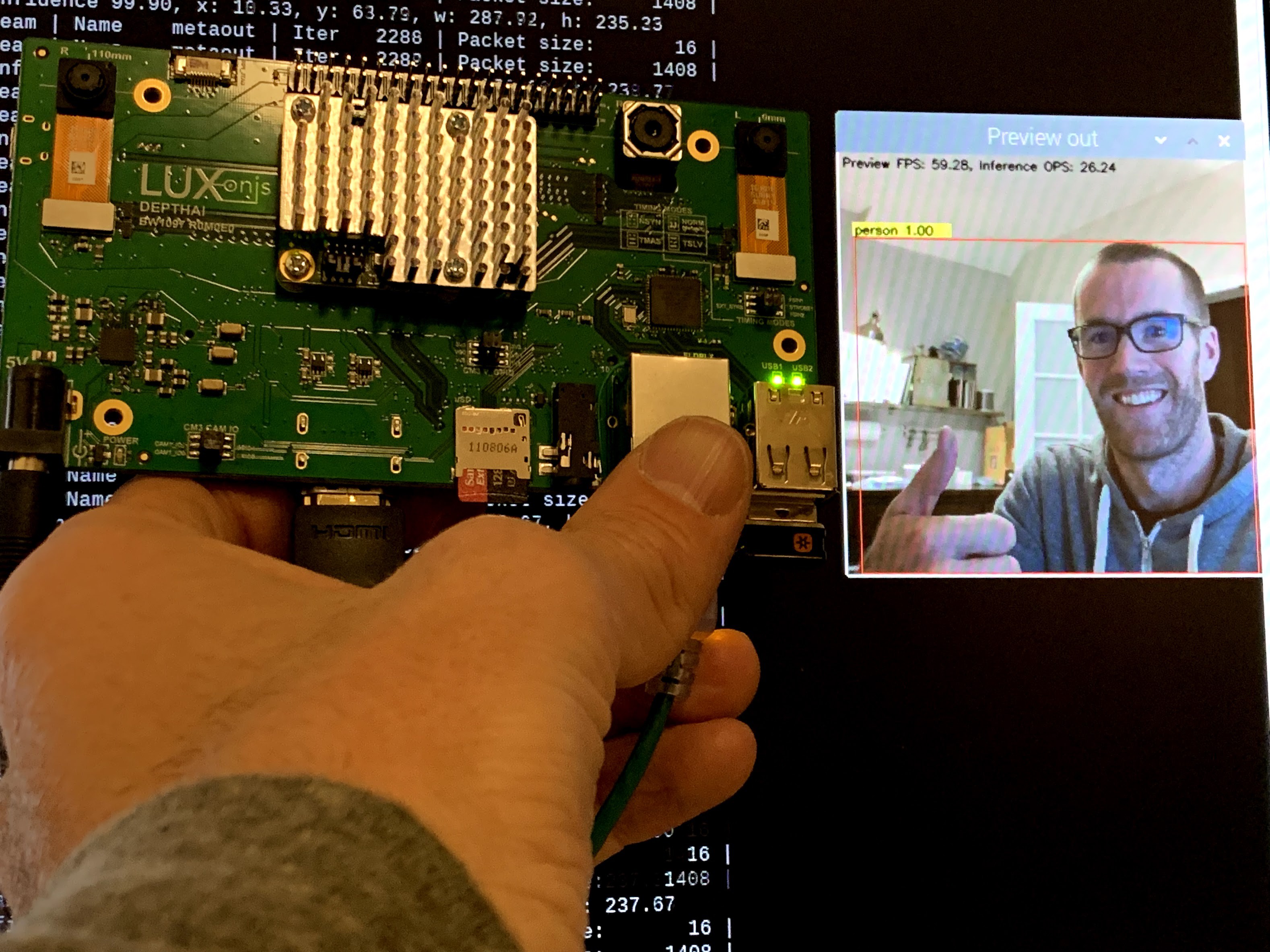

Anyways, we're pretty happy about it:

Now we're off to integrate the code for depth, filtering/smoothing, 3D projection, etc. we have running on the Myriad X already with this neural inference code. (And find out if we indeed left enough room for it!)

Cheers,

Brandon & The Luxonis Team

-

First Inference Running

09/27/2019 at 16:03 • 0 comments

Hi everyone,

So we have some great Friday-evening news (Europe time!):

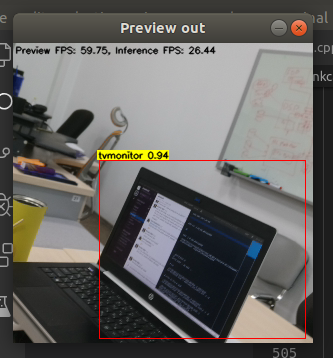

This is our first output of neural inference (in this case, MobileNet-SSD, which is the core of what we're targeting) from DepthAI.

So we're still refining, simplifying, and also profiling this AI side, but it's a great first step! And once we have all this locked down we're going to be integrating this piece (which is specifically architected to idle about 1/2 of the rest of the Myriad X to leave room for Depth work) with the Depth pieces (and smoothing, reprojection, etc.) that we have working in a separate code-base.

We also have a flurry of new form-factors that we're working on and will be ordering soon: boards that adapt our Myriad X module for other applications, such as movable camera modules that connect over FFC (flexible flat cable - think of the ribbon cables of the RPi cameras).

Best,

Brandon and the Luxonis Team

-

Raspberry Pi HAT, Compute Module, Smaller

09/17/2019 at 21:51 • 4 comments

Hi everyone!

So we've got every last bit of the RPi Compute Module version of DepthAI working, which is exciting. So now that it's verified, we made the design smaller. We had it big on the first prototype so that white-wire fixes would be easier... and we're glad we did that! We ended up with white wires for:

- Ethernet

- 3.5mm Audio

- I2C for the DSI and CSI display/camera for the Raspberry Pi.

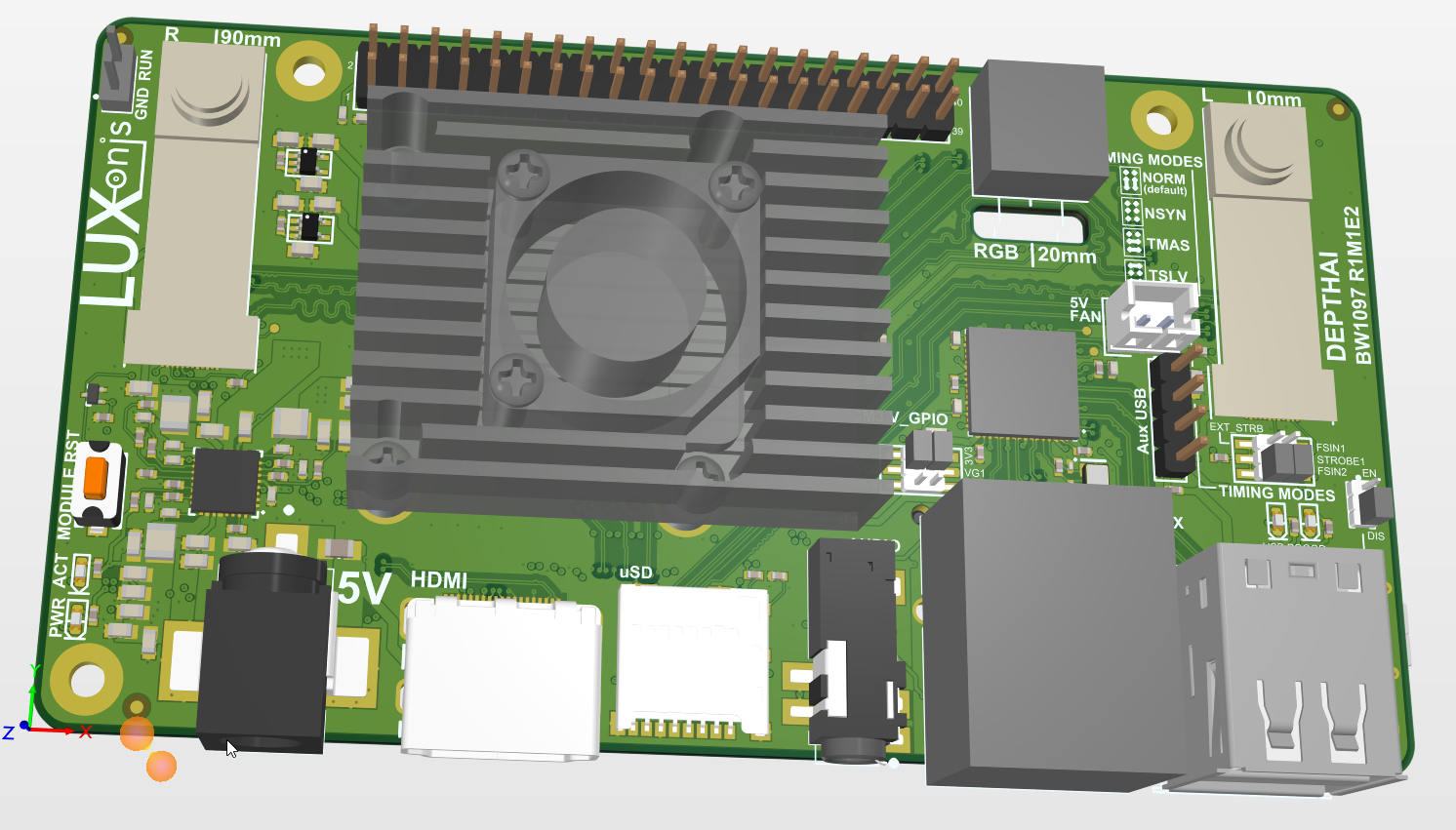

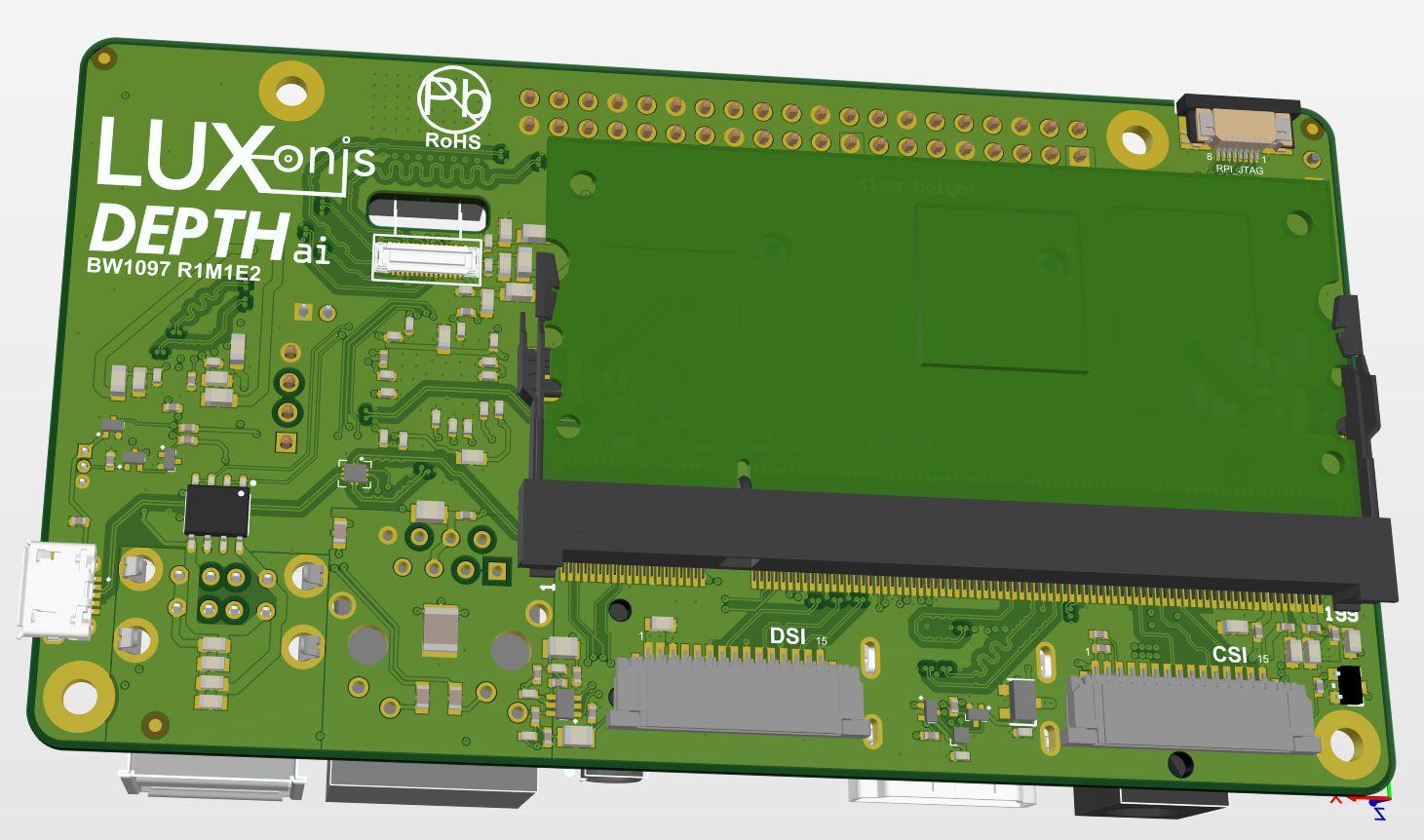

Anyways, these are all corrected, and the revised, now smaller, design was ordered on Friday! Here's what it looks like (thanks, Altium 3D view):

And now that this is ordered, we turned our sights on another incarnation of DepthAI that we think will be useful.

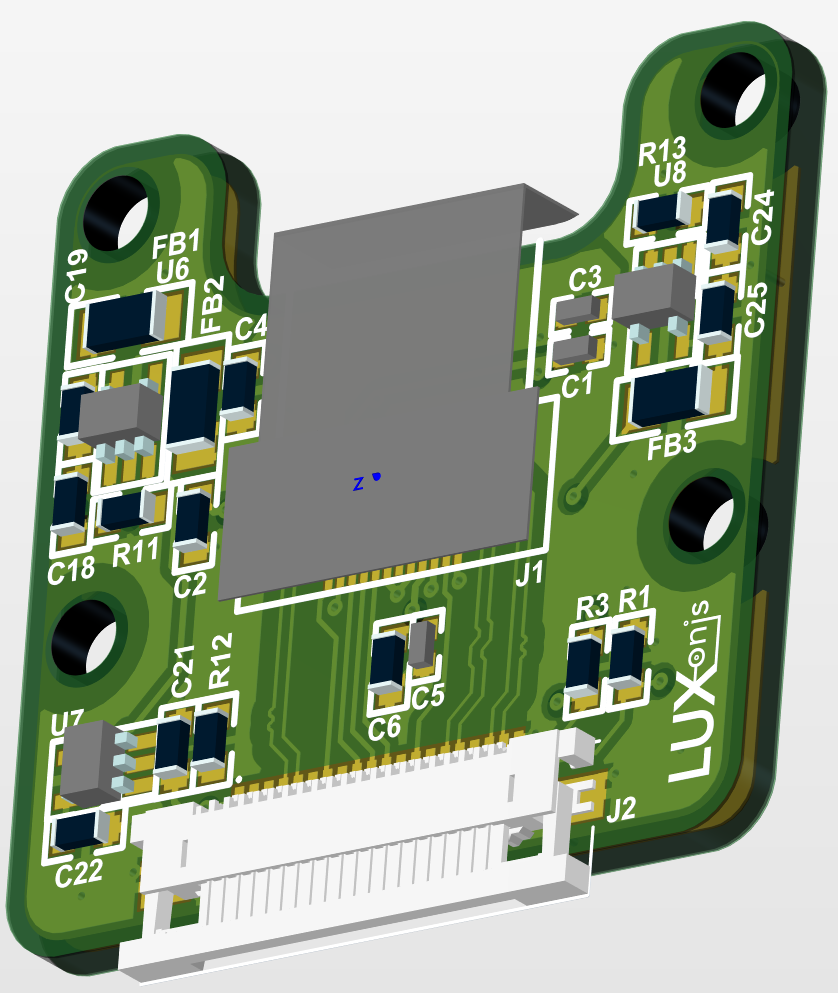

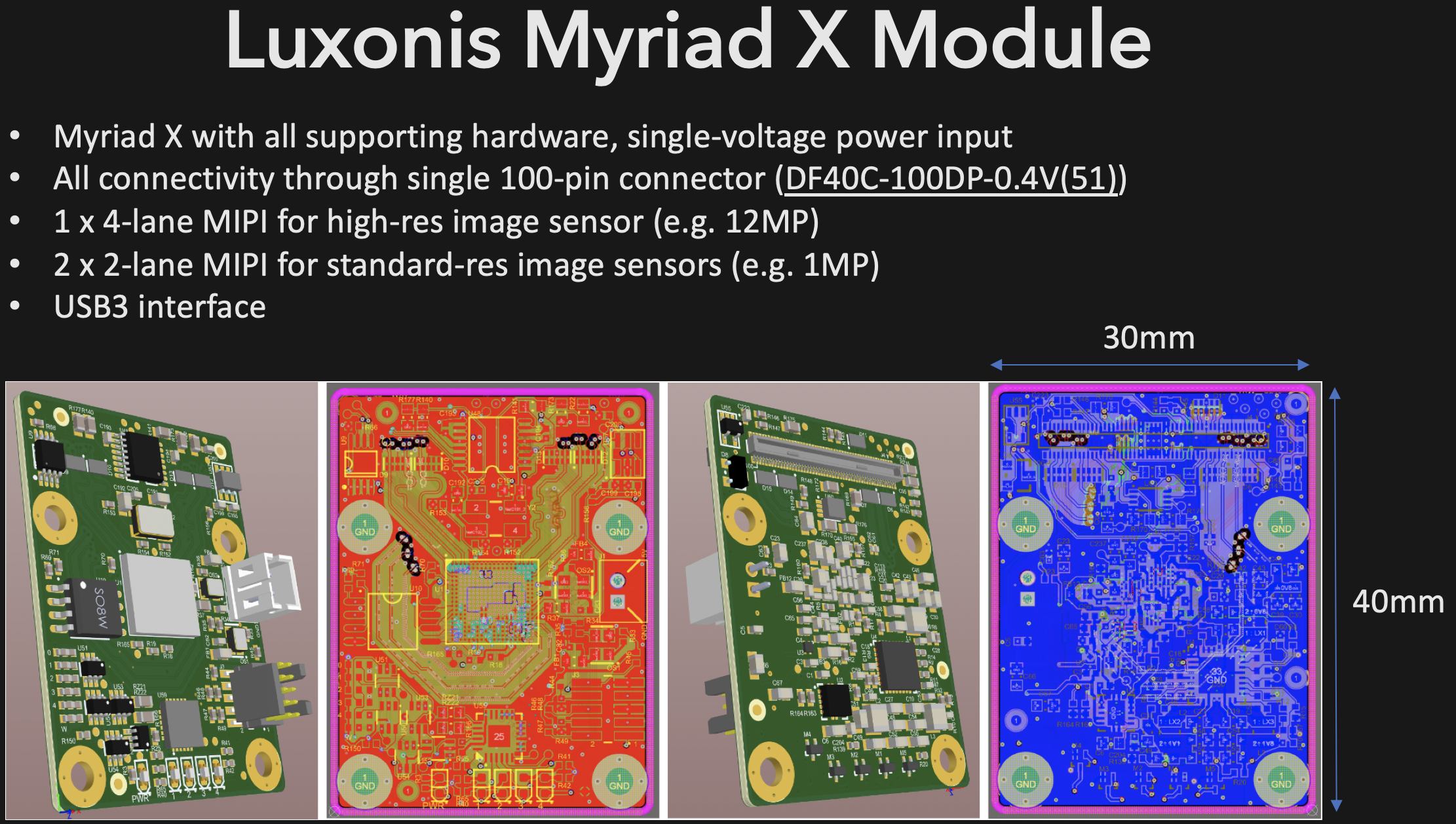

But before we dive into that: why did we make the Myriad X Module that we made, well, a module?

It's so that we - and you - can easily turn out designs that leverage it. It allows the Myriad X to be used on simple, easy, 4-layer boards (instead of the 8-layer HDI and BGA tolerancing and yield optimization that's required if you use the Myriad X directly).

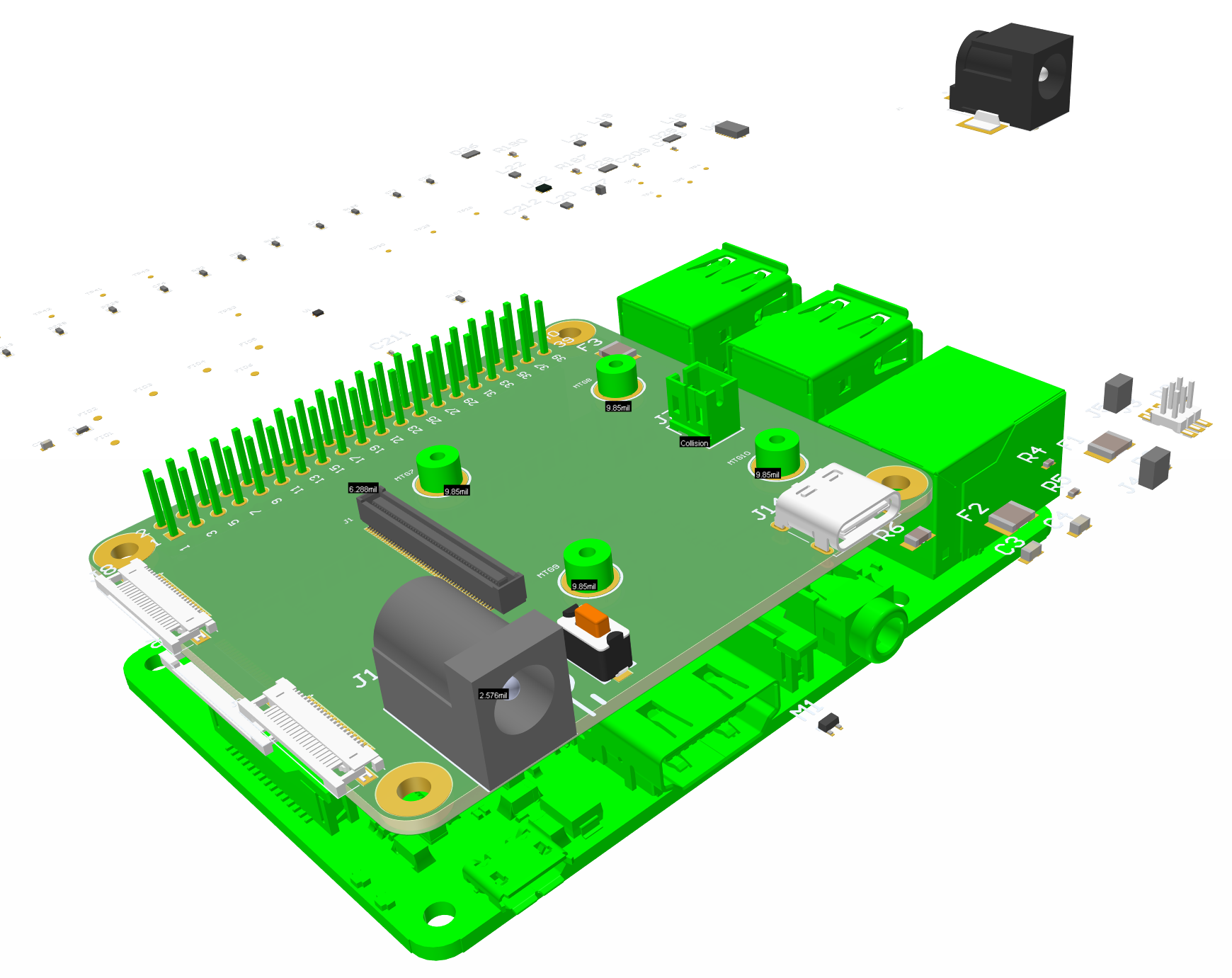

So, leveraging this module, here's the HAT that we're working on:

You can see the spot for the Myriad X Module right there on top. And then on the left are the FFC (flexible flat cable) connectors to connect to camera modules:

That's right, we're making it so the cameras can be mounted where -you- want them. We'll have some other follow-on Myriad X boards (e.g. non-RPi-specific) that take advantage of this as well. Oh, and on FFC, think of the Raspberry Pi camera's little ribbon cable... FFC is the general acronym for that type of cable.

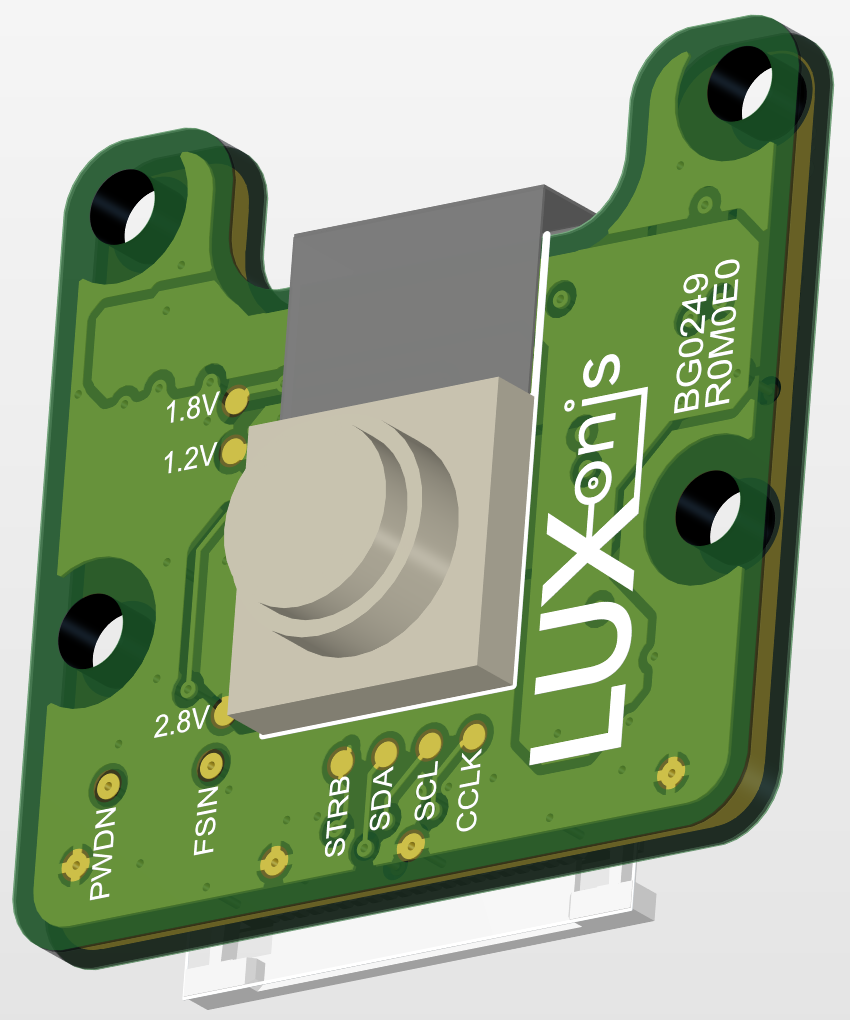

Speaking of which, here are the camera boards:

OV9282 - 720p global-shutter mono camera used for disparity depth:

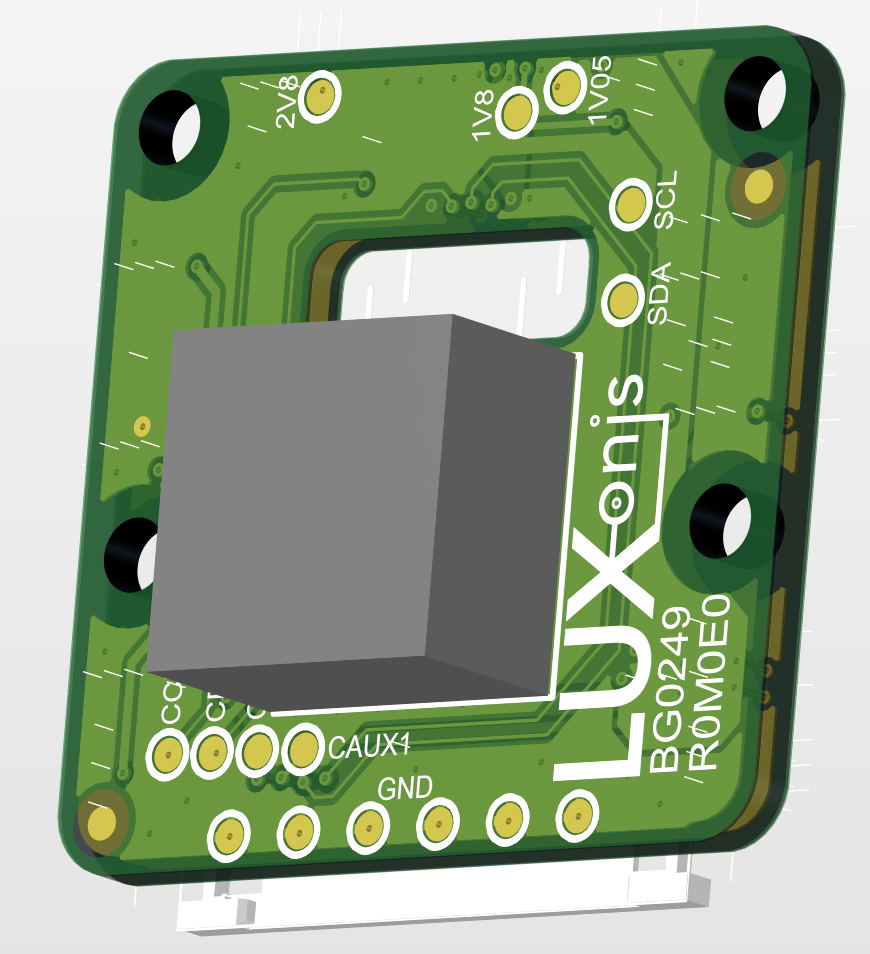

IMX378 - 12 megapixel color image sensor, supporting 4K video!

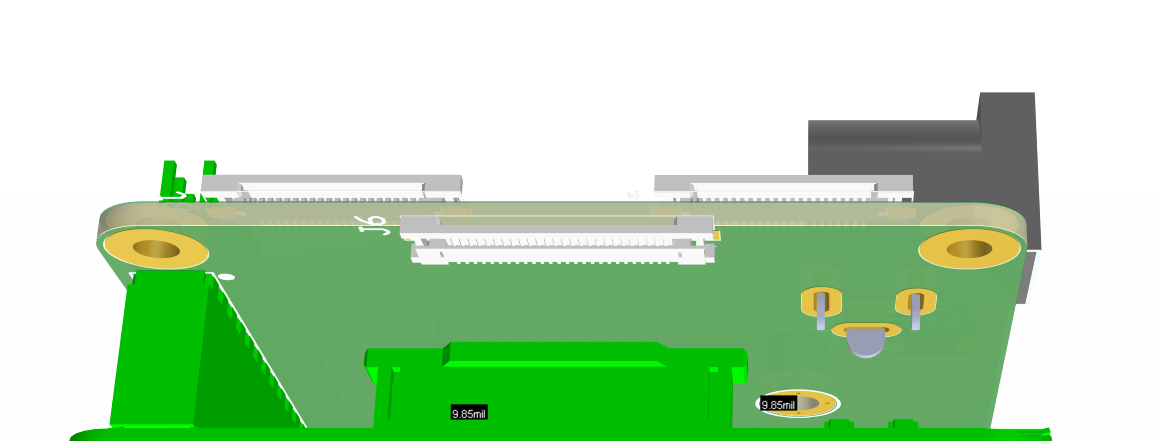

And you can see the FFCs on the back bottom of these respectively:

And as usual feel free to ping us with any questions, comments, needs or ideas!

Cheers,

The Luxonis Team

-

Our Crowd Supply Pre-Launch Page is Up

09/04/2019 at 17:23 • 0 comments

Hi everyone,

So if you're interested in buying DepthAI when it's ready, head over to Crowd Supply and sign up!

https://www.crowdsupply.com/luxonis/depthai

The pre-launch page is up, and signing up there will get you a notification when the campaign goes live.

(The advantage of Crowd Supply is it lets us do a larger-than-we-would-be-able-to-otherwise order - which helps to get the cost down for everyone ordering.)

Best,

The Luxonis Team

-

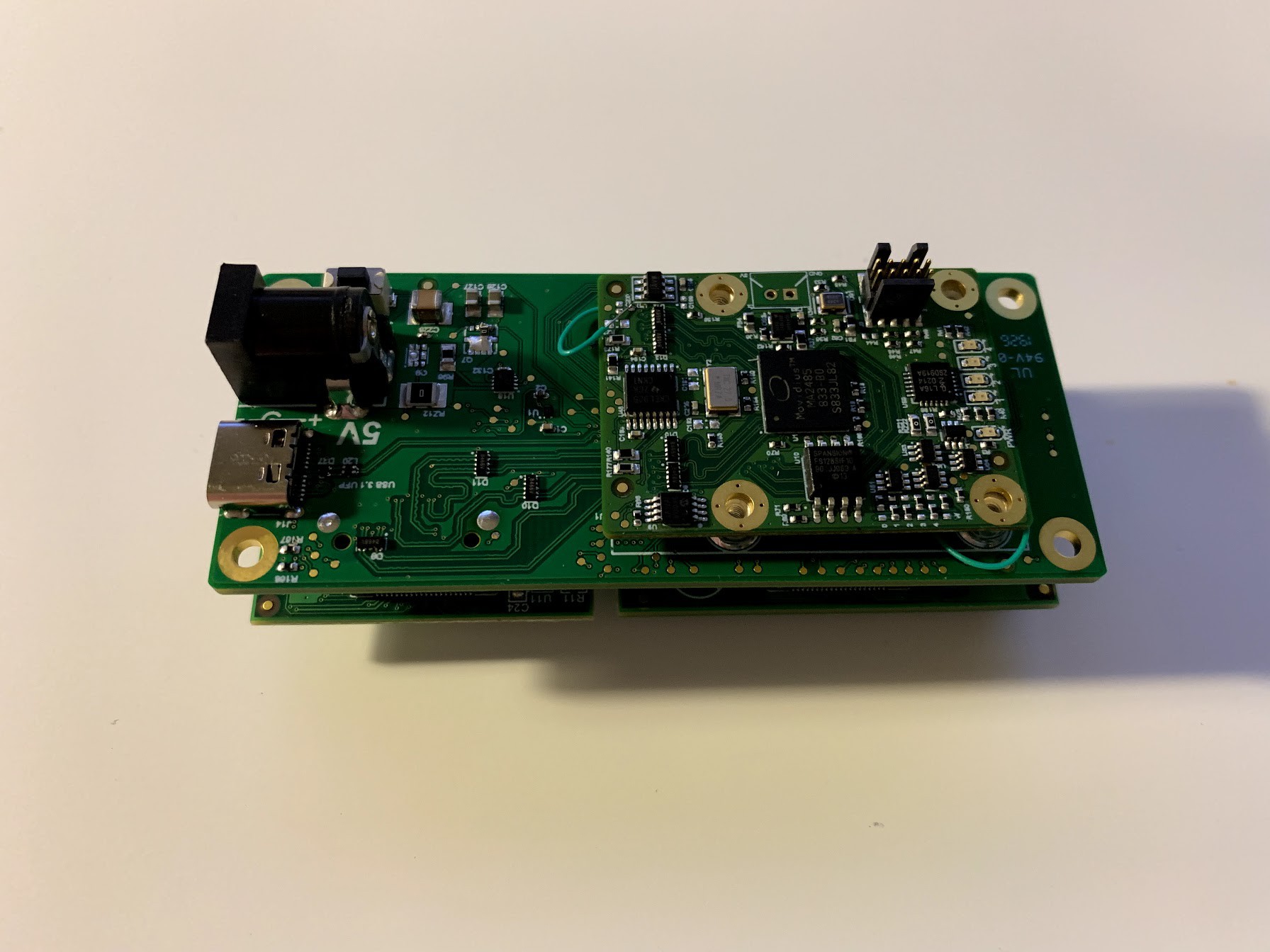

Whirlwind Hardware Arrivals

09/04/2019 at 17:17 • 0 comments

Hi everyone!

So we got a TON of hardware in over the past couple weeks, and have been heads-down testing, characterizing, etc. as well as in parallel making good strides on the firmware.

So what did we get?

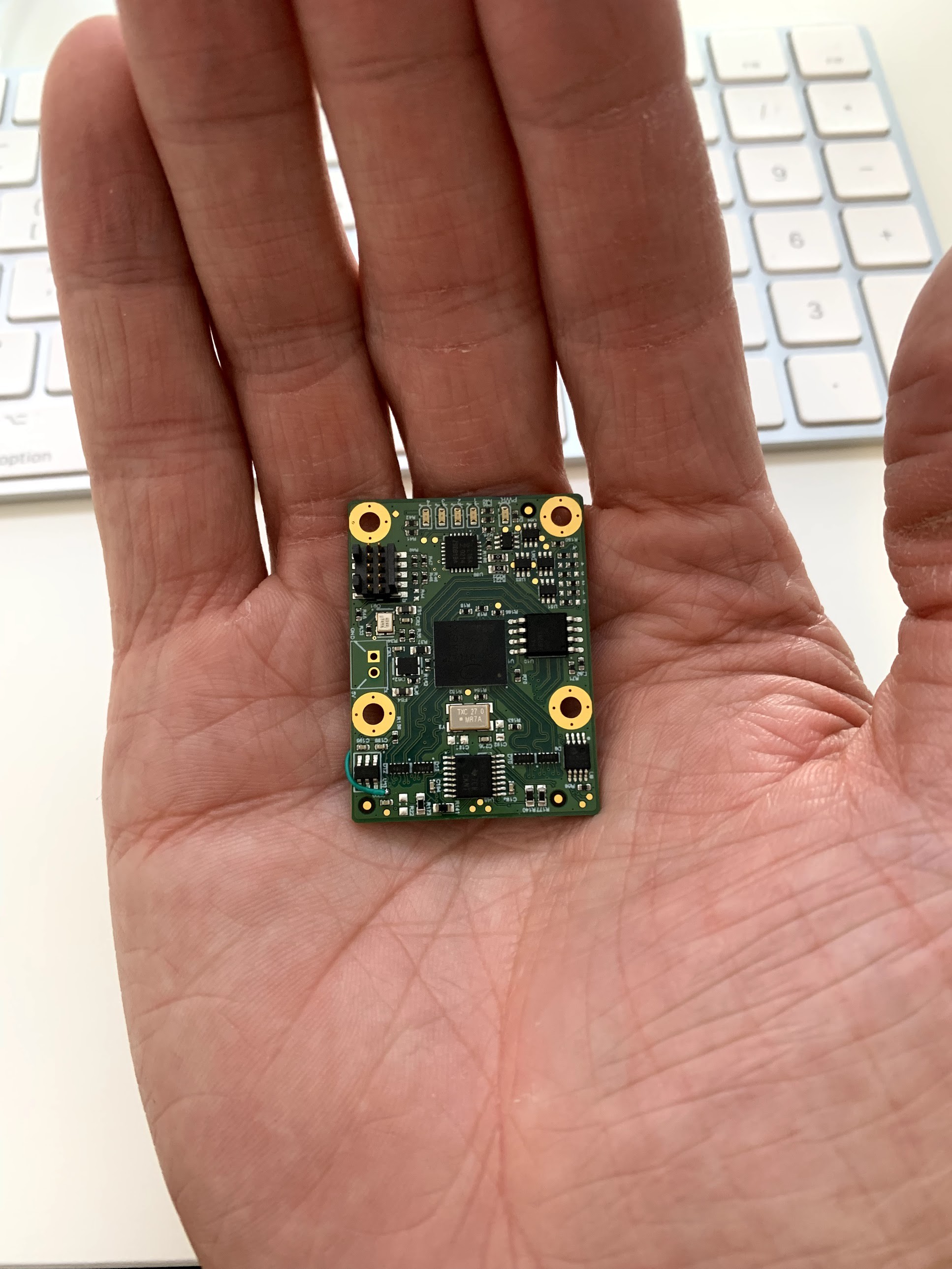

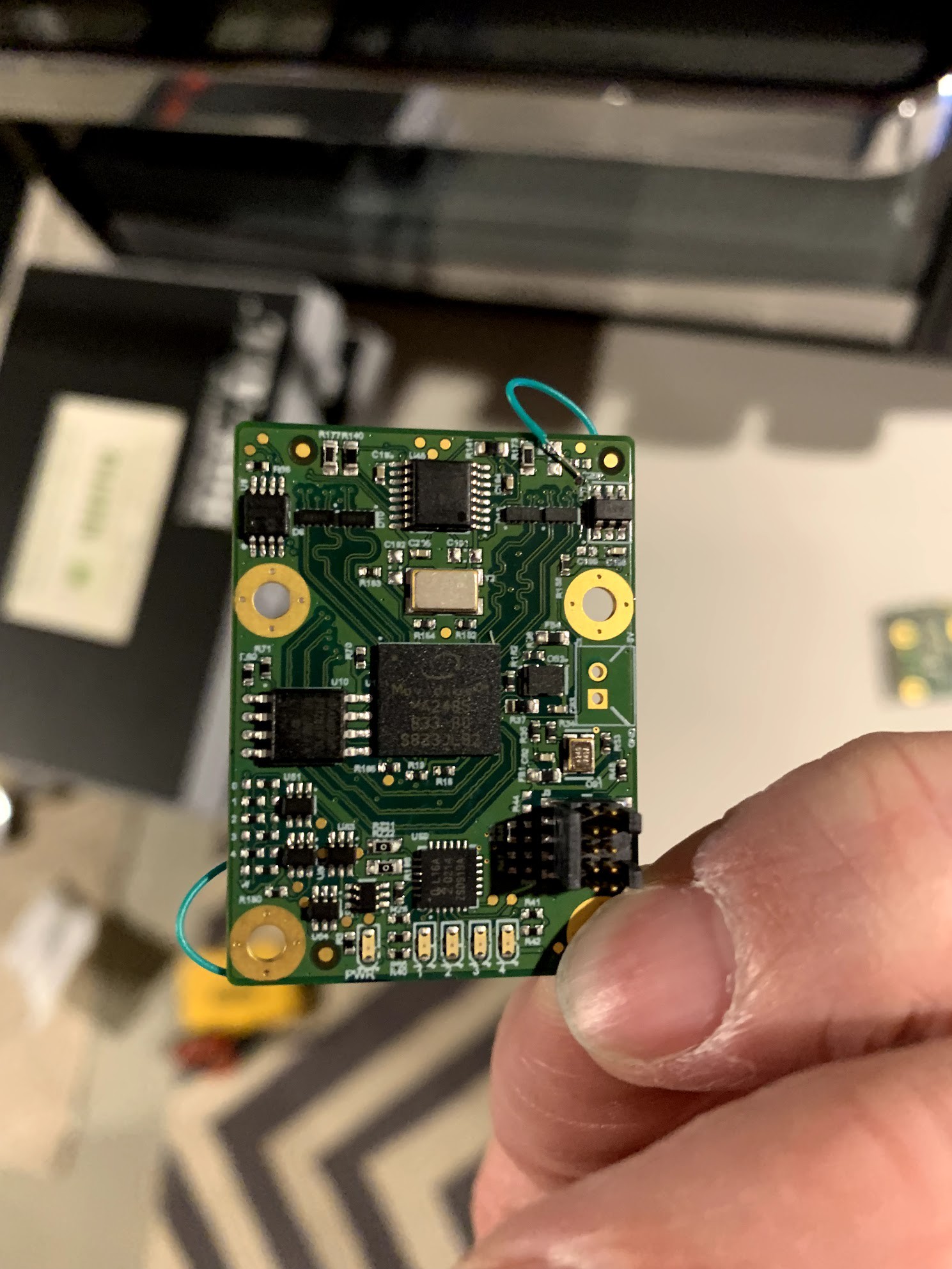

1. The Myriad X Modules

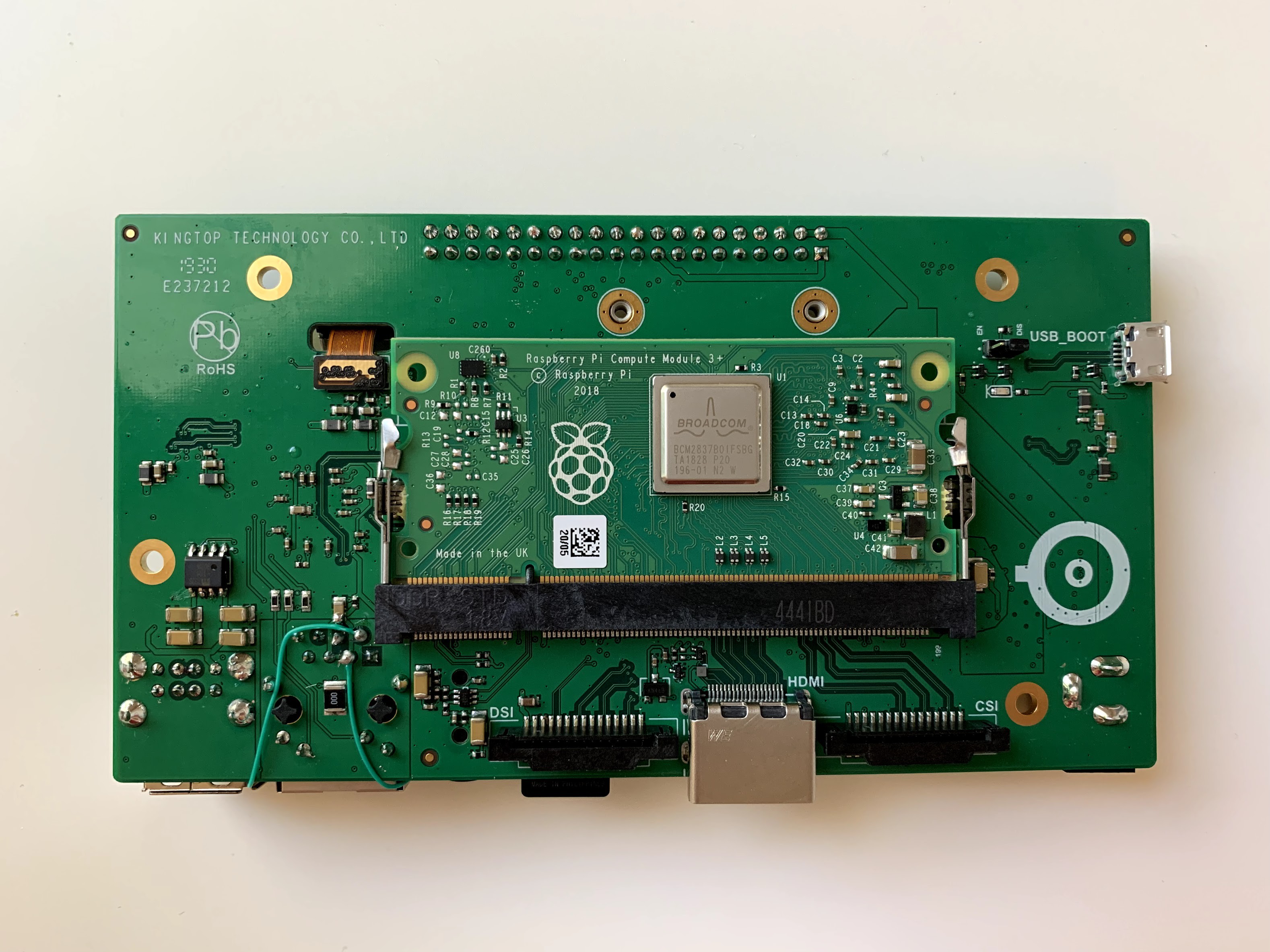

2. The first (oversized) version of DepthAI for Raspberry Pi Compute Module

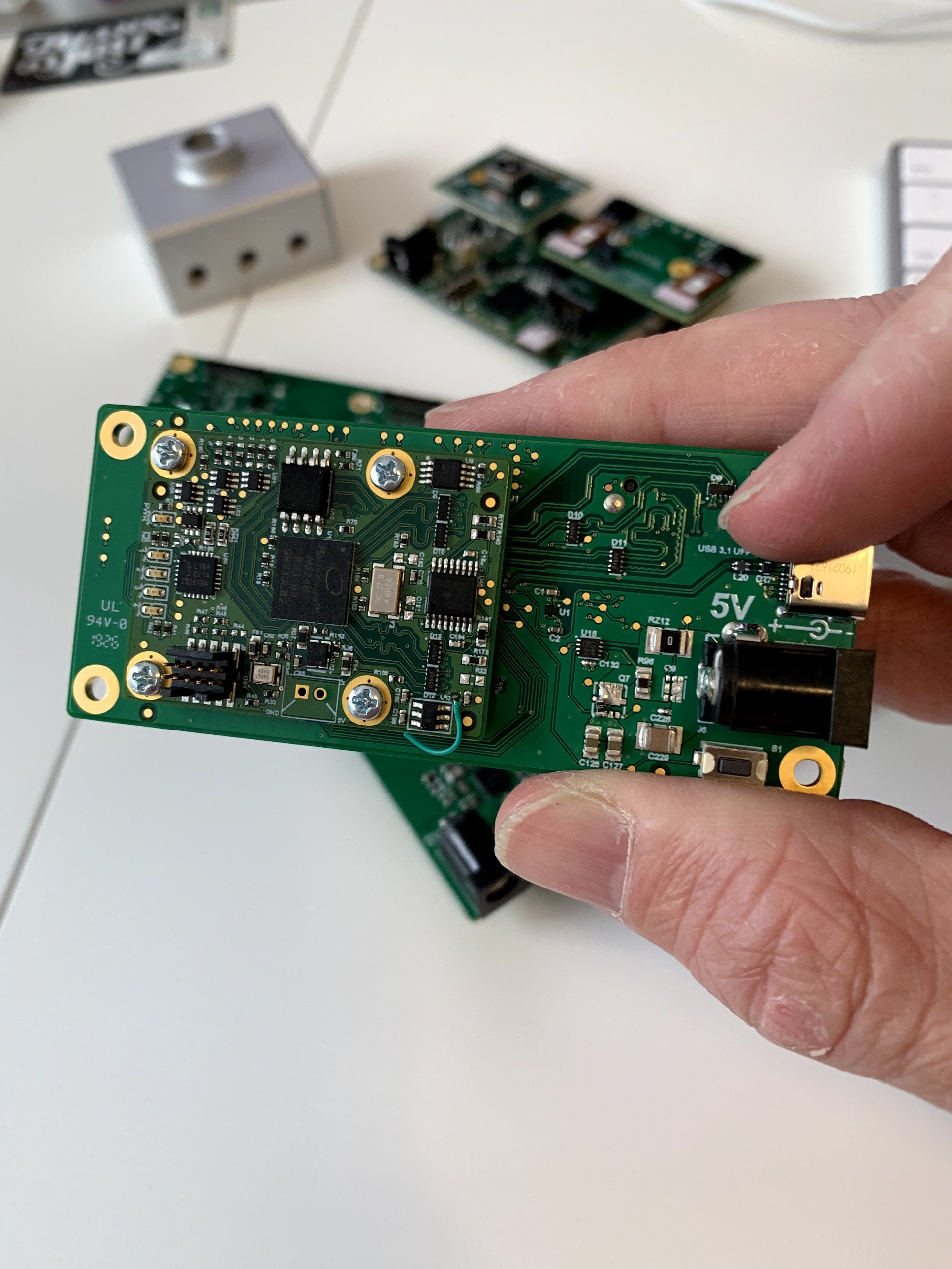

3. Our modular-ized development/test platform (which, in an earlier post, we thought had some sort of error - it doesn't... it was a PEBKAC error).

So all of these work great, which is awesome! We do have a small white-wire fix on the power-on-reset circuit on our Myriad X module as a result of simplifying that circuit and also the power distribution circuit A TON from our previous design. Easy layout fix though, which is already done and ordered. That, and the rewire makes the boards boot up well.

And on the first DepthAI for Raspberry Pi Compute Module, we apparently accidentally hit the space bar, which, in Altium, is the rotate command, when selecting 3 of the ethernet pins on the footprint. So some white wires there to correct that.

So far we've tested/verified all of the following to work as designed/desired:

- IMX378 (12MP camera, 4K video)

- 2 x OV9282 (1MP global-shutter for depth)

- HDMI (1920x1080p works well)

- Ethernet (10/100)

- USB (2x external, and 1x internal to Myriad X Module)

- microSD (including boot with and without NOOBS)

- Headphone jack (we initially populated the wrong line driver... got an open-collector variant instead of the push-pull, which we then thought was an error w/ our implementation of a custom Linux Device Tree... so that was a couple days of head-banging trying to get Linux to behave - and it turned out our Linux Device Tree was right all-along!)

We've yet to verify the following (it's on the docket for today):

a. Raspberry Pi Camera

b. DSI Display functionality

Anyways, here's the initial version of DepthAI software/firmware running on this board. It ran first try with no issues, errors, or warnings, which was super satisfying:

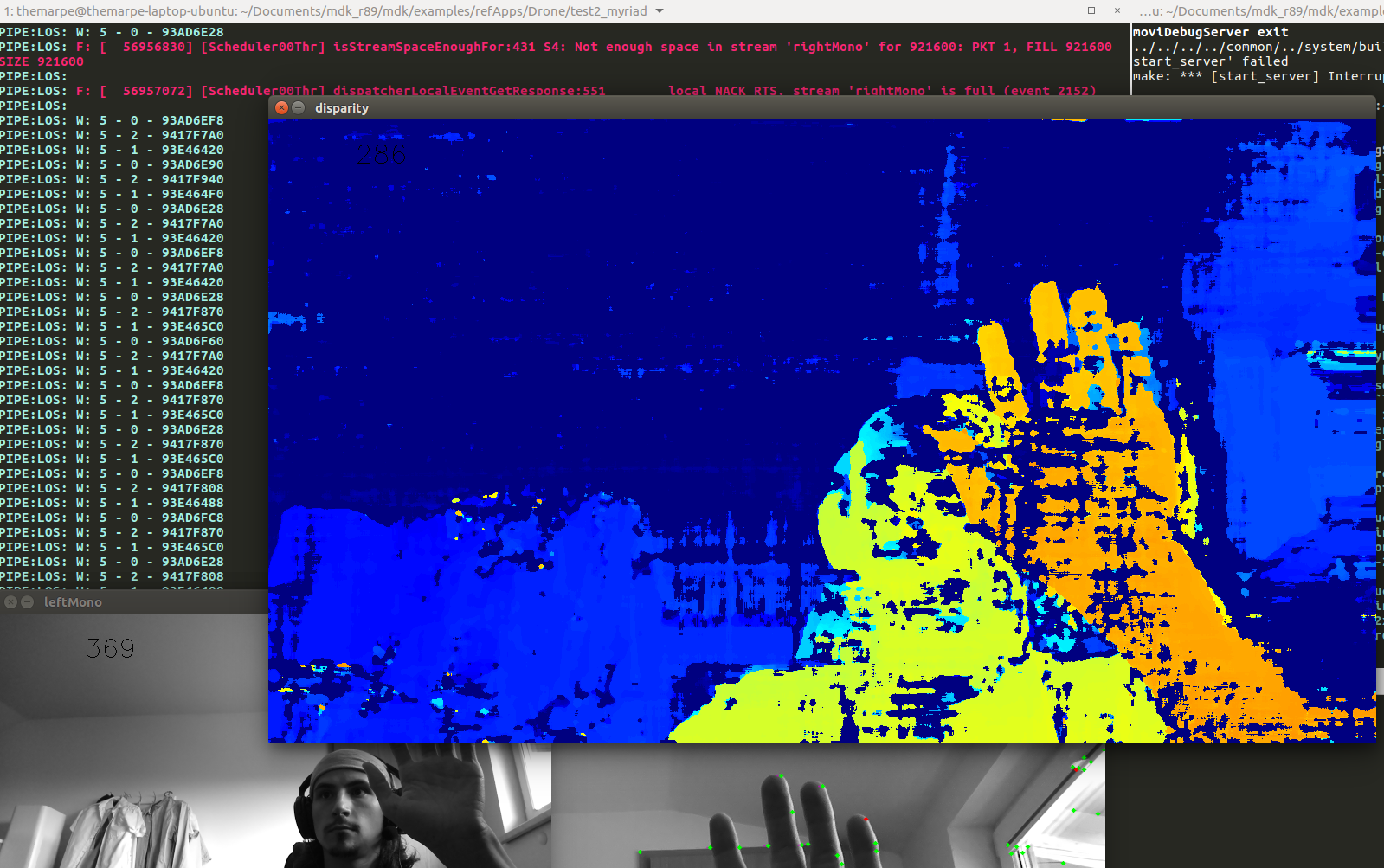

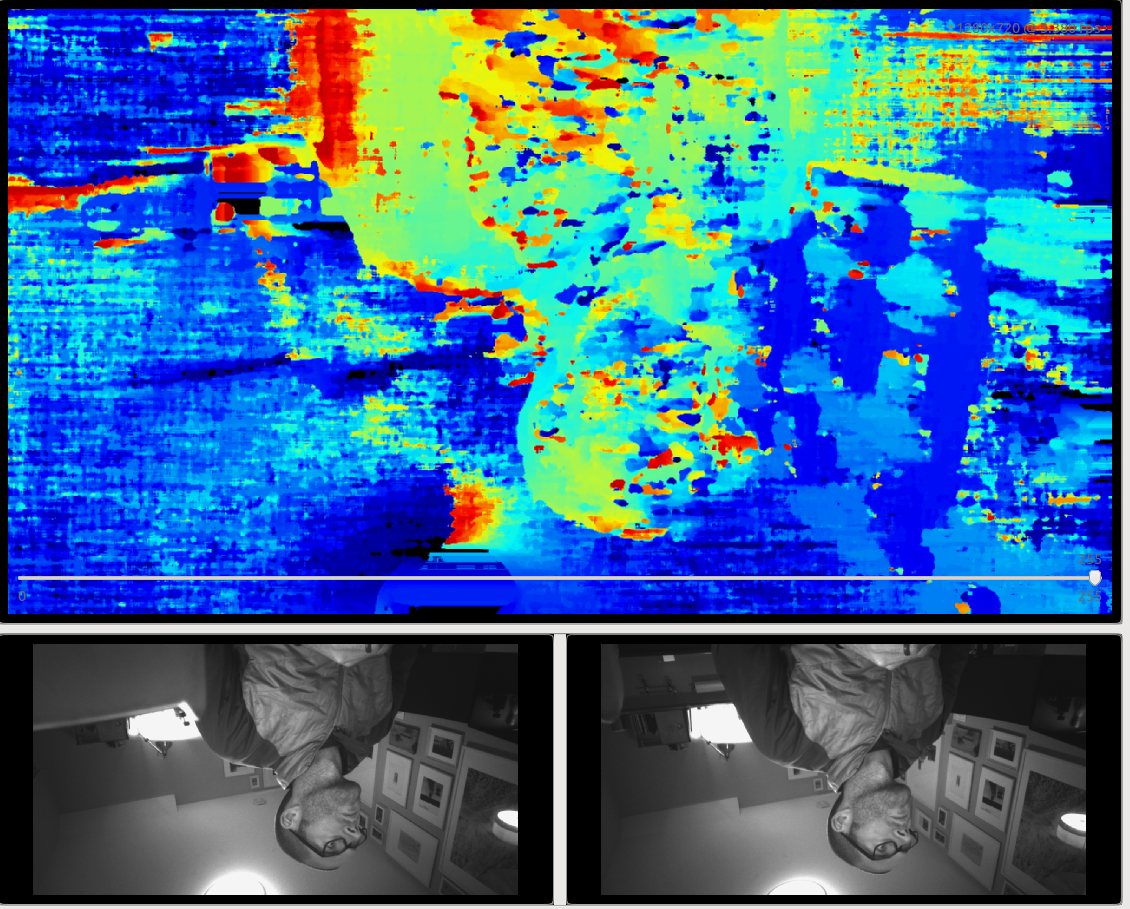

And on the firmware side, we're hard at work getting depth reprojection (which is what produces a point cloud like below) to run directly on the SHAVES in the Myriad X, as well as integrating depth filtering/etc. directly on the SHAVES as well. Below it is running off of our DepthAI Development Board, with OpenGL rendering on the host, for now.

And the second image above shows depth filtering experiments being run directly on the Myriad X SHAVES.
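For readers unfamiliar with depth reprojection, here is a minimal host-side sketch of the standard pinhole-camera version of the idea: each depth pixel is back-projected into a 3D point. This is not the SHAVE firmware, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and the tiny depth map are made-up values for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def reproject(depth: Sequence[Sequence[float]],
              fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project a depth map (row-major, depth along Z) into 3D points.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: pixel row
        for u, z in enumerate(row):      # u: pixel column
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


if __name__ == "__main__":
    # Tiny 2x2 depth map in meters; 0.0 marks pixels with no valid depth.
    depth_map = [[0.0, 2.0],
                 [1.0, 0.0]]
    for point in reproject(depth_map, fx=2.0, fy=2.0, cx=0.0, cy=0.0):
        print(point)
```

The resulting list of points is what a renderer (OpenGL on the host, in the post's current setup) would draw as the point cloud.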

Cheers, and more to come!

The Luxonis Team

-

Bad News

08/28/2019 at 23:23 • 0 comments

So we've been digging into the firmware on the Myriad X as we implement more of the core of DepthAI and we just discovered a show-stopper that we didn't anticipate.

First, the bad news: We won't have the 5x increase in FPS that we were expecting compared to the NCS2 with the Raspberry Pi. As of now we aren't sure what the framerate will be, but it's not looking good and could actually be slower than the NCS2, particularly when depth is enabled in addition to the neural inference.

Of course the platform still has the benefit of providing depth information, offloading the whole datapath from the main CPU, all of those advantages.

But it may actually have a disadvantage compared to the NCS2 in terms of framerate.

We'll keep everyone posted as we learn more. We'll be experimenting over the next ~2 months to see what the framerate is direct from image sensor, and see if it's better or worse than the 8FPS seen with NCS2 + MobileNet-SSD + Raspberry Pi 3B+. We're of course hoping for better, but at this point we're unsure.

Best,

The Luxonis Team

-

Myriad X Modules | Good News and Bad News

08/21/2019 at 06:12 • 2 comments

Good news:

Our Myriad X modules did ship, they arrived, and they do work!

The bad news:

They look like this:

So in simplifying boot sequencing and the power rails required, we swapped some of the timing and control signals... which are fixed above w/ the white-wires (well, green wires, but you know what I mean).

Also, funnily enough, JTAG isn't communicating... so we're debugging that as well. The devices are USB-booting however and running (uncalibrated) disparity depth from the global-shutter sensors. (The color sensor isn't tested yet.)
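As background on disparity depth: for a rectified, calibrated stereo pair, depth follows from disparity as Z = f · B / d. The sketch below shows that textbook relation only; the focal length and baseline are made-up values, not DepthAI's, and the uncalibrated output mentioned above would not yet give metric depth this way.

```python
def disparity_to_depth(disparity_px: float,
                       focal_length_px: float,
                       baseline_m: float) -> float:
    """Depth in meters from stereo disparity, using Z = f * B / d.

    Assumes a rectified, calibrated pair. A non-positive disparity means
    no match (or a point at infinity), so infinity is returned.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # HYPOTHETICAL camera parameters, chosen only for illustration.
    focal_length_px = 800.0
    baseline_m = 0.1
    for disparity in (80.0, 40.0, 20.0):
        depth = disparity_to_depth(disparity, focal_length_px, baseline_m)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance, which is why depth resolution degrades for far-away objects.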

And currently this board has a lower framerate than our BW0235... so we're investigating the root of that as well. We're thinking it has to do w/ the mix-up on the boot/reset signaling, which is causing some code to have to repeatedly time out - but either way we'll find out soon!

Cheers,

The Luxonis Team

-

Myriad X Modules Shipped!

08/19/2019 at 23:30 • 0 comments

Hi again!

So our first Myriad X modules _finally_ shipped! So we're expecting to have these in-hand tomorrow, Tuesday August 20th.

So back-story on these is that we ordered them on June 26th w/ 3-week turn from MacroFab (who we really like, and have used a lot), and the order was unlucky enough to fall in w/ 2 other orders that were subject to a bug in MacroFab's automation (which is super-impressive, by the way).

So what was the bug? (You may ask!)

Well, their front-end and part of the backend were successfully initializing all the correct actions (e.g. components order, bare PCB order, scheduling of assembly) at the correct times. However, the second half of the backend was apparently piping these commands straight to /dev/null, meaning that despite the system showing and thinking that all the right things were being done, nothing was actually happening.

So on July 15th, when the order was supposed to ship, and despite our every-2-day prodding up until then, it was finally discovered that the automation had done nothing, at all. So then this was debugged, the actual status was discovered, and the boards were actually started around July 22nd.

Fast-forward to now, and this 3-week order is now an 8-week order - which should arrive tomorrow!

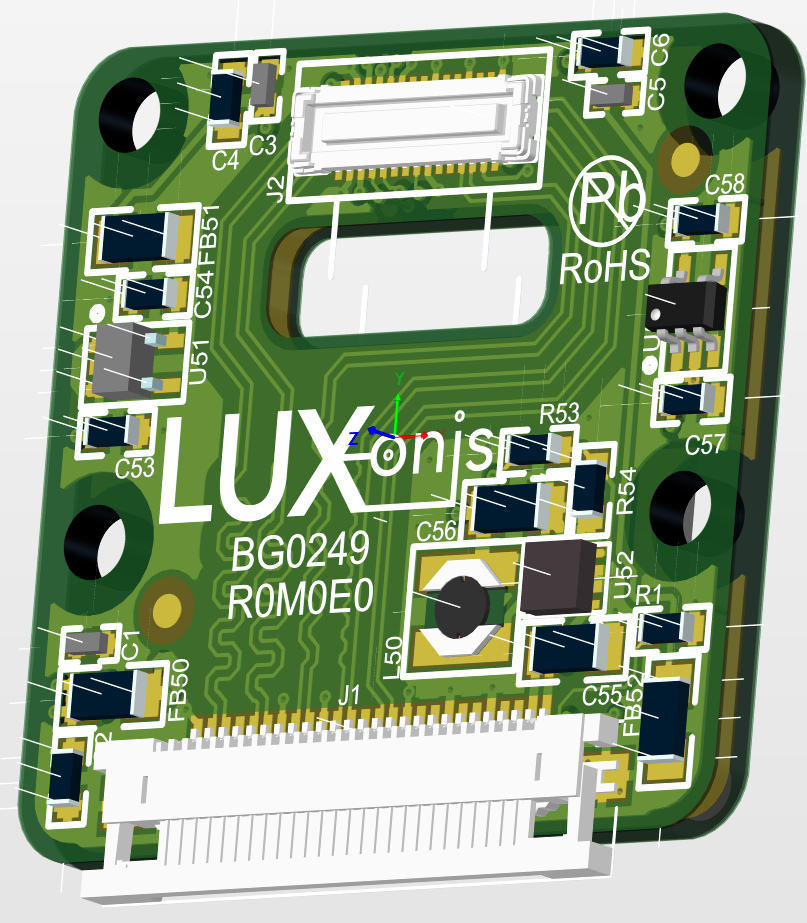

Unfortunately, the only photo we got of the units was one from a confirmation that the JTAG connector was populated in the right orientation (and it was), so here's a reminder of what the module looks like, rendered in Altium:

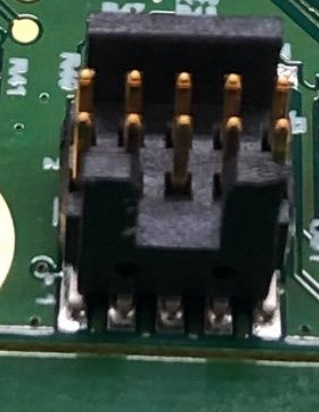

And for good measure, the only photo we have of the boards so far, which is of the JTAG connector:

So hopefully tomorrow we'll have working modules! And either way we'll have photos to share.

Cheers!

The Luxonis Team

Brandon
