-

Commute Guardian Forum Up!

02/11/2019 at 00:16 • 0 comments

Hey gals and guys!

The Commute Guardian forum is now up! Come join the conversation about saving bikers' lives:

https://discuss.commuteguardian.com/

Cheers,

Brandon

-

CommuteGuardian UI/UX and Rider Early-Warning

02/10/2019 at 23:08 • 0 comments

Hi everyone,

Quick background: AiPi is a useful product we're developing, and sharing, on our path to making the commuteguardian.com product. Here's some background on that product.

I wanted to share how the rider is warned before the horn goes off. The premise is that the horn should never have to go off: only the MOST distracted drivers won’t notice the ultra-bright strobes, which activate well before the horn does.

However, the horn WILL go off should the driver not respond to the strobes. It will activate early enough that the driver still has enough time/distance to respond and not hit you.

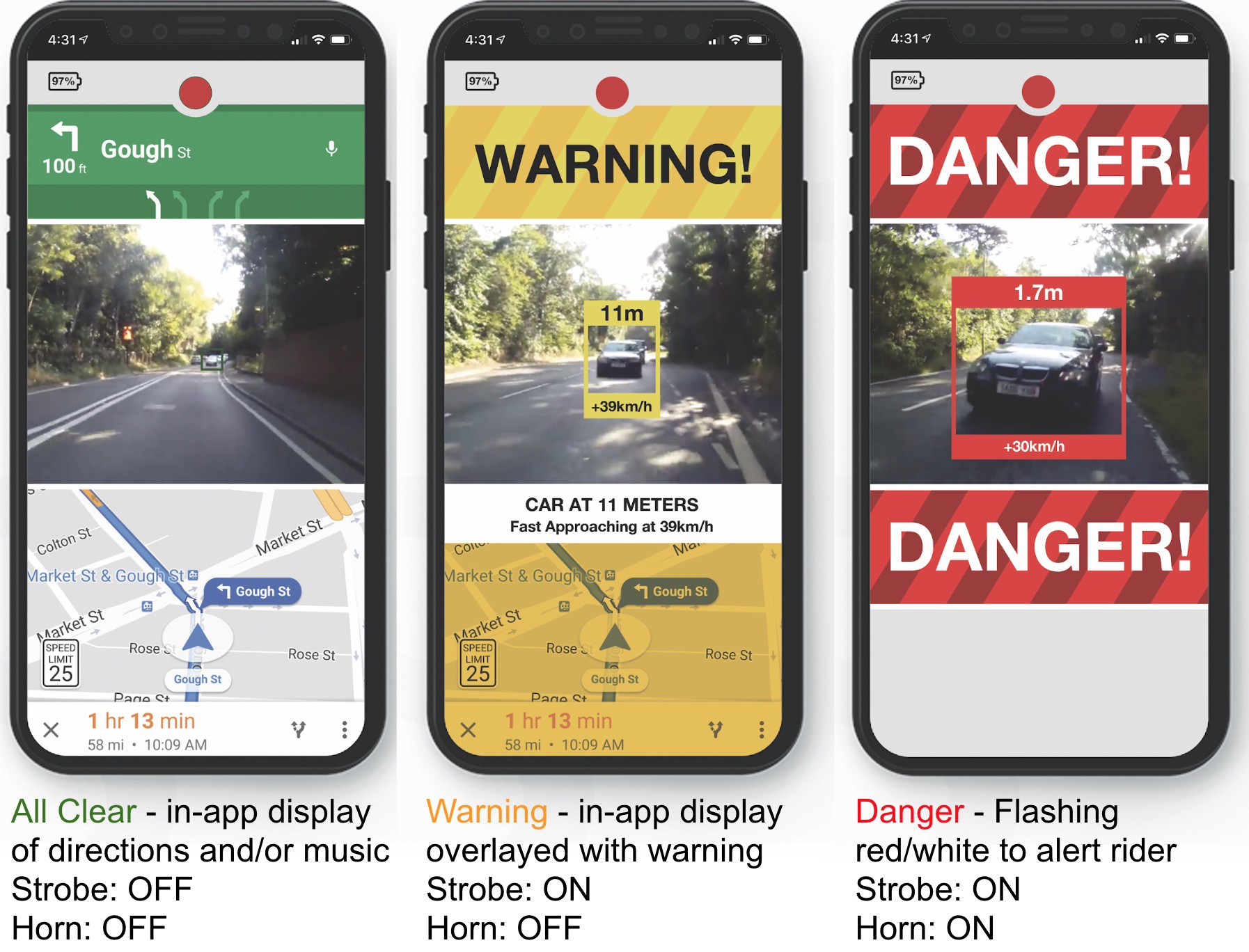

To warn the rider, there are two separate systems. The first one is an optional user interface via smartphone, which we’ll discuss first as it paints the picture a little easier:


So this gives you the states. In normal operation, the app is recording and shows you the map. When there’s a warning state, the ultra-bright strobes come on, and there’s an overlay to make you aware of the elevated danger.

An example of this is a vehicle on your trajectory at a high rate of speed, which is still far away.

And if that vehicle gets closer and the strobes haven’t deterred it from an impact trajectory, the horn will sound, and you’ll be visually warned.

So the -second- system of warning the rider doesn’t rely on this optional (although cool) app.

It’s simply an audible alert that the biker will hear (but the car likely won’t) that will sound in the WARNING state, to alert the biker of the danger, and hopefully bring them into a state of being able to avoid the DANGER state (moving over, changing course, etc.)
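To make the states above a bit more concrete, here's a minimal sketch of which outputs go with which state. The names (`RiderState`, `outputs_for`, the dictionary keys) are made up for illustration and are not from the actual firmware. Two entries are assumptions rather than things stated in the post: that the strobes stay on in the DANGER state, and that the rider tone is only used in WARNING.

```python
from enum import Enum


class RiderState(Enum):
    """The three states described in the post (names are illustrative)."""
    NORMAL = "normal"
    WARNING = "warning"
    DANGER = "danger"


# Which outputs are active in each state, paraphrased from the post.
# Assumption: strobes remain on in DANGER (the post only says the horn is added).
# Assumption: the rider-only tone is limited to WARNING, since the horn is
# already audible to the rider in DANGER.
_OUTPUTS = {
    RiderState.NORMAL: {
        "strobe": False, "horn": False, "rider_tone": False, "app_overlay": None,
    },
    RiderState.WARNING: {
        "strobe": True, "horn": False, "rider_tone": True, "app_overlay": "warning",
    },
    RiderState.DANGER: {
        "strobe": True, "horn": True, "rider_tone": False, "app_overlay": "danger",
    },
}


def outputs_for(state: RiderState) -> dict:
    """Return a copy of the output settings for the given state."""
    return dict(_OUTPUTS[state])


if __name__ == "__main__":
    for state in RiderState:
        print(state.name, outputs_for(state))
```

Keeping the mapping in one table means the optional app and the light itself could read the same state without duplicating the logic.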

Thoughts? [Feel free to add comments!]

Best,

Brandon

-

AiPi.io

02/10/2019 at 23:00 • 0 comments

Hi everyone!

So we're working on getting AiPi.io up and running (it's currently/temporarily at aipiboard.com). It will be the home for all of this, and the overall goal will be to allow folks working in embedded machine vision/learning or needing capability there, to know the hardware/firmware options (there are so many).

We're hoping to make it easy to leverage the pile of research/work we've put into figuring out everything needed to make the commuteguardian.com product (as far as we've gotten so far).

And then also provide a place for folks to express their needs, and maybe we can help to meet those needs by spinning boards/training networks/etc.

We'll still of course update here. Because, you know, hackaday is awesome. That will just give folks who -really- want to dig into what we're doing, a place to do so.

Best,

Brandon

-

Commute Guardian Firmware/Software Prototype Working!

02/08/2019 at 18:12 • 7 comments

Hey guys and gals!

So the 'why' of the AiPi (that satisfyingly rhymes) is we're actually shooting for a final product which we hope will save bicyclists' lives, and help to make bike commuting possible again for many.

Doing all the computer vision, machine learning, etc. work I've done over the past year, it struck me (no pun intended) that there had to be a way to leverage technology to help solve one of the nastiest technology problems of our generation:

A small accident as a result of texting while driving, a 'fender bender', often ends up killing or severely injuring/degrading the quality of life of a bicyclist on the other end.

This happened to too many of my friends and colleagues last year. And even my business partner on this has been hit once, and his girlfriend twice.

These 'minor' accidents (were they to be between two cars) resulted in shattered hips, broken femurs, shattered disks - and then protracted legal battles with the insurance companies to pay for any medical bills.

So how do we do something about it?

Leverage computer vision + machine learning to be able to automatically detect when a driver is on a collision course with you - and stuff that functionality into a rear-facing bike light. So the final product is a dual-camera, rear-facing bike-light, which uses object detection and depth mapping to track the cars behind you - and know their x, y, and z position relative to you (and the x, y, and z of their edges, which is important).

What does it do with this information?

It's normally blinking/patterning like a normal bike light, and if it detects danger, it does one of two actions, and sometimes both:

1. Starts flashing an ultra-bright strobe.

2. Honks a car horn.

So case '1' occurs when a car is on your trajectory, but has plenty of time/distance to respond. An example is rounding a corner, where their arc intersects with you, but they're still at a distance. The system will start flashing, to make sure that they are aware of you.

And if the ultra-bright strobes don't work, then the system will sound the car horn with enough time/distance left (based on relative speed) for the driver to respond. The car horn is key here, as it's one of not-that-many 'highly sensory compatible inputs' for a driving situation. What does that mean? It nets the fastest possible average response/reaction time.
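As a rough illustration of the "enough time/distance left (based on relative speed)" idea, here's a minimal sketch that picks a response from a time-to-collision estimate. This is not the actual CommuteGuardian logic: the function name, the time-to-collision approach, and both thresholds (`warn_ttc_s`, `danger_ttc_s`) are assumptions chosen only to show the shape of the decision.

```python
def classify_threat(
    distance_m: float,
    closing_speed_mps: float,
    on_collision_course: bool,
    warn_ttc_s: float = 6.0,    # hypothetical threshold for the strobes
    danger_ttc_s: float = 2.5,  # hypothetical threshold for the horn
) -> str:
    """Return 'normal', 'warning' (strobe) or 'danger' (horn)."""
    # A car that is not on our trajectory, or not closing, is not a threat.
    if not on_collision_course or closing_speed_mps <= 0:
        return "normal"

    # Time until the car reaches us if nothing changes.
    time_to_collision_s = distance_m / closing_speed_mps

    if time_to_collision_s <= danger_ttc_s:
        return "danger"
    if time_to_collision_s <= warn_ttc_s:
        return "warning"
    return "normal"


if __name__ == "__main__":
    # Car 60 m back, closing at 12 m/s, on our trajectory: 5 s to impact.
    print(classify_threat(60.0, 12.0, True))
    # Same car at 20 m: under 2 s to impact.
    print(classify_threat(20.0, 12.0, True))
```

Working in time rather than distance is what lets the same thresholds cover both a slow car close by and a fast car far away.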

So with all the background out, we're happy to say that last weekend, we actually proved out that this is possible, using slow/inefficient hardware (with a poor data path):

What does this show?

1. You can differentiate between a near-miss and a glancing impact.

2. The direct impact fired a little late, mainly just as a result of the 3FPS that this prototype is running at (as a result of a poor data path).

What does it not show?

1. Me getting run over... heh. That's why we ran at the car instead of the car running at us. And believe it or not, this is harder to track. So it was great it worked well.

And the following shows what the system sees.

Keep in mind that using the Myriad X hardware more completely bumps the frame rate to ~30FPS. In the NCS form-factor, only the neural engine (which is 1 TOPS) is being used; the other 3 TOPS are unused. The Raspberry Pi is having to do video processing/resizing/etc. here, all of which the Myriad X has dedicated hardware for, but which can't be leveraged in the NCS form-factor.

So the AiPi data path (cameras directly to the Myriad X) allows all this hardware to be used, while completely removing the burden of the Pi having to massage/pass-around/etc. video feeds. So it's a huge benefit in terms of speed.
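To get a feel for why the jump from the prototype's 3FPS to ~30FPS matters, here's a quick back-of-the-envelope sketch of how far a car closes in on you between two consecutive frames. The 10 m/s closing speed is purely an illustrative assumption, and `metres_between_frames` is a made-up helper name.

```python
def metres_between_frames(closing_speed_mps: float, fps: float) -> float:
    """Distance a vehicle closes between two consecutive frames."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return closing_speed_mps / fps


if __name__ == "__main__":
    closing_speed = 10.0  # m/s, illustrative assumption (about 36 km/h)
    for fps in (3, 30):
        gap = metres_between_frames(closing_speed, fps)
        print(f"{fps} FPS: {gap:.2f} m travelled per frame")
```

At that closing speed the car moves about 3.3 m between frames at 3FPS, versus about 0.33 m at 30FPS, which lines up with the direct-impact alert firing a little late on the prototype.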

Here's me initially testing it, figuring out what thresholds should be, etc.

I wanted to do it on a super-strange vehicle, to prove how robust the computer vision is at detecting objects. So this is the 'strange' vehicle I used, and it worked great!

To learn more about CommuteGuardian, feel free to check out commuteguardian.com, and also be a first-poster on the forum if you have ideas/questions. And bear with us, it's just going up now.

Best,

Brandon

-

Papers With Code

02/01/2019 at 15:35 • 0 comments

In case anyone missed it (we had), this website seems awesome!

https://paperswithcode.com/sota

There are a slew of ways to convert neural models now (effectively every major player has a tool), so most/all of the video/image neural networks there are convertible to run on the Myriad X (the AiPi) through OpenVINO.

-

Example Object Detection and Absolute XYZ Position

02/01/2019 at 14:16 • 1 comment

Here's an example of what you can do with the Myriad X carrier board. In this case, it's object detection and real-time absolute-position (x, y, z from camera in meters) estimation of those objects (including dz speed for people).

This is actually run using the NCS1. Why? The datapath issues of using the NCS2 with a Pi means it doesn't provide a significant advantage over the NCS1 - and results in higher overall latency because the bottlenecks really fill up with it. So you have to drop framerate even lower, or deal with the really-high latency.

Which is actually part of what prompted the AiPi board. The NCS1 over USB on the Pi actually isn't hurt that much. It goes from say 10FPS on a desktop to say 6FPS on the Raspberry Pi.

The NCS2, however, goes from ~60FPS (and potentially faster) on a Desktop to ~12FPS on a Pi, because of the datapath issues (latency/redundantly having to process video streams, etc.). (And AiPi will fix that!)

Because the Myriad X hardware-depth engine can't be used until the AiPi board is out (we're working feverishly), these examples are using the Intel D435, which has very-similar hardware depth built-in. With the AiPi board, no need for this additional hardware. :-)
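For anyone curious how a detection plus a depth value turns into x, y, z in meters, here's a minimal sketch using the standard pinhole camera model. It's not the code behind the demo: the `Intrinsics` values are hypothetical, and in practice they would come from the depth camera's calibration. The `dz_speed` helper just differences two depth readings over time.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (values used below are hypothetical)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def pixel_to_xyz(u: float, v: float, depth_m: float, k: Intrinsics):
    """Back-project pixel (u, v) with depth in meters to camera-frame x, y, z."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def dz_speed(z_prev_m: float, z_now_m: float, dt_s: float) -> float:
    """Rate of change of depth in m/s; negative means the object is approaching."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return (z_now_m - z_prev_m) / dt_s


if __name__ == "__main__":
    cam = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # hypothetical
    # Centre of a detected bounding box, with depth sampled at that pixel.
    print(pixel_to_xyz(920.0, 240.0, 5.0, cam))
    print(dz_speed(5.0, 4.0, 0.5))
```

Sampling depth at the box centre is the simplest choice; a median over the box would likely be more robust to holes in the depth map.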

-

Closed Source Components and Initial Limitations

01/31/2019 at 22:51 • 0 comments

Just like the drivers for, say, some graphics cards, some portion of the Myriad X will be binary-only.

So part of our effort will be writing the support code for loading these binaries, and also producing these binaries based on customer interest.

That said, all the portions of interacting with the Myriad X as one already does now through NCS2 with OpenVINO should be the same flow, or very similar (open source, modifiable, etc.).

Specifically (but potentially not limited to this), the hardware and firmware for the stereo depth calculation is non-public, so the stereo-depth capability will be provided as a binary download.

If/as more functions come up which are not covered by OpenVINO, we can respond by implementing these and releasing binaries (and hopefully OpenVINO soon simply covers stereo depth as well, which would be great).

All that said, OpenVINO is a very promising platform, and is quickly adding great capabilities - so there's a chance that these initial limitations may disappear soon.

Brandon
