Motivation

One major barrier to a fluid creative workflow with robots is the often stilted sense-think-act loop. Every time through the loop the robot first gathers information about the environment, crunches the numbers to generate a plan, and then executes that plan. Without special care the individual steps can take significant time, which results in a robot that can’t fluidly adapt to changes in the environment or high level control inputs.

For my system the think step has been the bottleneck. Previously I demonstrated real-time communication with my Kuka KR 16 robot arm using a driver from ROS Industrial and motion planning with the MoveIt motion planning framework. Even though the underlying control data being sent to the robot was on a 12 ms time step the motion planning step in MoveIt often took a few seconds. And there was no straightforward way to append new plans found while moving, so the robot would come to a stop after finishing executing each one. Metaphorically, the robot was closing its eyes, covering its ears, and running through a room. Only when it finished running did it open its eyes to see whether it had made it to the other side or slammed into an obstacle; and uncover its ears to be told what it needed to do next.

If we had a system that kept its eyes open and ears uncovered while taking action we could change the kind of problems we can solve with it and the ways that we implement the solutions. We could:

- Have a human step in at a moment’s notice to move the robot out of a tight situation or give it hints about what to do. For example, we could use a VR controller to move the tool to the canvas. Or do computer mediated painting where input from a haptic controller is filtered, scaled, or otherwise modified before directly moving the tool on the end of the robot in real-time.

- Adjust motion in response to fast, unpredictable, and chaotic changes in the environment. Obstacles moving around, the work surface changing, or small details like paint drips.

- Use iterative algorithms to explore and manipulate the environment. For example, using a camera to visual servo towards a canvas. Often it's much easier to come up with a solution to a perception problem by thinking about taking small steps down a cost gradient.

To do these things we need real-time motion planning to go with our real-time trajectory execution. There are many kinds of planning problems in robotics but the one that I am mainly interested in here is about taking a path for the tool on the end of the robot through cartesian space (XYZ and rotation) and finding a corresponding path through joint space that has the tool follow the target path as closely as possible while avoiding problems like collisions and singularities. MoveIt provides several planners that can solve this particular problem in a global, offline way. That is, it will find a solution that is close to the “best” possible given the constraints, but it will calculate it all at once beforehand. What we want instead is a hybrid: local-first, global when feasible, and very online (real-time).

Survey of Solutions

BioIK

Back in 2019 I was looking at BioIK as a potential solution. It is robust because it continuously creates populations of potential solutions for what to do in the next moment and quickly scores them based on various criteria that are based on how well they meet the goals and avoid potential issues. This lets it be very flexible but also fast. Because there’s a whole population of potential solutions at each step you can tune its performance or characteristics by controlling the population. For example, if you want more speed you can reduce the population of potential solutions.

It seemed pretty great on paper, but in practice I’ve run into difficulty getting it to produce motion with the qualities I desire. It provides a myriad of different solvers that have different properties. The default one is global and not ideal for adapting to on-the-fly updates. Some of the other solvers are ok for that but come with other limitations.

When I found CollisionIK (described below) I grokked that much quicker and immediately had results I liked, so I set BioIK aside. Some day I hope I can finish exploring BioIK and either make my criticisms concrete or find the way to get it to do what I want.

V-Rep / CoppeliaSim

In the past I played a lot with V-Rep, which is now called CoppeliaSim. I wrote plugins for synchronizing the state of a UR5 or a KR 16 in V-Rep with a real robot and then developed many different experiments on top of that. Most were done during a short two week workshop that I ran at MIT:

And some were done at other times:

For IK I was using the built-in damped least squares (DLS) method provided by V-Rep (an overview of how that works can be found here). It was surprisingly stable in most cases. Though occasionally performance would drop and sometimes crazy solutions would be returned.

To a large extent I’m just trying to port the experience I had working in V-Rep over to ROS and build on it. V-Rep was great for rapid prototyping. I could quickly script new ideas in Lua, test them out in the sim, and then “flip a switch” and run them in real-time on the robots. By communicating via sockets with external programs (like Unity) we were able to build a lot of interesting dynamic tools and experiences quickly. But V-Rep / CoppeliaSim is not free and open and it was often frustrating not being able to reach in and see exactly what was going on or make changes. It was also not a great fit for collaborating with the rest of the robotics ecosystem, which is heavily oriented towards ROS-native systems.

MoveIt Servo

In the time since I stopped working on this project there have been a good number of new developments in motion planning in ROS. One of these is the moveit_servo package for MoveIt 2.

I have not evaluated it yet, but may in the future as part of a bigger effort to switch to ROS 2 and take advantage of the many improvements in MoveIt 2 (e.g. hybrid planning). One thing that gives me pause is that the package seems to have mostly just been tested on Universal Robots robot arms so far. I have the impression that it’s still early days for this package, but that it has potential and is worth mentioning even though I haven’t yet rigorously evaluated it.

CollisionIK

Now we get to the basket that my eggs are currently stored in.

I have been hearing about neural-network based approaches to motion planning for some time, and did a little reading on them. But I mostly saw research papers without practical, working open source code that I could immediately use. When I started working on this project again I decided to take a fresh look at what is out there. The one that stood out was CollisionIK (preceded by RelaxedIK) from researchers in the University of Wisconsin-Madison's Graphics Group.

The key idea is that you can come up with loss functions for the constraints you want the robot to obey, combine them into an objective function, and then train a neural network to approximate that objective function 2-3 order of magnitude faster than you can evaluate the original full objective function. By normalizing the relative importance of your constraints using a well-chosen parametric loss function you have control over which ones get relaxed first when a perfect solution can’t be found. In the context of this project that means we can tell the robot to give up on following the tool path closely if it needs to avoid a collision or a singularity, but in a way that smoothly scales based on the “severity” of the problem.

This ends up being a really great approach in practice. It’s relatively easy to nudge the planner to do what you want by adjusting the weights of the constraints or adding or removing them. In a sense you can take a hammer to the objective function and the neural network training process will smooth everything out and make it really fast. In practice I am able to eval the objective function at >3 KHz on the control PC in my studio. It’s not magic so there are still a lot of challenges hiding in the details, but speaking generally I’ve found that working with it feels a lot less like math and a lot more like painting.

On top of the nice approach there’s actual working code in a GitHub repo!

Setting it Up

Let’s get CollisionIK working in ROS. If you want to start out exactly like me then first follow this tutorial I wrote, “Containerizing ROS Melodic with LXD”. But using a non-containerized ROS Melodic installation will work fine too.

We are going to be focusing on getting the ROS1 wrapper for CollisionIK working. It’s essentially a three step process. Step 1 is to get the dev branch of the original uwgraphics/relaxed_ik repo working, because that’s where the code for training the neural network resides. Step 2 is to get the ROS1 wrapper package built. Step 3 is to copy the configuration and NN weights trained in step 1 into the wrapper package so it can run and eval the objective function.

Step 1: Training the Neural Network

We will install from my forked version of the relaxed_ik repo which fixes some small issues and is already set up for training the NN for my KR 16, the Waldo. You can see the specific changes I had to make in this commit on my fork. The summary is:

- Add a KR 16-2 URDF with the information about the robot.

- Create a “collision” file that contains:

- A description of the obstacles in the environment. At the moment I just create a box that represents the floor.

- Sample joint states that are not colliding for the NN training to start from.

- Edit src/start_here.py and fill in:

- URDF file name

- Name of the fixed frame (i.e. what is at the root of the kinematics chain in the URDF and bolted down in real life)

- Info file name, which will be generated later and loaded before NN training

- Joint names and their order, taken from the URDF

- The frame where the tool is attached

- The starting position of the robot. For the KR-16 this is a forward-facing, upside-down L with the tool plate “looking down”.

- The name of the collision file with

- Set the name of the info file in load_info_file.launch so it will be properly loaded before training later.

# Install system dependencies

apt update

apt install -y \

build-essential \

git \

gfortran \

python-pip \

python-rosdep \

liboctomap-dev \

libfcl-dev \

libopenblas-dev \

ros-melodic-urdfdom-py \

ros-melodic-kdl-parser-py \

ros-melodic-kdl-conversions \

ros-melodic-tf

# Install python dependencies

python -m pip install setuptools

python -m pip install --upgrade \

readchar \

python-fcl \

scikit-learn \

scipy \

PyYaml \

numpy

# Create our Catkin workspace if necessary and go there

mkdir -p $HOME/catkin_ws/src

cd $HOME/catkin_ws

# Clone the repo into the src subdirectory of our catkin workspace

git clone -b dev --depth 1 https://github.com/jmpinit/crash_relaxed_ik src/crash_relaxed_ik

# Grab package dependencies

rosdep init

rosdep update

# Build the package

catkin_make

At this point we are almost ready to train the neural network. But we need to fix one issue before that can happen. There is a shared library file at ./src/RelaxedIK/Utils/_transformations.so which I needed to recompile for my architecture. To do that we’ll download the library separately, build it, and then copy across the shared library file:

cd $HOME # Or wherever you want git clone https://github.com/malcolmreynolds/transformations cd transformations python setup.py build_ext --inplace cp ./transformations/_transformations.so $HOME/catkin_ws/src/crash_relaxed_ik/src/RelaxedIK/Utils/_transformations.so

Now we can train the NN, which takes about 10 minutes on my laptop:

cd $HOME/catkin_ws/devel source setup.bash roslaunch relaxed_ik generate_info_file.launch roslaunch relaxed_ik load_info_file.launch rosrun relaxed_ik generate_input_and_output_pairs.py roslaunch relaxed_ik preprocessing_rust.launch

Once this is complete the configuration and NN weights are ready but we need to set up the ROS package before we can make use of them.

Step 2: Installing the ROS1 Package

cd $HOME/catkin_ws/src git clone https://github.com/jmpinit/crash_relaxed_ik_ros1 cd crash_relaxed_ik_ros1 git submodule update --init cd $HOME/catkin_ws catkin_make # Install an up-to-date rust toolchain # I'm using: # cargo 1.63.0-nightly (4d92f07f3 2022-06-09) # rustc 1.63.0-nightly (cacc75c82 2022-06-16) curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh # Make sure to add cargo to your PATH after installing # I had to update my version of CMake to get the NLopt package to build # I'm using cmake-3.24.0-rc1-linux-x86_64 # See <https://cmake.org/download/> cd $HOME/catkin_ws/src/crash_relaxed_ik_ros1/relaxed_ik_core cargo build

With some luck your build will succeed.

Step 3: Configure and Run

Copy the configuration files created during step 1 into the relaxed_ik_core submodule:

RIK_CONFIG=$HOME/catkin_ws/src/crash_relaxed_ik/src/RelaxedIK/Config cd $HOME/catkin_ws/src/crash_relaxed_ik_ros1/relaxed_ik_core cp $RIK_CONFIG/collision_nn_rust/kr16_2_nn config/collision_nn_rust cp $RIK_CONFIG/collision_nn_rust/kr16_2_nn.yaml config/collision_nn_rust cp $RIK_CONFIG/collision_nn_rust/kr16_2_nn_jointpoint config/collision_nn_rust cp $RIK_CONFIG/collision_nn_rust/kr16_2_nn_jointpoint.yaml config/collision_nn_rust cp $RIK_CONFIG/info_files/kr16_2_info.yaml config/info_files cp $RIK_CONFIG/joint_state_define_functions/kr16_2_joint_state_define config/joint_state_define_functions

We also need to install my waldo_description package that has the meshes for the KR 16:

cd $HOME/catkin_ws/src git clone https://github.com/jmpinit/waldo_description cd $HOME/catkin_ws catkin_make

Now we can finally run it!

# Run these in separate terminals roslaunch relaxed_ik_ros1 rviz_viewer.launch roslaunch relaxed_ik_ros1 relaxed_ik_rust.launch rosparam set /simulation_time go rosrun relaxed_ik_ros1 keyboard_ikgoal_driver.py

Here I’m moving a bunny 3D model back and forth in RViz while the robot tries to reach a fixed goal pose. You can see it smoothly move out of the way of the bunny (relaxing the position constraint) and then smoothly move back when the obstacle is removed.

Experiments

The code for these experiments can be found in the repo here: jmpinit/crash_blog.

For all of these I am just posting target poses to the /relaxed_ik/ee_pose_goals topic.

Following a Square

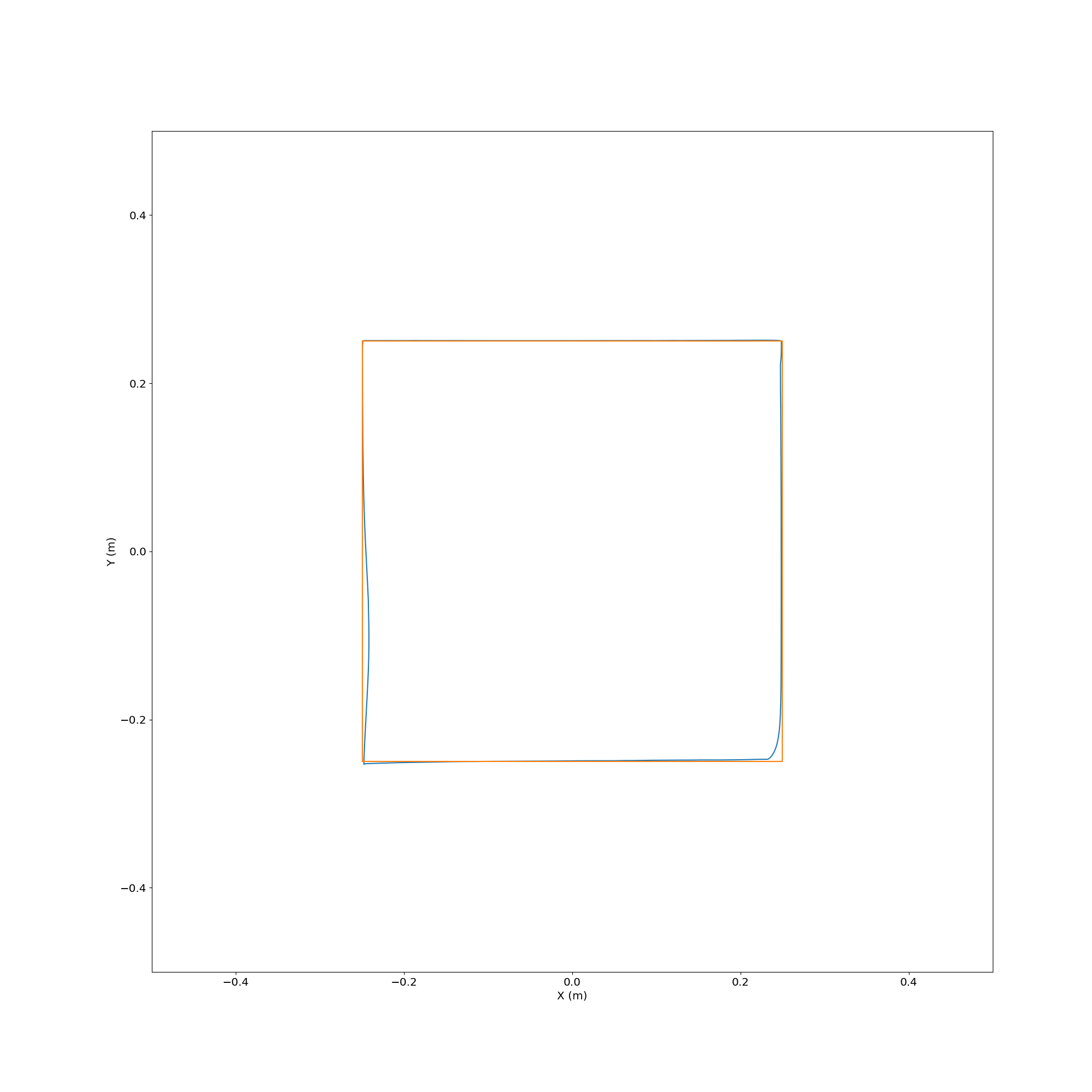

Here’s what the actual path looked like relative to the target from above:

Plotting an SVG

Plotting an SVG with a Bunny in the Way

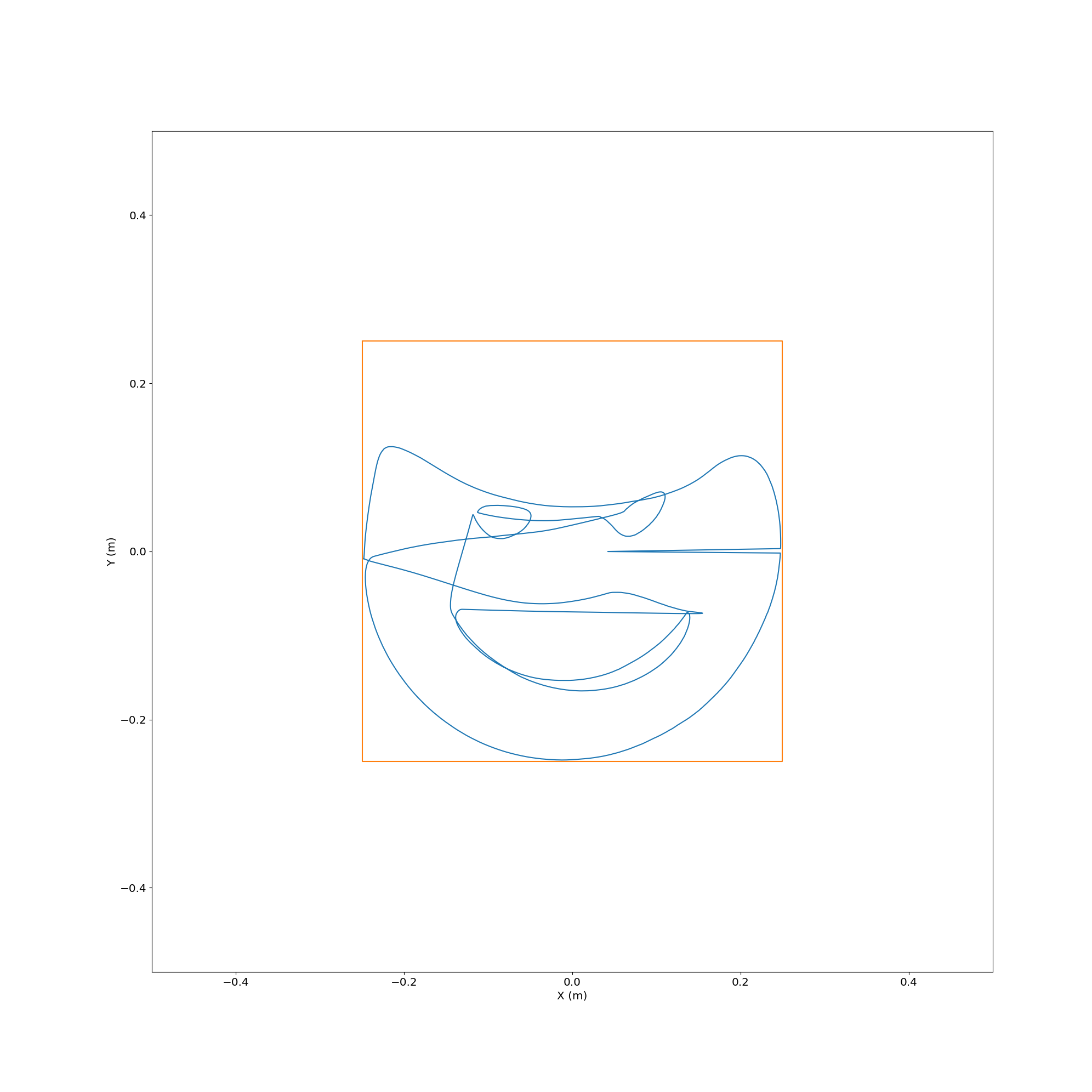

Here’s what the actual path looked like relative to the target from above:

Future Work

Now I have a proof-of-concept setup with my robot of a flexible online IK solver. But you’ll notice that I didn’t include any demonstrations of this with the real robot arm. That’s not just because I ran out of time. There are some issues that must be addressed before this can be connected to the real bot.

Mostly CollisionIK does the right thing. But sometimes it does “glitch” and tell the robot to go as fast as it can after a point that is far away from where it is at and where it should be. For the real robot that could be extremely dangerous, or at the very least impractical. The real robot has speed and torque limits. RSI, which I use for real-time trajectory execution on my bot, will die whenever those limits are exceeded.

Additionally, even though it looks like it was intended for the CollisionIK implementation to have velocity limits, as far as I can tell those are not yet implemented. It tries to minimize velocity, acceleration, and jerk by setting minimization of those properties as objectives but without hard limits there’s always going to be the possibility of motion that is too aggressive.

We need to implement hard limits on these motion properties outside of CollisionIK. Ideally it would be done at the point just before the RSI interface on the ROS side of the system, because that will reduce the chance that some other node we add in the future unexpectedly sends unsafe motion directly to the bot. And the implementation needs to be fast, because these limits need to be enforced on every RSI update, which can happen up to 83 times per second (12 ms update cycle).

Making the challenge harder, instead of just failing when motion is attempted out of our desired parameter bounds we want to try to smooth it out. Often when prototyping we can accidentally generate fast transient glitches but still have overall motion that accomplishes our goals. If we could filter out the glitches and always have smooth motion while still being able to completely stop if motion stays out of bounds too long then we will have a practical system that can still ensure some degree of safety.

Next time we will look at how to use the very impressive Ruckig motion trajectory generation library for motion smoothing and limiting. And finally get to take a look at some motion on the real robot.

Owen Trueblood

Owen Trueblood

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.