-

Back to the BluePill...

05/07/2020 at 18:42 • 5 commentsSummary

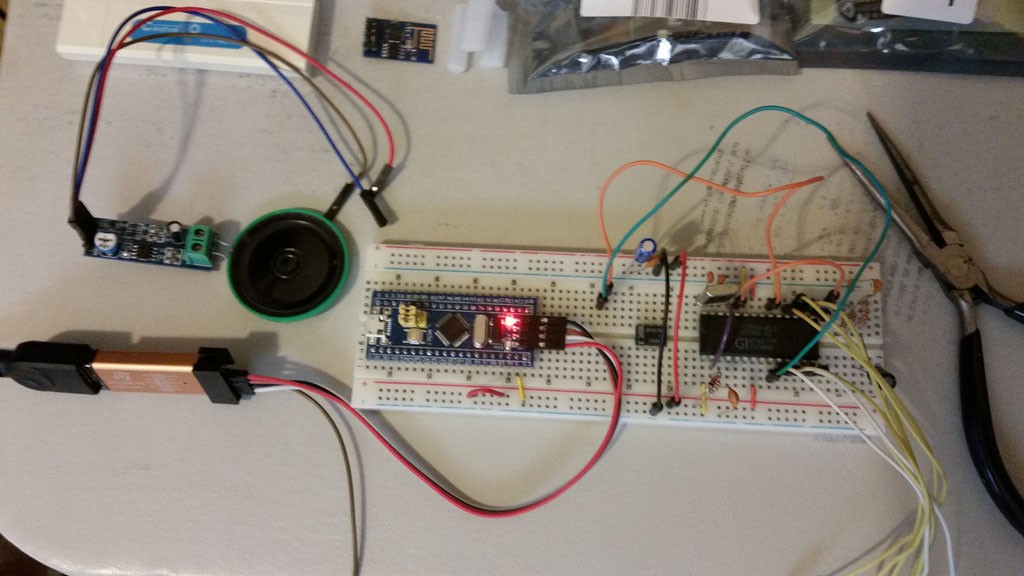

Just setting up the project and plugging stuff into the breadboard.

Deets

My motivation for this mini-project was simply to set up to get recorded phonemes. Strictly, since I already have that, and since I have already proven the concept with the python code, I really don't need to do the BluePill interface anymore, but I'm going to do it anyway for a few reasons:

- there was a cautionary statement about the quality of the existing recorded phonemes, so maybe I want to make my own recordings under a controlled environment

- I think it might be useful to someone else in some way

- more wild geese: it occurred to me that if this 'concatenate the phonemes approach works' (which it seems to), then I might be able to put the whole thing on the BluePill -- no SP0256-AL2 required! And moreover with the text-to-speech, then also no CTS256 required! Those chips have become quite rare, and are pretty expensive when you can find them. So, instead, you could just use an STM32F103 for a couple bucks (possibly also with no external crystal needed) and have it output the speech signal on a PWM line.

Anyway, it was time to gather parts and start stuffing the protoboard:

![]()

Some things:

- I am very displeased to have gotten burned on the ST-Link-V2. These are very cheap from Chinese sources, and I like to keep a pile of them on-hand for various projects that are in various stages of development, rather than just have one that I need to move around between the various projects. I had bought a batch of them some months ago and opened one of those up. It looked like an ST-Link, but the legend for the pins was quite different. I noticed the labeling was 'STC Auto Programmer' and then googled to see what the heck it was. Well, it turns out that many others have gotten burned this way before as well. So 'caveat emptor' when ordering these things from overseas. It turns out this is simply a USB-to-serial adapter using the CH340 chip. I suppose I can make use of that in some other context, but it was completely useless here. So I was back to cannibalizing another project for the ST-Link, *sigh*.

- The SP0256 specs an oddball crystal (3.12 MHz), so I am using a colorburst instead. This was commonly done, and I've heard that some folks have even overclocked it as much as 4 MHz (the sound sample on wikipedia seems to be like that).

- Since this is going to be breadboard only, I am going to reuse a separate LM386 breakout board that I already have a speaker attached to save a little time rummaging for parts.

- The SP0256 is a 5V part, and the STM32F103 is a 3.3V part. Many but not all of the STM's pins are 5V tolerant, but you have to double check. In particular, the lower port A pins are not 5V tolerant (pretty much anything that can be used as analog input is not), and I planned to use those for the address lines. I think this will work safely because those are inputs on the SP0256 side, and outputs on the STM side. But for the couple lines that come back into the STM (SBY and /ALD) those will definitely need to be on 5V tolerant pins.

- Call me a 'Nervous Nelly' if you will, but the SP0256 part is precious, so I and double and triple checking it's wiring before putting power to it. Once it's blown, that's the end of the party. The same caution applies to the STM, but I have more of those and those are cheap.

So, I am planning the pin assignments:

- port A0-7 (not tolerant) will go to the SP's address A1-A8 (hey, I didn't name these pins)

- port PB1 (not tolerant) will go to the SP's /ALD

- port PB10 (tolerant) will go to the SP's SBY

- port PB11 (tolerant) will go to the SP's /LRQ (and probably be configured as EXTI)

- and later, if/when I try to make a STM32 standalone simulator/emulator, then PB0 will be configured as PWM output

- there will be USB CDC

- I might see if I can do a UART, too. This is not required, however when debugging, USB CDC is a pain because halting the processor when single-stepping causes the USB to break, and then you have to reset and that's the end of your debugging sessions. By using a physical UART, you can use an FTDI and not break the connection (or maybe I can use that bogus programming adapter; lol!)

Next

Building the Basic BluePill Interface

-

When I Hear That Squealing, I Need Textual Healing

05/07/2020 at 00:09 • 0 commentsSummary

While the voice synthesizer is cool, manually sequencing phonemes is not particularly fun. Text to speech is imperfect, but it definitely lightens the load. While this was not a goal of the project, I thought I'd spend a couple days on this feature creep.

Deets

Some time back I had implemented a text-to-speech algorithm that was to be a software equivalent of the CTS256A-AL2. This was a companion chip that accepted text data and implemented a derivative of the Naval Research Lab algorithm, and then drove the SP0256-AL2. I do happen to have one of those devices, but I have never used it myself.

The algorithm is derived ultimately from some work done by the Naval Research Lab in 1976 contained in 'NRL Report 7948' titled 'Automatic Translation of English Text to Phonetics by Means of Letter-to-Sound Rules'. It describes a set of 329 rules and claims a 90% accuracy on an average text sample. It also asserts that 'most of the remaining 10% have single errors easily correctable by the listener', which I assume means you giggle and figure it out for yourself from context.

Further works was done in the 1980's John A. Wasser and Tom Jennings -- I have lost that code but it should be findable on the Internet since that's where I got it. I also added some additional rules of my own.

The gist of the algorithm is to crack incoming text into words. Then each word is independently translated. The translations process consists of three patterns: a 'left context', a 'right context', and a middle context that I call the 'bracket context' simply because that is the notation that the original rules used. e.g.:

a[b]c => p

where 'a' is the left context, 'b' is the bracket context, 'c' is the right context, and 'p' are the phoneme sequence produced.

The way this is used is that you pattern match the three and if you get a match, then you replace the 'bracket context' with the phoneme sequence and advance the position in the text that you are processing. So, 'a' can be thought of as backtracking.

Regular expressions comes to mind for pattern matching, however the NRL report uses some some particular 'character classes' that make sense in this phonetic context, such as 'one or more vowels/consonants' and 'front vowels' and 'e related things at the end of the word'. So for practical reasons I did not use regex's for this project. For one, I would have an enormous number of machines since there are hundreds of rules, and for another I wasn't going to have a regex library on this embedded processor anyway. So I hand coded the pattern matching logic. Mercifully, it was straightforward even if tedious.

You can view the processing as proceeding with a cursor moving across the word being translated. The cursor is at the start of the 'bracket' pattern, so the 'left context' can be considered to be 'backtracking'. If there is a match of all three patterns, then the phoneme sequence is emitted, and the cursor is advanced by the length of the 'bracket' pattern.

Rules are tested sequentially until a successful match is made, so more specific rules should precede more general ones. To expedite the process of this linear search, I exploited the fact that the 'bracket' context only has literals -- no patterns. Then I separated the rules into groups on the first character of the 'bracket' context. In this way, the majority of the rules do not have to be tested at all since it is known that they have no hope of matching.

In real time this actually took a few days to do (I'm behind on posts but nearly caught up), and I possibly have made some transcription errors, but I did get it running and generating some text-to-speech. E.g., this time I am using Jefferson Airplane's "Somebody to Love": somebodytolove.wav

Not too bad; there are certainly some oddities, such as 'head' 'breast' comes out more like 'heed' and 'breest'. This could easily be errors in my transcription, perhaps bugs in my pattern matching, or maybe crappy phoneme recording. I'll investigate this more closely later, but punt on that for now in the interest of getting back on-track with getting the BluePill interface to the physical chip running.

At any rate, I put this Python proof-of-concept prototype in a separate repo from the BluePill stuff, the link to which is in this project's 'links' section.

Next

Back to the BluePill...

-

Detour de Farce

05/05/2020 at 18:01 • 0 commentsSummary

I implement a simulation of my simulation in Python.

Deets

While preparing to get my hands dirty with the breadboard and jumper wires, I found on the web an existing set of pre-recorded SP0256-AL2 phonemes Allophones.zip. It was cautioned that these files are recorded incorrectly, but I carry on anyway.

Since I don't know if this 'concatenate pre-recorded sounds' approach will generate acceptable quality at all, I thought it might be best to do a cheap proof-of-concept first, before I bother with developing the embedded controller. Since I found those pre-recorded audio files, this made it even cheaper. As mentioned, it was cautioned that the files were defective in some way, but I decided to rush in where angels fear to tread.

Python is not my mother tongue, so it was with some embarrassment that I would have to google as much as I did to get syntax correct for things like 'how do I make a for loop', but oh well. I got through it. The application was trivial -- read a bunch of audio files into arrays, and then whizz over a list of phoneme codes and concatenate them into a single array, which then gets written out as a wave file. Conveniently, I had a rather long sequence of phonemes from another project that is of The Doors 'Hello'. (I did that because the first word I hand-crafted was 'hello', and upon hearing that I immediately thought of that song.) The results were actually not that bad: testcase_001.wav

A little crackly at the phoneme transitions, but it seems to give credence to the hypothesis that this concatenation approach could be workable. It also immediately made me think of other ideas outside of the scope of the original project, so now I'm off pursuing undomesticated aquatic fowl (once again). One such canard is resurrecting some old text-to-speech code I have somewhere.

Next

A detour of a detour onto text-to-speech.

-

Look Who's Talking 0256 - The Feeling Begins....

05/05/2020 at 03:20 • 2 commentsSummary

For a separate activity, I needed to make a little testing setup where I could record phonemes from an SP0256-AL2 to see if using prerecorded audio would be an acceptable simulation of the actual device. To do this, I intended to hook up one of my existing physical SP0256-AL2 to a 'BluePill', and send data over the USB CDC serial port.

This was intended to be a throw-away project, but it seemed like it might have some use to someone else, so I have started this project to document such.

Deets

I have to backtrack a little since this activity actually started four days ago. At that time another hackaday user [Michael Wessel] had skulled one of my other projects -- a TRS-80 emulator. I noticed he had done some synthesizer work for the TRS-80 Model I physical machine, and I had been meaning to extend my emulator to do similar, but I didn't have manuals. He sent me a critical page that lists the TRS-80 Voice Synthesizer phonemes and codes, and some other snippets relating to mapping those codes to DecTalk, which he prefers for quality.

This was enough to pique my interest in seeing if I could pull off some sort of simulation in my emulator. I say 'simulation', because at this moment I didn't plan on emulating the chip (i.e. reimplementing the digital filters and voice model), but rather just try to concatenate pre-recorded phoneme output. Also, the only chip I have is the SP0256-AL2, which is almost certainly not the chip that was used in the original product anyway. But maybe I could make a serviceable approximation in the meantime -- my emulator is just for entertainment, anyway.

So my thought was that I should record the set of phonemes from the physical device (which I have a couple of from back in the day). The device needs some other processor to drive it, so I thought about using my beloved BluePill, of which I keep a small supply on-hand for these sorts of occasions.

Next

Planning...

ziggurat29

ziggurat29