-

The first working version of the CAN library for Zakhar

12/26/2021 at 16:55 • 0 comments

It was made for my robot but can be used anywhere else.

The link: https://github.com/an-dr/zklib_espidf_canbus

Using a system of descriptors, you can easily:

- send data to the bus periodically

- automatically store data with specific IDs

- send data in response to the RTR request frame

The developer should specify Store, Response, and Transmit (with a period in ms) descriptors and the library will do the rest.
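To make the descriptor idea more concrete, here is a minimal host-side sketch in Python. It is not the library's API (the library itself is C/C++ for ESP-IDF); the names `Frame`, `TransmitDescriptor`, `DescriptorNode`, `on_frame`, and `tick`, as well as the CAN IDs, are hypothetical and only model the three behaviors listed above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Frame:
    can_id: int
    data: bytes = b""
    rtr: bool = False  # True for a remote transmission request (RTR) frame


@dataclass
class TransmitDescriptor:
    can_id: int
    period_ms: int
    get_data: Callable[[], bytes]
    next_due_ms: int = 0


class DescriptorNode:
    """Hypothetical model of a descriptor-driven node, for illustration only."""

    def __init__(self, store_ids, responses, transmits):
        self.stored: Dict[int, bytes] = {}       # last payload per stored ID
        self._store_ids = set(store_ids)          # Store descriptors
        self._responses = dict(responses)         # Response: can_id -> data provider
        self._transmits = list(transmits)         # Transmit descriptors

    def on_frame(self, frame: Frame) -> Optional[Frame]:
        """Handle one received frame; return a reply frame if one is needed."""
        if frame.rtr:
            provider = self._responses.get(frame.can_id)
            return Frame(frame.can_id, provider()) if provider else None
        if frame.can_id in self._store_ids:
            self.stored[frame.can_id] = frame.data
        return None

    def tick(self, now_ms: int) -> List[Frame]:
        """Return the periodic frames that are due at time now_ms."""
        due = []
        for d in self._transmits:
            if now_ms >= d.next_due_ms:
                due.append(Frame(d.can_id, d.get_data()))
                d.next_due_ms = now_ms + d.period_ms
        return due


# Example setup with made-up IDs and payloads
node = DescriptorNode(
    store_ids=[0x101],
    responses={0x201: lambda: b"\x2a"},
    transmits=[TransmitDescriptor(0x301, 100, lambda: b"\x01")],
)
```

In this sketch the application only fills in the three tables; receiving, replying to RTR frames, and periodic sending are handled by `on_frame` and `tick`.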

The one important thing still missing is a mechanism to check which devices are online. It will be implemented soon:

https://github.com/an-dr/zklib_espidf_canbus/issues/5

As always, any contribution is welcome. See the open issues (https://github.com/an-dr/zklib_espidf_canbus/issues) or create a new one.

Join the group on Facebook! https://www.facebook.com/groups/zakhar


-

Attention! DEVELOPERS WANTED!

12/12/2021 at 20:52 • 0 comments

The project is ambitious, and I cannot move it forward as fast as I want to. If you are as interested in robotics as I am, let's try to work together. I'm confident that we will develop something unique!

The project covers many different areas and programming languages, including:

- 3D modeling (for simulation)

- 3D printing (for the robot itself)

- C/C++ development (embedded: Arduino, ESP32, STM32; high-level: Linux, Robot Operating System - ROS)

- Computer vision (image recognition, especially for indoor navigation)

- Hardware design (robot modules)

- Python development (Robot Operating System - ROS)

Please look at the list of repositories and non-green milestones below, see the issues, and take something if you want. If you want to participate but do not know where to start, create an issue in the main repository, and we will figure this out.

Any volunteer participation will be appreciated!

![]()

-

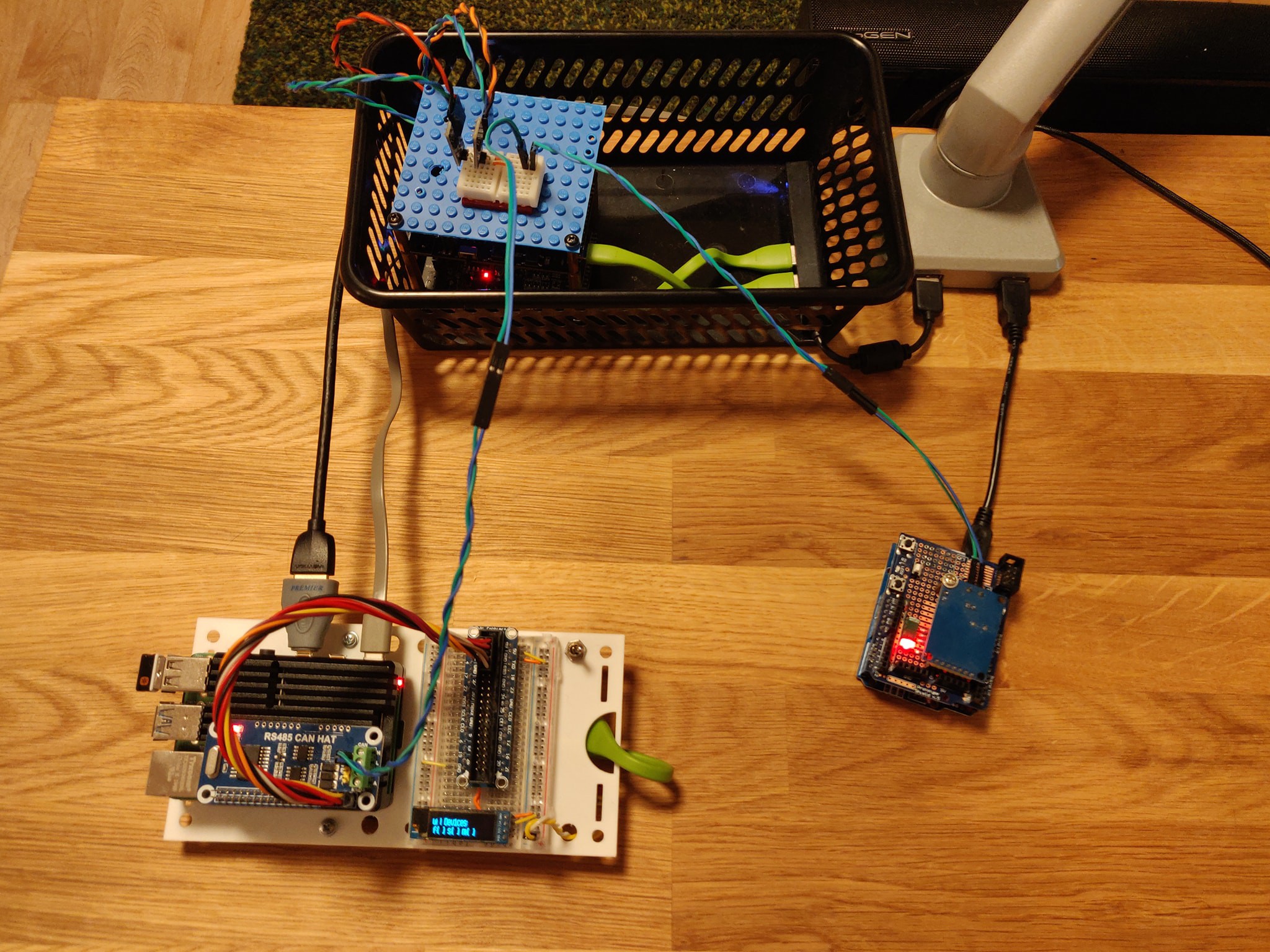

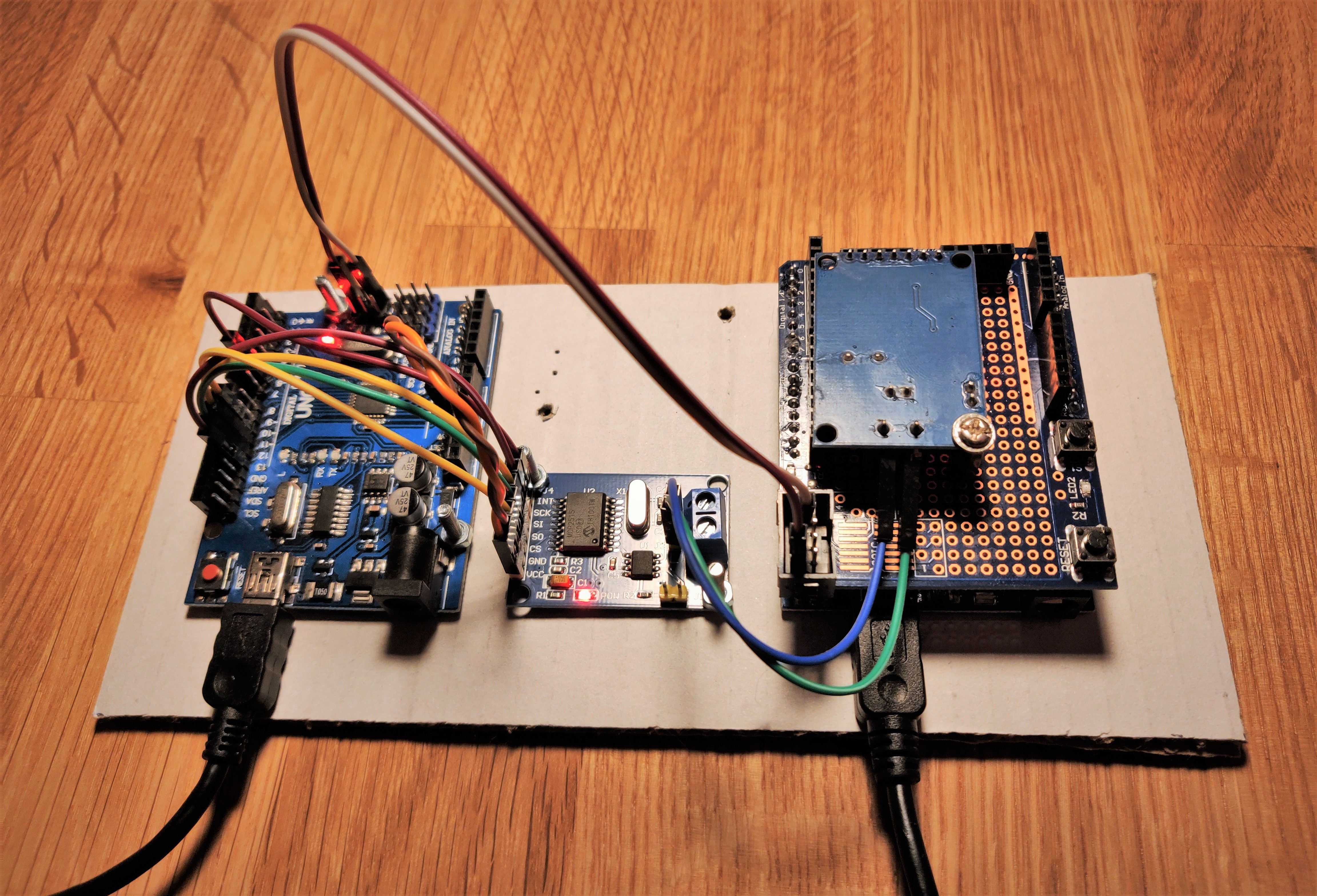

CAN bus debugging

12/12/2021 at 01:47 • 0 comments

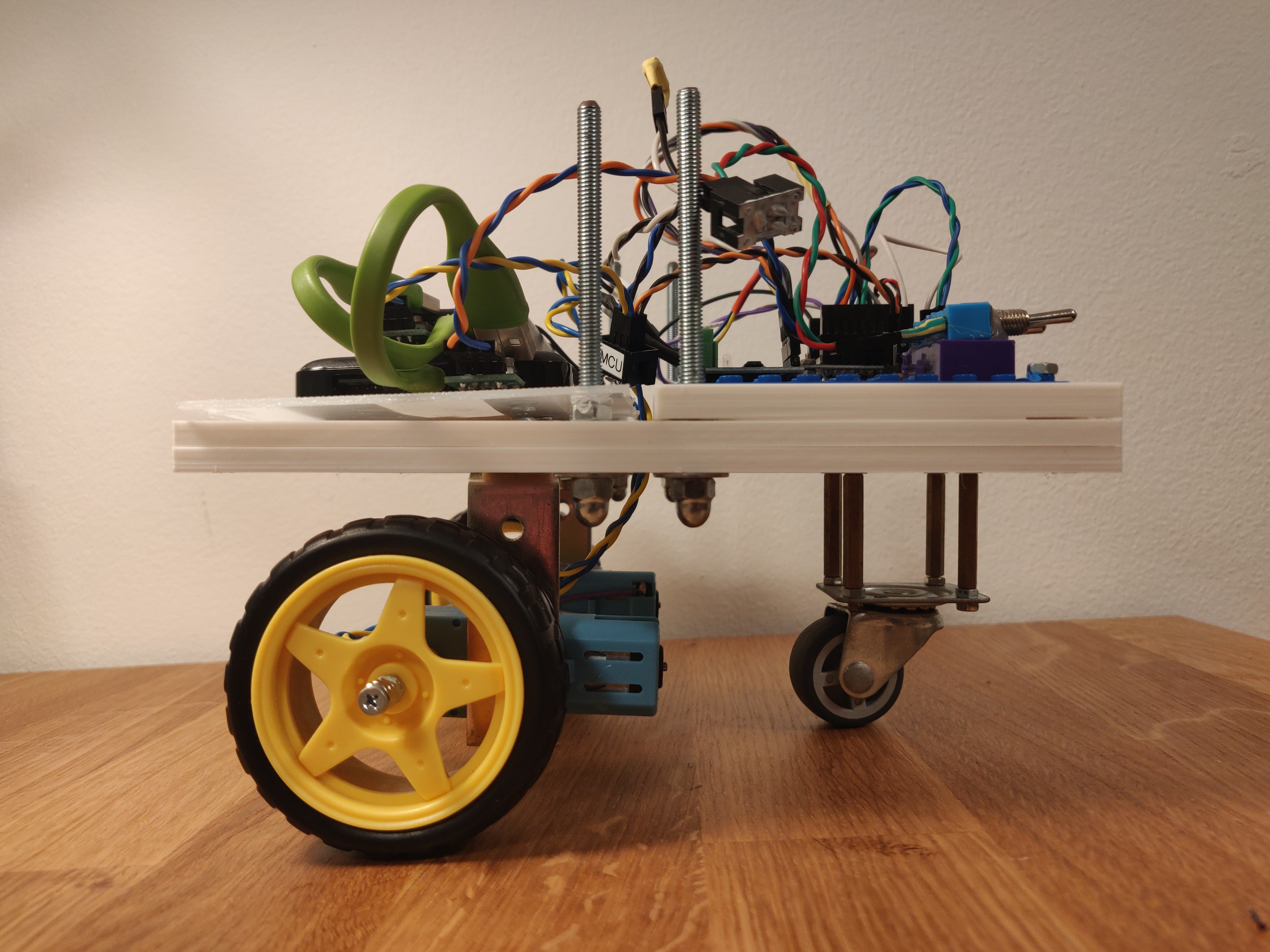

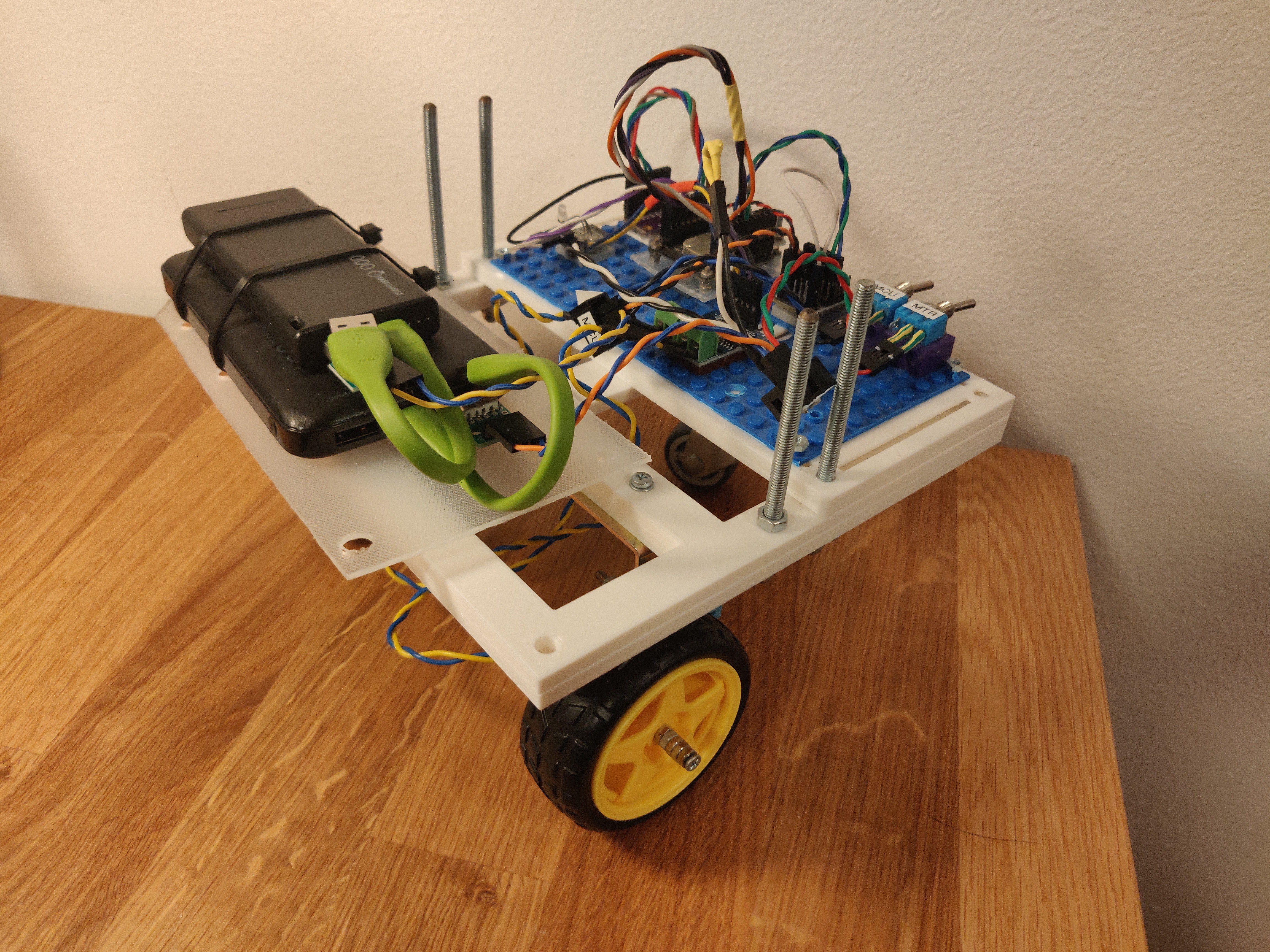

In the upper part there are two ESP32 boards stacked together; below them is a Raspberry Pi with my new CAN bus hat and a CAN test tool.

The Raspberry Pi part needs some tweaks on the Linux side plus a Python CAN bus subpackage. The ESP32 CAN library works excellently.

Since I also need to write a library for STM32, Arduino, and who knows what else, I want to build an Arduino-based CAN test/verification tool (lower right corner). The hardware is ready; only the code remains.

The main project for the transition from I2C to CAN is here; many things still need to be done.

https://github.com/users/an-dr/projects/3

Want to join development? See links below!

https://github.com/an-dr/zakhar_service (Python, Linux)

https://github.com/an-dr/zakhar_pycore (Python)

https://github.com/an-dr/zklib_espidf_canbus (C/C++, CAN, ESP32)

https://github.com/an-dr/zakhar_tools (C++, Arduino, Hardware)

-

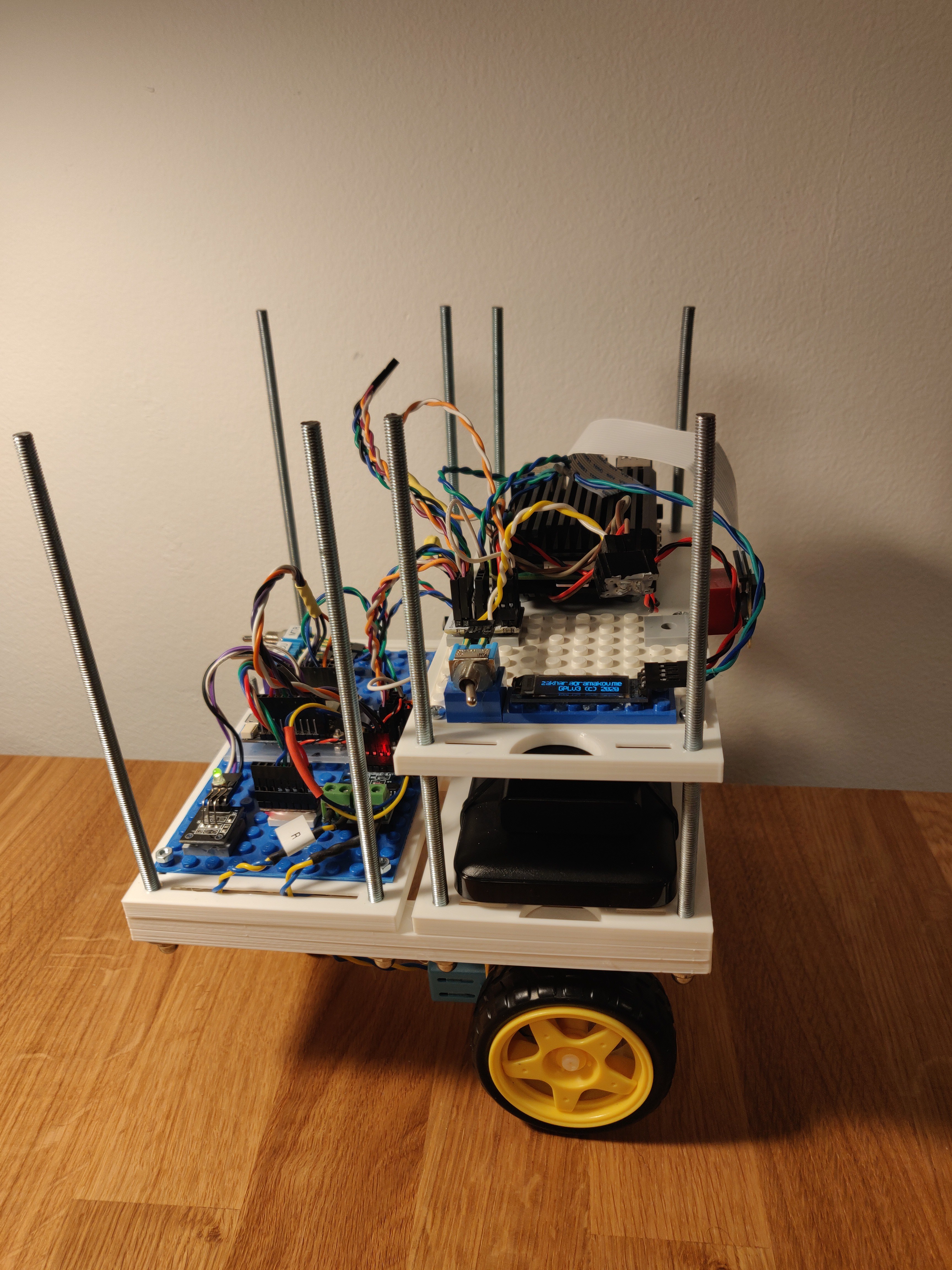

Zakhar 2: Migration to a New Frame

08/31/2021 at 20:00 • 0 comments

-

Dear I2C, I resemble

08/22/2021 at 23:04 • 0 comments

I2C is a simple and elegant protocol. But, probably because of its simplicity, MCU manufacturers often support it poorly for developers.

I'm using several platforms in the project, and all of them have completely different driver implementations. OK, Arduino is meant to be simple, so I'm not complaining there. But my dear ESP32 brought me so much frustration with I2C (unlike STM32 and Raspberry Pi, where everything works perfectly). I spent a couple of weeks solely trying to get it working as I designed, but even after writing my own interrupt handler, I haven't had any success.

To be precise, I lack the START interrupt, which exists on paper but doesn't work on my boards. I presume that there are some problems in the hardware (e.g., here https://github.com/espressif/arduino-esp32/issues/118 my ex-colleague points out that the hardware is not that flexible). It may also be that I am missing something and the problem can be easily solved...

I want to take advantage of this situation and will try to switch to a more suitable bus for robotic applications. Let's make it CAN.

I will start by building a small device that can verify the connection to each module in the system and by developing a minimalistic protocol that fulfills the project's needs.


Update 2022-Jul-12: Fix links

-

Got a 3D Printer

08/22/2021 at 21:43 • 0 comments


-

Zakhar II: Separately controllable DC motor speed

07/10/2021 at 09:34 • 0 comments

-

"On the way to AliveOS" or "making of a robot with emotions is harder than I thought"

03/22/2021 at 20:52 • 0 comments

So, indeed, the more I develop the software for Zakhar, the more complicated it gets. So, first:

Contributions and collaborations are welcome!

If you want to participate, write to me and we will find what you can do in the project.

Second, feature branches got toooooo huge, so I'll use a workflow with a develop branch (Gitflow) to accumulate less polished features and get a more rhythmic development pace. Currently, I'm actively (sometimes reluctantly) working on the integration of my EmotionCore into Zakhar's ROS system. The latest results are available in the `develop` branch:

https://github.com/an-dr/zakharos_core/tree/develop

Third, to simplify the development of the robot's core software, I would like to separate really new features from the implementation. So, Zakhar will be a demonstration platform and the mascot of the project's core. I'd like to name the core AliveOS, since implementing alive-like behavior is the main goal of the project. I am not entirely sure what AliveOS will be. It is not an operating system so far, rather a framework, but I like the name :).

Fourth and last: usually, I write such posts after some accomplishment, and that's true today as well. I just merged the feature/emotion_core branch into develop (see above). It is not a ready-to-use feature, but the core is working, reacting to sensors, and exchanging data with other nodes. It doesn't affect the behavior for now; for that I need to make some huge structural changes. The draft is below (changes are in black and white):

![]()

Next steps:

- Implementing the new architecture changes

- Separating the core packages into one called AliveOS

- Moving AliveOS to a separate repository and including it as a submodule (the repo already exists: https://github.com/an-dr/aliveos)

Thank you for reading! Stay tuned and participate!

-

EmotionCore - 1.0.0

02/23/2021 at 19:59 • 0 comments

First release!

The aim of this library is to implement an emotion model that could be used by other applications implementing behavior modifications (emotions) based on changing input data.

The Core has sets of **parameters**, **states** and **descriptors**:

**Parameters** define the **state** of the core. Each state has a unique name (happy, sad, etc.). The user can use either the state or the set of parameters as output data.

**Descriptors** specify the effect of input data on the parameters. Those effects can be:

- depending on sensor data

- depending on time (temporary impacts)

It is a cross-platform library used in the Zakhar project. You can use this library to implement sophisticated behavior for any of your devices. Contributions and ideas are welcome!
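The relation between parameters, states, and descriptors can be illustrated with a small sketch. It is written in Python for brevity and is not the EmotionCore API: the class names, the linear `weight * value` effect, the `cortisol` parameter, and the threshold of 5 are all assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SensorDescriptor:
    """Effect depending on sensor data: param += weight * value (assumed form)."""
    sensor: str
    param: str
    weight: float


@dataclass
class TemporaryImpact:
    """Effect depending on time: a delta that disappears at expires_ms."""
    param: str
    delta: float
    expires_ms: int


@dataclass
class State:
    name: str
    matches: Callable[[Dict[str, float]], bool]


class Core:
    """Hypothetical emotion core, for illustration only."""

    def __init__(self, params, descriptors, states, default_state="neutral"):
        self.base: Dict[str, float] = dict(params)
        self.descriptors: List[SensorDescriptor] = list(descriptors)
        self.states: List[State] = list(states)
        self.default_state = default_state
        self.impacts: List[TemporaryImpact] = []

    def on_sensor(self, sensor: str, value: float) -> None:
        """Apply every descriptor registered for this sensor."""
        for d in self.descriptors:
            if d.sensor == sensor:
                self.base[d.param] += d.weight * value

    def add_impact(self, param, delta, now_ms, duration_ms) -> None:
        self.impacts.append(TemporaryImpact(param, delta, now_ms + duration_ms))

    def params(self, now_ms: int) -> Dict[str, float]:
        """Current parameters: base values plus unexpired temporary impacts."""
        self.impacts = [i for i in self.impacts if i.expires_ms > now_ms]
        out = dict(self.base)
        for i in self.impacts:
            out[i.param] += i.delta
        return out

    def state(self, now_ms: int) -> str:
        """Name of the first state whose condition matches the parameters."""
        p = self.params(now_ms)
        for s in self.states:
            if s.matches(p):
                return s.name
        return self.default_state


core = Core(
    params={"cortisol": 0.0},
    descriptors=[SensorDescriptor("obstacle", "cortisol", 2.0)],
    states=[State("sad", lambda p: p["cortisol"] >= 5.0),
            State("happy", lambda p: p["cortisol"] < 5.0)],
)
```

An application can read either `core.state(now_ms)` or `core.params(now_ms)`, mirroring the two kinds of output described above.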

Home page: r_giskard_EmotionCore: Implementing a model of emotions


-

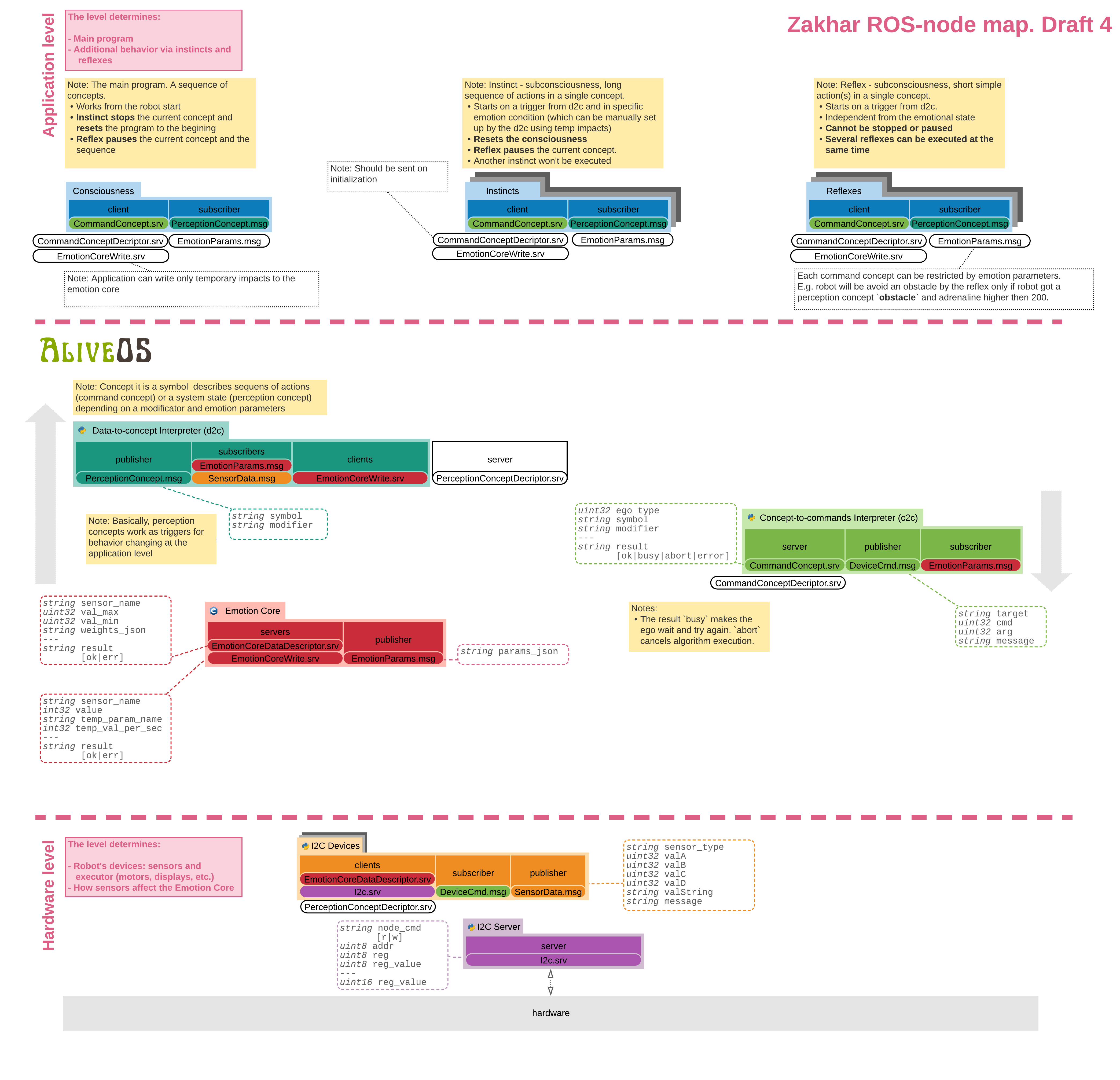

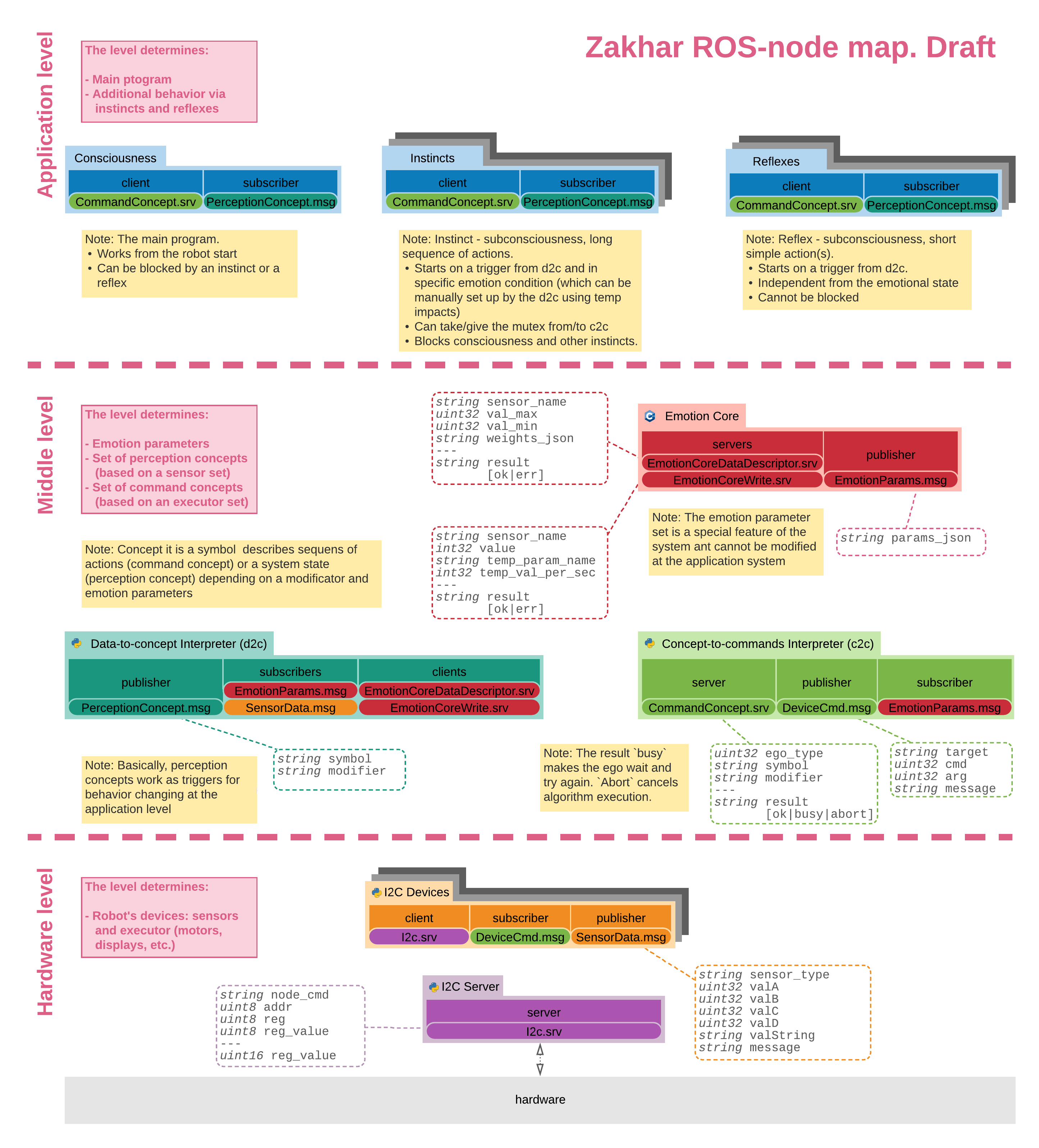

Updated draft of the ROS-node network with the Emotional Core

01/28/2021 at 15:25 • 0 comments

While working on the Emotion Core update, it became clear to me that placing responsibility for emotional analysis on Ego-like nodes (Consciousness, Reflexes, and Instincts) is the wrong approach. It leads to a situation where the application developer has to specify several types of behavior themselves, which makes development too complicated.

I want to implement a different approach, in which concepts themselves contain information about how they should be modified based on a set of emotion parameters. For example:

1. An Ego-like node sends the concept `move` with a modifier `left`:

{ "concept": "move", "modifier": ["left"] }

2. The concept `move` contains a descriptor with the rule: if adrenaline is lower than 5, add a modifier `slower`

3. The Concept-to-command interpreter updates the concept to:

{ "concept": "move", "modifier": ["left", "slower"] }

4. According to the concept descriptors, the Concept-to-command interpreter sends commands to the Moving Platform device:

- Set speed to 2 (3 is maximum for the device)

- Move left

- Set speed to 3 (default value)
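The four steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not code from zakharos_core: the descriptor table layout, the function names `apply_emotions` and `to_commands`, and the command strings are hypothetical. The adrenaline threshold of 5 and the speeds 2 and 3 come from the example.

```python
from typing import Dict, List

# Hypothetical descriptor table: each concept lists rules that add a
# modifier when an emotion parameter is below a threshold.
CONCEPT_DESCRIPTORS = {
    "move": {
        "emotion_rules": [
            {"param": "adrenaline", "below": 5, "add_modifier": "slower"},
        ],
    },
}

DEFAULT_SPEED = 3  # maximum and default speed of the device in the example
SLOW_SPEED = 2


def apply_emotions(concept: Dict, emotion_params: Dict[str, float]) -> Dict:
    """Return a copy of the concept with emotion-driven modifiers added."""
    modifiers = list(concept["modifier"])
    descriptor = CONCEPT_DESCRIPTORS.get(concept["concept"], {})
    for rule in descriptor.get("emotion_rules", []):
        value = emotion_params.get(rule["param"])
        if value is not None and value < rule["below"]:
            if rule["add_modifier"] not in modifiers:
                modifiers.append(rule["add_modifier"])
    return {"concept": concept["concept"], "modifier": modifiers}


def to_commands(concept: Dict) -> List[str]:
    """Translate a 'move' concept into commands for the moving platform."""
    if concept["concept"] != "move":
        raise ValueError("only the 'move' concept is handled in this sketch")
    modifiers = concept["modifier"]
    slowed = "slower" in modifiers
    commands = []
    if slowed:
        commands.append(f"set_speed {SLOW_SPEED}")
    # Every modifier other than 'slower' is treated as a direction here.
    commands.extend(f"move {m}" for m in modifiers if m != "slower")
    if slowed:
        commands.append(f"set_speed {DEFAULT_SPEED}")  # restore the default
    return commands


sent = {"concept": "move", "modifier": ["left"]}
updated = apply_emotions(sent, {"adrenaline": 4})
commands = to_commands(updated)
```

The Ego-like node only ever sends the plain `move`/`left` concept; the emotional adjustment lives entirely in the concept descriptor, which is the point of the approach.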

In addition, I updated the diagram itself by structuring it and adding notes to make the entire system easier to understand. Here is the diagram:

![]()

Now I will implement the structure above in the emotion_core branch of the zakharos_core repository. The feature is getting closer to implementation. More updates soon.

Links:

https://github.com/an-dr/zakharos_core/tree/feature/emotion_core

Andrei Gramakov
