-

Syncing in the kitchen

10/14/2021 at 13:17 • 0 comments

For now, enjoy this dual-system sound demo. Stay tuned for a multicam demo with Olive and OpenTimelineIO.

Here is the camera recording: image + sync signal (the finger-snapping sound is absent; remember, this is a dual-system sound set-up):

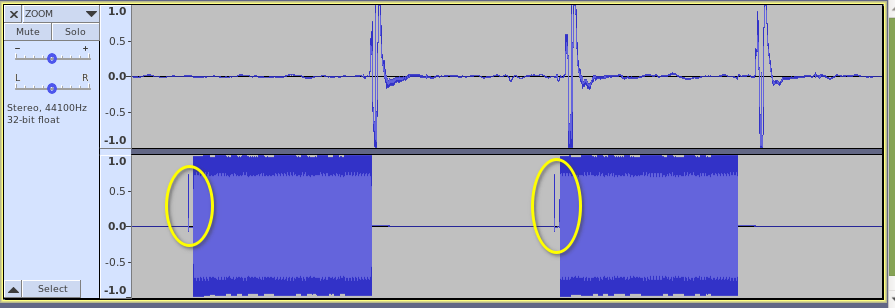

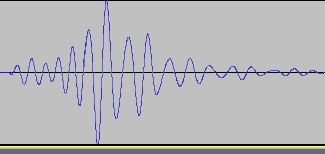

These are the ZOOM H4n Pro recorder tracks. Note the thin spikes in the right channel (the yellow ovals): they are the sync pulses from the GPS, falling exactly on the UTC second (the left channel holds the finger snaps). Those sync spikes are present in the movie's audio too (by construction).


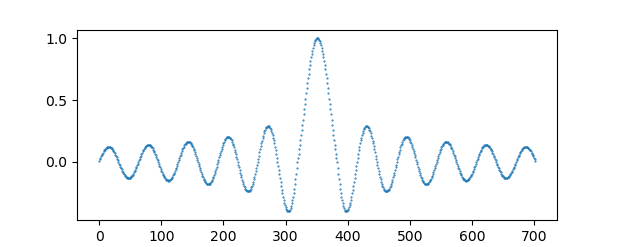


Postprocessing with dualsoundsync.py, aligning the spikes in the audio recorder file with those in the camera file:

    $ dualsoundsync /media/shooting_files_rep
    scanning rushes_folder
    splitting audio from video...
    splitting audio R and L channels
    trying to decode YaLTC in audio files
    1 of 4: nope
    2 of 4: ZOOM_Rchan.wav started at 2021-10-13 01:45:08.263145+00:00
    3 of 4: nope
    4 of 4: fuji_a_Lchan.wav started at 2021-10-13 01:45:14.361967+00:00
    looking for time overlaps
    forming pairs...
    joining back synced audio to video: dualsoundsync/synced/fuji.mp4
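The "looking for time overlaps" step in the log above can be pictured as a simple interval intersection on the decoded start times. The sketch below is only an illustration, not the actual dualsoundsync code: the function name `overlap_seconds` and both clip durations are hypothetical, and only the two start times come from the log.

```python
from datetime import datetime, timedelta

def overlap_seconds(start_a, dur_a, start_b, dur_b):
    """Length in seconds of the time span covered by both recordings (0.0 if none)."""
    end_a = start_a + timedelta(seconds=dur_a)
    end_b = start_b + timedelta(seconds=dur_b)
    latest_start = max(start_a, start_b)
    earliest_end = min(end_a, end_b)
    return max(0.0, (earliest_end - latest_start).total_seconds())

# Start times as decoded from the YaLTC tracks in the log above.
zoom_start = datetime.fromisoformat("2021-10-13 01:45:08.263145+00:00")
fuji_start = datetime.fromisoformat("2021-10-13 01:45:14.361967+00:00")

# Hypothetical clip durations in seconds (not given in the log).
zoom_dur, fuji_dur = 60.0, 30.0

# How much later the camera started than the recorder.
offset = (fuji_start - zoom_start).total_seconds()
overlap = overlap_seconds(zoom_start, zoom_dur, fuji_start, fuji_dur)
print(f"camera started {offset:.6f} s after the recorder, overlap {overlap:.1f} s")
```

Two files would form a pair whenever their overlap is non-zero; the offset then tells how much of the earlier recording precedes the later one.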

Tadaam, fuji.mp4 automagically synced!

-

Synced !

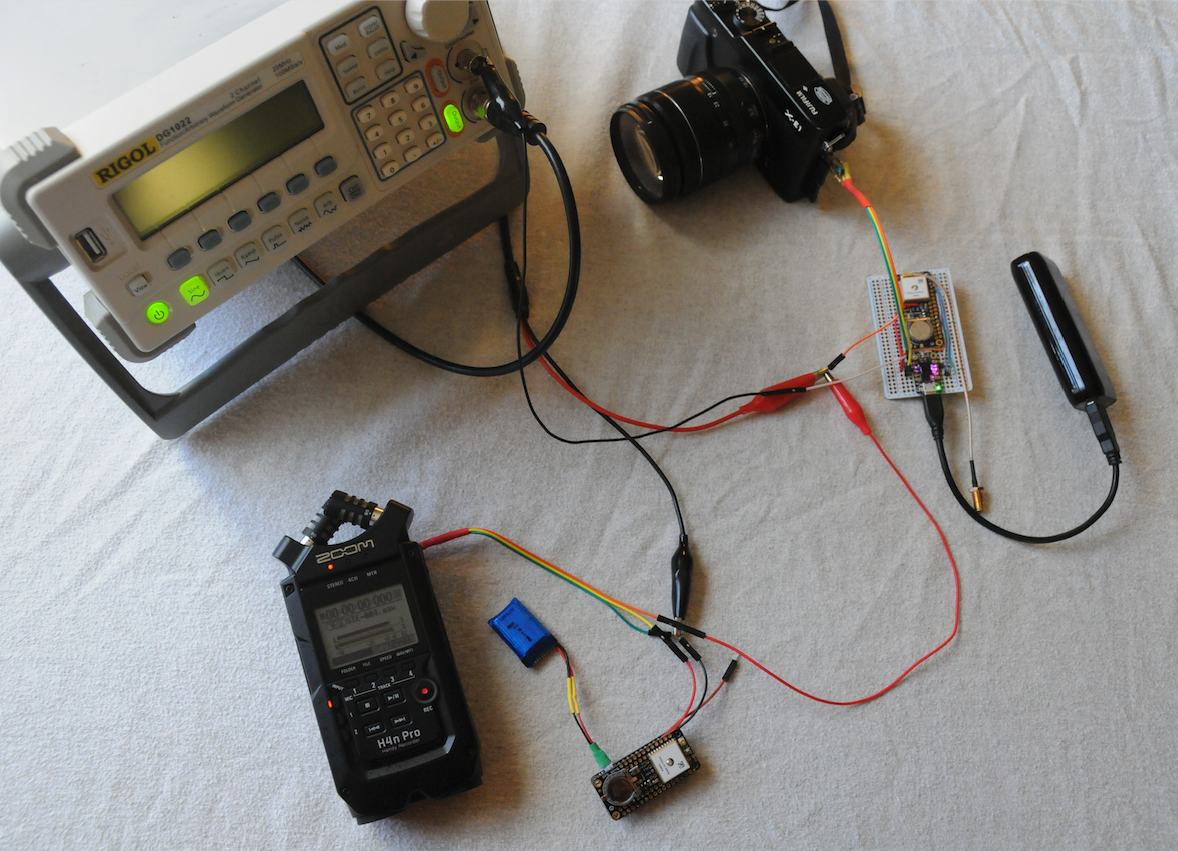

09/11/2021 at 14:17 • 0 comments

As a test of system performance, the same pilot tone (a continuous 220 Hz sine wave) was recorded simultaneously by two devices, a ZOOM H4n Pro and a FUJIFILM X-E1, each alongside its atomicsynchronator dongle (the recordings were started one after the other, so they were out of sync by approx. 3 seconds).


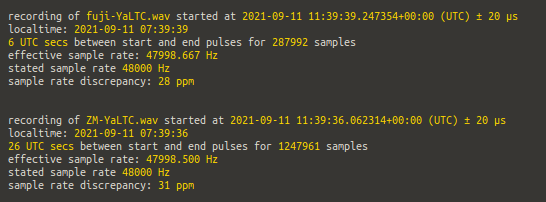

Each device recorded two channels: the common 220 Hz continuous sine wave (CSW) and the YaLTC signal from its respective synchronator (an Adafruit Feather-based one for the H4n and a Trinket M0-based one for the FUJI). The camera was used as a stereo sound recorder, the movie itself being discarded.

The two tone tracks were synchronized using the timestamps deduced from their YaLTC tracks and mixed into a single stereo file (the earlier recording is simply trimmed by the exact number of samples so that both start at the same time). Below is the decoding program's output; we see that the H4n (ZM-YaLTC.wav) was started 39.247354 - 36.062314 = 3.185040 seconds earlier. Take note: 7 out of 8 of those yummy digits are significant!
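The trimming step can be sketched as follows. This is a minimal illustration, not the actual decoding program: the function names are hypothetical, and the 48 kHz sample rate is an assumption (it is the rate at which 4 samples last about 83 μs).

```python
SAMPLE_RATE = 48000  # assumption: both devices record at 48 kHz

def samples_to_trim(early_start_s, late_start_s, sample_rate=SAMPLE_RATE):
    """Number of samples to drop from the head of the earlier recording."""
    return round((late_start_s - early_start_s) * sample_rate)

def align(early_track, late_track, n_trim):
    """Trim the earlier track, then cut both to a common length."""
    trimmed = early_track[n_trim:]
    n = min(len(trimmed), len(late_track))
    return trimmed[:n], late_track[:n]

# Start times (seconds part only) from the decoder output quoted above.
n_trim = samples_to_trim(36.062314, 39.247354)
print(n_trim, "samples trimmed from the H4n track")
```

After `align`, sample k of one track and sample k of the other correspond to the same instant, to within the precision of the decoded timestamps.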


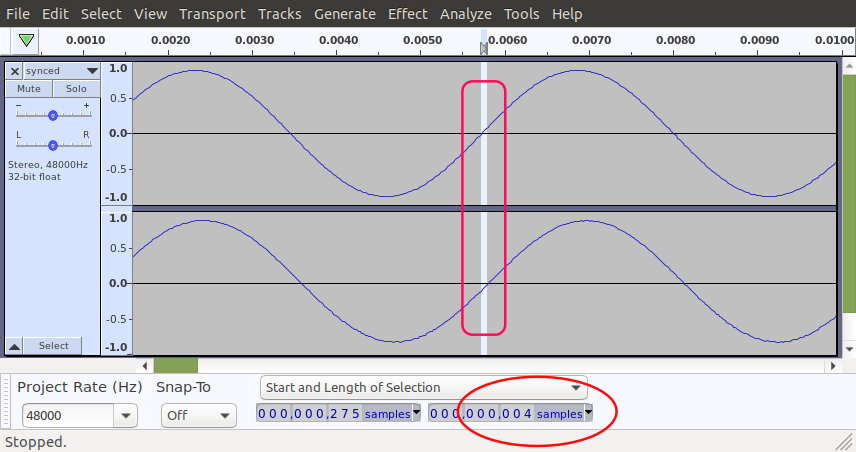

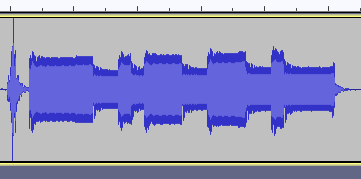

The precision of the sync process is then given by how much the left and right 220 Hz recordings are out of phase:

![]()

They're out of sync by a mere 4 samples: an 83 μs offset! (For reference, typical video frames are spaced 33000 μs apart.) Cool.
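For the record, the arithmetic behind those numbers is shown below. The 48 kHz sample rate and the 30 fps frame rate are assumptions consistent with the figures quoted above; the helper name is hypothetical.

```python
def samples_to_microseconds(n_samples, sample_rate_hz):
    """Duration of n_samples at the given sample rate, in microseconds."""
    return n_samples / sample_rate_hz * 1e6

offset_us = samples_to_microseconds(4, 48000)  # assumed 48 kHz audio
frame_us = 1e6 / 30                            # assumed 30 fps video
print(f"{offset_us:.1f} us, i.e. {100 * offset_us / frame_us:.2f} % of a frame")
```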

-

But it's only parts per million!

08/05/2021 at 01:04 • 0 comments

The atomicsynchronator encoding is precise enough to evaluate each device's *effective* sample rate: my Nikon D300S sound is spec'd at 44100 Hz but really runs at 44099.688 Hz (all digits are significant), a discrepancy more simply expressed in ppm (here 7 ppm). My Fuji X-E1 shows an error of 33 ppm. Why is this consequential? Because when sound and video from two distinct devices are synced at the clip start, the tracks slowly drift apart, and for long enough takes (interviews, live shows) the drift becomes noticeable.

How long, you ask? Take the maximum acceptable offset between video and sound to be 15 milliseconds (cf link); the drift reaches this small interval once it amounts to 33 ppm of the elapsed recording time, hence T = (1e6/33)*15e-3 ≈ 454 seconds! After about 8 minutes the drift is out of spec... The solution? Stretch (or compress) one of the sound tracks to match the reference track's frequency using sox prior to merging sound.
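The numbers above can be reproduced with a few lines. This is a sketch with hypothetical function names; in particular, feeding the resulting factor to SoX's `speed` effect is only one plausible way of doing the stretch, not necessarily the one used here.

```python
def ppm_error(nominal_hz, measured_hz):
    """Relative sample-rate error in parts per million."""
    return abs(nominal_hz - measured_hz) / nominal_hz * 1e6

def seconds_until_drift(ppm, max_offset_s):
    """Recording time after which a clock error of `ppm` accumulates max_offset_s."""
    return max_offset_s / (ppm * 1e-6)

def speed_factor(measured_hz, nominal_hz, ref_measured_hz, ref_nominal_hz):
    """Playback speed factor making a track last as long as the reference track.

    A factor below 1 lengthens the track, above 1 shortens it.
    """
    return (measured_hz / nominal_hz) / (ref_measured_hz / ref_nominal_hz)

print(round(ppm_error(44100, 44099.688), 1), "ppm for the D300S")
print(round(seconds_until_drift(33, 15e-3)), "s before a 33 ppm error exceeds 15 ms")
# Example: D300S sound against an ideal 44100 Hz reference (hypothetical pairing).
print(speed_factor(44099.688, 44100, 44100, 44100))
```

The design choice is to express the correction as a ratio of *effective to nominal* rates for both devices, so the same function works whichever track is taken as the reference.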

-

Important milestone: 2FSK demodulation working!

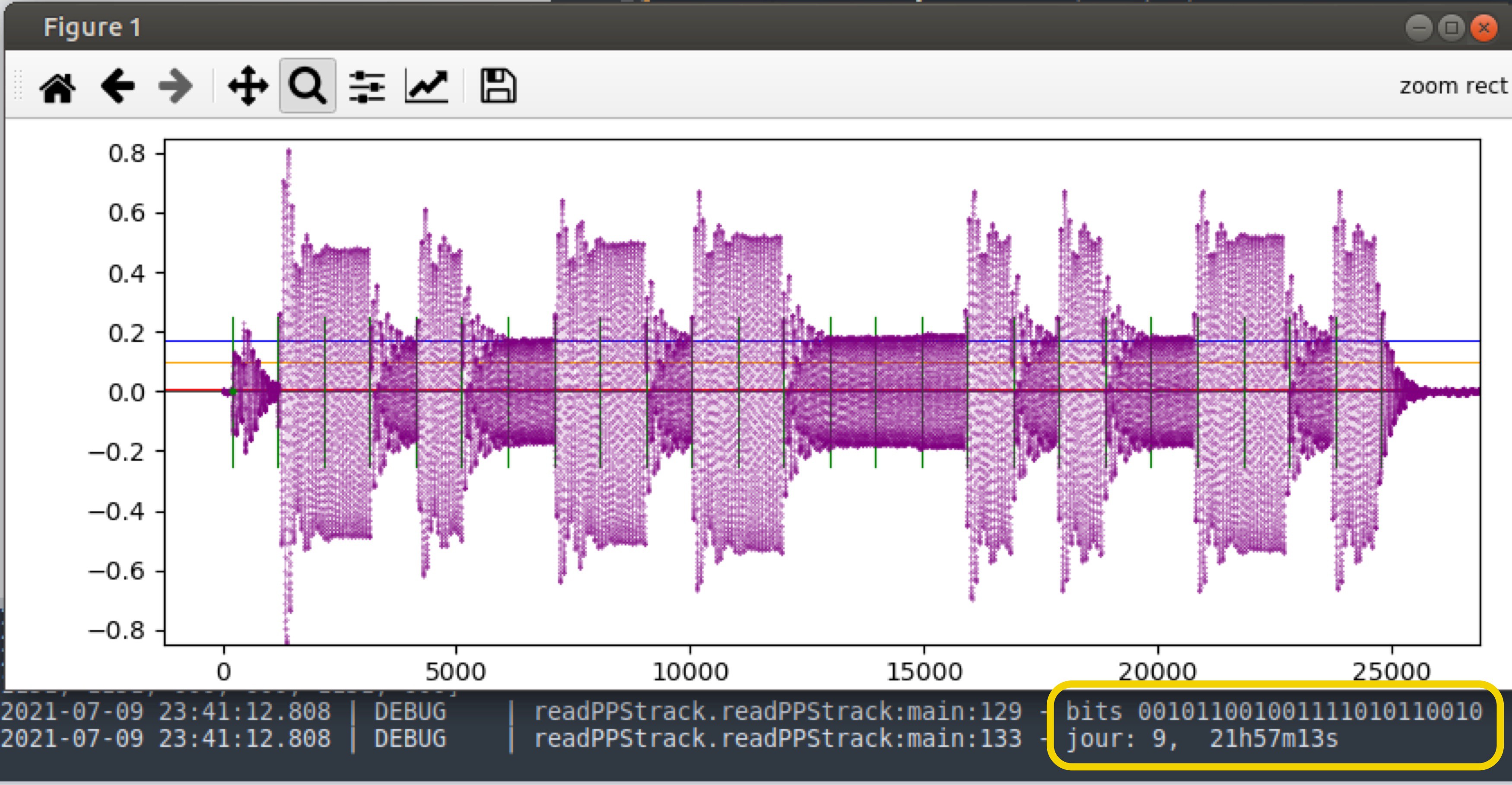

07/10/2021 at 04:01 • 0 comments

Ouvrez le champagne!

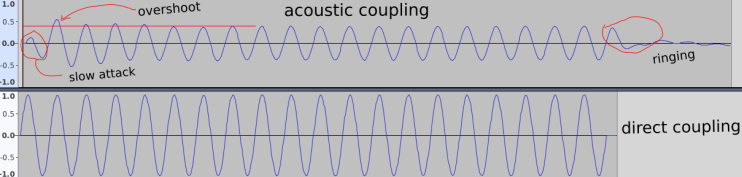

With the Canon, acoustic coupling:

![]()

Next step: whipping up a program 'syncNmerge' with ffmpeg as engine.

-

Almost a Kickstarter sales pitch

06/22/2021 at 01:09 • 0 comments

8-)

-

Back to a square

06/15/2021 at 23:16 • 0 comments

I didn't tell you I changed, yet again, the synchronization pulse, did I? It's a square! Other shapes are too distorted when acoustic coupling is used, and it was impossible to detect them robustly. See and hear the result for yourself (filmed on a Nikon D300S with mic input):

-

Working on doc

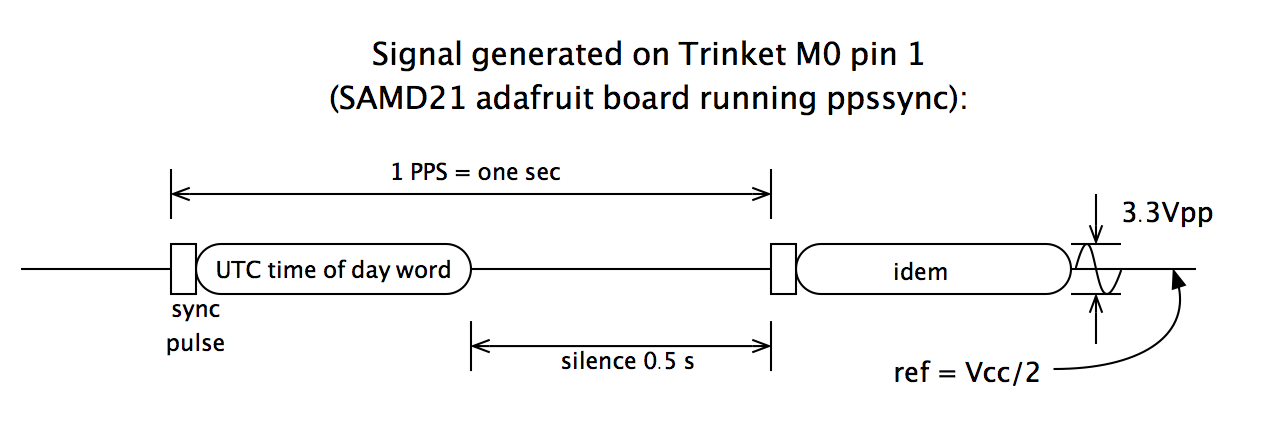

06/15/2021 at 01:15 • 0 comments

Signal:

![]()

Encoding:

![]()

-

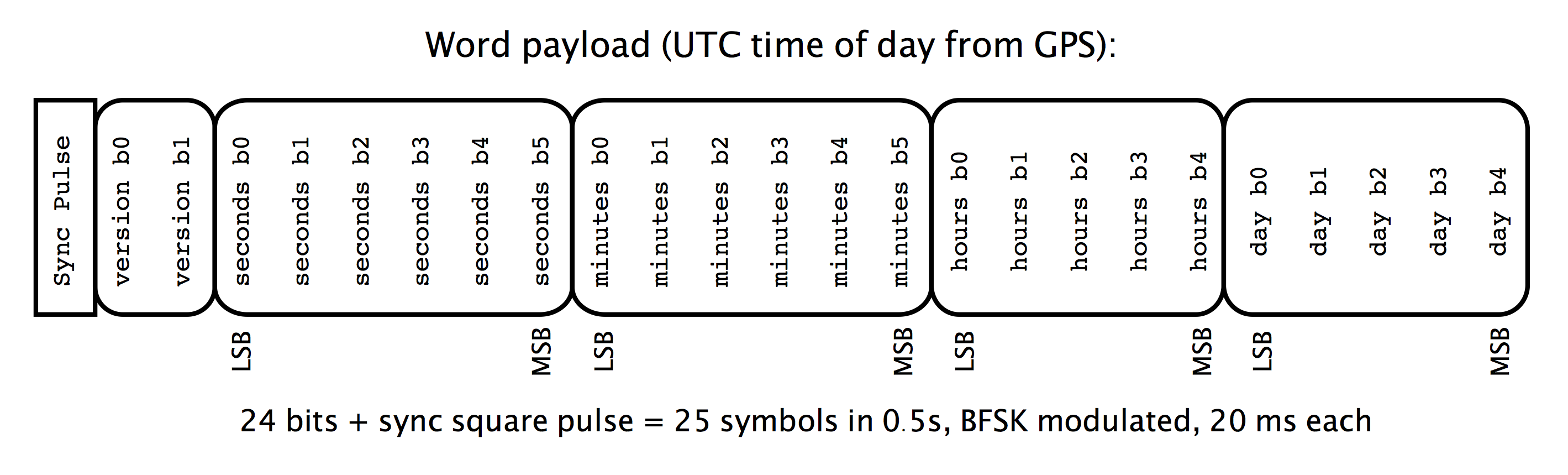

Found the right sync pulse shape

06/02/2021 at 16:45 • 0 comments

But still, I'm stuck with strange artifacts (nothing too serious)... Note the exponential decay in the camera recording (a Canon PowerShot ELPH 330). Gonna do the same measurements with my Nikon D300S.

![]()

-

Celebrated too early

06/01/2021 at 21:00 • 0 comments

Well, I rejoiced too soon. A much larger sample (a 10 min take) revealed that the pulse location is much less robust than I thought... back to the drawing board.

Restating project goals

For those who just jumped in, here's a recap: I want to design and implement an audio time code (and accompanying hardware) for automated video synchronization that is cheaper than existing solutions, works on cameras without a sound input, and uses off-the-shelf components.

![]()

Acoustic coupling challenges

I want to locate pulses in the recorded audio with the highest possible precision. Typical cameras use a sampling frequency of 48 kHz, so I’m aiming at a maximum variation of about 4-5 sampling periods, i.e. ±100 μs. For now I’m still exploring which pulse shape is best at avoiding transients in the recordings.

Fighting against the camera automatic gain control

I generate this symmetrical shape from the SAMD21 DAC output and feed it to a small headphone speaker:

![]()

And here’s the resulting sound recording, note the asymmetry now present:

![]()

I guess I should slow down the attack to avoid triggering the AGC too heavily, and I should generate a signal that is already odd (odd as opposed to even symmetry).

Something like a tri-level sync pulse (surprise!) and aim at the zero-crossing point as the timing anchor.
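Using a zero crossing as the timing anchor allows sub-sample localization through linear interpolation between the two samples that straddle zero. The sketch below illustrates the idea only; the function name, the `threshold` parameter and the test signal are hypothetical.

```python
def zero_crossing(samples, threshold=0.1):
    """Fractional index of the first zero crossing after the signal exceeds threshold.

    Returns None if the signal never exceeds the threshold or never changes sign.
    """
    # Skip leading silence: wait for the first sample that is clearly part of the pulse.
    start = next((i for i, s in enumerate(samples) if abs(s) >= threshold), None)
    if start is None:
        return None
    for i in range(start, len(samples) - 1):
        a, b = samples[i], samples[i + 1]
        if (a > 0) != (b > 0):          # the sign changes between i and i + 1
            return i + a / (a - b)      # linear interpolation of the crossing
    return None

# Hypothetical odd-symmetric pulse preceded by silence.
pulse = [0.0, 0.0, 0.5, 1.0, 0.4, -0.4, -1.0, -0.5, 0.0]
print(zero_crossing(pulse))
```

The steeper the slope at the crossing, the less a given amount of noise shifts the interpolated position.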

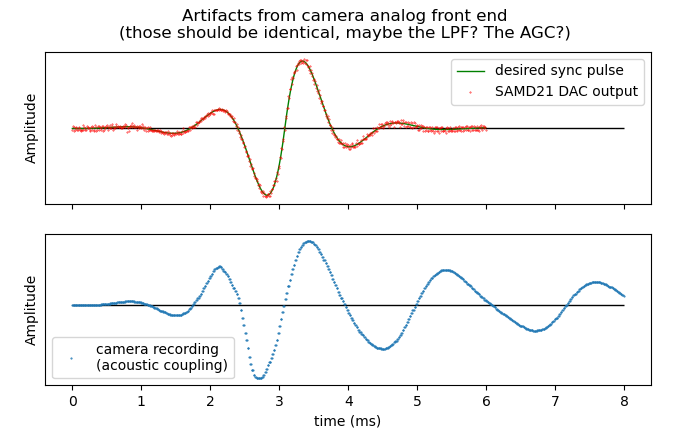

Time of day (UTC) is done and is part of the 23-bit BFSK word following the aforementioned sync pulse, whose location I'm still optimizing:

![]()

Note: I don't modulate the amplitude; the variation is an artifact of some non-flatness in the response curves (mic, speaker, air gap?). The two frequencies used are 1000 and 1800 Hz.
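To make the BFSK idea concrete, here is a round-trip sketch in pure Python. Only the two frequencies and the 23-bit word length come from the text; the bit duration, the sample rate, the mapping of 0 and 1 to the two tones, and the payload bits are all assumptions, and the decoder is a plain per-bit tone-power comparison rather than the project's actual demodulator.

```python
import math

SAMPLE_RATE = 48000              # assumption
F_ZERO, F_ONE = 1000.0, 1800.0   # frequencies from the text; the 0/1 mapping is assumed
BIT_SAMPLES = 480                # assumption: 10 ms per bit (whole cycles of both tones)

def bfsk_encode(bits):
    """Phase-continuous BFSK: one tone burst per bit."""
    out, phase = [], 0.0
    for bit in bits:
        step = 2 * math.pi * (F_ONE if bit else F_ZERO) / SAMPLE_RATE
        for _ in range(BIT_SAMPLES):
            out.append(math.sin(phase))
            phase += step
    return out

def tone_power(chunk, freq):
    """Power of `chunk` at `freq`, by correlation with a sine and a cosine."""
    w = 2 * math.pi * freq / SAMPLE_RATE
    i = sum(s * math.cos(w * n) for n, s in enumerate(chunk))
    q = sum(s * math.sin(w * n) for n, s in enumerate(chunk))
    return i * i + q * q

def bfsk_decode(samples):
    """Decide each bit by comparing the power at the two tones."""
    bits = []
    for k in range(0, len(samples) - BIT_SAMPLES + 1, BIT_SAMPLES):
        chunk = samples[k:k + BIT_SAMPLES]
        bits.append(1 if tone_power(chunk, F_ONE) > tone_power(chunk, F_ZERO) else 0)
    return bits

# Arbitrary 23-bit payload (hypothetical; the real word layout is not described here).
word = [(0x2A5F13 >> i) & 1 for i in range(23)]
decoded = bfsk_decode(bfsk_encode(word))
print(decoded == word)
```

Because the decision only compares power at the two tones within each bit, a slow amplitude variation like the one visible in the recording does not affect it much.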

-

Acoustical problems

05/25/2021 at 00:59 • 0 comments

aaaahh... Direct recording of the sync pulse is OK. See the second track below: exactly 20 cycles. "Direct" means with electrical connections between the board and my computer's audio line in.

But acoustical coupling shows transients that will make postprocessing harder...

Acoustical coupling for board → speaker → mike → computer ADC

![]()

I'd rather try to shape the pulse with a Gaussian on the SAMD21 to avoid those.
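A Gaussian-shaped burst could be precomputed as a lookup table for the DAC, along these lines. This is a sketch only: the 20 cycles come from the text, while the carrier frequency, the output sample rate, the envelope width and the function name are assumptions, and the table is scaled to the 10-bit range of the SAMD21 DAC.

```python
import math

def gaussian_burst_table(n_cycles=20, freq=1000.0, sample_rate=48000,
                         sigma_fraction=0.15, dac_max=1023):
    """Sine burst under a Gaussian envelope, quantized to DAC codes 0..dac_max."""
    n = round(n_cycles * sample_rate / freq)   # total number of samples in the burst
    center = (n - 1) / 2
    sigma = sigma_fraction * n                 # envelope width, in samples
    table = []
    for i in range(n):
        envelope = math.exp(-0.5 * ((i - center) / sigma) ** 2)
        value = envelope * math.sin(2 * math.pi * freq * i / sample_rate)
        table.append(round(dac_max / 2 * (1 + value)))   # map -1..1 to 0..dac_max
    return table

table = gaussian_burst_table()
print(len(table), min(table), max(table))
```

The envelope brings the burst smoothly in and out of mid-scale, which is the point of the exercise: no abrupt edges at the start and end of the pulse.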

Atomic Synchronator => TicTacSync

Beyond '67 SMPTE timecode: YaLTC is a new GPS based AUX syncing track format for dual-system sound based on affordable hardware

Raymond Lutz
