With time running out, I made a push to add a few features before finishing things up. In the course of this, I was able to add more animations and icons for the Neopixel matrix, add a system for playing songs, and make a couple of final adjustments.

Adding Animations

One of the early objectives was to better utilize the flexibility offered by the Neopixel matrix. To that end, I expanded the sprite sheet to include more animations and icons.

Before I get too far into discussing my new way of handling these icons and animations, I will briefly discuss how it worked in last year's version of the costume. The original testNeopixel.py (as seen in the speechRec branch in the GitHub repo) is divided into two main parts. The first is the displayImg() function, which takes in a monochrome green 16x16 .gif and displays it on the LED matrix. In addition to the .gif, it takes a reflect parameter. When true, the .gif is displayed flipped across the vertical center axis of the matrix. This was used to reduce the number of frames needed for the eye animation: rather than drawing the eye looking both left and right, only left was drawn, and reflection was used to make it look right.
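To make the reflection trick concrete, here is a minimal Python sketch. It is not the original displayImg(): the helper names reflect_frame() and frame_to_pixels() are hypothetical, the .gif decoding is left out, and a frame is assumed to already be a 16x16 list of green brightness values. Reversing each row is all it takes to turn a "looking left" frame into a "looking right" one.

```python
from typing import List, Tuple

# Hypothetical representation: 16 rows of 16 green brightness values (0-255).
Frame = List[List[int]]
SIZE = 16


def reflect_frame(frame: Frame) -> Frame:
    """Mirror a frame across its vertical center axis (left <-> right)."""
    return [list(reversed(row)) for row in frame]


def frame_to_pixels(frame: Frame, reflect: bool = False) -> List[Tuple[int, int, int]]:
    """Flatten a frame into (r, g, b) tuples in row-major order.

    Only the green channel is used, since the frames are monochrome green.
    Assumes a plain row-major pixel order; many matrices are wired in a
    zigzag and would also need every other row reversed.
    """
    if len(frame) != SIZE or any(len(row) != SIZE for row in frame):
        raise ValueError("expected a 16x16 frame")
    if reflect:
        frame = reflect_frame(frame)
    return [(0, g, 0) for row in frame for g in row]
```

The resulting list could then be written to whatever Neopixel driver the project uses. Because reflecting twice gives back the original frame, only the left-looking frames ever need to be drawn.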

The second part of the old implementation was a simple state machine in which each state represents an eye position (looking far left, left, right, or far right). The far-left state, for example, randomly either remains in the far-left state for a random amount of time or changes to the left state. Similarly, the left state can randomly transition to the right or far-left states, stay in its current state with no animation, or stay in its current state while executing a blink.
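The old eye logic can be sketched as a weighted transition table. This is an illustration under assumptions, not the code from the speechRec branch: the state names, the weights, the 0.5 to 3 second dwell range, and the right-side states (assumed to mirror the left-side ones) are all made up for the example.

```python
import random
from typing import Dict, List, Tuple

# Each option is (next_state, blink, weight). All weights are illustrative guesses.
TRANSITIONS: Dict[str, List[Tuple[str, bool, float]]] = {
    "far_left": [("far_left", False, 0.7), ("left", False, 0.3)],
    "left": [("left", False, 0.4), ("left", True, 0.2),
             ("right", False, 0.2), ("far_left", False, 0.2)],
    "right": [("right", False, 0.4), ("right", True, 0.2),
              ("left", False, 0.2), ("far_right", False, 0.2)],
    "far_right": [("far_right", False, 0.7), ("right", False, 0.3)],
}


def step(state: str, rng: random.Random) -> Tuple[str, bool, float]:
    """Pick the next eye state, whether to blink, and how long to hold it."""
    options = TRANSITIONS[state]
    weights = [weight for _, _, weight in options]
    next_state, blink, _ = rng.choices(options, weights=weights)[0]
    hold_seconds = rng.uniform(0.5, 3.0)  # random dwell time (assumed range)
    return next_state, blink, hold_seconds
```

A display loop would call step() repeatedly, show the frame for the returned state (reflected for the right-side states), optionally play a blink, and sleep for hold_seconds. Keeping the transitions in a table rather than in nested if statements means tweaking the probabilities is just a matter of editing numbers.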

The new implementation adds a metastate, turning the eye logic into a nested state machine. If the metastate is "default", the old eye state machine runs with minimal differences (the timing was tweaked a little). However, the metastate can also be used to switch to other animations or static icons, such as a moving clock or a jack-o-lantern. The metastate is read from a shared.pkl file, which serves as the interface between the speech recognition and display-driving programs. This is ugly and more than a little bit of a mess, but I just needed something that would work without having to learn threading.

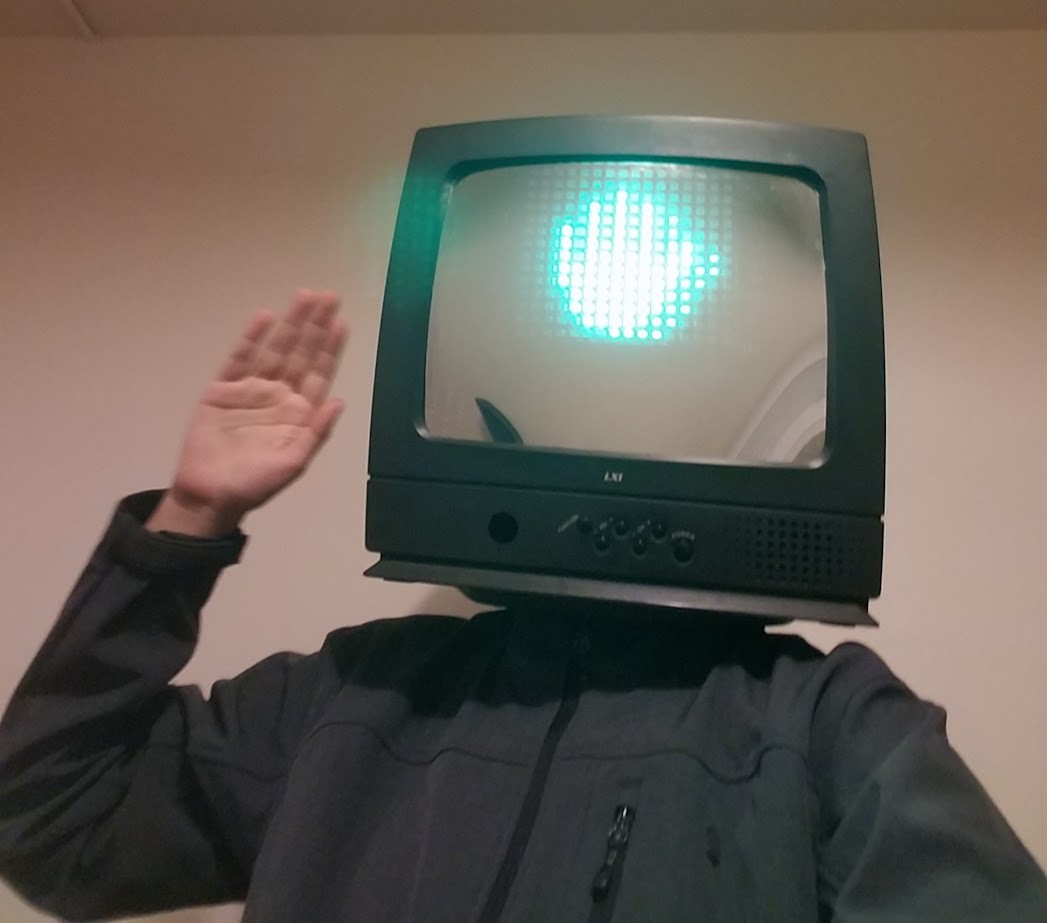
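The shared.pkl handoff described above boils down to two small functions. The sketch below is an assumption about how it could be done rather than the actual code: write_metastate() and read_metastate() are hypothetical names, and the write goes through a temporary file plus os.replace() so the display side never unpickles a half-written file. Any read failure falls back to "default", i.e. the normal eye animation.

```python
import os
import pickle
import tempfile

SHARED_PATH = "shared.pkl"
DEFAULT_STATE = "default"


def write_metastate(state: str, path: str = SHARED_PATH) -> None:
    """Speech-recognition side: publish a new metastate."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temp file in the same directory, then swap it into place.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "wb") as f:
        pickle.dump(state, f)
    # os.replace is atomic on POSIX when both paths are on the same filesystem.
    os.replace(tmp_path, path)


def read_metastate(path: str = SHARED_PATH) -> str:
    """Display side: read the current metastate, falling back to "default"."""
    try:
        with open(path, "rb") as f:
            return pickle.load(f)
    except (FileNotFoundError, EOFError, pickle.UnpicklingError):
        return DEFAULT_STATE
```

On the display side, read_metastate() would be called once per loop iteration: "default" runs the eye state machine, and any other value selects an animation or icon.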

The speech recognition side is fairly simple. Once speech is recognized, it is checked for validity: it must be neither an empty string nor just "he", since random noise was often recognized as "he". From there, it is checked against a set of command words. If it is a command word, shared.pkl is updated with the new state for testNeopixel.py, and the word is spoken if needed. For instance, "yes" sets the state to "check" (displaying a checkmark on the LED matrix) while the word is spoken. On the other hand, "waving" is the command word for starting the waving animation, and espeak does not repeat it. If the recognized text is not a command word, espeak simply says it without any change to shared.pkl. The result can be seen below, with me wearing the helmet. It's not the best footage, but hopefully it's a good proof of life.
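The command handling can be sketched as a pure function that decides what to do with a recognized string. This is a hypothetical sketch: the COMMANDS table only contains the two examples mentioned above, the "waving" metastate name is a guess, and handle_text() returns its decision instead of acting on it so it is easy to test. The say() helper shells out to the espeak command-line tool.

```python
import subprocess
from typing import Optional, Tuple

# Hypothetical command table: word -> (metastate to write, whether espeak says it).
COMMANDS = {
    "yes": ("check", True),
    "waving": ("waving", False),  # metastate name is a guess
}


def is_valid(text: str) -> bool:
    """Reject empty results and the spurious "he" that noise tends to produce."""
    return text.strip().lower() not in ("", "he")


def handle_text(text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (new metastate or None, text to speak or None)."""
    if not is_valid(text):
        return None, None
    word = text.strip().lower()
    if word in COMMANDS:
        state, speak = COMMANDS[word]
        return state, (word if speak else None)
    # Not a command: just say it and leave shared.pkl alone.
    return None, text


def say(text: str) -> None:
    """Speak text with the espeak CLI (must be installed on the Pi)."""
    subprocess.run(["espeak", text], check=False)
```

A caller would write the returned state (when it is not None) to shared.pkl and pass the returned text (when it is not None) to say().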

Adding Music

As a last fun feature, I decided that the music note icon could use some actual music. The desired functionality is to play a random .mp3 file (8-bit classical music, in my case) from a folder when the note icon is selected, while any change of state (saying anything) stops the music. The solution was simply to use the Python VLC module.

Although this seemed simple, I ran into two issues. First, the speech recognition system would hear the music. The music wasn't loud enough to make it start recording a sample, but it was loud enough to keep it from ending the sample so it could be processed. As a result, nothing I said was processed until the music stopped, which meant I couldn't stop the music by speaking. Luckily, the solution was easy to find. While I lamented the cost of the Samson Go Mic earlier, it has redeemed itself: it has two microphones (front and back), which allows it to be directional. By enabling the directional mode and slightly reducing the music volume, the music was no longer loud enough to keep the voice recognition system recording.
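Here is a sketch of the music behavior using the python-vlc package (vlc.MediaPlayer with its play(), stop(), and audio_set_volume() methods). MusicController, the "note" metastate name, and the default volume of 70 are hypothetical; the player factory is injectable so the logic can be exercised without audio hardware. Only *.mp3 files are considered, so a credits.txt in the same folder is never picked.

```python
import random
from pathlib import Path
from typing import Callable, Optional


def _vlc_player(path: str, volume: int):
    """Create and start a python-vlc player (imported lazily)."""
    import vlc  # provided by the python-vlc package

    player = vlc.MediaPlayer(path)
    player.play()
    # Lower the volume (0-100) so the mic is less likely to pick it up.
    # Depending on the libvlc version, this may need to be re-applied once
    # playback has actually started.
    player.audio_set_volume(volume)
    return player


class MusicController:
    """Plays a random .mp3 while the note icon is shown; stops on any change."""

    def __init__(self, folder: str, volume: int = 70,
                 make_player: Callable = _vlc_player,
                 rng: Optional[random.Random] = None):
        self.folder = Path(folder)
        self.volume = volume
        self.make_player = make_player
        self.rng = rng or random.Random()
        self.player = None

    def pick_track(self) -> Path:
        tracks = sorted(self.folder.glob("*.mp3"))  # skips credits.txt
        if not tracks:
            raise FileNotFoundError(f"no .mp3 files in {self.folder}")
        return self.rng.choice(tracks)

    def stop(self) -> None:
        if self.player is not None:
            self.player.stop()
            self.player = None

    def on_state_change(self, new_state: str) -> None:
        """Call on every recognized utterance or state change."""
        self.stop()  # saying anything stops the current song
        if new_state == "note":  # hypothetical music-note metastate name
            self.player = self.make_player(str(self.pick_track()), self.volume)
```

Calling on_state_change() for every recognized utterance gives the "saying anything stops the music" behavior, since stop() always runs before a new song is (possibly) started.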

I first implemented the music playback in testNeopixel.py, but that was ill-fated. For reasons that are not entirely clear to me, I could not get it to autostart without breaking either the audio or the Neopixels. The solution was simply to play the music from mic_vad_streaming.py instead. As an aside, the music folder contains both the music (files labeled 1-11) and a credits.txt file that lists the name and source of each piece.

Final Adjustments

Final adjustments have been pretty simple. First, I replaced the acrylic one-way mirror, which had been damaged because I wasn't careful enough when storing and transporting the costume over the past year. Luckily, the acrylic panel was inexpensive and easy to replace.

Second, I made a final pass of cable management, if you can call it that. Just some cable ties and hot glue to keep wires out of my face.

Third, I made both testNeopixel.py and mic_vad_streaming.py start automatically. I had some trouble with the audio and with needing to run as the default pi user (which has the libraries installed), but other than that, it is a fairly standard use of /etc/rc.local.

Finally, I made a couple of minor comfort improvements. First, I moved the battery from the side to the back of the helmet, improving its balance. Second, I got a haircut. With long hair, I had to tilt the hard hat forward before putting it on and tightening it, or my hair would end up in my face. Unfortunately, there is very little room to tilt the hard hat when the costume is assembled, so I had to screw the back and front pieces together while wearing it. With shorter hair, I can put the helmet on fully assembled without hair getting in my face.
