As I said in the description, Aina is an open-source humanoid robot whose aim is to interact not only with its environment, by moving through it and grasping light objects, but also with humans, through voice and facial expressions. Once finished, the robot will be able to accomplish several tasks, such as:

- Assistance: Interaction to assist, for example, elderly people, students, and office workers by remembering events and looking for unusual circumstances in the home, among others.

- Development: Aina is also a modular platform for testing robotics and deep learning algorithms.

- Hosting: It can guide people in different situations and places to inform, help, or even entertain someone.

These tasks are by no means far from reality; a good example is the Pepper robot, used in banks, hotels, and even homes. So let's think about which characteristics the robot must have to do similar things:

- Navigation: It must be able to move through the environment.

- Facial expressions: It must have a good-looking face with a sufficient set of emotions.

- Modularity: Its software and hardware must be easy to modify.

- Recognition: It must recognize objects using deep learning techniques.

- Socialization: It must be able to talk like a chatbot.

- Open source: Based on open-source elements as much as possible.

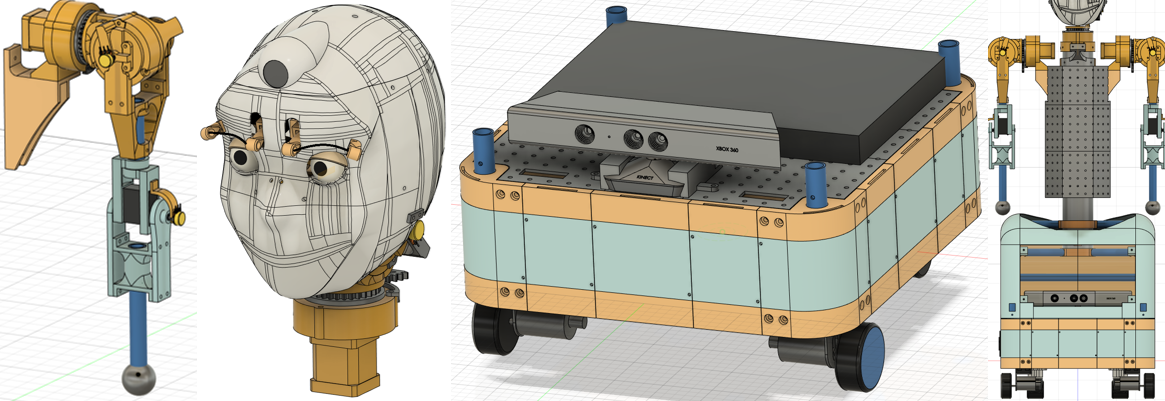

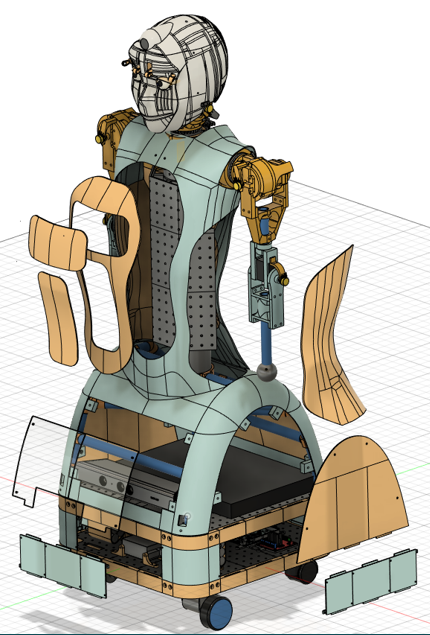

Because there is a lot to do, a good strategy is to divide and conquer. For this reason, the project is separated into three main subsystems and a minor one:

- Head: This is the part that enables the emotional side of communication through facial expressions with the eyes and eyebrows (and sometimes the neck). No mouth is added because I think the noise of it moving could be annoying when the robot talks.

- Arms: Not much to say. The arms are designed in an anthropomorphic way, and due to financial constraints they can't have high torque, but I can move them with NEMA 14 motors and 3D-printed cycloidal gears to gesticulate and grasp very light things, so it's fine.

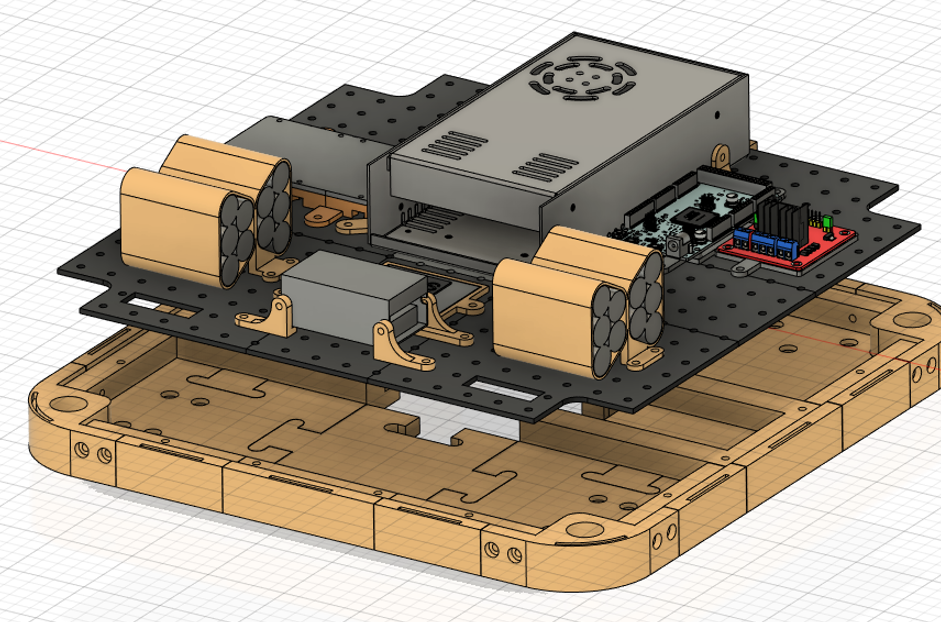

- Mobile base: This is the part that enables the robot to map its surroundings, localize itself, and move through the world using visual SLAM with a Kinect V1.

- Torso: The middle part that interconnects the other subsystems.
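The arms rely on 3D-printed cycloidal gears to get usable torque out of small NEMA 14 motors. As a rough sketch of why that works, the snippet below computes the reduction of a single-stage cycloidal drive, assuming the common layout where the disc has one lobe fewer than the ring has pins (so the ratio equals the lobe count and the output turns opposite to the input). The pin count, motor torque, and efficiency values are hypothetical placeholders, not Aina's actual figures.

```python
def cycloidal_ratio(ring_pins: int) -> int:
    """Reduction ratio of a single-stage cycloidal drive.

    Assumes the disc has one lobe fewer than the ring has pins,
    so the ratio equals the number of lobes (ring_pins - 1).
    """
    if ring_pins < 3:
        raise ValueError("need at least 3 ring pins")
    return ring_pins - 1


def output_speed_rpm(motor_rpm: float, ring_pins: int) -> float:
    # Negative sign: the output turns opposite to the input shaft.
    return -motor_rpm / cycloidal_ratio(ring_pins)


def output_torque_nm(motor_torque_nm: float, ring_pins: int, efficiency: float) -> float:
    # Ideal torque multiplication scaled by a lumped efficiency
    # (friction and backlash losses in printed parts, etc.).
    return motor_torque_nm * cycloidal_ratio(ring_pins) * efficiency


if __name__ == "__main__":
    # Hypothetical values, NOT measured on Aina.
    pins = 16            # 16 ring pins -> 15-lobe disc -> 15:1
    motor_torque = 0.3   # N*m, placeholder for a NEMA 14 motor
    efficiency = 0.7     # placeholder for a 3D-printed reducer
    print(cycloidal_ratio(pins))                                          # 15
    print(output_speed_rpm(600, pins))                                    # -40.0
    print(f"{output_torque_nm(motor_torque, pins, efficiency):.2f} N*m")  # 3.15 N*m
```

The trade-off is speed for torque: a 15:1 stage multiplies torque roughly fifteen-fold (minus losses) while dividing speed by the same factor, which suits slow gestures and light grasping.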

The general characteristics of the robot are:

- A mobile base with a 40 x 40 cm footprint.

- Total height of 1.2 meters.

- A 16.4 V, 17000 mAh battery bank.

- An estimated autonomy of one and a half hours.

- It has spaces reserved for modularity, which makes it easy to adapt to future needs.

- Each arm has 3 degrees of freedom, plus three more in the hand.

- The software is based on ROS and Docker, which makes development faster and easier.

- Part of its behavior is based on deep learning techniques.
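A quick sanity check on the battery figures: assuming the battery bank figure above means a 16.4 V pack with 17000 mAh of capacity, and that the whole capacity is usable, the stored energy and the average power draw implied by the estimated 1.5 h autonomy can be worked out as below. The helper names and the 150 W / 80% usable-capacity scenario are illustrative assumptions, not measurements.

```python
def battery_energy_wh(voltage_v: float, capacity_mah: float) -> float:
    """Stored energy in watt-hours: V * Ah."""
    return voltage_v * capacity_mah / 1000.0


def average_power_w(energy_wh: float, runtime_h: float) -> float:
    """Average draw that would empty the pack in `runtime_h` hours."""
    return energy_wh / runtime_h


def runtime_h(energy_wh: float, load_w: float, usable_fraction: float = 1.0) -> float:
    """Runtime for a constant load, optionally keeping a reserve of the capacity."""
    return energy_wh * usable_fraction / load_w


if __name__ == "__main__":
    energy = battery_energy_wh(16.4, 17000)           # assumes 16.4 is volts
    print(f"{energy:.1f} Wh")                          # 278.8 Wh
    print(f"{average_power_w(energy, 1.5):.0f} W")     # 186 W implied by 1.5 h
    # Hypothetical: 150 W load, only 80% of capacity used to protect the cells.
    print(f"{runtime_h(energy, 150.0, 0.8):.2f} h")    # 1.49 h
```

In other words, the 1.5 h estimate corresponds to an average draw of roughly 186 W across the Jetson Nano, Kinect, motors, and the rest of the electronics.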

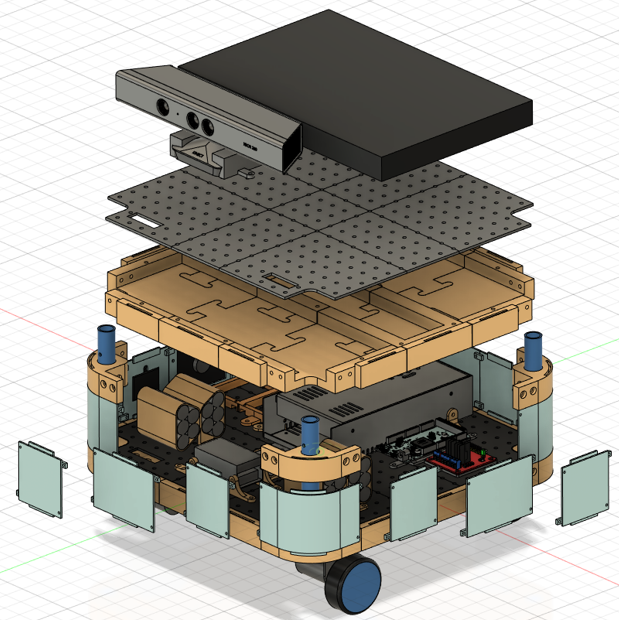

Several parts of the robot have holes or are detachable and even interchangeable. The holes are useful for attaching electronic components to the robot while keeping the possibility of removing them (or adding others) whenever necessary. Meanwhile, the detachable parts can be modified to fulfill future requirements, like adding a sensor or a screen, or just making room for more internal functionality. A good example of this is the mobile base: as you can see in the next images, there are several parts that others can modify (the light blue ones), and if you want to move or exchange an electronic part, just unscrew it.

Of course, the same principle applies to the rest of the robot.

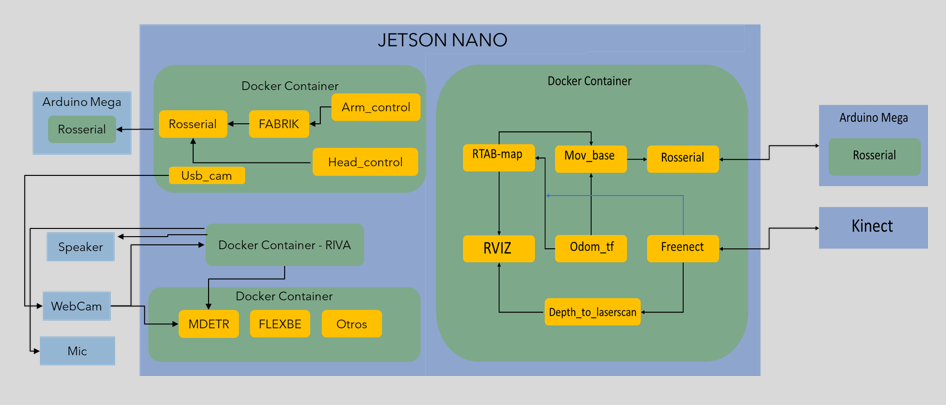

Regarding the software, as I said before, it is based on ROS and Docker. A simplified scheme of the connections between the different elements is presented in the next image.

As you can see, several containers run on a Jetson Nano, so let me clarify the purpose of each one. The Docker container on the right is responsible for navigation: it performs the whole SLAM process with a Kinect V1 and drives the non-holonomic mobile base to the goal point specified by the user. The yellow squares represent the ROS packages implemented to accomplish this task.

The upper-left container has all the programs needed to control the head, neck, and arms. To find the right angles for the arms, a heuristic inverse kinematics algorithm called FABRIK is implemented as a node (my Matlab implementation is available in the files section).

The middle-left containers have everything needed to run Riva, the conversational AI SDK provided by Nvidia (it is free, and there are no licensing issues in using it for non-commercial open-source projects). It has more capabilities, like gaze and emotion detection, which will be very useful for the social interaction part.

The last container (lower-left) has a neural network model called MDETR, a multimodal understanding model that uses images and plain text to detect objects in images. FlexBE is a ROS tool for implementing state machines or behavior schemes in a friendly graphical way, so it can be said that this container handles the "reasoning and behavior" part of the social interaction.
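The arm node uses FABRIK (Forward And Backward Reaching Inverse Kinematics). The Matlab implementation mentioned above isn't reproduced here; the following is an independent, minimal Python sketch of the textbook algorithm for an unconstrained chain, without the joint limits a real arm would need. It returns joint positions; converting them into Aina's motor angles depends on the arm's joint layout, which this log doesn't describe, so that step is left out. Function names and the example geometry are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _place(anchor: Vec3, toward: Vec3, length: float) -> Vec3:
    """Point at distance `length` from `anchor` on the line toward `toward`."""
    r = math.dist(anchor, toward)
    if r == 0.0:
        # Degenerate case (coincident points): pick +x to avoid dividing by zero.
        return (anchor[0] + length, anchor[1], anchor[2])
    lam = length / r
    return tuple(a + lam * (t - a) for a, t in zip(anchor, toward))


def fabrik(joints: Sequence[Vec3], target: Vec3,
           tol: float = 1e-4, max_iter: int = 50) -> List[Vec3]:
    """Return joint positions whose last joint reaches (or points at) `target`."""
    p = [tuple(float(c) for c in j) for j in joints]
    target = tuple(float(c) for c in target)
    lengths = [math.dist(p[i], p[i + 1]) for i in range(len(p) - 1)]
    base = p[0]

    if math.dist(base, target) > sum(lengths):
        # Unreachable: stretch the chain in a straight line toward the target.
        for i, d in enumerate(lengths):
            p[i + 1] = _place(p[i], target, d)
        return p

    for _ in range(max_iter):
        if math.dist(p[-1], target) <= tol:
            break
        # Forward reaching: put the end effector on the target, walk back to the base.
        p[-1] = target
        for i in range(len(lengths) - 1, -1, -1):
            p[i] = _place(p[i + 1], p[i], lengths[i])
        # Backward reaching: restore the fixed base, walk out to the end effector.
        p[0] = base
        for i, d in enumerate(lengths):
            p[i + 1] = _place(p[i], p[i + 1], d)
    return p


if __name__ == "__main__":
    # Three unit-length links lying on the x axis (hypothetical arm geometry).
    arm = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
    for joint in fabrik(arm, (1.0, 1.5, 0.5)):
        print(tuple(round(c, 3) for c in joint))
```

Each iteration only moves points along straight lines while preserving link lengths, with no Jacobian or matrix inversion, which is what makes FABRIK a cheap heuristic compared with Jacobian-based solvers.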

That's it for now, in the next logs more details will be presented.

Maximiliano Rojas

