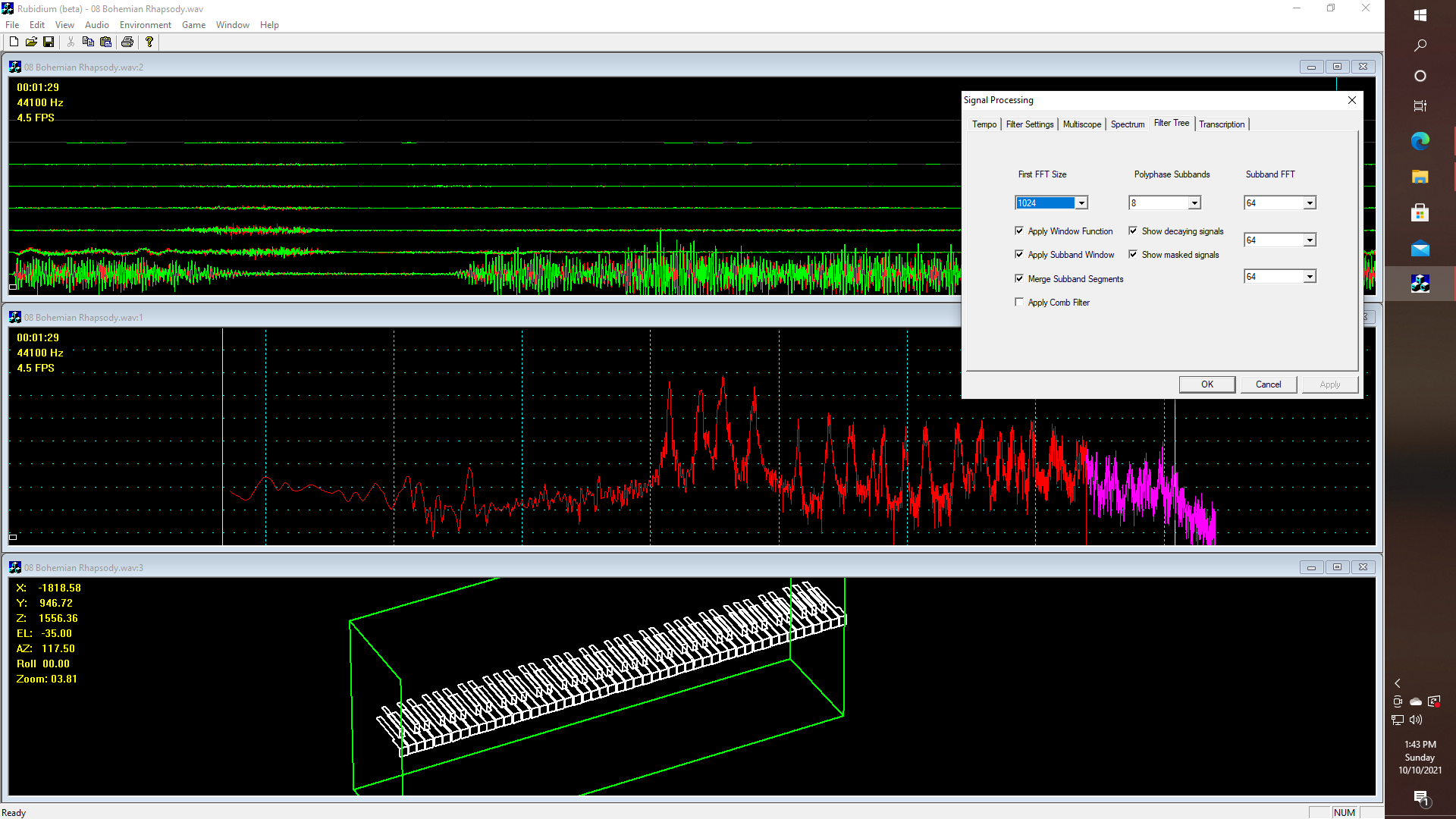

I know what you are thinking. As WOPR (pronounced "whopper") said when asked "Is this real or is this a simulation?": what's the difference? Right? What are a few megatons among friends, or former friends? On the other hand, if you have ever run spectrum analyzer software on a song like, let's say, "Bohemian Rhapsody", you might have noticed the second harmonic of the British power line frequency (50 Hz) sticking out prominently at 100 Hz, at a level of around -60 dB. Wow!! Let's have a look at a screenshot of a processed sample block from the CD:

Here is what is happening. The yellow vertical lines in the middle window are the upper and lower bounds of the range of notes available on a standard full-size piano. The minor divisions on the horizontal axis represent semitones, so there are 12 divisions per octave, based upon a so-called log-log plot of the spectrum. For this image, I am performing an initial 1024-point FFT and then breaking the output of that first FFT up into eight blocks of frequencies, as indicated by the dialog box. This is much like MP3, which uses a "filter bank" to split the spectrum up into 32 sub-bands. The blocks can then be subjected to further processing, such as in separate threads or according to different algorithms, depending on the need for beat and bass line detection, harmonic analysis, etc.
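To make the semitone axis concrete, here is a minimal Python sketch of the usual frequency-to-note mapping. It is not the code behind the screenshot; the function names are made up for illustration, and it assumes the common A4 = 440 Hz tuning with MIDI note numbers 21 (A0) and 108 (C8) as the piano limits.

```python
import math

A4_HZ = 440.0    # assumed tuning reference
A4_MIDI = 69     # MIDI note number of A4

def freq_to_midi(freq_hz):
    """Fractional MIDI note number; one unit is one semitone."""
    return A4_MIDI + 12.0 * math.log2(freq_hz / A4_HZ)

def midi_to_freq(note):
    """Frequency in Hz of a (possibly fractional) MIDI note number."""
    return A4_HZ * 2.0 ** ((note - A4_MIDI) / 12.0)

def bin_to_midi(k, fft_size=1024, sample_rate=44100):
    """Centre frequency of FFT bin k (k >= 1) as a fractional MIDI note."""
    return freq_to_midi(k * sample_rate / fft_size)

# Bounds of a standard 88-key piano, i.e. where yellow lines like the
# ones in the screenshot would go under these assumptions.
PIANO_LOW_HZ = midi_to_freq(21)    # A0, 27.5 Hz
PIANO_HIGH_HZ = midi_to_freq(108)  # C8, roughly 4186 Hz
```

With this mapping the 100 Hz hum lands closest to MIDI note 43 (G2). Note also that a 1024-point FFT at 44100 Hz has bins about 43 Hz apart, so a single FFT of that size cannot separate individual semitones at the low end of the keyboard.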

Hence, one of the things that I have found a need for is a menu that allows me to select different FFT parameterizations, so that I can try every possible combination, including ridiculous ones like million-point FFTs that split the spectrum into individual channels which are, whatever, let's say 10 Hz wide, if we wanted. In the present case, what the upper window represents is, like I said, an initial 1024-point FFT which is split up into 8 blocks of frequencies and then converted back into TIME-DOMAIN signals. Thus that window is running an eight-channel oscilloscope (actually 16 channels because of stereo pairs) that displays the output of the aforementioned "poly-phase filter tree", although such an oscilloscope could just as well be used as a "simple" logic analyzer, in the old-fashioned sense.
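The FFT, split, and convert-back idea can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions, not the actual analyzer: it masks the bins of one block with hard edges, with no windowing, overlap, or decimation, so it is a crude stand-in for a real polyphase filter bank. The names `fft`, `ifft`, and `split_bands` are hypothetical.

```python
import cmath

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(spectrum):
    """Inverse FFT using the identity ifft(X) = conj(fft(conj(X))) / n."""
    n = len(spectrum)
    y = fft([v.conjugate() for v in spectrum])
    return [v.conjugate() / n for v in y]

def split_bands(block, num_bands=8):
    """Split one real block into num_bands real time-domain signals."""
    n = len(block)
    spectrum = fft([complex(s) for s in block])
    half = n // 2
    width = half // num_bands
    bands = []
    for b in range(num_bands):
        lo = b * width
        # The last band also takes the Nyquist bin (and any leftover bins).
        hi = lo + width if b < num_bands - 1 else half + 1
        masked = [0j] * n
        for k in range(lo, hi):
            mirror = (n - k) % n          # negative-frequency partner
            masked[k] = spectrum[k]
            masked[mirror] = spectrum[mirror]
        bands.append([v.real for v in ifft(masked)])
    return bands
```

Because every bin ends up in exactly one band, adding the eight outputs back together reproduces the input block, which is a handy sanity check before putting the channels on a scope display.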

Now all of this has interesting implications. For example, an audio signal with an initial 44100 Hz sample rate (stereo) can be split into something like 128 (or 256) time-domain signals, which works as if we have made a kind of loudspeaker crossover from hell. With 128 sub-bands, the sample rate for each sub-band is about 345 Hz, since 44100 divided by 128 is 344.53. This goes a long way toward solving the event detection problem, if one of the things that we want to do is generate MIDI on and MIDI off events on a note-by-note basis. That could be part of an audio in and sheet music out kind of system, or audio in and then possibly drive a piano with light-up keys (if you have one or want to build one). Or else, if you wanted an application with light-up keys and a piano on the screen, maybe that would be an ideal project for Android, or a WebGL-based application for general use. But first, we need to create the right metadata so that we can stream it, if in the end that is what we are most likely to want to do.
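As a rough sketch of how the event side might look, the Python below works out the sub-band rate and turns a per-sample on/off gate for one note into timestamped events. Everything here is an assumption for illustration: the function names, the tuple layout, and the idea that each sub-band has already been reduced to a boolean "note is sounding" flag per sub-band sample.

```python
def subband_rate(sample_rate=44100, num_bands=128):
    """Sample rate of each sub-band after splitting into num_bands."""
    return sample_rate / num_bands

def note_events(gate, note, rate_hz):
    """Convert a sequence of booleans into (time_s, kind, note) tuples.

    gate[i] is True while the note is judged to be sounding at
    sub-band sample i; the event time is i / rate_hz seconds.
    """
    events = []
    previous = False
    for i, active in enumerate(gate):
        if active and not previous:
            events.append((i / rate_hz, "note_on", note))
        elif previous and not active:
            events.append((i / rate_hz, "note_off", note))
        previous = active
    if previous:
        # Close a note that is still sounding when the data ends.
        events.append((len(gate) / rate_hz, "note_off", note))
    return events

rate = subband_rate()   # 44100 / 128 = 344.53125 Hz
events = note_events([False, True, True, False, True], 60, rate)
```

At roughly 345 Hz, one sub-band sample lasts about 2.9 ms, so that is the timing resolution of events produced this way.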

Then there is the issue of speech recognition. Now I know that there are several "bots" on this forum that use Google speech, or Apple speech, and yet you still can't say "OK Google - take dictation", and why not? Besides that, do you really want to let Google listen to everything you say and do, with no accountability, that is to say, with NO way whatsoever to "look under the hood" and ask "just how much data is being processed locally on the device" before a query goes out to the cloud? And then what if there is an Internet outage, or a certain major provider of services starts facing other configuration issues that affect service availability?

I don't own a farm. I am not trying to convert a John Deere tractor to propane, but I do believe that I should have the right to do so if I wanted to, or to run my tractor on natural gas for that matter, if I wanted to do that.

When will they ever learn? Oh, but wait - there is something else I should mention since I did mention games.

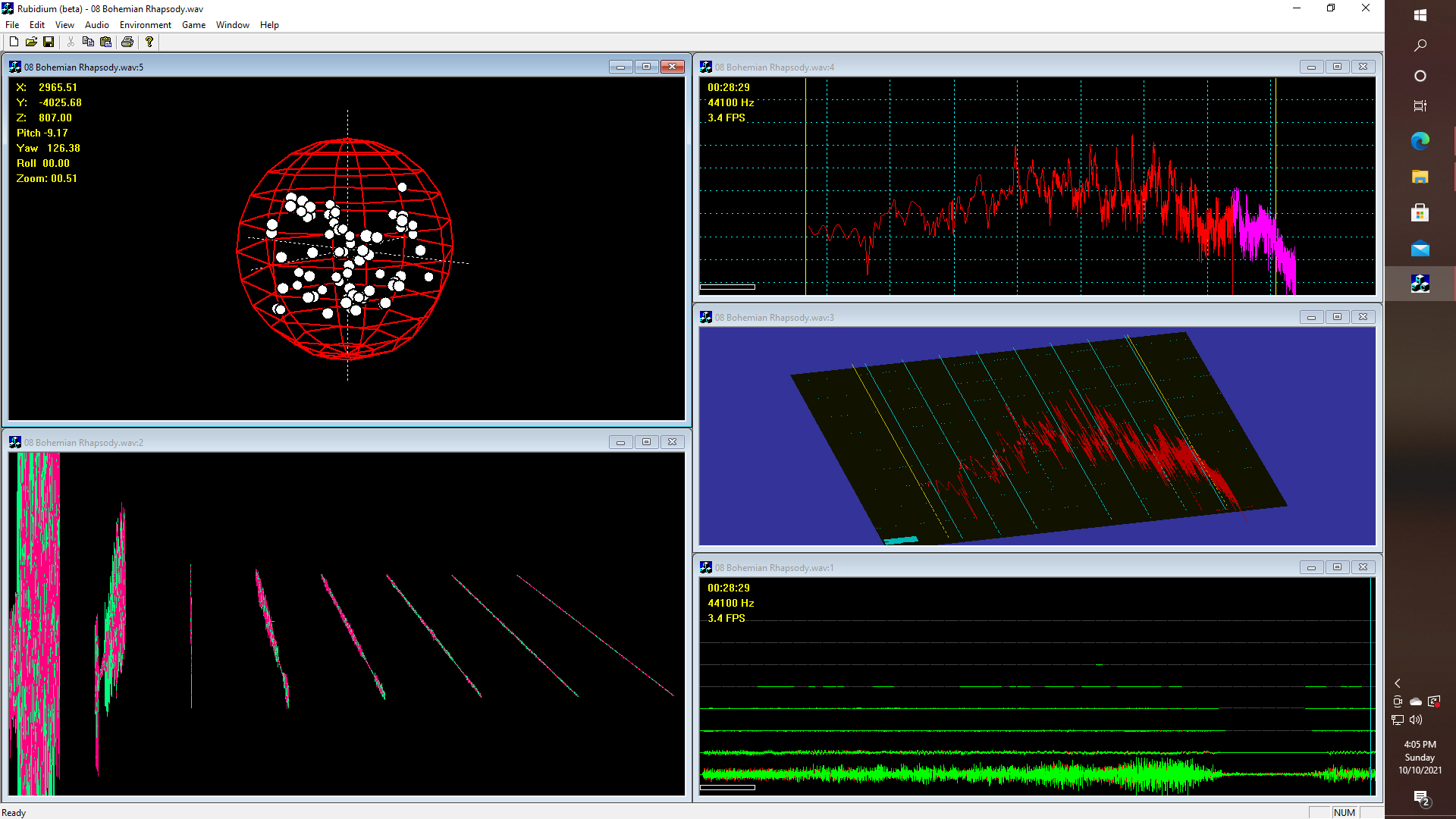

Now, what might this be? Well, think about what happens if you decide to run an oscilloscope or a spectrum analyzer view in an OpenGL stack, even though an oscilloscope doesn't really NEED 3-D. Maybe it does. If we create 128 logarithmically spaced sub-bands, compute the logarithm of the power per sub-band from the waveforms, and then pass that data through a threshold function, then we could display the data in the form of some interesting shape, such as warping note data onto the surface of a sphere, or how about an animated piano-roll-like display?
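The log-power-then-threshold step could look something like the following Python sketch. The function names, the -120 dB floor, and the -60 dB default threshold are all assumptions picked for illustration, and samples are taken to be floats scaled to the range -1.0 to 1.0.

```python
import math

def band_power_db(samples, floor_db=-120.0):
    """Mean power of one sub-band block in dB relative to full scale."""
    if not samples:
        return floor_db
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square <= 0.0:
        return floor_db            # avoid log10(0) on pure silence
    return max(floor_db, 10.0 * math.log10(mean_square))

def active_bands(bands, threshold_db=-60.0):
    """One boolean per sub-band: True where the power exceeds the threshold."""
    return [band_power_db(b) > threshold_db for b in bands]
```

The resulting list of booleans is the kind of data a piano-roll or sphere display would draw from, one column of flags per time step. A real detector would probably want some hysteresis so that notes hovering near the threshold do not flicker on and off.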

Now imagine drawing a simple piano, or perhaps even a player piano where you can see inside. If you know how old-fashioned player pianos work, there is this glass window through which you can see the music on a roll. The piano roll goes over some kind of cylindrical thing with holes, and that is how the system controls a bunch of air-operated actuators that drive the keys! Maybe it would be much easier to try a simple "Star Wars (TM)" type of effect, which is easy to do by just setting the camera position. Warping music onto a sphere is pretty silly, and yet on the other hand, I don't know (haven't checked yet) if "Guitar Hero" is still patented, so for now this demo is STRICTLY FOR RESEARCH AND EDUCATIONAL PURPOSES.

Right now, I need to finish the Propeller Debug terminal, and possibly add an OpenGL stack to the C++ version, which would allow anyone to run "Processing-style" calls on the Propeller while having the graphics rendered on a connected PC or tablet. Eventually, this would allow audio in, sheet music out type applications and open source speech recognition applications to run on the Propeller with no PC required. Still, I think what people are going to want is to be able to plug some kind of hardware device into their DJ rig or their MIDI instruments, or both, and have real-time control over all kinds of new modular fun synth stuff via a phone or tablet, such as over Bluetooth or WiFi.

glgorman
