Various deployment possibilities:

- POE Power Supply Integration

- POE Power Supply with WIFI connection

- 12V DC with Ethernet cable

- 12V DC with WIFI connection

- Solar panels and battery packs with WIFI connection

- In large traditional network deployments, discovering new Edge AI cameras is important. OpenNCC WE supports the ONVIF protocol for camera discovery.

- If you want your camera to connect directly to the cloud, OpenNCC WE reserves the ability to integrate with AWS IoT.

- Deploy your own model remotely, compatible with OpenVINO.

How it works

Power usage

The OpenNCC WE accepts power from an 802.3af POE source. It can also be powered by a 12 V, 1 A DC power adapter.

Power usage for the OpenNCC WE ranges from 1.5 W (standby) to 7 W (maximum consumption with WIFI).
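
For solar and battery deployments, the figures above give a rough energy budget. The sketch below is illustrative only: the 100 Wh battery and the 0.8 usable fraction are hypothetical values chosen for the example, not OpenNCC WE specifications.

```python
# Rough energy budget from the stated 1.5 W (standby) and 7 W (max) figures.
STANDBY_W = 1.5          # standby consumption, from the text above
MAX_W = 7.0              # maximum consumption with WIFI, from the text above
ADAPTER_W = 12.0 * 1.0   # 12 V x 1 A DC adapter = 12 W available
# For comparison, 802.3af guarantees up to 12.95 W at the powered device.


def daily_energy_wh(active_hours: float) -> float:
    """Energy used in 24 h when the camera runs at max power for
    `active_hours` and sits in standby for the rest of the day."""
    if not 0 <= active_hours <= 24:
        raise ValueError("active_hours must be between 0 and 24")
    return MAX_W * active_hours + STANDBY_W * (24 - active_hours)


def runtime_hours(battery_wh: float, usable_fraction: float = 0.8) -> float:
    """Worst-case runtime at max power. `usable_fraction` is an assumed
    derating for depth of discharge and conversion losses."""
    return battery_wh * usable_fraction / MAX_W


print(daily_energy_wh(24))              # 168.0 Wh per day at constant max load
print(daily_energy_wh(8))               # 80.0 Wh: 8 h active, 16 h standby
print(round(runtime_hours(100), 1))     # hours from a hypothetical 100 Wh pack
print(ADAPTER_W - MAX_W)                # 5.0 W headroom on the DC adapter
```

Sizing a panel and battery against the constant-max-load figure gives a conservative estimate; real consumption depends on the model running and on WIFI traffic.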

AI Model inference deployment

System framework and introduction

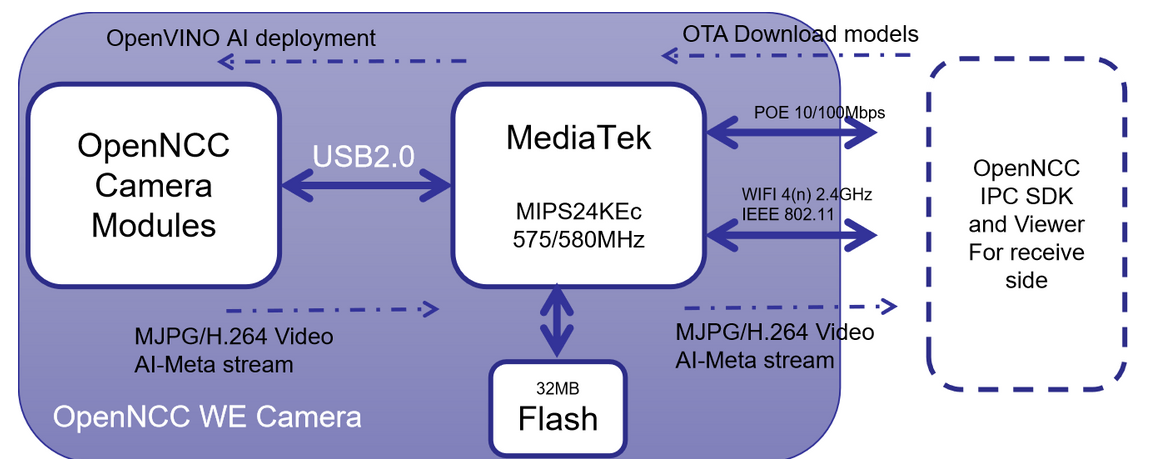

From a software system perspective, OpenNCC WE consists of the following development packages:

1. OpenNCC SDK

A generic OpenNCC development SDK that runs on the MediaTek SoC of the OpenNCC WE. It is responsible for obtaining the video stream from the OpenNCC VPU camera over USB 2.0, controlling the camera, downloading AI models, and obtaining the AI-meta stream.

2. OpenNCC IPC SDK for device side

This part also runs on the MediaTek SoC. Its source code provides the RTSP protocol stack, the ONVIF protocol, the system watchdog, and a proprietary TCP protocol framework.

The OpenNCC team has developed reference designs for streaming media management, the network camera framework, and the AI inference management framework, and shares them as open source.

3. OpenNCC IPC SDK for client-side

This part of the IPC SDK runs on the host that manages and controls the OpenNCC WE and receives video streams and AI results from it. The host can be a PC, server, NVR, or edge box. The default GitHub version targets PC; for other platforms, contact an FAE.

- The client library under ClientSdk

- OpenNCC IPC Viewer is a Qt-based demo tool that already integrates the ClientSdk. It can display video, extract the AI results and draw boxes in the window, and download AI models to the OpenNCC WE.

- System integrators only need to pay attention to the OpenNCC IPC SDK for client-side.

- If you want to develop an application on the MediaTek SoC to integrate with your cloud or a special system, contact your local FAE.

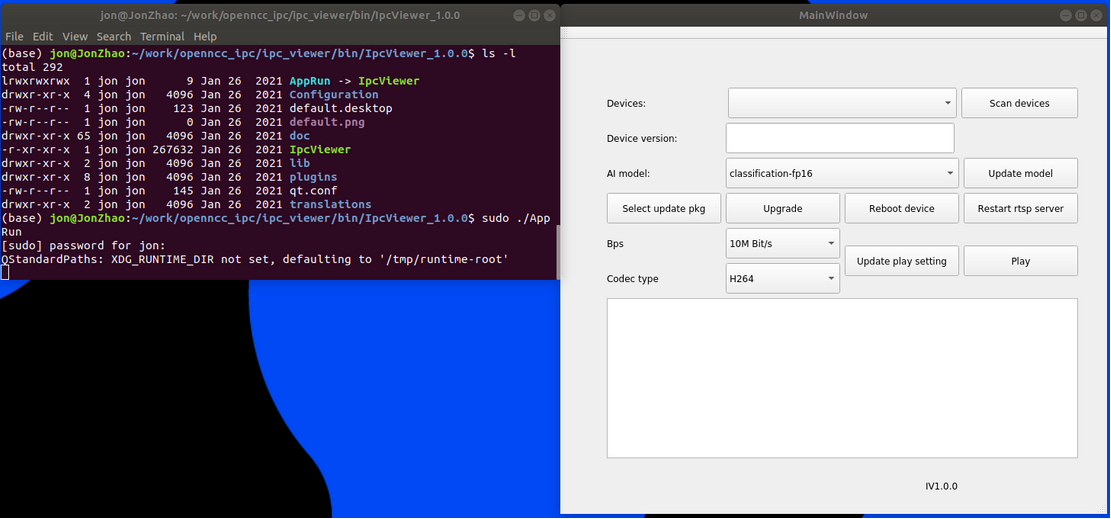

Start with OpenNCC IPC Viewer

1. Clone the repo from GitHub:

$ git clone https://github.com/EyecloudAi/openncc_ipc.git

2. Change to the directory where the OpenNCC IPC SDK is installed, then run:

$ cd ipc_viewer/bin/IpcViewer_1.0.0

$ sudo ./AppRun

The IPC Viewer window opens.

3. Prepare network connection

Connect the camera to a POE switch and power on the OpenNCC WE camera.

4. Connect the PC and the OpenNCC WE camera to the same LAN.

5. Alternatively, connect the PC to the OpenNCC WE camera's WIFI AP 'eyecloud_ipc'.

6. Click 'Scan devices' in the IPC Viewer demo. It lists all cameras on the same LAN that support ONVIF.

7. Select one of the discovered OpenNCC WE cameras, then choose an AI model to download to the camera. Ten models are already integrated and available for selection.

8. Click 'Update model', then click 'Play' to display the stream. The demo application extracts the AI results and draws boxes on the image.
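
The 'Scan devices' step relies on ONVIF discovery, which is built on WS-Discovery: a client multicasts a SOAP Probe to 239.255.255.250 on UDP port 3702 and devices answer with a ProbeMatch. The sketch below shows that generic mechanism using only the Python standard library. It is not the IPC Viewer or ClientSdk implementation, and the function names `build_probe` and `discover` are hypothetical.

```python
import socket
import uuid
from typing import List

# Standard WS-Discovery multicast group and port used by ONVIF devices.
WS_DISCOVERY_ADDR = ("239.255.255.250", 3702)

PROBE_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<e:Envelope xmlns:e="http://www.w3.org/2003/05/soap-envelope"
            xmlns:w="http://schemas.xmlsoap.org/ws/2004/08/addressing"
            xmlns:d="http://schemas.xmlsoap.org/ws/2005/04/discovery"
            xmlns:dn="http://www.onvif.org/ver10/network/wsdl">
  <e:Header>
    <w:MessageID>uuid:{message_id}</w:MessageID>
    <w:To>urn:schemas-xmlsoap-org:ws:2005:04:discovery</w:To>
    <w:Action>http://schemas.xmlsoap.org/ws/2005/04/discovery/Probe</w:Action>
  </e:Header>
  <e:Body>
    <d:Probe>
      <d:Types>dn:NetworkVideoTransmitter</d:Types>
    </d:Probe>
  </e:Body>
</e:Envelope>"""


def build_probe() -> bytes:
    """Return a WS-Discovery Probe asking for ONVIF video transmitters.
    Each probe carries a fresh MessageID."""
    return PROBE_TEMPLATE.format(message_id=uuid.uuid4()).encode("utf-8")


def discover(timeout: float = 3.0) -> List[str]:
    """Send one Probe and return the IP addresses of devices that answered.
    Stops once no reply arrives for `timeout` seconds."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 1)  # stay on the LAN
    sock.settimeout(timeout)
    found: List[str] = []
    try:
        sock.sendto(build_probe(), WS_DISCOVERY_ADDR)
        while True:
            try:
                _data, (ip, _port) = sock.recvfrom(65535)
            except socket.timeout:
                break
            if ip not in found:
                found.append(ip)
    finally:
        sock.close()
    return found
```

Calling `discover()` on a PC attached to the same LAN returns the addresses of devices that replied. Whether the OpenNCC WE answers this exact probe type is an assumption; the IPC Viewer remains the supported way to scan.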

* How to extract the AI results from AI-meta stream
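
The actual AI-meta stream layout is defined by the SDK (see ClientSDK_API.pdf and main.cpp) and is not reproduced here. As an illustration only, the sketch below assumes the model produces SSD-style detections, as many OpenVINO object-detection models do: rows of seven floats `[image_id, label, confidence, x_min, y_min, x_max, y_max]` with coordinates normalized to 0..1. The function name `decode_detections` and the 0.5 threshold are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple

# (label, confidence, x1, y1, x2, y2) with pixel coordinates
Box = Tuple[int, float, int, int, int, int]


def _clamp01(value: float) -> float:
    """Keep a normalized coordinate inside the frame."""
    return min(max(value, 0.0), 1.0)


def decode_detections(rows: Iterable[Sequence[float]],
                      width: int, height: int,
                      min_conf: float = 0.5) -> List[Box]:
    """Convert assumed SSD-style rows into pixel boxes for a
    `width` x `height` frame, dropping low-confidence detections."""
    boxes: List[Box] = []
    for image_id, label, conf, x1, y1, x2, y2 in rows:
        if image_id < 0:
            # Assumed convention: image_id = -1 marks the end of valid rows.
            break
        if conf < min_conf:
            continue
        boxes.append((int(label), float(conf),
                      int(_clamp01(x1) * width), int(_clamp01(y1) * height),
                      int(_clamp01(x2) * width), int(_clamp01(y2) * height)))
    return boxes


sample = [
    [0, 1, 0.9, 0.25, 0.5, 0.75, 1.0],   # kept
    [0, 2, 0.3, 0.1, 0.1, 0.2, 0.2],     # below threshold, skipped
    [-1, 0, 0.0, 0.0, 0.0, 0.0, 0.0],    # end marker
]
print(decode_detections(sample, 640, 480))  # [(1, 0.9, 160, 240, 480, 480)]
```

The resulting pixel boxes can be passed to whatever drawing routine the client application uses.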

Start with OpenNCC IPC library

The ClientSdk directory contains:

├── ClientSDK_API.pdf

├── CliTest

├── inc

├── libClientSdk.a

├── main.cpp

└── Makefile

- Refer to the API documentation (ClientSDK_API.pdf), which describes each function in detail.

- main.cpp is the reference code.

- libClientSdk.a is built for the x86 platform.

Johanna Shi