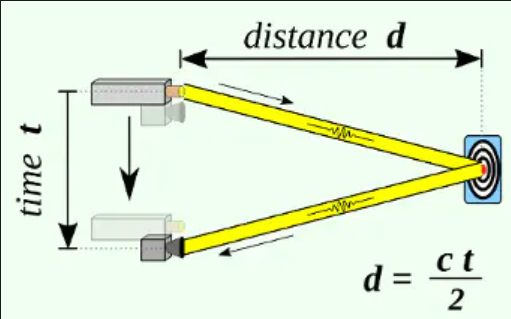

LIDAR uses laser beams to compute distance based on reflection time.
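As a minimal sketch of the time-of-flight idea above: the pulse travels to the obstacle and back, so the distance is half the round-trip time multiplied by the speed of light. The function name and the sample timing are illustrative assumptions, not values from any particular sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from the round-trip time of a laser pulse."""
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Hypothetical reading: a pulse that returns after about 66.7 nanoseconds
# corresponds to an obstacle roughly 10 m away.
print(tof_distance_m(66.7e-9))
```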

Cameras generally have higher resolution than LIDAR, but they have a limited field of view (FoV), and a single camera cannot measure distance directly. While a rotating LIDAR has a 360° field of view, the Pi Cam covers only 62° × 48° (horizontal × vertical). Because we deal with multiple sensors here, we need visual fusion techniques to integrate the sensor outputs and obtain the distance and angle of an obstacle in front of the vehicle. Let's first discuss the theoretical foundation of sensor fusion before the hands-on implementation.
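One practical consequence of the FoV mismatch is that only a slice of the 360° scan overlaps the camera image. The sketch below keeps just those returns. It assumes, purely for illustration, that the scan is a list of (angle in degrees, range in metres) pairs and that 0° is aligned with the camera's optical axis; a real installation would need its own angular offset.

```python
def in_camera_fov(angle_deg: float, hfov_deg: float = 62.0) -> bool:
    """True if a scan angle falls inside the camera's horizontal field of view."""
    # Wrap the angle into [-180, 180) so that 345 deg is treated as -15 deg.
    wrapped = (angle_deg + 180.0) % 360.0 - 180.0
    return abs(wrapped) <= hfov_deg / 2.0


# Hypothetical scan samples: (angle in degrees, range in metres).
scan = [(0.0, 2.5), (20.0, 3.1), (90.0, 1.2), (180.0, 0.8), (345.0, 4.0)]

# Keep only the returns that the camera could also see.
visible = [(angle, rng) for angle, rng in scan if in_camera_fov(angle)]
print(visible)
```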

The Sensor Fusion Idea

Each sensor has its own advantages and disadvantages. RADARs, for instance, are low in resolution but can still measure when there is no clear line of sight. In an autonomous car, a combination of LiDARs, RADARs, and cameras is often used to perceive the environment. This way, we can compensate for the disadvantages of each sensor by combining the advantages of all of them.

- Camera: excellent to understand a scene or to classify objects

- LIDAR: excellent to estimate distances using pulsed laser waves

- RADAR: can measure the speed of obstacles using Doppler Effect

The camera is a 2D sensor from which features like bounding boxes, traffic lights, and lane divisions can be identified. LIDAR is a 3D sensor that outputs a point cloud. The fusion technique finds a correspondence between points detected by the LIDAR and points detected by the camera. To use LiDARs and cameras in unison to build ADAS, the 3D sensor output needs to be fused with the 2D sensor output using the following steps.

- Project the LiDAR point clouds (3D) onto the 2D image

- Do object detection using an algorithm like YOLOv4

- Match the ROI to find the projected LiDAR points of interest
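The last step above can be sketched as follows, assuming the LiDAR points have already been projected to pixel coordinates and that the detector returns a bounding box as (x_min, y_min, x_max, y_max). The function name, the box format, and the use of the median as the distance estimate are illustrative assumptions rather than the project's actual implementation.

```python
import numpy as np


def distance_in_roi(pixels_uv, distances_m, box):
    """Median distance of the projected LiDAR points that fall inside a bounding box.

    pixels_uv:   N x 2 array of projected pixel coordinates (u, v)
    distances_m: N distances, one per projected point
    box:         (x_min, y_min, x_max, y_max) in pixels
    Returns None when no projected point lies inside the box.
    """
    pixels_uv = np.asarray(pixels_uv, dtype=float)
    distances_m = np.asarray(distances_m, dtype=float)
    x_min, y_min, x_max, y_max = box
    u, v = pixels_uv[:, 0], pixels_uv[:, 1]
    inside = (u >= x_min) & (u <= x_max) & (v >= y_min) & (v <= y_max)
    if not inside.any():
        return None
    # The median is less sensitive than the mean to stray background points
    # that happen to land inside the box.
    return float(np.median(distances_m[inside]))


# Hypothetical projected points and one detection box.
pixels = [[100, 100], [110, 120], [300, 50], [105, 115]]
distances = [4.0, 4.2, 12.0, 9.0]
print(distance_in_roi(pixels, distances, (90, 90, 130, 130)))
```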

LIDAR-Camera Low-Level Sensor Fusion Considerations

When a raw camera image is merged with raw data from RADAR or LIDAR, it is called Low-Level Fusion or Early Fusion. In Late Fusion, by contrast, detection is done on each sensor before the fusion. Projecting the 3D LIDAR point cloud onto a 2D image poses several challenges, because the relative orientation and translation between the two sensors must be taken into account when performing the fusion.

- Rotation: The coordinate systems of the LIDAR and the camera can be different. For example, the forward (distance) direction may lie along the x-axis in the LIDAR frame but along the z-axis in the camera frame. We need to rotate the LIDAR point cloud to bring it into the camera's coordinate system, i.e. multiply each LIDAR point by the rotation matrix.

- Translation: In an autonomous car, the LIDAR can be at the center top and the camera on the sides. The positions of the LIDAR and the camera can differ in each installation. Based on the relative sensor position, we need to translate the LIDAR points, which in homogeneous coordinates amounts to multiplying by a translation matrix.

- Stereo Rectification: For stereo camera setup, we need to do Stereo Rectification to make the left and right images co-planar. Thus, we need to multiply with matrix R0 to align everything along the horizontal Epipolar line.

- Intrinsic calibration: Calibration is the step where you tell your camera how to convert a point in the 3D world into a pixel. To account for this, we need to multiply with an intrinsic calibration matrix containing factory calibrated values.
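The rotation and translation steps in the list above can be sketched as a single 4 × 4 homogeneous transform. The axis convention (LIDAR: x forward, y left, z up; camera: x right, y down, z forward) and the mounting offsets are assumptions chosen for illustration; a real setup needs values measured or calibrated for its own sensor mounting.

```python
import numpy as np


def make_rigid_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float)
    T[:3, 3] = np.asarray(t, dtype=float)
    return T


def lidar_to_camera(points_lidar, T):
    """Apply the rigid transform T to an N x 3 array of LIDAR points."""
    pts = np.asarray(points_lidar, dtype=float)
    # Append a 1 to every point so that the translation becomes a matrix product.
    homogeneous = np.hstack([pts, np.ones((pts.shape[0], 1))])
    return (T @ homogeneous.T).T[:, :3]


# Assumed axis swap: camera x = -LIDAR y, camera y = -LIDAR z, camera z = LIDAR x.
R = np.array([[0, -1, 0],
              [0, 0, -1],
              [1, 0, 0]])
# Hypothetical mounting offset in metres, expressed in the camera frame.
t = np.array([0.0, -0.08, -0.05])

T = make_rigid_transform(R, t)
# A point 5 m ahead, 1 m to the left, and 0.2 m above the LIDAR.
print(lidar_to_camera([[5.0, 1.0, 0.2]], T))
```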

To sum up, we need to multiply the LIDAR points by all four matrices to project them onto the camera image.

To project a point X in 3D onto a point Y in 2D, we compute Y = P × R0 × R|t × X, where

- P = Camera Intrinsic Calibration matrix

- R0 = Stereo Rectification matrix

- R|t = Rotation & Translation to go from LIDAR to Camera

- X = Point in 3D space

- Y = Point in 2D Image

Note that we have combined both rigid body transformations, rotation and translation, in one matrix, R|t. Taken together, the 3 matrices P, R0, and R|t account for the extrinsic and intrinsic calibration needed to project LIDAR points onto the camera image. However, the matrix values depend heavily on our custom sensor installation.
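A minimal NumPy sketch of this projection chain is shown below. All matrix values are hypothetical placeholders (a 640 × 480 image with a focal length of 500 pixels, an identity R0 as for a single camera, and an assumed LIDAR-to-camera axis swap); the function name is also an assumption. After the matrix product, the result is divided by its third component to obtain pixel coordinates, and points behind the camera are discarded.

```python
import numpy as np


def project_lidar_to_image(points_lidar, P, R0, Rt):
    """Project N x 3 LIDAR points to pixels using Y = P * R0 * [R|t] * X.

    P:  3x4 camera intrinsic (projection) matrix
    R0: 3x3 stereo rectification matrix (identity for a single camera)
    Rt: 3x4 rotation and translation from the LIDAR frame to the camera frame
    Returns (N' x 2 pixel coordinates, N' depths) for points in front of the camera.
    """
    pts = np.asarray(points_lidar, dtype=float)
    X = np.hstack([pts, np.ones((len(pts), 1))]).T  # 4 x N homogeneous points

    # Pad R0 and R|t to 4x4 so that the matrices can be chained.
    R0_h = np.eye(4)
    R0_h[:3, :3] = R0
    Rt_h = np.eye(4)
    Rt_h[:3, :4] = Rt

    Y = P @ R0_h @ Rt_h @ X  # 3 x N homogeneous image points
    depth = Y[2]
    in_front = depth > 0  # drop points behind the image plane
    uv = (Y[:2, in_front] / depth[in_front]).T
    return uv, depth[in_front]


# Hypothetical calibration values, for illustration only.
P = np.array([[500.0, 0.0, 320.0, 0.0],
              [0.0, 500.0, 240.0, 0.0],
              [0.0, 0.0, 1.0, 0.0]])
R0 = np.eye(3)
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.array([[0.0], [-0.08], [-0.05]])
Rt = np.hstack([R, t])

# One point ahead of the vehicle and one behind it (the latter is dropped).
uv, depth = project_lidar_to_image([[5.0, 1.0, 0.2], [-5.0, 0.0, 0.0]], P, R0, Rt)
print(uv, depth)
```

The returned depths can then be matched against detection boxes to estimate the distance of each detected object.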

This is just one piece of the puzzle. Our aim is to augment any cheap car with an end-to-end collision avoidance system and smart surround view. This would include our choice of sensors, sensor positions, data capture, custom visual fusion, and object detection, coupled with a data analysis node, to do synchronization across sensors in order to trigger driver-assist warnings to avoid danger.

Anand Uthaman
