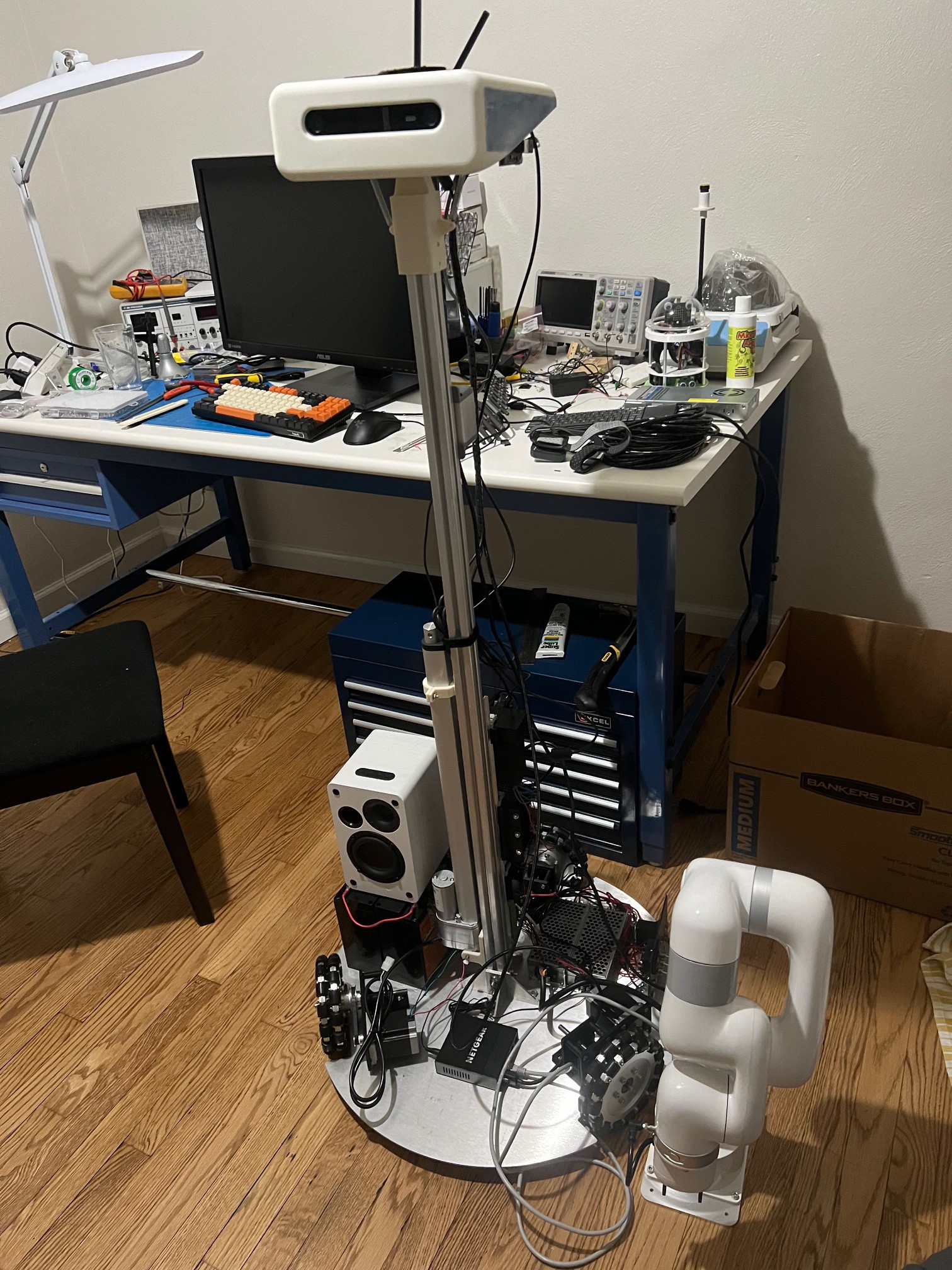

Made some good build progress.

Hardware:

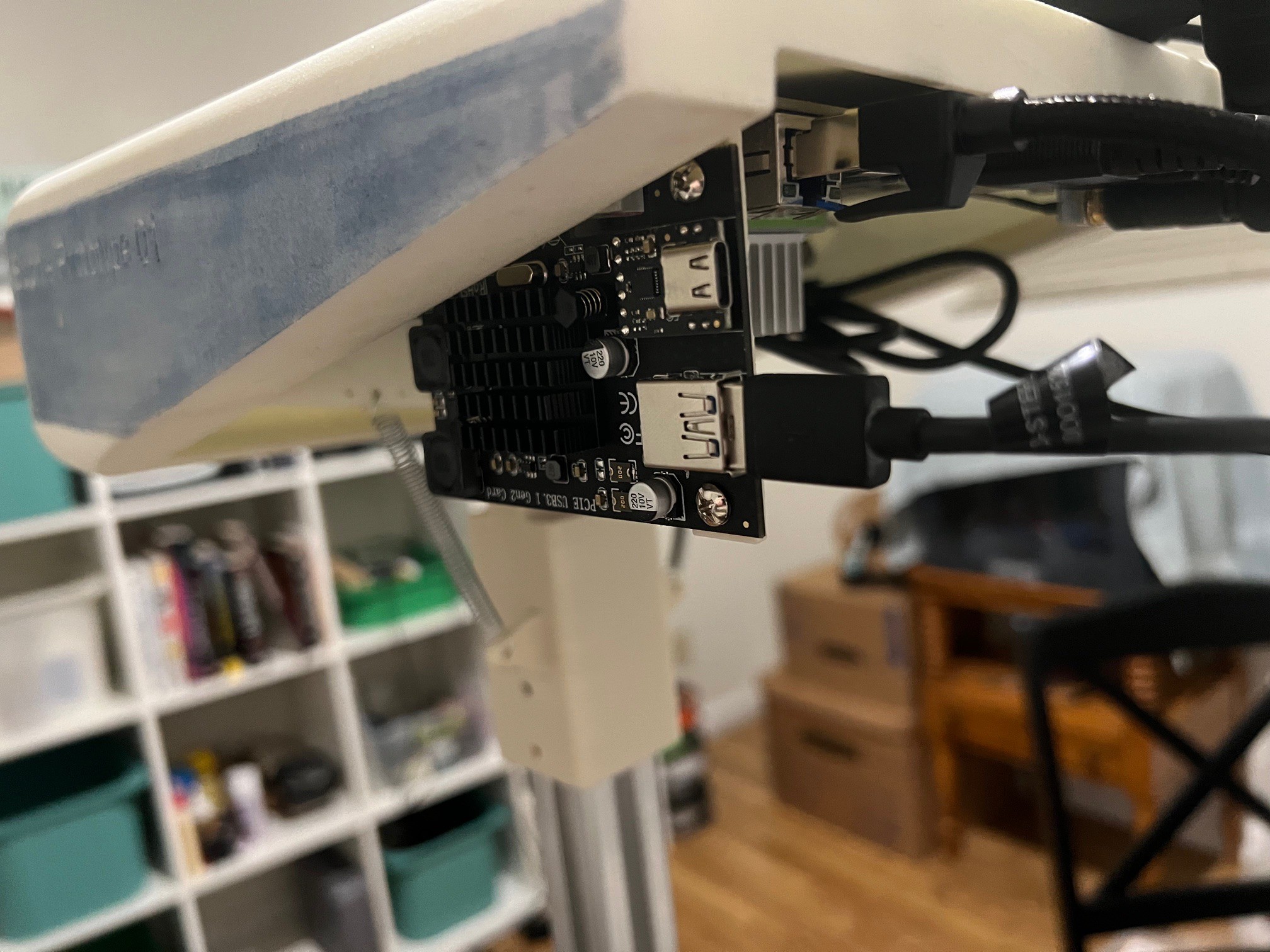

- Added the PCI 3.0 card to get the bandwidth needed for the ZED. Also designed and 3D printed a bracket to hold it in place.

- Added springs to the neck. It now mechanically auto-levels and is compliant in that axis.

- Installed braided Ethernet cable that is used to communicate with the arm and the LIDAR.

- Wire wrapped the three cables that need to run to the head (power, Ethernet, and one USB).

- Mounted the 18-inch stroke linear actuator.

- Added a cheap Netgear 5-port switch to the base and powered it from the 12 V rail.

- Designed and 3D printed a battery holder (not yet installed, waiting on M5 threaded rod).

- Connected the arm to power and communication for the first time. I'm able to control it through the browser interface on the TX2.

- Played with the arm way too much...

Software:

- Figured out that I was resetting the conversation history on each user input. It now remembers the context across multiple interactions.

- Updated the model to GPT-4. I think GPT-3.5 was funnier, oh well, technology marches on I guess. :(

- Added gpt_inference.py to the repository, a fun GPT-4 image script. It takes an image from the ZED, sends it to GPT-4 with vision, and then voices the response with the TTS. It was too much fun wheeling the robot around the house and hearing what it said. This is highly experimental code and uses a preview model (gpt-4-vision-preview). It could be used to evaluate a room when first entering, to know what home care tasks need to be done. It takes a good 20 seconds though. It merits more experimentation.
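For anyone curious what the history fix and the image request roughly look like, here is a minimal sketch. It is not the actual gpt_inference.py: the `Conversation` class, the `image_message` helper, the `max_turns` limit, and the `send` callback are all hypothetical names made up for illustration. The only thing taken from the API is the message layout (a list of role/content dictionaries, with an image passed as a base64 data URL in an `image_url` content part), and that layout is assumed to match the chat endpoint in use.

```python
import base64
from typing import Callable, Dict, List

Message = Dict[str, object]


class Conversation:
    """Keeps one message list alive across turns instead of rebuilding it per input."""

    def __init__(self, system_prompt: str, max_turns: int = 10):
        self.system: Message = {"role": "system", "content": system_prompt}
        self.turns: List[Message] = []   # alternating user / assistant messages
        self.max_turns = max_turns       # hypothetical cap so the prompt does not grow forever

    def messages(self) -> List[Message]:
        # The system prompt always goes first, followed by the remembered turns.
        return [self.system] + self.turns

    def ask(self, user_content: object, send: Callable[[List[Message]], str]) -> str:
        # Append to the existing history; the old bug was starting a fresh list here.
        self.turns.append({"role": "user", "content": user_content})
        reply = send(self.messages())
        self.turns.append({"role": "assistant", "content": reply})
        # Drop the oldest user/assistant pairs once the cap is exceeded.
        excess = len(self.turns) - 2 * self.max_turns
        if excess > 0:
            del self.turns[:excess]
        return reply


def image_message(jpeg_bytes: bytes, prompt: str) -> List[Message]:
    """Builds user content holding a text prompt plus one JPEG as a base64 data URL."""
    b64 = base64.b64encode(jpeg_bytes).decode("ascii")
    return [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{b64}"}},
    ]
```

The `send` callback is where the real API call would go: it receives the full message list and returns the reply text, which can then be handed to the TTS. Keeping it as a plain function means the history logic can be tried out with a fake `send` before spending any API credits.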

Apollo Timbers

