-

Upgrades

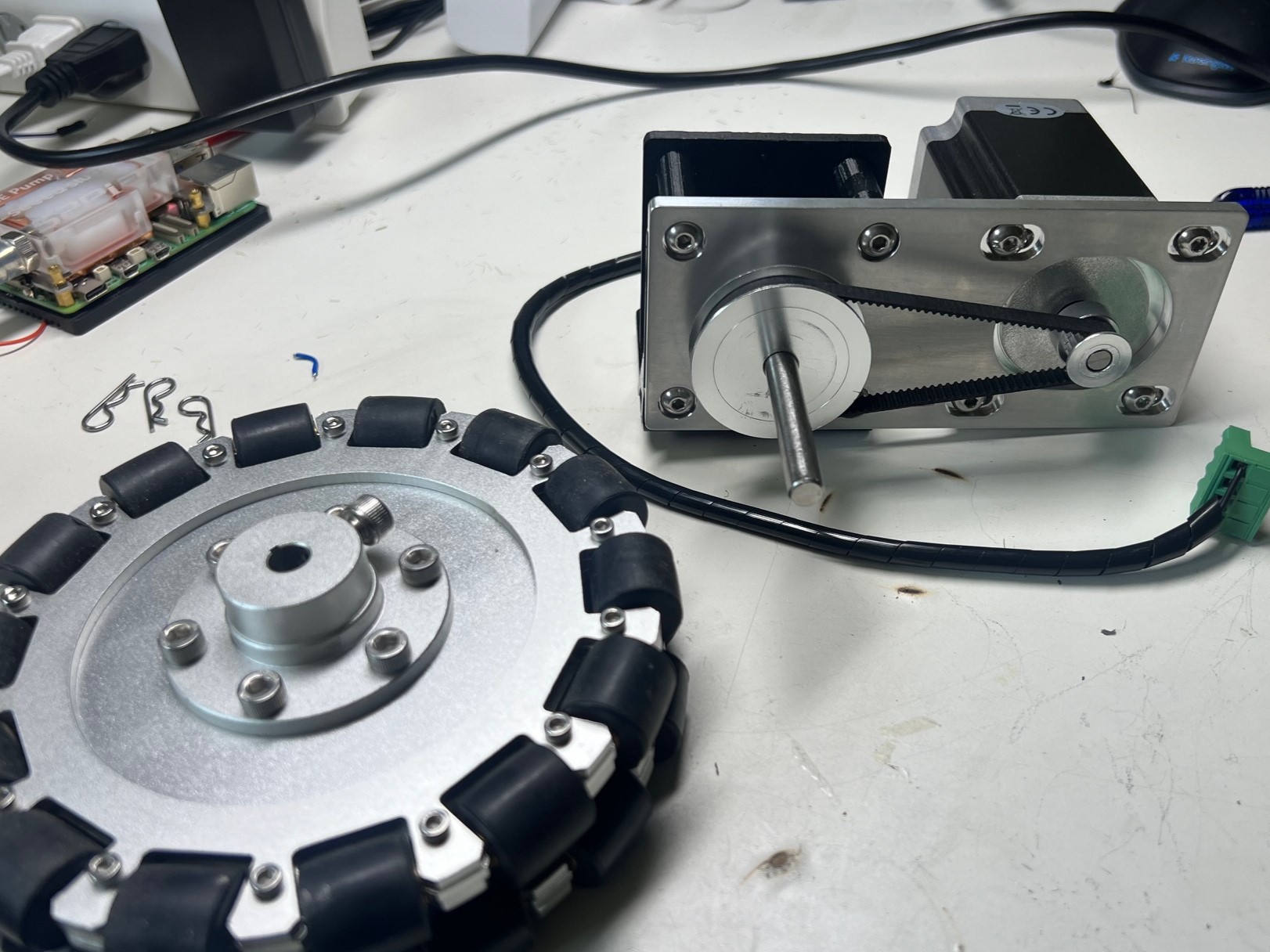

05/19/2024 at 16:08 • 0 comments

Finally got around to swapping out the plastic prototype parts for metal ones. The metal part seems to have hardly any flex to it, so the belt is unlikely to slip. Once I have all three done, this should allow me to get the robot moving, which will be nice. The bearing was not as tight a press fit as I would like, but it is good enough.

Yes, that is a water-cooled Raspberry Pi 5 in the background, and yes, I know it does not need that much cooling (but it is still fun).


-

Refactoring the code

05/15/2024 at 13:49 • 0 comments

GPT-4o came out the other day; apparently the "o" is for omni, and it is the most advanced model so far. I swapped the robot over to that model as it allows the robot to respond much quicker. It also seems to be funnier than GPT-4, which is an important factor to me, as the robot is set up to be very snarky.

I also found that each time the main voice_assist.py program ran and no offline command was recognized, it would bring up ChatGPT as a subprocess. The problem is that it did this every time, so the ChatGPT module could not feed back previous responses. The robot would ask you a question, you would answer yes, and it would not remember that it had even asked, because the program had been restarted and it was a completely new conversation.

I'm attempting to fix this and have it working so-so, though the problem where the robot would hear itself is cropping up again.
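One way to keep context between turns is to hold the conversation in a long-lived object instead of starting a new subprocess per question. The sketch below is only an illustration of that idea, not the code in the repository: the `Conversation` class, the `ask_model` hook, and `fake_model` are hypothetical names, and the real call to the chat API would go where `ask_model` is plugged in.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps chat history in memory so follow-up answers have context."""

    def __init__(self, ask_model: Callable[[List[Message]], str],
                 system_prompt: str, max_turns: int = 10) -> None:
        self._ask_model = ask_model  # hypothetical hook for the real chat backend
        self._system = {"role": "system", "content": system_prompt}
        self._history: List[Message] = []
        self._max_messages = max_turns * 2  # one user + one assistant message per turn

    def say(self, user_text: str) -> str:
        """Send the new user text together with the earlier turns."""
        self._history.append({"role": "user", "content": user_text})
        reply = self._ask_model([self._system] + self._history)
        self._history.append({"role": "assistant", "content": reply})
        # Drop the oldest messages so the prompt does not grow without bound.
        self._history = self._history[-self._max_messages:]
        return reply


def fake_model(messages: List[Message]) -> str:
    """Stand-in backend for testing: reports how many messages it was given."""
    return "seen %d messages" % len(messages)
```

With the stand-in backend, a second call sees the first question and answer as well, which is the behavior that was lost when the module was restarted for every request.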

-

New machined parts!

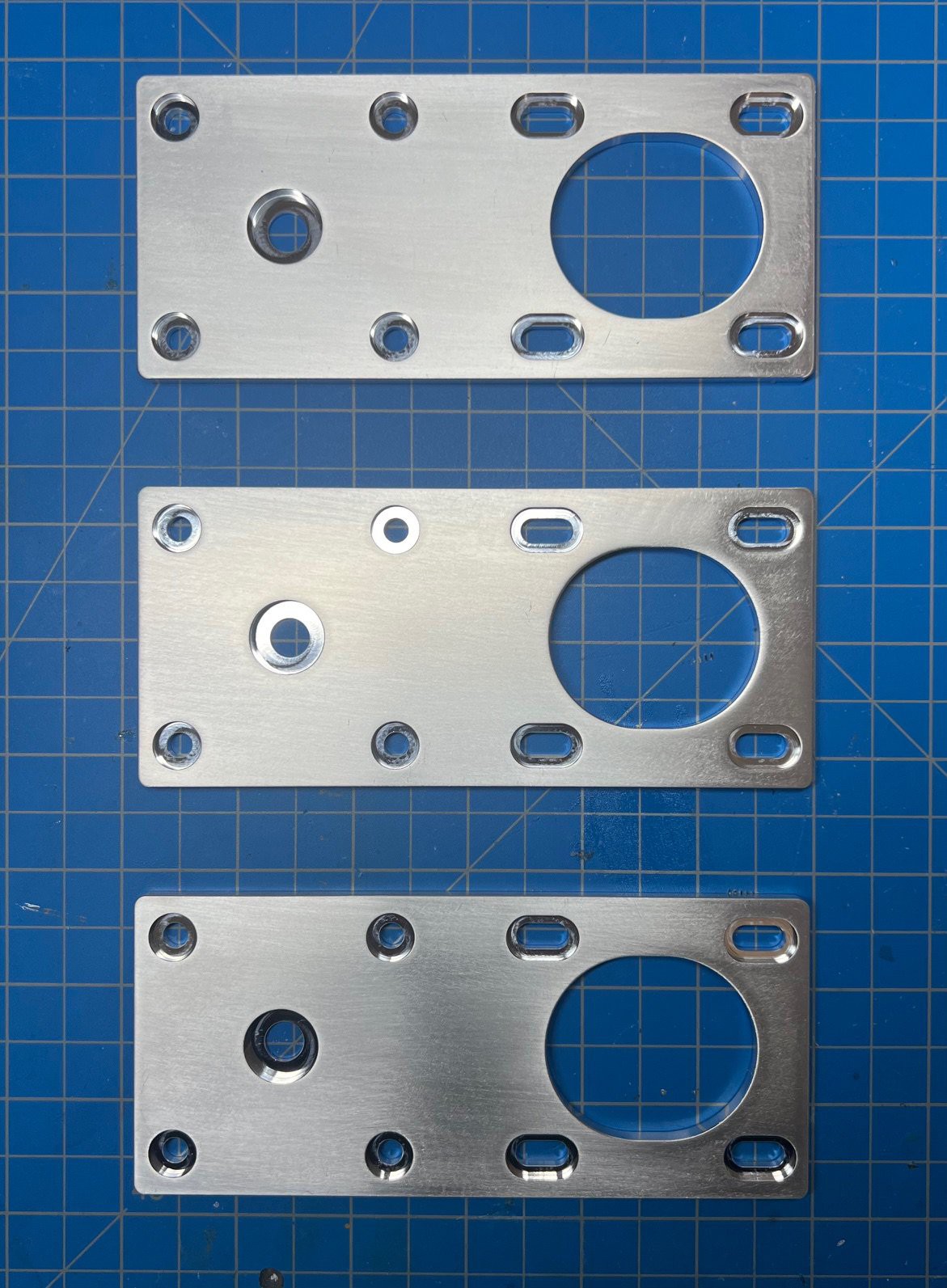

02/11/2024 at 17:59 • 0 comments

New machined parts came in for the motor drive units!

I ordered through https://www.xometry.com/, a very cool place where I have had multiple things made, and their service is top notch (highly recommend them). These parts have a press-fit bearing that may or may not actually press fit, but I will soon find out. Hmmm, speaking of which... I need to find a press; there is probably one at the local makerspace. The parts were machined out of 6061 aluminum and look very shiny. I have yet to install them, as it will be quite the task and I have been a bit lazy on this project, but they will go on soon (if the bearing fits...).


-

Build Progress

01/28/2024 at 23:18 • 0 comments

I was able to get the neck motor adaptor designed and printed. It went through two revisions, as I wanted it to also hold the upper shell in place. The motor assembly for the neck is in place and can tilt up and down quite a bit. I did, however, break a plastic piece from the USB hub's host port, so I will need to get another. :( I was pulling the wire a bit too much. I have a replacement cable that is longer, though the hub will need to be replaced for longevity. It is coming together nicely, however.

The directional mic also has its 3D printed shell in place and looks very much to the design spec.

*Note: I will need to print a plastic cover for the gears so no fingers are lost, as the worm gear motor combination is very strong.

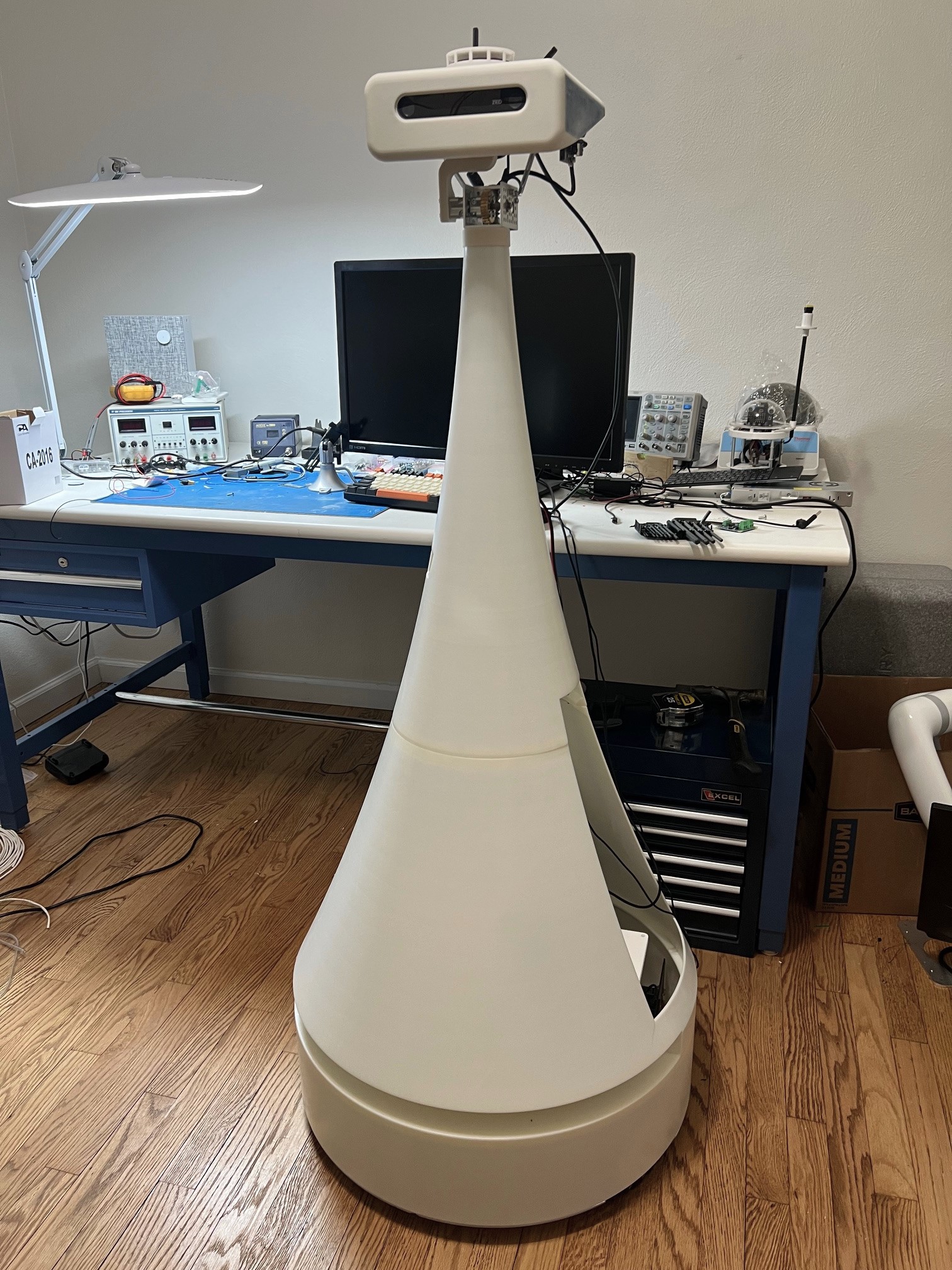

Robot full height (not counting the antenna) is 4' 8" or 1.42 meters.


-

All About Sensors

01/26/2024 at 14:14 • 0 comments

The lidar I ordered came in and it seems to be in working order. I think the bearings are going out, but it should suffice for testing and getting the ROS navigation stack up and running. That is what you get buying used sometimes. The navigation setup also calls for an IMU, so I have a compatible one on the way. It's funny, I'm big into lower-level electronics, but the time saved by just ordering a module is so worth it sometimes. I'm a bit stuck with ROS Melodic at the moment as it is tied to the Jetson TX2. I have, however, been looking at the NVIDIA Jetson Orin Nano Developer Kit as an upgrade once the robot's mechanical work is finished.

List of current sensors:

ZED stereo camera v1 - this can produce a distance point map, though up to this point I have not used the distance feature. The following are the expected uses:

- Have the head tilt down a few degrees during a move command so that it can detect obstacles the lidar does not catch and fall hazards.

- Measure the distance to a wall when playing hide and seek, so it can head to the wall and face it when starting to count (like it is not looking).

- Measure the distance of a book held in front of it.

- Measure the approximate size of an unknown object that the robot will attempt to pick up, and figure out a good grip for it.

- Inference from Chat GPT vision (when entering a large room or unknown location)

- Allows for face and object detection

- Taking pictures
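As a rough illustration of the wall and book distance ideas in the list above, the sketch below takes a depth map that has already been read from the camera and returns the median distance in a small patch at the image center. It is an assumption-heavy example: the depth map is modeled as a plain list of rows in meters, `center_distance` is a hypothetical helper name, and no ZED SDK calls are shown. Invalid pixels are assumed to be reported as NaN or infinity and are skipped.

```python
import math
from statistics import median
from typing import List, Optional


def center_distance(depth_m: List[List[float]], patch: int = 3) -> Optional[float]:
    """Median of the valid depth values in a square patch at the image center."""
    rows, cols = len(depth_m), len(depth_m[0])
    r0, c0 = rows // 2, cols // 2
    half = patch // 2
    values = [
        depth_m[r][c]
        for r in range(max(0, r0 - half), min(rows, r0 + half + 1))
        for c in range(max(0, c0 - half), min(cols, c0 + half + 1))
        # Skip pixels with no usable depth (NaN, infinity, or non-positive).
        if math.isfinite(depth_m[r][c]) and depth_m[r][c] > 0
    ]
    return median(values) if values else None
```

Using the median rather than a single pixel keeps one bad depth reading from throwing off the measurement; the function returns `None` when the whole patch is invalid.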

hfi-a9 IMU - needed for the navigation stack (Gyroscope Accelerometer Magnetometer 9 Axis).

Seeed Studio ReSpeaker v2 - a 4-microphone array with on-board noise cancelling and DOA (direction of arrival)

- Used for offline and online voice assist.

- Will use to "cheat" when playing hide and seek (what is the purpose of a directional mic array if you can't use it to travel in the rough direction of a noise?)

- Turn and face an active talker
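Turning to face a talker comes down to converting the reported direction of arrival into the shortest turn. The sketch below assumes the DOA is available as an angle in degrees from 0 to 359 and that positive values mean turning in the direction of increasing angle; both the sign convention and the `heading_offset_deg` parameter (the mounting offset between the mic array and the robot's front) are assumptions for illustration.

```python
def shortest_turn(doa_deg: float, heading_offset_deg: float = 0.0) -> float:
    """Signed turn in degrees, in the range [-180, 180), needed to face the sound."""
    relative = doa_deg - heading_offset_deg
    # Wrap into [-180, 180) so the robot never turns the long way around.
    return (relative + 180.0) % 360.0 - 180.0
```

For example, a sound at 270 degrees becomes a turn of -90 degrees rather than +270.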

HOKUYO UST-10LX - a powerful scanning lidar again used for navigation and detecting objects, also helps the main computer create a map of the environment.

AM2315C (encased, I2C) - an enclosed temperature/humidity sensor. This will let the robot report the indoor air conditions, so the user can ask things like "what is the current temperature inside?"

Linear actuator potentiometer - used to tell where the arm is at and will allow for the robot to place it at different heights instead of just down and up.

Current and voltage sensors - these will be used to read the main battery pack's voltage and current draw. This will allow an estimate of the time remaining and of when the robot needs to go look for power/charge.
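A time-remaining estimate can be sketched with simple coulomb counting: add up the current drawn over time and divide what is left of the pack by the present draw. Everything specific here is an assumption for illustration, including the `BatteryMonitor` name, the pack capacity, the low-voltage cutoff, and the half-hour reserve; a real pack would need its own numbers.

```python
from typing import Optional


class BatteryMonitor:
    """Tracks used capacity from periodic voltage and current readings."""

    def __init__(self, capacity_ah: float, low_voltage_v: float) -> None:
        self.capacity_ah = capacity_ah      # assumed usable pack capacity
        self.low_voltage_v = low_voltage_v  # assumed cutoff voltage
        self.used_ah = 0.0
        self.voltage_v: Optional[float] = None
        self.current_a = 0.0

    def update(self, voltage_v: float, current_a: float, dt_s: float) -> None:
        """Record one sensor reading taken dt_s seconds after the previous one."""
        self.voltage_v = voltage_v
        self.current_a = current_a
        self.used_ah += current_a * dt_s / 3600.0

    def hours_remaining(self) -> Optional[float]:
        """Remaining run time at the present draw, or None if nothing is drawn."""
        if self.current_a <= 0:
            return None
        left_ah = max(0.0, self.capacity_ah - self.used_ah)
        return left_ah / self.current_a

    def needs_charge(self, reserve_hours: float = 0.5) -> bool:
        """True when the voltage is at the cutoff or little run time is left."""
        if self.voltage_v is not None and self.voltage_v <= self.low_voltage_v:
            return True
        remaining = self.hours_remaining()
        return remaining is not None and remaining <= reserve_hours
```

The estimate assumes the present draw stays constant, so it will jump around when the motors start and stop; averaging the current over a longer window would smooth that out.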

IR break sensor - in the custom gripper there is an IR break sensor to detect objects entering/leaving the grip (old school, but it still helps).

There are possibly more to come, but this should cover a good portion of what the robot is intended to do.


-

That Professional Look...

01/22/2024 at 18:51 • 0 comments

So I knew it would come to this... All my hard work in engineering the robot from the ground up will now be covered by a slick outer shell. I suppose the head is still there to see. I asked my 8 year old daughter if she likes it more with the shell or without. She said with, so what the 8 year old says goes! Modern robotics seems to lean heavily toward a nice clean outer shell; it seems to add to the perception of an advanced piece of technology. I think it looks like a cool rook chess piece.

The rather large two-piece 3D printed shell has arrived. It was printed with the https://www.stratasys.com/en/materials/materials-catalog/stereolithography-materials/somos-evolve-128/ material. I chose a two-piece design that bolts together and is already in a mostly finished state. It weighs ~10 pounds and I have done a quick test fit. Looks like it is good to go. I also sprayed it with UV-resistant clear acrylic so it does not yellow (as fast?).

The proto neck does not fit as it was not the last revision that was designed for the shell. A new 3D printed ASA part will need to be made to mount the neck motor/head.


-

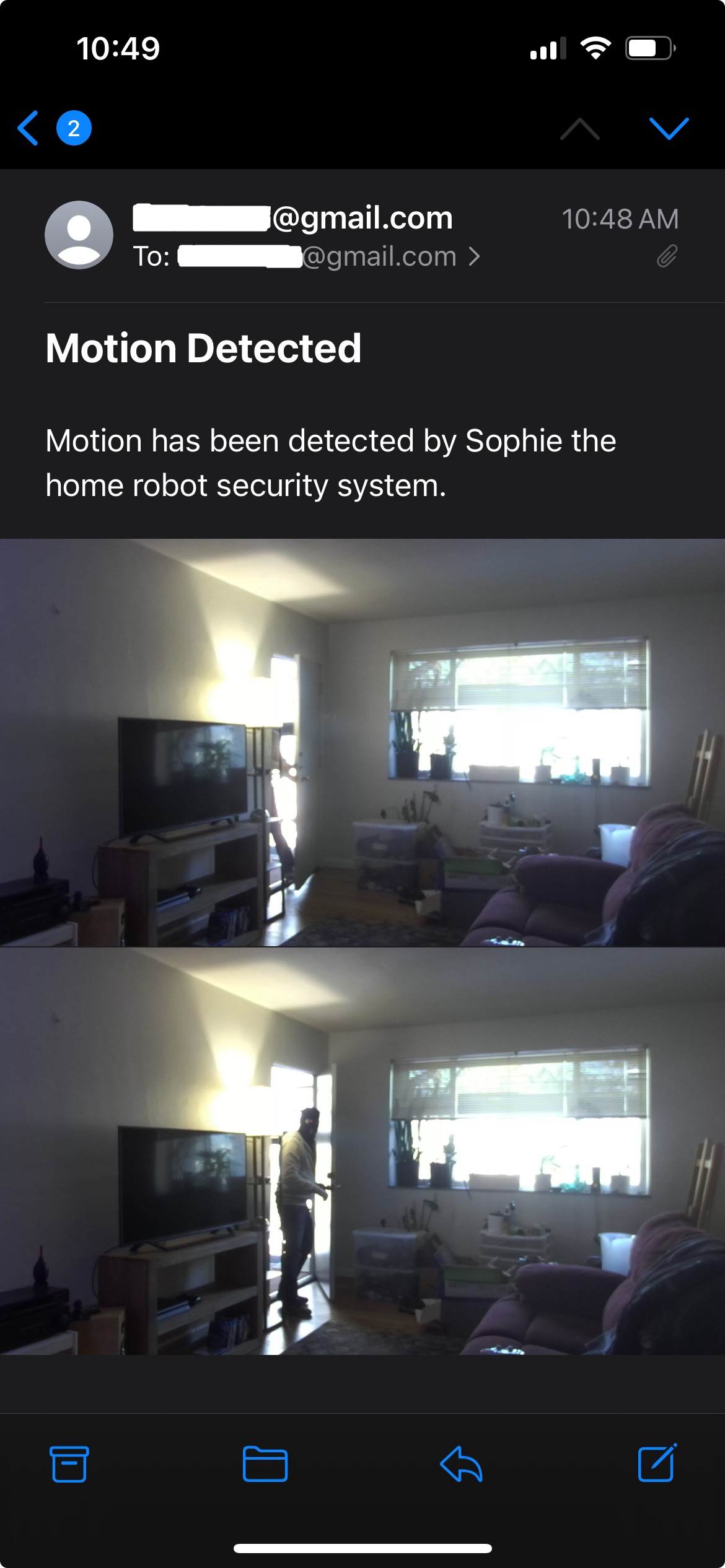

Motion Detected!

01/20/2024 at 16:59 • 0 comments

I did a bit more programming of the security module and now it will send the detected frame and one taken slightly after to your email. I tested all day and found the battery pack only dropped about a volt in this mode, which works for me. Below is an email from a test of the system. The code is available on the GitHub.

*Note: for Gmail accounts specifically, you will need to create an "app" password after signing up for two-factor authentication.
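For reference, sending two frames through Gmail with an app password can be sketched with the Python standard library alone. This is a minimal example under stated assumptions, not the code in the repository: `build_alert` and `send_alert` are hypothetical names, the subject and body text are made up, and the frames are assumed to be JPEG bytes already in memory.

```python
import smtplib
from email.message import EmailMessage
from typing import List, Tuple


def build_alert(sender: str, recipient: str,
                frames: List[Tuple[str, bytes]]) -> EmailMessage:
    """Build an email with each (filename, jpeg_bytes) frame attached."""
    msg = EmailMessage()
    msg["Subject"] = "Motion detected"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("Motion was detected. The detection frame and a "
                    "follow-up frame are attached.")
    for filename, jpeg_bytes in frames:
        msg.add_attachment(jpeg_bytes, maintype="image",
                           subtype="jpeg", filename=filename)
    return msg


def send_alert(msg: EmailMessage, user: str, app_password: str) -> None:
    """Send through Gmail over SSL; the app password replaces the account password."""
    with smtplib.SMTP_SSL("smtp.gmail.com", 465) as server:
        server.login(user, app_password)
        server.send_message(msg)
```

Keeping message building separate from sending makes it possible to check the attachments without a network connection or real credentials.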

![]()

-

Security Robot Time

01/07/2024 at 17:49 • 0 comments

While I still wait for the drive unit plates and 3D parts, I wanted to continue development in some way. I asked GPT-4 what it thought of the project. It stated that the robot did not have feature parity with commercially available home robots. I was like, well, there can't be too many around like this. lol

Anyhoo, it said some had "security features" and I was like, ok, I can do that. So, not to be one-upped by measly commercial bots, I spent a good half day on security_module.py, which is written as a class. It is still a work in progress, but it works nonetheless.

The system is capable of detecting motion during a pre-set window of time (say, when you're away at work) and is planned to be equipped with AI audio analysis for detecting sounds like breaking glass, enhancing its security capabilities.
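The pre-set time window can be checked with a few lines of standard-library Python. This is a sketch of the idea, not necessarily how security_module.py does it; `in_window` is a hypothetical helper, and it also handles a window that crosses midnight.

```python
from datetime import time


def in_window(now: time, start: time, end: time) -> bool:
    """True when `now` falls inside [start, end), including overnight windows."""
    if start <= end:
        return start <= now < end
    # The window wraps past midnight, e.g. 22:00 to 06:00.
    return now >= start or now < end
```

Calling `in_window(datetime.now().time(), time(8, 0), time(17, 0))` would arm the system during a typical work day. Note that equal start and end times are treated here as an empty window.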

https://github.com/thedocdoc/Sophie/blob/main/security_module.py

Features:

- Utilization of ZED stereo camera for high-resolution imaging.

- Motion detection using background subtraction and contour analysis with OpenCV.

- Planned integration of audio analysis for breaking glass detection.

- Text-to-speech functionality for audible alerts in case of security breaches.

- Time-based activation and deactivation of the security system.

- Logging system for tracking events, errors, and system status.

- Threaded implementation for concurrent processing of camera feed and monitoring tasks.

- Takes two pictures when a security breach is detected and saves them to a folder with a date/time stamp

Change log:

- First revision of code that is in a working state

- The mic mute functions may not be needed as voice_assist.py will be off during the security monitoring

- Fixed: it did a single false detection at the beginning that I was still trying to resolve

- There is a placeholder for a glass breaking monitor; this will need to be a trained AI model for accuracy and to reduce false positives

- This module is in active development. Future versions will include refined motion detection algorithms, real-time audio analysis for additional security features, and improved error handling and logging for robustness and reliability

- Has an issue where the ZED hangs and the module has trouble connecting to it; this will be a future fix

- Takes two pictures when a security breach is detected and saves to a folder, of the frame the detection was made and one a second later

- False initial detection solved

-

New video!

01/06/2024 at 19:37 • 0 comments

I got around to uploading a current video of the much improved offline/online voice assistance. The arm is not in its final resting place; it is just clamped down for testing. The robot is no longer hearing itself (huge milestone), though the microphone does randomly hear a "huh" or "ah" in the background noise (I may end up reducing the sensitivity).

So, because of these improvements I can leave the voice assistance on for much longer, possibly no need to close it/restart. Need to have it run when the robot first powers on however, as I'm still manually running the program from the terminal.

Major refactoring of the code, including:

voice_assist.py

- Made the time function sound more natural and state am/pm

- Refactored the code, slimmed down main and added a dictionary for the offline phrases

- Lots of additional descriptions made for easier understanding

- Turned weather function into a class, it was getting quite large
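A dictionary of offline phrases, as mentioned in the list above, might look something like the following. This is a guess at the general shape rather than the actual voice_assist.py code: the phrases, the `handle_offline` function, and the wording produced by `spoken_time` are all made up for illustration.

```python
from datetime import datetime
from typing import Callable, Dict, Optional


def spoken_time(now: datetime) -> str:
    """Phrase the time the way a person would say it, including AM/PM."""
    hour = now.hour % 12 or 12
    suffix = "AM" if now.hour < 12 else "PM"
    if now.minute == 0:
        return "It is %d o'clock %s" % (hour, suffix)
    if now.minute < 10:
        return "It is %d oh %d %s" % (hour, now.minute, suffix)
    return "It is %d %d %s" % (hour, now.minute, suffix)


# Each recognized phrase maps to a function that returns the spoken reply.
OFFLINE_PHRASES: Dict[str, Callable[[], str]] = {
    "what time is it": lambda: spoken_time(datetime.now()),
    "what is your name": lambda: "My name is Sophie",
}


def handle_offline(heard: str) -> Optional[str]:
    """Return the offline reply, or None so the caller can fall back to online."""
    key = heard.lower().strip(" ?.!")
    handler = OFFLINE_PHRASES.get(key)
    return handler() if handler else None
```

Adding a new offline command then only means adding one dictionary entry, which keeps the main loop short.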

Created a whole new weather class as that function was getting very long

weather_service.py
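For orientation, here is a minimal sketch of a weather helper along the lines described in this section. It is not the repository's class: the function names, the wording of the report, and the attire thresholds (50 °F / 10 °C) are assumptions. It uses the OpenWeather current-weather endpoint and reads the `main.temp`, `main.feels_like`, `main.humidity`, and `weather[0].description` fields from the response.

```python
import json
from typing import Any, Dict
from urllib.parse import urlencode
from urllib.request import urlopen

API_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_url(city: str, api_key: str, units: str = "imperial") -> str:
    """Build the request URL from the city name, units, and API key."""
    query = urlencode({"q": city, "units": units, "appid": api_key})
    return API_URL + "?" + query


def fetch_weather(city: str, api_key: str, units: str = "imperial") -> Dict[str, Any]:
    """Send the request and decode the JSON response (needs network access)."""
    with urlopen(build_url(city, api_key, units), timeout=10) as response:
        return json.load(response)


def attire_advice(temp: float, description: str, units: str = "imperial") -> str:
    """Very rough clothing suggestion; the thresholds are illustrative only."""
    cold_limit = 50 if units == "imperial" else 10
    if "rain" in description.lower():
        return "You may want a raincoat."
    if temp < cold_limit:
        return "Dress warmly."
    return "No special clothing needed."


def format_report(data: Dict[str, Any], units: str = "imperial") -> str:
    """Turn the parsed JSON into one sentence suitable for text-to-speech."""
    main = data["main"]
    description = data["weather"][0]["description"]
    unit = "Fahrenheit" if units == "imperial" else "Celsius"
    return ("It is %.0f degrees %s, feels like %.0f, with %d percent humidity "
            "and %s. %s" % (main["temp"], unit, main["feels_like"],
                            main["humidity"], description,
                            attire_advice(main["temp"], description, units)))
```

A typical call would be `format_report(fetch_weather("Austin", api_key))`, with the result handed to the text-to-speech engine.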

This class retrieves the current weather report for a specified city using the OpenWeather API. It constructs and sends a request to the API with the city name, desired units (imperial or metric), and the API key. After receiving the response, the function parses the JSON data to extract key weather details such as temperature, humidity, and weather description.

Based on the weather data, it formulates a user-friendly weather report which includes temperature, feels-like temperature, humidity, and a general description of the weather conditions. Additionally, the function provides attire recommendations based on the current weather (e.g., suggesting a raincoat for rainy weather, or warm clothing for cold temperatures). This function enhances the robot's interactive capabilities by providing useful and practical information to the user in a conversational manner.

reading_module.py

It can now read fine print from a book held about 12" away. It does this by scaling the image up after boxing text.
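The notes below mention arranging the text boxes left to right and top to bottom so the words are read in order. One simple way to do that, shown here as a sketch rather than the module's actual code, is to group boxes into lines by their top edge and then sort each line by x. The `(x, y, width, height)` box layout, the `reading_order` name, and the `line_tolerance` value are assumptions.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height


def reading_order(boxes: List[Box], line_tolerance: float = 0.5) -> List[Box]:
    """Sort boxes top to bottom, then left to right within each text line."""
    lines: List[List[Box]] = []
    for box in sorted(boxes, key=lambda b: b[1]):
        if lines:
            first = lines[-1][0]
            # Same line when the tops differ by less than a fraction of the height.
            if abs(box[1] - first[1]) <= line_tolerance * first[3]:
                lines[-1].append(box)
                continue
        lines.append([box])
    return [b for line in lines for b in sorted(line, key=lambda b: b[0])]
```

Sorting purely by `(y, x)` tends to scramble words on the same line when their boxes differ by a pixel or two in height, which is why the boxes are grouped into lines first.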

- Changed to Python text-to-speech.

- Heavily modified the pipeline to scale the image and box the text, which helps OCR be faster and more accurate. It also arranges the bounding boxes from left to right and top to bottom so that it reads in the correct order.

- You need to hold a book/text ~12 inches from the camera, this may be improved by upping the scale factor but it is working well at that distance for now.

- More optimization is needed in the pipeline...

-

Sophie can now read!

01/05/2024 at 13:42 • 0 comments

I had a reading module I made long ago; it worked, but only with hard-coded image paths. Now that the ZED is all set up, I have the reading module start zed_snap.py and then get the path of the image it took. Pytesseract, an optical character recognition (OCR) tool, then attempts to read what is in the image. It does indeed work: the robot can now read things put in front of it. It will need some fine tuning, like maybe the ability to scale the image up, stabilize it, and crop to just the text/book page to remove any background noise. The goal is that a kid can hold up a book and it can read to them...

*Forgot to mention: it has no understanding of what it is reading, it is just reading what it can find in the image... but still!

The reading module and zed_snap Python programs can be found here: https://github.com/thedocdoc/Sophie/tree/main

Positives:

- Did I mention that it works!

Negatives:

- The text needs to be larger or the book held closer to the ZED camera

- It likes black text on a white background more

- It gets a bit confused about background stuff, or images in a book

Home Robot Named Sophie

A next generation home robot with an omnidirectional drive unit and a 6-axis collaborative robot arm

Apollo Timbers
