The 1 thing making the tracker basically worthless has been its preference for humans instead of lions in crowds. Pondered chroma keying some more. No matter what variation of chroma keying is used, the white balance of the cheap camera is going to change, which shifts whatever color was keyed. The Raspberry Pi 5 has enough horsepower to ingest a lot more pixels from multiple cameras, which would allow using better cameras if only lions had the money.

https://www.amazon.com/ELP-180degree-Fisheye-Angle-Webcam/dp/B01N03L68J

https://www.amazon.com/dp/B00LQ854AG/

This is a contender that didn't exist 6 years ago.

Maybe it would be good enough to pick the hit box with the closest color histogram rather than a matching histogram. The general idea is to compute a goal histogram from the largest hit box, when it's not tracking. Then pick the hit box with the nearest histogram when it's tracking.
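A minimal sketch of the nearest-histogram idea, just to pin down what "nearest" could mean. Everything here is an assumption: the pixels are plain (r, g, b) tuples, the 4 bins per channel, the L1 distance & the function names are all made up for illustration & aren't from the tracker.

```python
# Hypothetical sketch: each hit box is a list of (r, g, b) tuples, 0-255 per channel.
BINS = 4  # bins per channel, an assumed value


def color_histogram(pixels, bins=BINS):
    """Return a normalized histogram with bins**3 entries for the given RGB pixels."""
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        idx = ((r * bins // 256) * bins + (g * bins // 256)) * bins + (b * bins // 256)
        hist[idx] += 1.0
    if pixels:
        hist = [h / len(pixels) for h in hist]
    return hist


def histogram_distance(a, b):
    """L1 distance between 2 normalized histograms: 0 is identical, 2 is disjoint."""
    return sum(abs(x - y) for x, y in zip(a, b))


def nearest_box(goal_hist, boxes):
    """Return the index of the hit box whose histogram is closest to the goal."""
    if not boxes:
        return None
    dists = [histogram_distance(goal_hist, color_histogram(p)) for p in boxes]
    return min(range(len(boxes)), key=dists.__getitem__)


# Goal captured from the largest hit box while not tracking (mostly red here).
goal = color_histogram([(200, 30, 30)] * 10)
candidates = [[(20, 20, 200)] * 5, [(210, 40, 20)] * 8]
best = nearest_box(goal, candidates)
```

Because it picks the minimum distance instead of requiring a match, it always returns some box, even when nothing in the frame is close.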

It seems the most viable system is to require the user to stand in a box on the GUI & press a button to capture the color in the box. Then it'll pick the hit box with the most & nearest occurrence of that color. The trick is baking most & nearest into a score. A histogram based on distances in a color cube might work. It would have a peak & area under the peak so it still somehow has to combine the distance of the peak & the area under the peak into a single score. Maybe the 2 dimensions have to be weighted differently.
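One possible way to bake most & nearest into a single number, sketched under heavy assumptions. It histograms each pixel's Euclidean distance from the captured color in the RGB cube, finds the peak, takes the area around the peak as a fraction of the box & divides by a weighted peak distance. The bin count, the ±1 bin window, `dist_weight` & the formula itself are all guesses, not a tested scoring function.

```python
import math

MAX_DIST = math.sqrt(3 * 255 ** 2)  # longest diagonal of the RGB cube


def distance_histogram(pixels, target, bins=16):
    """Histogram of each pixel's Euclidean distance from the target color."""
    hist = [0] * bins
    for p in pixels:
        d = math.dist(p, target)
        hist[min(int(d / MAX_DIST * bins), bins - 1)] += 1
    return hist


def box_score(pixels, target, bins=16, dist_weight=4.0):
    """Combine area under the peak & distance of the peak into 1 score.

    dist_weight is an assumed knob for weighting the 2 dimensions differently.
    """
    if not pixels:
        return 0.0
    hist = distance_histogram(pixels, target, bins)
    peak = max(range(bins), key=hist.__getitem__)
    lo, hi = max(peak - 1, 0), min(peak + 1, bins - 1)
    area = sum(hist[lo:hi + 1]) / len(pixels)   # "most": fraction of pixels near the peak
    peak_dist = peak / (bins - 1)               # "nearest": 0 is on target, 1 is farthest
    return area / (1.0 + dist_weight * peak_dist)


target = (255, 0, 0)
box_a = [(250, 5, 5)] * 90 + [(0, 0, 255)] * 10   # mostly the captured color
box_b = [(0, 0, 255)] * 100                       # uniform, but the wrong color
score_a = box_score(box_a, target)
score_b = box_score(box_b, target)
```

A box full of 1 wrong color has a big area under its peak, so the peak distance has to be weighted heavily enough to beat it. That's what `dist_weight` is for.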

Doesn't seem possible without fixed white balance. We could assume the white balance is constant if it's all in daylight. That would reduce it to a threshold color & the hit box with the largest percentage of pixels in the threshold. It still won't work if anyone else has the same color shirt.
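The fixed white balance version is simple enough to sketch. This assumes a per-channel tolerance around the captured color counts as being in the threshold; the tolerance value & the function names are made up.

```python
def fraction_in_threshold(pixels, target, tolerance=40):
    """Fraction of pixels whose channels are all within tolerance of the target color."""
    if not pixels:
        return 0.0
    hits = sum(
        1 for p in pixels
        if all(abs(c - t) <= tolerance for c, t in zip(p, target))
    )
    return hits / len(pixels)


def pick_box(boxes, target, tolerance=40):
    """Return the index of the hit box with the largest percentage of in-threshold pixels."""
    if not boxes:
        return None
    fractions = [fraction_in_threshold(p, target, tolerance) for p in boxes]
    return max(range(len(boxes)), key=fractions.__getitem__)


shirt = (30, 160, 60)  # hypothetical captured shirt color
boxes = [
    [(200, 200, 200)] * 50,                     # someone in a gray shirt
    [(35, 150, 70)] * 30 + [(90, 60, 40)] * 20  # 60% of pixels near the shirt color
]
chosen = pick_box(boxes, shirt)
```

As noted above, 2 boxes with the same shirt color score about the same, so this can't separate them.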

Always had a problem with selecting a color in the phone interface. The problems have kept this in the idea phase.

The idea is to make a pipeline where the histograms for 1 frame are computed while the next efficientdet pass is performed. It would add some latency but not reduce the frame rate.
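A rough sketch of that kind of 2 stage pipeline using a thread per stage & a 1 deep queue between them. `detect` & `score` are stand-ins for the efficientdet pass & the histogram step; nothing here is the tracker's real code & how much actually overlaps depends on what the real stages do.

```python
import queue
import threading


def run_pipeline(frames, detect, score):
    """Run detect() & score() in separate threads joined by a queue.

    While score() works on frame N, detect() can already work on frame N+1.
    """
    handoff = queue.Queue(maxsize=1)  # at most 1 finished detection waiting
    results = []

    def detect_stage():
        for frame in frames:
            handoff.put((frame, detect(frame)))
        handoff.put(None)  # sentinel: no more frames

    def score_stage():
        while True:
            item = handoff.get()
            if item is None:
                break
            frame, boxes = item
            results.append(score(frame, boxes))

    threads = [
        threading.Thread(target=detect_stage),
        threading.Thread(target=score_stage),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


# Toy stand-ins: "frames" are integers, "boxes" are lists derived from them.
out = run_pipeline([1, 2, 3], lambda f: [f, f * 2], lambda f, boxes: sum(boxes))
```

The 1 deep queue keeps the detector from running far ahead, so the scoring stage adds a bounded amount of latency.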

--------------------------------------------------------------------------------------------------------

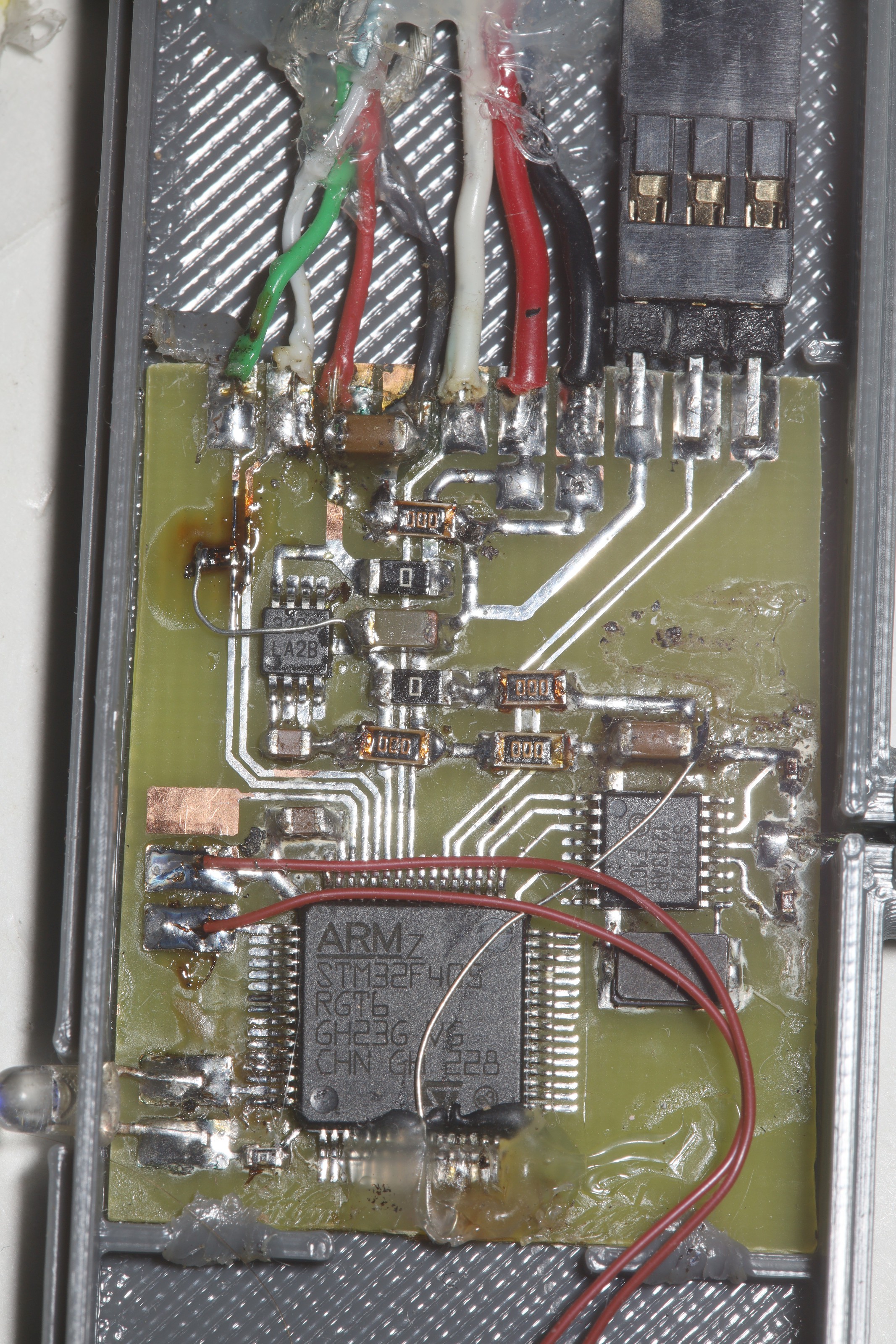

USB on the servo driver was dead on arrival, just a month after it worked.

It seems the STM32 didn't react well to being plugged into a USB charger over the years. The D pins were shorted in the charger, a standard practice, but shorting the D pins eventually burned out whatever was pushing D+ to 3.3V in the STM32. The D+ side only went to 0.9V now & it wouldn't enumerate. Bodging in a 1k pullup got it up to 1.6V. That was just enough to get it to enumerate & control the servo from the console. That burned out biasing supply really doesn't want to go to 3.3V anymore.

The best idea is just not to plug STM32s into normal chargers. Just use a purpose built buck converter with floating D pins & whack in a 1k pullup just in case.

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------

efficientdet-lite1 showed promise. To resurrect how to train efficientdet-lite1, the journey begins by labeling with /gpu/root/nn/yolov5/label.py

Lite1 is 384x384 so the labeling should be done on at least 384x384 images. The training image size is set by the imgsz variable. The W & H are normalized to the aspect ratios of the source images.

After running label.py, you need to convert the annotations to an XML format with /gpu/root/nn/tflow/coco_to_tflow.py.

python3 coco_to_tflow.py ../train_lion/instances_train.json ../train_lion/

python3 coco_to_tflow.py ../val_lion/instances_val.json ../val_lion/

The piss poor memory management in model_maker.py & size of efficientdet_lite1 mean it can only do 1 batch size of validation images. It seemed to handle more lite0 validation images.

It previously copied all the .jpg's because there was once an attempt to stretch them. Now it just writes the XML files to the source directory.

It had another option to specify max_objects, but label.py already does this.
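For reference, a minimal sketch of what a COCO to XML conversion can look like. It assumes the XML is Pascal VOC style with 1 file per image, which these notes don't actually confirm, & it is not the contents of coco_to_tflow.py. The function name & the sample data are made up.

```python
import xml.etree.ElementTree as ET


def coco_to_voc(coco):
    """Return {file_name: xml_string} for a COCO-style dict (assumed VOC-like output)."""
    names = {c["id"]: c["name"] for c in coco.get("categories", [])}
    by_image = {}
    for ann in coco.get("annotations", []):
        by_image.setdefault(ann["image_id"], []).append(ann)

    out = {}
    for img in coco.get("images", []):
        root = ET.Element("annotation")
        ET.SubElement(root, "filename").text = img["file_name"]
        size = ET.SubElement(root, "size")
        ET.SubElement(size, "width").text = str(img["width"])
        ET.SubElement(size, "height").text = str(img["height"])
        ET.SubElement(size, "depth").text = "3"
        for ann in by_image.get(img["id"], []):
            x, y, w, h = ann["bbox"]  # COCO boxes are [x, y, width, height]
            obj = ET.SubElement(root, "object")
            ET.SubElement(obj, "name").text = names[ann["category_id"]]
            box = ET.SubElement(obj, "bndbox")
            corners = (("xmin", x), ("ymin", y), ("xmax", x + w), ("ymax", y + h))
            for tag, val in corners:
                ET.SubElement(box, tag).text = str(int(round(val)))
        out[img["file_name"]] = ET.tostring(root, encoding="unicode")
    return out


# Made-up sample: 1 image with 1 lion box.
sample = {
    "images": [{"id": 1, "file_name": "lion0.jpg", "width": 384, "height": 384}],
    "categories": [{"id": 1, "name": "lion"}],
    "annotations": [{"image_id": 1, "category_id": 1, "bbox": [10, 20, 30, 40]}],
}
xml_files = coco_to_voc(sample)
```

Writing each string next to its .jpg would match the current behavior of putting the XML files in the source directory.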

Then run /gpu/root/nn/tflow/model_maker.py

model_maker2.py seems to have been broken by many Python updates. It takes 9 hours for 300 epochs of efficientdet-lite1, so model_maker2.py would be a good idea. https://hackaday.io/project/183329-raspberry-pi-tracking-cam/log/203568-300-epoch-efficientdet A past note said 100 epochs was all it needed.

lion mclionhead