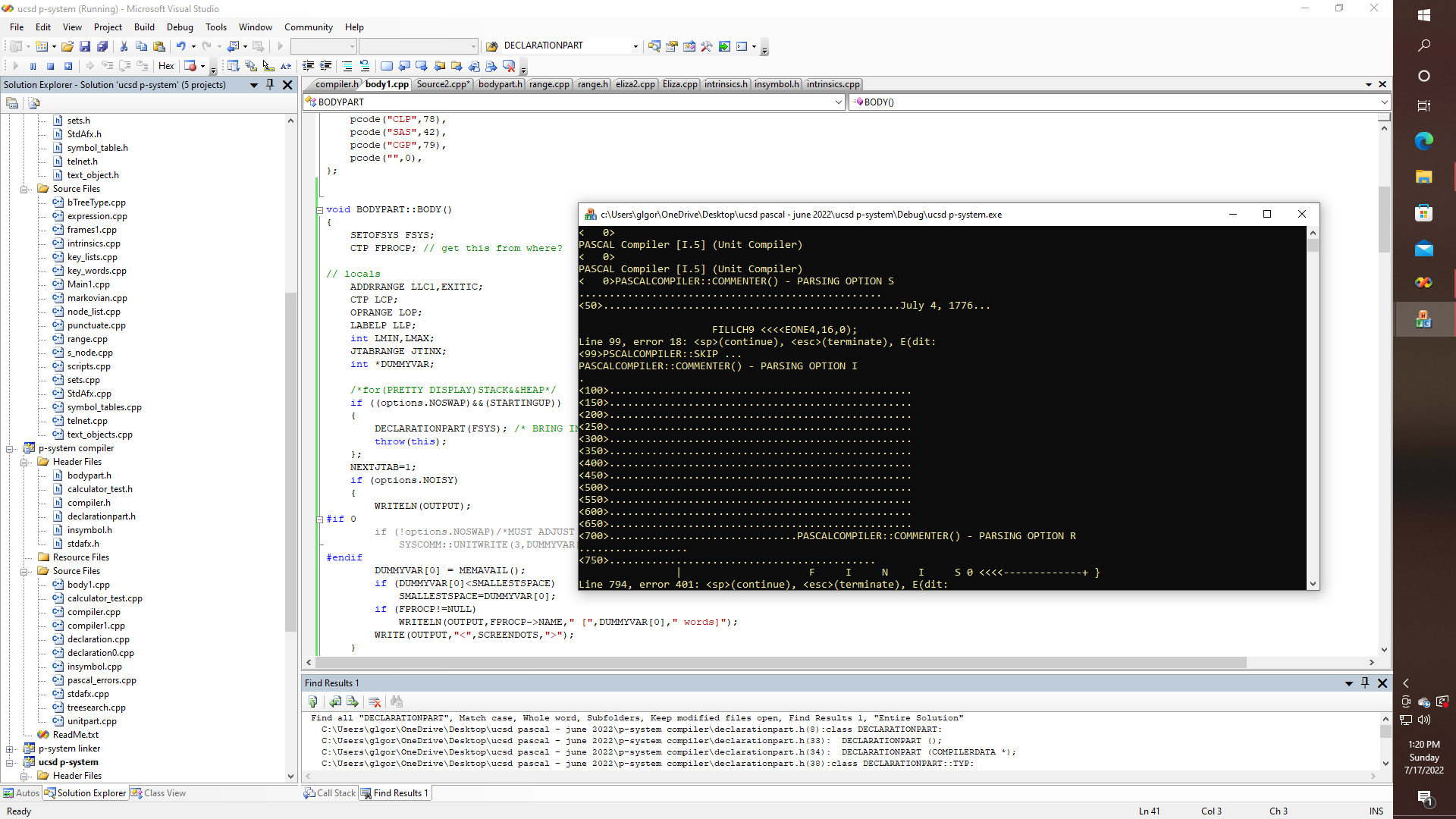

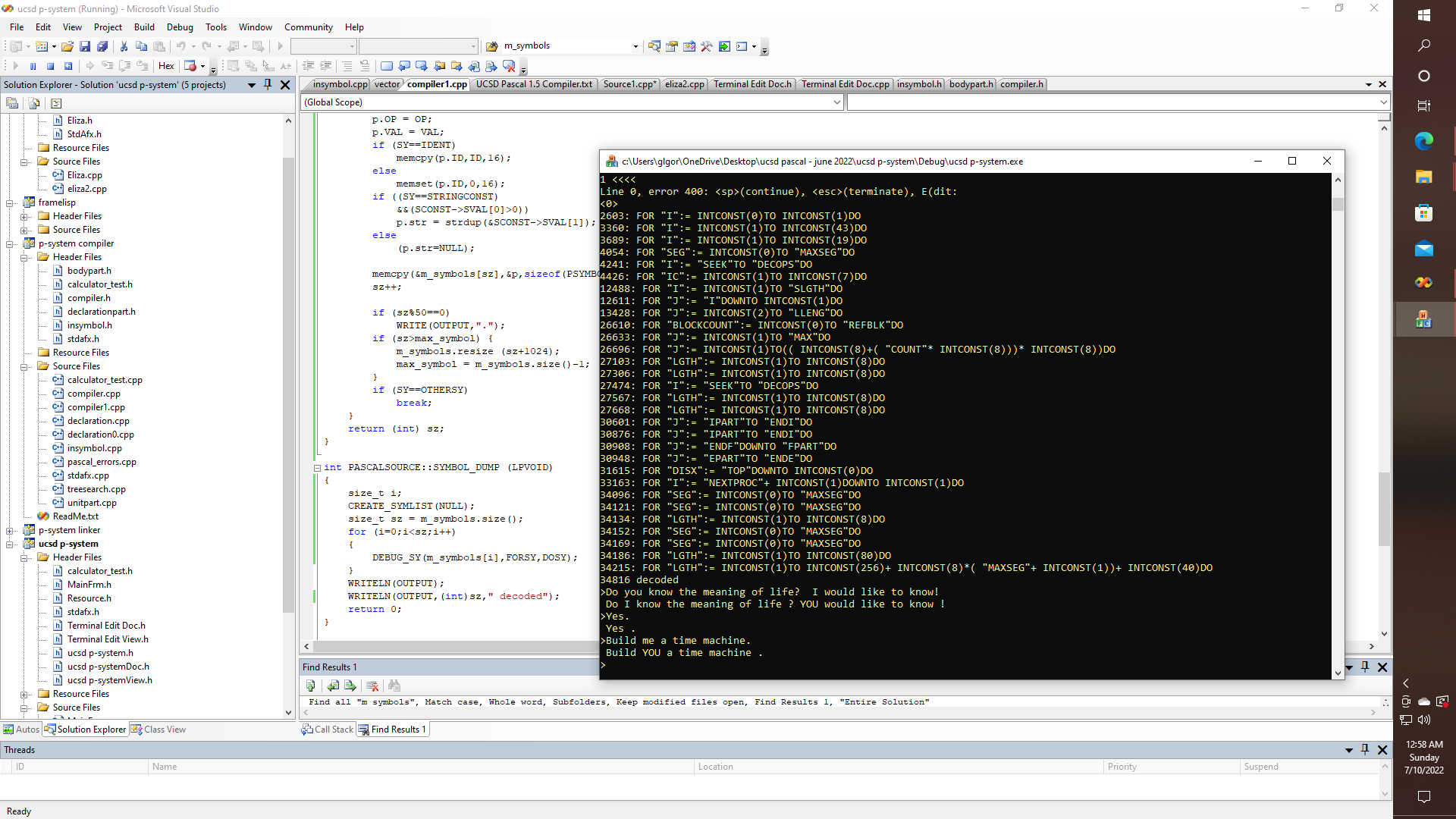

Several approaches are commonly taken when developing projects that involve some type of AI. In the traditional approach, interaction with a simulated intelligence is produced by combining simple pattern-matching techniques with some type of scripting language. This can provide a seemingly lifelike experience that is highly effective within some contexts, even if only up to a certain point. This is the approach taken by classic chatbots such as ELIZA, PARRY, MegaHAL, SHRDLU, and so on. Whether this type of AI is truly intelligent is open to debate, and arguments can be made both for and against. Nobody can reasonably claim that such systems are in any way sentient, yet when they work, they tend to work extremely well.
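As a rough illustration of the pattern-matching-plus-script idea, here is a minimal sketch in the spirit of ELIZA. The rule table, the `respond` function, and the canned replies are all made up for this example and are not taken from any of the programs named above.

```python
import re

# Hypothetical rule table: each entry pairs a regular expression with a
# response template. "{0}" is filled with the text captured by the pattern.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]

DEFAULT_REPLY = "Please tell me more."


def respond(utterance: str) -> str:
    """Return the reply for the first rule that matches, else a stock reply."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Strip trailing punctuation so the echoed fragment reads cleanly.
            captured = [group.rstrip(".!?") for group in match.groups()]
            return template.format(*captured)
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I need a vacation.", "I am tired", "Hello there"]:
        print(line, "->", respond(line))
```

Everything the program "knows" lives in the rule table, which is why this style is cheap to run and easy to reason about, and also why it takes so much hand-written content to get anywhere.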

Most recent attempts at developing AI seem to focus on applications that more accurately model the kinds of behavior associated with the neural networks found in actual biological systems. Such systems tend to be computationally intensive, often requiring massively parallel computing architectures capable of executing billions of concurrent, pipelined non-linear matrix transformations to perform even the simplest simulated neuronal operations. Yet this approach gives rise to so-called learning models that not only have the potential to recognize puppies, but might also be built into networks that try to solve more esoteric problems, such as certain issues in biomolecular research or mathematical theorem proving.
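To make "non-linear matrix transformation" a little more concrete, here is a minimal sketch of a single simulated layer of neurons: a matrix-vector product followed by a squashing function. The function name `dense_layer`, the choice of `tanh`, and the example weights are assumptions for illustration only. Real systems run vast numbers of these in parallel on specialized hardware.

```python
import math


def dense_layer(inputs, weights, biases):
    """Apply one layer: output[i] = tanh(sum_j weights[i][j] * inputs[j] + biases[i])."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Linear part: one row of the weight matrix dotted with the inputs.
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        # Non-linear part: squash the weighted sum into the range (-1, 1).
        outputs.append(math.tanh(total))
    return outputs


if __name__ == "__main__":
    # Two inputs feeding three simulated neurons (made-up numbers).
    example_weights = [[0.5, -0.2], [1.0, 1.0], [-0.7, 0.3]]
    example_biases = [0.0, -0.5, 0.1]
    print(dense_layer([1.0, 2.0], example_weights, example_biases))
```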

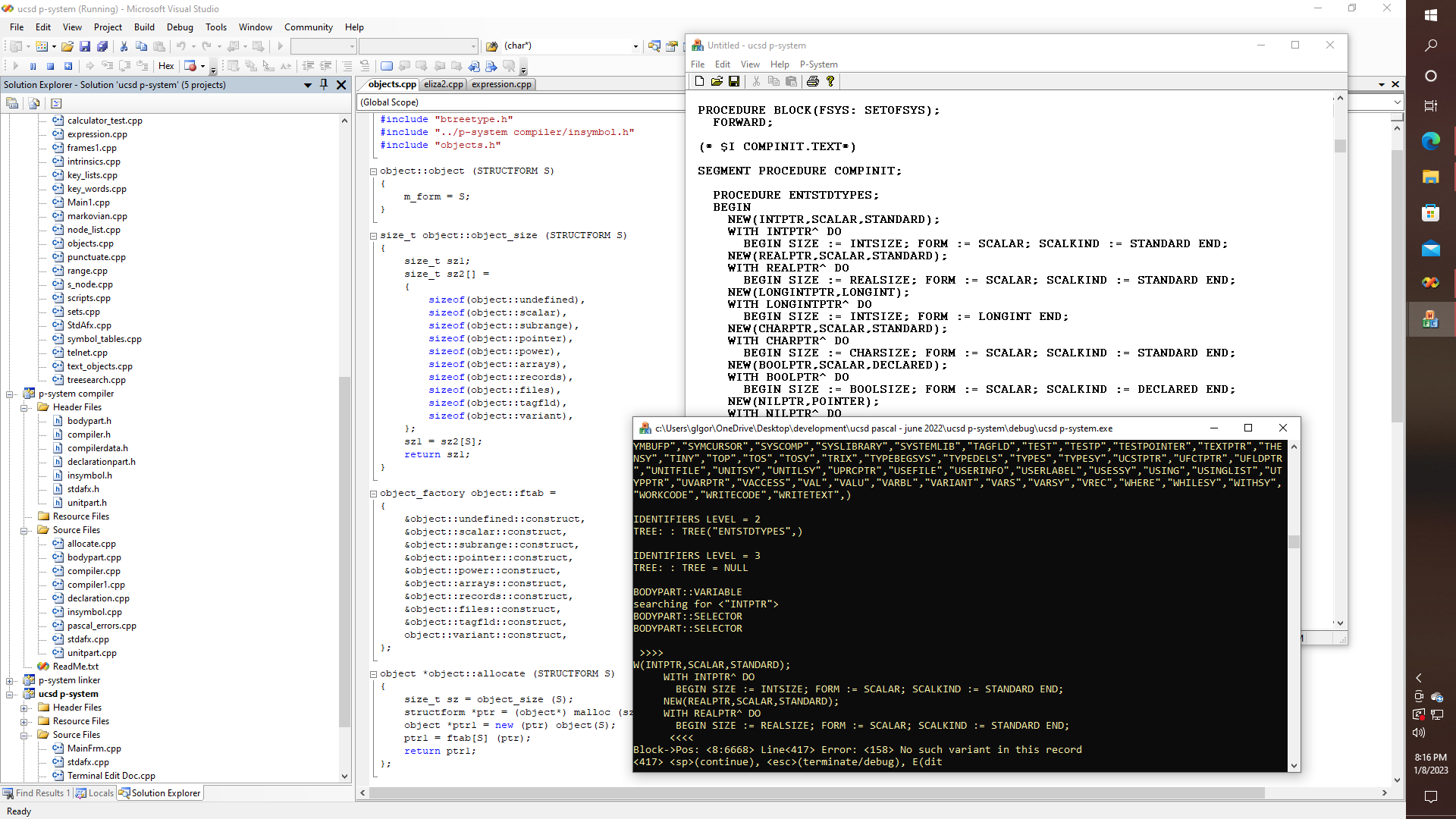

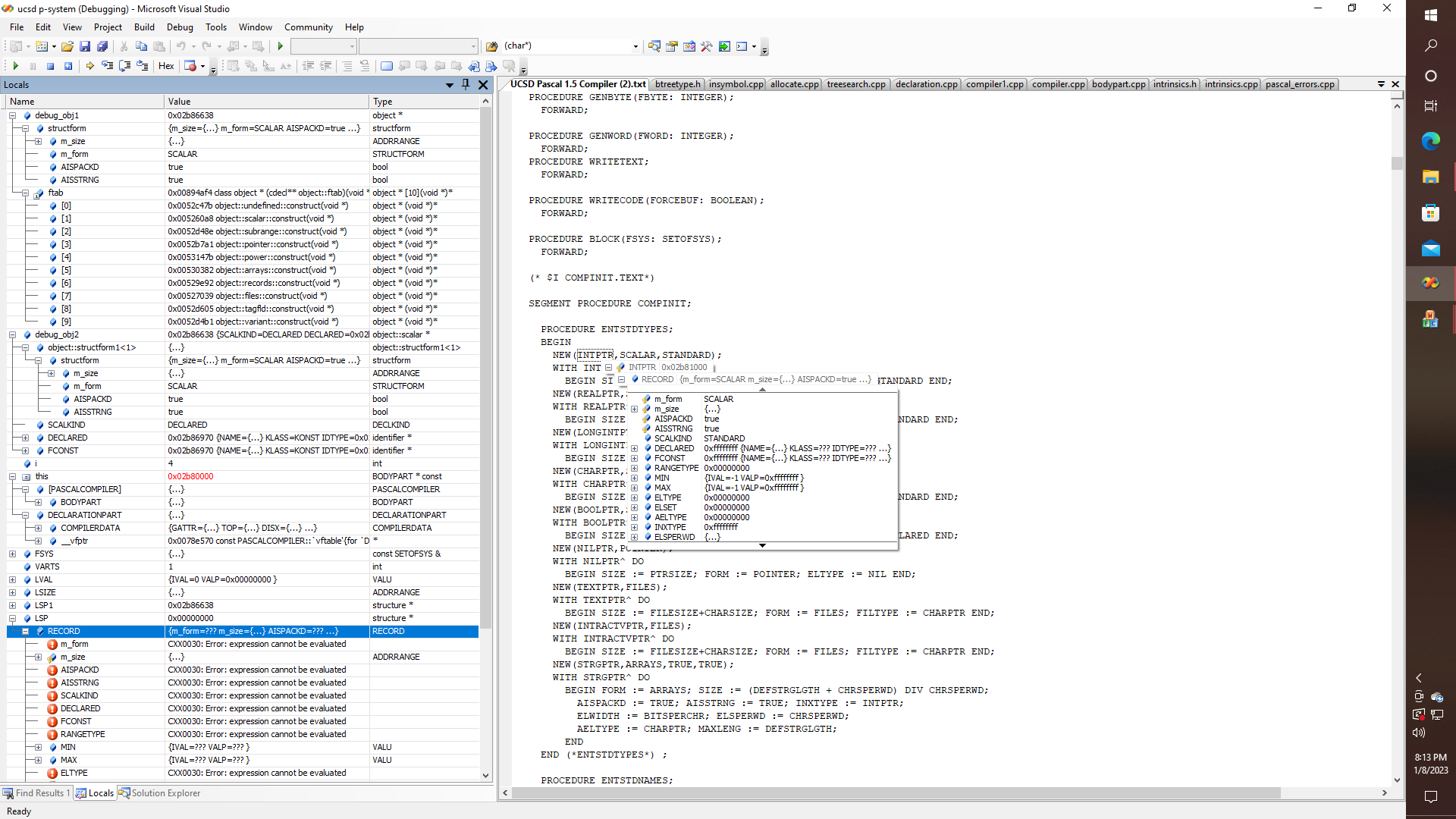

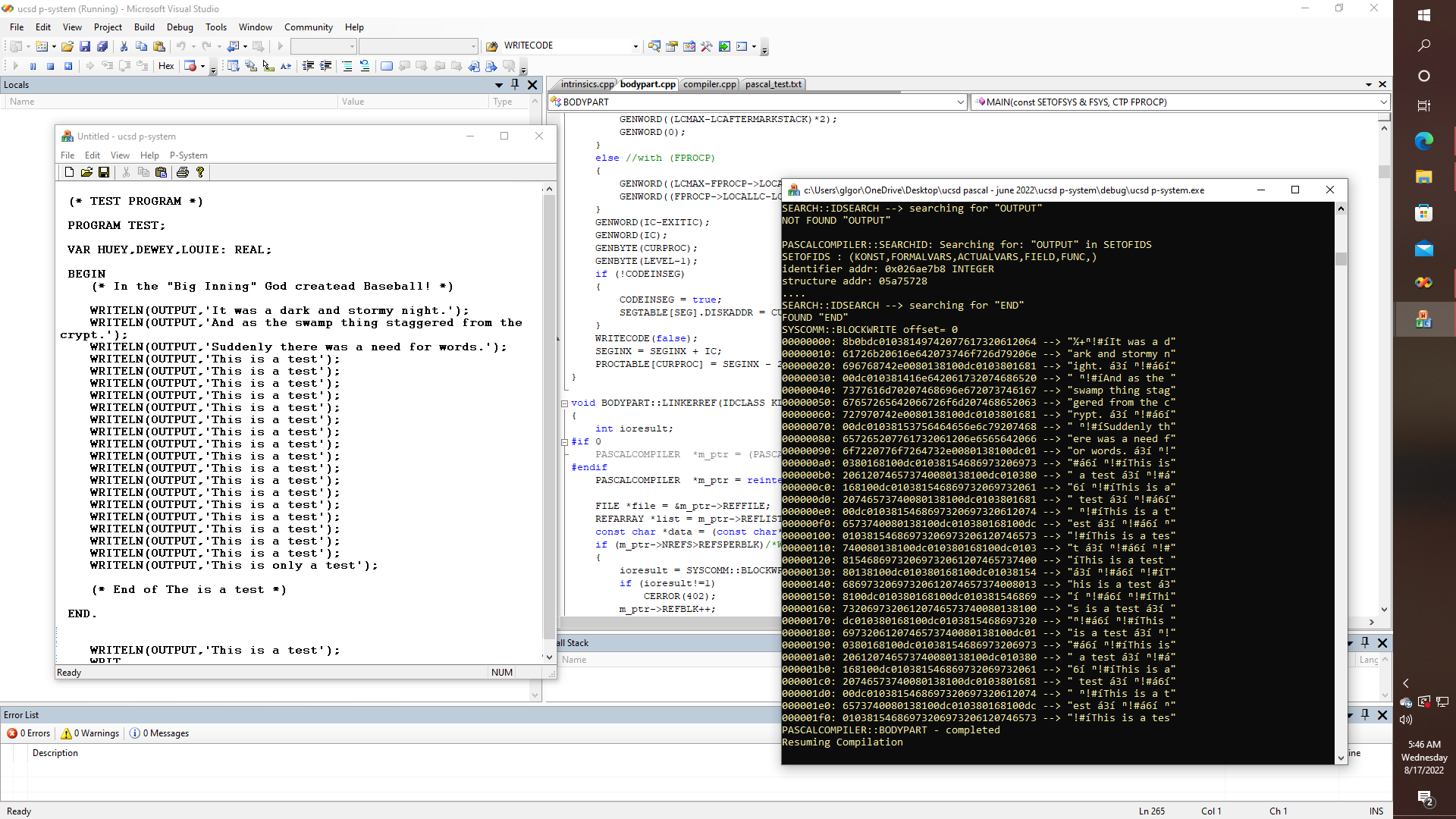

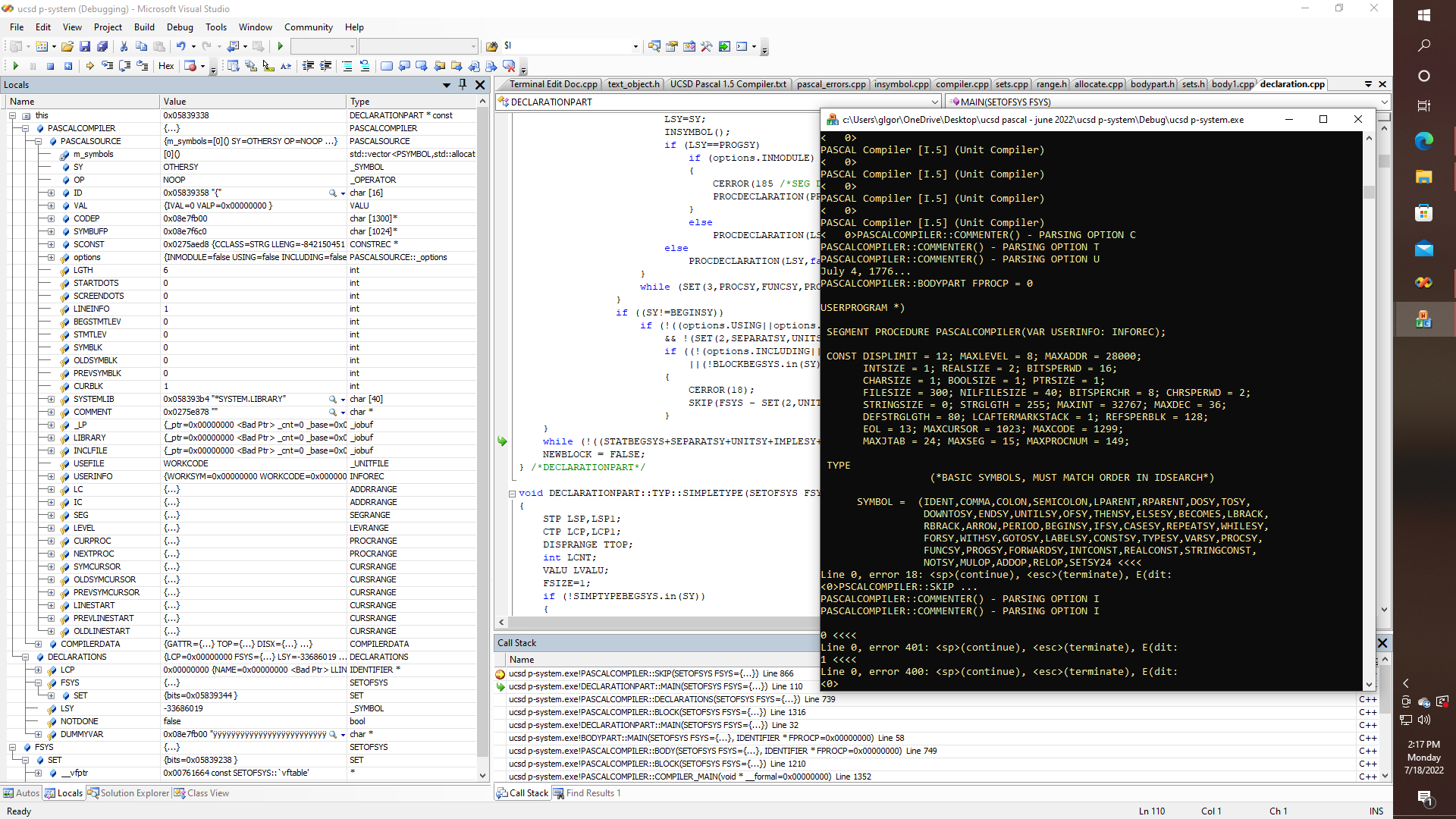

Thus, the first approach seems to work best for problems that we already know how to solve. When it works, it leads to solutions that are both highly efficient and provable, with the main issues being the amount of work that goes into content creation, debugging, and testing.

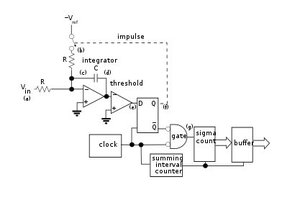

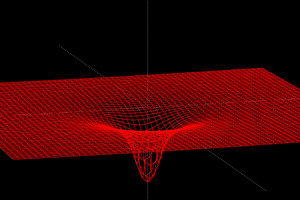

The second approach seems to offer the prospect of creating systems that are arguably crash-proof, at least in the sense that a simulation of a large neural network is just a massively parallel, pipelined matrix-algebra data-flow engine, which from a certain point of view is simplicity itself. That would seem to imply that the hardware, at least, can be made crash-proof within reasonable limits, even if an AI application running on such a system might still hang when the matrix formulation of some problem of interest fails to settle on a valid eigenstate.
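One way to picture "failing to settle" is plain power iteration, used here as a stand-in and not as a claim about how any particular neural simulator works. The sketch repeatedly multiplies a state vector by a matrix and stops when the state no longer changes. The step limit is what keeps a non-settling case from hanging forever. The names `settle`, `max_steps`, and `tolerance` are hypothetical.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(x * x for x in vector))
    return [x / length for x in vector]


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def settle(matrix, start, max_steps=200, tolerance=1e-9):
    """Iterate state <- normalize(matrix @ state) until the state stops changing.

    Returns the settled state, or None if it has not settled within max_steps.
    """
    state = normalize(start)
    for _ in range(max_steps):
        next_state = normalize(mat_vec(matrix, state))
        change = max(abs(a - b) for a, b in zip(next_state, state))
        if change < tolerance:
            return next_state
        state = next_state
    return None  # Gave up: the iteration never settled.


if __name__ == "__main__":
    stretch = [[2.0, 0.0], [0.0, 1.0]]    # settles along the first axis
    rotation = [[0.0, -1.0], [1.0, 0.0]]  # 90 degree turn, never settles
    print(settle(stretch, [1.0, 1.0]))
    print(settle(rotation, [1.0, 0.0]))
```

The stretching matrix settles on a fixed direction, while the rotation just keeps turning the state, so the loop runs out of steps and reports failure instead of hanging.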

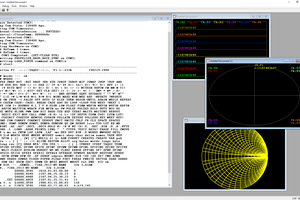

So, let's invent a third approach: introduce some type of neural network of the second type that can hopefully be conditioned to create script engines of the first type. Not that others haven't tried this, for example with so-called hidden Markov models, which just as often bring some kind of Bayesian inference to a hierarchical model. There have been many attempts at this sort of thing, often with interesting, if at times somewhat nebulous, results, e.g., WATSON and OMELETTE. So, obviously, something critical is still missing!

Now, as it turns out, the human genome consists of about 3 billion base pairs of DNA, each of which encodes up to two bits of information. A single set of 23 chromosomes might therefore fit nicely in about 750 megabytes, if it is stored in a reasonably efficient but uncompressed form. If it should turn out that 99% of this does not code for any actual proteins, then all of the information needed to encode the proteins that go into every cell in the human body might only need a maximum of about...
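The 750 megabyte figure can be checked with a few lines of arithmetic, and the two-bits-per-base idea can be shown by packing four bases into each byte. This is only a sketch: the function names and the particular bit assignment for A, C, G, and T are arbitrary choices for the example, and "megabyte" is taken to mean one million bytes.

```python
BASE_PAIRS = 3_000_000_000  # approximate size of the human genome
BITS_PER_BASE = 2           # four possible bases need two bits each

# Arbitrary two-bit code for each base (an assumption for this sketch).
BASE_CODES = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}


def genome_size_megabytes(base_pairs=BASE_PAIRS, bits_per_base=BITS_PER_BASE):
    """Uncompressed size in megabytes (10**6 bytes) at a fixed bits-per-base."""
    return base_pairs * bits_per_base / 8 / 1_000_000


def pack_bases(sequence):
    """Pack a string of A/C/G/T into bytes, four bases per byte."""
    packed = bytearray()
    for i in range(0, len(sequence), 4):
        chunk = sequence[i:i + 4]
        byte = 0
        for base in chunk:
            byte = (byte << 2) | BASE_CODES[base]
        # Left-align a short final chunk by padding with zero bits.
        byte <<= 2 * (4 - len(chunk))
        packed.append(byte)
    return bytes(packed)


if __name__ == "__main__":
    print(genome_size_megabytes(), "MB")
    print(pack_bases("ACGTAC").hex())
```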

glgorman