In other news, I am continuing to port the UCSD Pascal compiler to C++, so that I will eventually be able to compile Pascal programs to native Propeller assembly, or else implement P-code for the Propeller, the Arduino, or both, or perhaps for a NOR computer. Approximately 4000 of the nearly 6000 lines of the original Pascal code have been converted to C++, to the point that the converted code compiles and is for the most part operational, although it needs further debugging. As discussed in a previous project, I had to implement some functions that are essential to the operation of the compiler, such as TREESEARCH, which is undocumented and missing, and IDSEARCH, which is referred to as being "magic" but is also missing from the official distribution. Likewise, I had to implement Pascal-style sets, as well as some form of the WRITELN and WRITE functions, and so on. This amounts to several thousand additional lines of code that will also need to be compiled into any eventual Arduino or Propeller runtime library. Then let's not forget the p-machine itself, which I have started on, at least to the point of having some floating-point functionality for the Propeller, Arduino, NOR machine, etc.

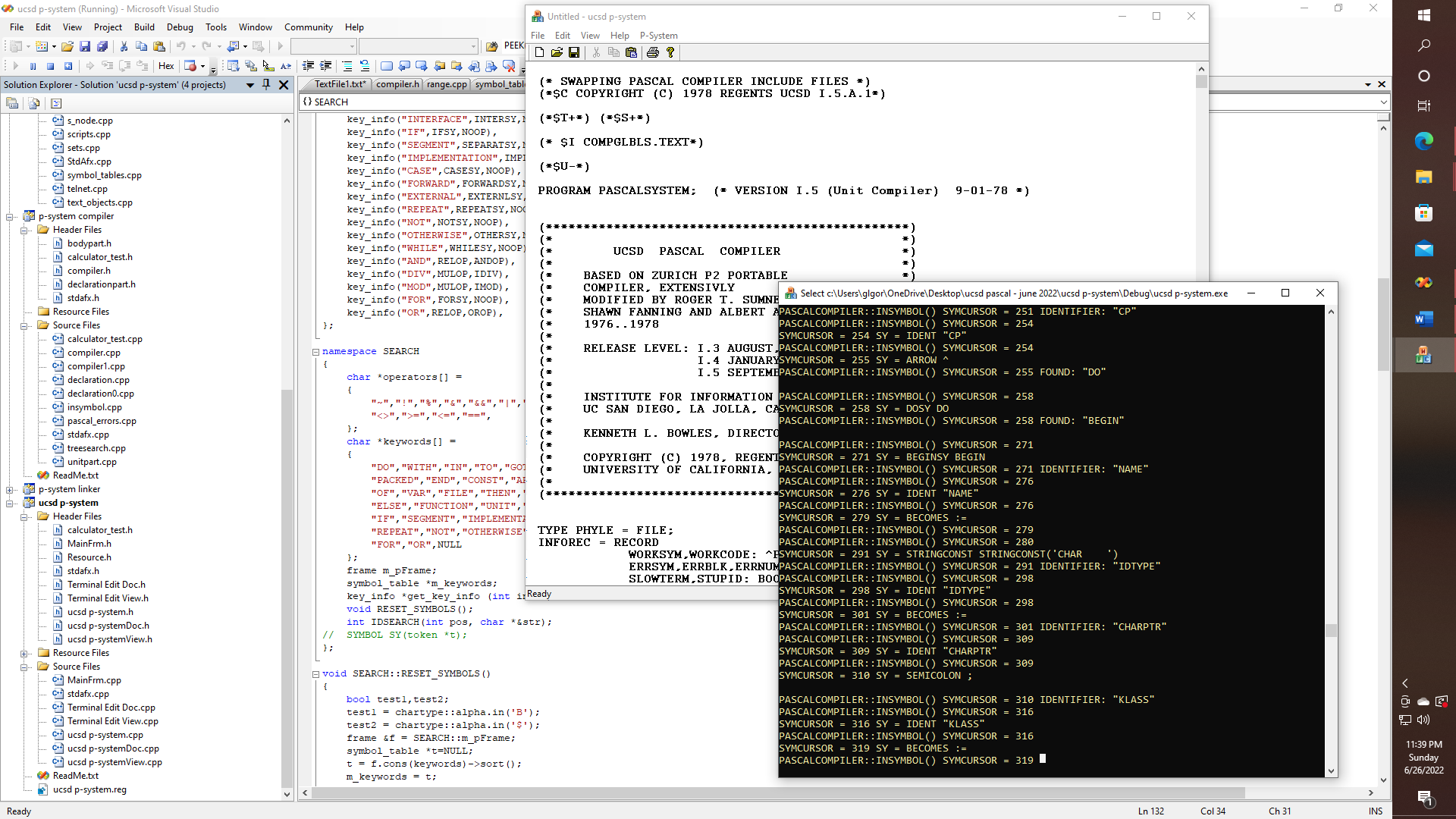

Here we can see that the compiler, which is being converted to C++, is finally starting to be able to compile itself. The procedure INSYMBOL is mostly correct, and the compiler gets far enough into the procedures COMPINIT and COMPILERMAIN to perform the first stages of lexical analysis.

Now, as far as AI goes, where I think this is headed is that it will eventually be useful to express the complex grammars associated with specialized command languages in some representational form that works sort of like BNF or JSON, but which ideally is neither. That is where the magic comes in. Suppose we have this simple struct definition:

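```cpp
#include <cstring>  // strcpy_s (MSVC / C11 Annex K)

// One keyword-table entry: the keyword's spelling plus the symbol and
// operator codes that the lexer reports for it.
struct key_info
{
    ALPHA ID;       // keyword text
    SYMBOL SY;      // symbol class, e.g. DOSY
    OPERATOR OP;    // operator code, or NOOP for non-operators

    key_info() { }

    // const char* so that string literals can be passed directly
    key_info(const char *STR, SYMBOL _SY, OPERATOR _OP)
    {
        strcpy_s(ID, 16, STR);  // assumes ALPHA holds at least 16 chars
        SY = _SY;
        OP = _OP;
    }
};
```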

Then we can try to define some of the grammar of Pascal like this:

```cpp
key_info key_map[] =
{
    key_info("DO",DOSY,NOOP),
    key_info("WITH",WITHSY,NOOP),
    key_info("IN",RELOP,INOP),      // IN is a relational operator, not the SET keyword
    key_info("TO",TOSY,NOOP),
    key_info("GOTO",GOTOSY,NOOP),
    key_info("SET",SETSY,NOOP),
    key_info("DOWNTO",DOWNTOSY,NOOP),
    key_info("LABEL",LABELSY,NOOP),
    key_info("PACKED",PACKEDSY,NOOP),
    key_info("END",ENDSY,NOOP),
    key_info("CONST",CONSTSY,NOOP),
    key_info("ARRAY",ARRAYSY,NOOP),
    key_info("UNTIL",UNTILSY,NOOP),
    key_info("TYPE",TYPESY,NOOP),
    key_info("RECORD",RECORDSY,NOOP),
    key_info("OF",OFSY,NOOP),
    key_info("VAR",VARSY,NOOP),
    key_info("FILE",FILESY,NOOP),
    key_info("THEN",THENSY,NOOP),
    key_info("PROCEDURE",PROCSY,NOOP),
    key_info("USES",USESSY,NOOP),
    key_info("ELSE",ELSESY,NOOP),
    key_info("FUNCTION",FUNCSY,NOOP),
    key_info("UNIT",UNITSY,NOOP),
    key_info("BEGIN",BEGINSY,NOOP),
    key_info("PROGRAM",PROGSY,NOOP),
    key_info("INTERFACE",INTERSY,NOOP),
    key_info("IF",IFSY,NOOP),
    key_info("SEGMENT",SEPARATSY,NOOP),
    key_info("IMPLEMENTATION",IMPLESY,NOOP),
    key_info("CASE",CASESY,NOOP),
    key_info("FORWARD",FORWARDSY,NOOP),
    key_info("EXTERNAL",EXTERNLSY,NOOP),
    key_info("REPEAT",REPEATSY,NOOP),
    key_info("NOT",NOTSY,NOOP),
    key_info("OTHERWISE",OTHERSY,NOOP),
    key_info("WHILE",WHILESY,NOOP),
    key_info("AND",MULOP,ANDOP),    // AND binds like a multiplying operator in Pascal
    key_info("DIV",MULOP,IDIV),
    key_info("MOD",MULOP,IMOD),
    key_info("FOR",FORSY,NOOP),
    key_info("OR",ADDOP,OROP),      // OR binds like an adding operator in Pascal
};
```

And just like that, who needs BNF, or regex, or JSON? That is where this train is headed, hopefully! The idea is, of course, to extend this concept so that the entire specification of any programming language (or command language) can be expressed as a set of magic data structures containing lists of keywords, function pointers, and associated parameters such as additional parsing information, type IDs, etc.
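To make the "keywords plus function pointers" idea a little more concrete, here is a minimal sketch. Everything in it is hypothetical and not taken from the actual compiler: the `Symbol` enum is a tiny stand-in for the real SYMBOL type, and `keyword_rule`, `find_rule`, `dispatch`, and the `parse_*` handlers are names invented for illustration. The handlers only append to a log string, where a real parser would consume tokens.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical stand-in for the compiler's SYMBOL type (the real one is much larger).
enum Symbol { IFSY, WHILESY, REPEATSY, IDENT };

// A handler is the action attached to a keyword. These only record their name.
using Handler = void (*)(std::string &log);

static void parse_if(std::string &log)     { log += "if;"; }
static void parse_while(std::string &log)  { log += "while;"; }
static void parse_repeat(std::string &log) { log += "repeat;"; }

// One table row: spelling, symbol code, and the function that handles it.
struct keyword_rule {
    const char *id;
    Symbol sy;
    Handler handler;
};

static const keyword_rule rules[] = {
    { "IF",     IFSY,     parse_if },
    { "WHILE",  WHILESY,  parse_while },
    { "REPEAT", REPEATSY, parse_repeat },
};

// Linear scan over the table; returns nullptr when the word is not a keyword.
const keyword_rule *find_rule(const char *word) {
    for (const keyword_rule &r : rules)
        if (std::strcmp(r.id, word) == 0)
            return &r;
    return nullptr;
}

// Look the word up, run its handler if there is one, and report the symbol.
Symbol dispatch(const char *word, std::string &log) {
    const keyword_rule *r = find_rule(word);
    if (r == nullptr)
        return IDENT;       // not a keyword: treat it as an identifier
    r->handler(log);
    return r->sy;
}

// Small self-check showing the intended behaviour.
void check_dispatch() {
    std::string log;
    assert(dispatch("WHILE", log) == WHILESY);
    assert(log == "while;");
    assert(dispatch("COUNT", log) == IDENT);   // no handler runs
    assert(log == "while;");
}
```

The point of the sketch is only that adding a keyword means adding a row, not writing a new branch in the parser.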

Elsewhere, of course, I did this, just to get a Lisp-like feel to some things:

```cpp
void SEARCH::RESET_SYMBOLS()
{
    frame &f = SEARCH::m_pFrame;
    symbol_table *t = NULL;
    t = f.cons(keywords)->sort();
    m_keywords = t;
}
```

This essentially constructs a symbol table for the keywords that the lexer is to recognize and sorts them, in what is in effect a single line of code! Naturally, I expect that parsing out the AST will eventually turn out to be quite similar, and that this is going to work out quite nicely for any language, whether it is Pascal, C++, LISP, assembly, COBOL, or whatever.
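For readers who want to see roughly what "build the table, then sort it" buys you, here is a minimal sketch using the standard library rather than the project's own `frame` and `symbol_table` classes, whose internals are not shown here. The names `Sym`, `kw_entry`, `build_sorted`, and `lookup_sorted` are assumptions made up for this example. Once the table is sorted by spelling, a keyword lookup can use binary search.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical subset of symbol codes; IDENT_SY means "not a keyword".
enum Sym { IDENT_SY, DOSY, IFSY, OFSY, TOSY };

struct kw_entry {
    std::string id;
    Sym sy;
};

// Take a copy of the unsorted keyword list and return it sorted by spelling.
std::vector<kw_entry> build_sorted(std::vector<kw_entry> kws) {
    std::sort(kws.begin(), kws.end(),
              [](const kw_entry &a, const kw_entry &b) { return a.id < b.id; });
    return kws;
}

// Binary search over the sorted table.
Sym lookup_sorted(const std::vector<kw_entry> &table, const std::string &word) {
    auto it = std::lower_bound(
        table.begin(), table.end(), word,
        [](const kw_entry &e, const std::string &w) { return e.id < w; });
    if (it != table.end() && it->id == word)
        return it->sy;
    return IDENT_SY;
}

// Small self-check showing the intended behaviour.
void check_sorted_lookup() {
    std::vector<kw_entry> raw = {
        { "TO", TOSY }, { "DO", DOSY }, { "OF", OFSY }, { "IF", IFSY }
    };
    std::vector<kw_entry> table = build_sorted(raw);
    assert(table.front().id == "DO");
    assert(table.back().id == "TO");
    assert(lookup_sorted(table, "OF") == OFSY);
    assert(lookup_sorted(table, "COUNT") == IDENT_SY);
}
```

Whether the real `cons(...)->sort()` chain works this way internally is not something this sketch claims; it only shows the same shape of operation with off-the-shelf parts.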

This now gives us one of the missing functions. If you look at the UCSD p-system source distribution, the function IDSEARCH, which this project provides as SEARCH::IDSEARCH, is absent, and the compiler cannot function without it, since it is, after all, a fundamental requirement that we have some method for recognizing keywords. Improvements could be made even beyond what I have done here, such as taking the list that I have created and building a binary tree with the more frequently encountered symbols placed near the top, for even faster searching, as if a compiler that can perhaps handle a few hundred thousand lines of code per minute isn't fast enough. Or perhaps a hash function that works faster yet would be in order. This is not really a requirement right now, but one of the nice things about modular software is that the implementation of this component could be upgraded at some point in the future without affecting the overall design.
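As a rough illustration of the hash-based option, here is a sketch built on `std::unordered_map`. It is not the project's SEARCH::IDSEARCH; the class `keyword_index`, the `Tok` enum, and the method names are all hypothetical. The thing to notice is that callers only see `add` and `find`, so the storage behind them could be swapped for a sorted list or a tree without the callers changing.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>

// Hypothetical token codes; TOK_IDENT means "not a keyword".
enum Tok { TOK_IDENT, TOK_BEGIN, TOK_END, TOK_IF };

// Keyword lookup hidden behind a small interface.
class keyword_index {
public:
    // Register a keyword; a later add() with the same spelling overwrites it.
    void add(const std::string &id, Tok t) { m_map[id] = t; }

    // Average-case constant-time lookup; unknown words are identifiers.
    Tok find(const std::string &word) const {
        auto it = m_map.find(word);
        return it == m_map.end() ? TOK_IDENT : it->second;
    }

private:
    std::unordered_map<std::string, Tok> m_map;
};

// Small self-check showing the intended behaviour.
void check_keyword_index() {
    keyword_index idx;
    idx.add("BEGIN", TOK_BEGIN);
    idx.add("END", TOK_END);
    idx.add("IF", TOK_IF);
    assert(idx.find("END") == TOK_END);
    assert(idx.find("ENDING") == TOK_IDENT);
}
```

Whether hashing would actually beat a sorted table for a keyword list this small is something that would need measuring on the target hardware.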

glgorman
