-

This is the tangent that doesn't end

02/24/2017 at 06:07 • 0 comments

yes it goes on and on, my friend.

Trying to backup an old/unique KayPro's system diskette... Yep, we're talking a 30y/o diskette.

Have managed, allegedly, to extract all but 60 or so of its 800 sectors. Though, now that I've combined the majority of the data I have extracted, I've discovered that my methods for combining that data were neglectful of some key information... On the plus-side, I don't think I need to add any wear to the diskette to fix that neglect. On the other hand, that combination of extracted data, alone, was a good two days' worth of work that now has to be repeated in a new, more complicated way, which I can barely wrap my head around.

So far, we've got the KayPro's 360K floppy drive attached to a Pentium running linux, using fdrawcmd and countless hours of scripts reading and seeking and rereading and reseeking from the other direction... different delays for allowing the head to stop vibrating from a seek, different delays to make sure the spindle's reached the right speed...

Now I'm using the 1.2MB floppy drive to try again... one thought being that maybe the disk's spindle/centering-hole might've worn over time, in which case the actual tracks may be wobbling with-respect-to the read-head. So, if I use the 1.2MB drive on those tracks, I'll not only have skinnier read-heads that're more-likely to stay "on-track", I'll also have *two* to try. Also, I imagine the head-amplifier expects a weaker signal, so probably has better SNR, etc... Seems like it could be helpful. But I initially found that the 1.2MB drive wasn't reading valid data from the sectors nearly as often as the original 360K drive the system (still, 30 years later) boots from, so switched over to that one early-on.

Dozens of extraction-attempts... Some of those runs had the head seeking back and forth for hours on end. Noisy Bugger. But I only stopped when a few runs in a row weren't able to extract more of the missing data... So, each of the *countless* attempts before that managed to recover more missing data... So that's cool.
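For what it's worth, the bookkeeping behind "keep running passes until they stop finding anything new" can be sketched roughly as below. This is not the script actually used here, just a minimal illustration. The 512-byte sector size, the `disk_image` type, and all function names are assumptions made for the example; only the 800-sector count and the stop rule come from the log.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define SECTOR_COUNT 800   /* from the log: roughly 800 sectors on the diskette */
#define SECTOR_BYTES 512   /* assumption: the log does not state the sector size */

/* One complete image being assembled from many read passes (hypothetical type). */
typedef struct {
    unsigned char data[SECTOR_COUNT][SECTOR_BYTES];
    bool          good[SECTOR_COUNT];   /* true once a sector has read cleanly */
} disk_image;

/* Record one sector from a pass. Returns true only if it filled a gap. */
bool image_add_sector(disk_image *img, size_t sector,
                      const unsigned char *bytes, bool read_ok)
{
    assert(sector < SECTOR_COUNT);
    if (!read_ok || img->good[sector])
        return false;            /* bad read, or this sector is already held */
    memcpy(img->data[sector], bytes, SECTOR_BYTES);
    img->good[sector] = true;
    return true;
}

/* Number of sectors that have never read cleanly. */
size_t image_missing(const disk_image *img)
{
    size_t missing = 0;
    for (size_t s = 0; s < SECTOR_COUNT; s++)
        if (!img->good[s])
            missing++;
    return missing;
}

/* Stop rule from the log: give up once `limit` passes in a row add nothing. */
bool should_stop(size_t newly_recovered, unsigned *dry_passes, unsigned limit)
{
    *dry_passes = newly_recovered ? 0 : *dry_passes + 1;
    return *dry_passes >= limit;
}
```

Keeping the first clean copy of each sector (rather than the latest) means a later, marginal read can never overwrite data that already verified.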

It's a wonder these things ever worked, really... There're so many factors! I'm betting even minor amounts of thermal expansion/contraction could have a dramatic effect on head-alignment.

But I haven't really been able to think about anything else since this stupid side-project started... kinda draining, to say the least. Especially considering I don't know if I've really got a use for it. Coulda been using that time to work on #sdramThingZero - 133MS/s 32-bit Logic Analyzer instead, which would render this ol' clunker a bit obsolete... but my head's just not been there.

It is cool, though... 20MS/s from the 1980's! The entirety of the software fits on a 360K disk, and still manages to do things like advanced-triggering and disassembly of the waveforms (in Z80, anyhow). Would definitely be a shame to see it lost to bit-rot.

-

Warm-Fuzzy

02/17/2017 at 12:07 • 0 comments

I'm getting a warm-fuzzy feeling reading the DOS-based multi-color 80x25-character help-screen in a program copyright late in the first decade of the twenty-first century, explicitly mentioning its compatibility with (and workarounds for) PC/XT's running at 4.77MHz, to 486's running "pure DOS", to systems running Windows 2000 through XP, in backing-up and creating 5.25in floppy disks for systems like KayPros and Apple II's.

Seriously, you have no idea how warm-fuzzy this is.

--------

This is a far tangent from this project... But actually not-so-much. In fact, I dug this guy out *to work on* this project. Though it seems I won't need it, it's a pretty amazing system that would've helped if I'd've known anything about it early-on. Suffice to say, many years ago I acquired an apparently VERY CUSTOM KayPro. I've been doing quite a bit of searching online, and it would seem there's basically nothing more than a few archived magazine-snippets, and *one* article, regarding this specific unit. Yeahp, there's a whole community (if not several), online, regarding KayPros and CP/M... People going to the effort to backup and restore images of Wordstar and things we have in *much* better functionality these days... Even people creating emulators (both CPU *and* circuitry/hardware) to use those things. And yet it would seem in the entirety of the interwebs, and amongst the entirety of those die-hards, not one has encountered this particular system, let-alone gone to the trouble to document anything about it.

-----------

Forgive my being side-tracked... But the warm-fuzzies come from the fact that those die-hards--wanting to back-up and document things like Wordstar, that most people would consider irrelevant in this era--might just make it possible for someone with my utter-zero knowledge of CP/M, Z80's, or KayPros to document something so apparently unique... (and, yet... still usable even by today's standards!)... I dunno if I'm worthy of this experience. But I'll do my best.

---------

As it stands, I'm staring at a well-written help-screen written for DOS in 2008, compatible with PC/XT's from 1988 through Pentium 4's of the 2000's, explaining how to work with diskettes from an incompatible system from the much-earlier 1980's. It's almost like... well, I won't go into those details. Let's just say it's a nice feeling.

-------

Meanwhile, someone left a huge pile of TNG episodes on VHS in the building's "free-section", so I've been having quite a throw-back these past several days. (Would you believe there's a couple episodes I *don't* remember having seen previously? The first encounter with Ferengis was quite hilarious.)

-

Question For Experts - Disassembly and Data-Bytes?

02/15/2017 at 07:23 • 4 comments

How does a disassembler handle data-bytes intermixed in machine-instructions? Anyone?

Recurring running question in my endeavors-that-aren't-endeavors to implement the 8088/86 instruction-set.

(again, I'm *not* planning to implement an emulator! But it may go that route; it's certainly been running around the back of the ol' noggin since the start of this project.)

I can understand how data-bytes intermixed in machine-instructions could be handled on architectures where instructions are always a fixed number of bytes... just disassemble those data-bytes as though they're instructions... (they just won't be *executed*... I've seen this in MIPS disassembly, though I don't know enough to know whether MIPS has fixed-length instructions).

I can also understand how they're not *executed*... just jump around them.

But on an architecture like the x86, where instructions may vary in length anywhere from 1 to 6 instruction-bytes... I don't get how a disassembler (vs. an executer) could possibly recognize the difference between a data-byte stored in "program-memory" vs., e.g., the first instruction-byte in a multi-byte instruction. And, once it's so-done, how could the disassembler possibly be properly-aligned for later *actual* instructions?
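To make the alignment problem concrete, here is a toy sketch (not a real disassembler). It knows just four genuine 8086 opcodes and compares two strategies: a "linear sweep" that decodes every byte in order, and a flow-following pass (a cut-down form of what is usually called recursive traversal) that only decodes bytes execution can reach. The function names are invented for the example, and a real tool would also need to handle conditional branches, calls, and indirect jumps.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Toy decoder: knows only four real 8086 opcodes. Returns length, 0 if unknown. */
static size_t insn_len(uint8_t opcode)
{
    switch (opcode) {
    case 0x90: return 1;   /* NOP            */
    case 0xC3: return 1;   /* RET            */
    case 0xEB: return 2;   /* JMP short rel8 */
    case 0xB8: return 3;   /* MOV AX, imm16  */
    default:   return 0;
    }
}

/* Linear sweep: decode byte after byte, trusting that everything is code. */
void linear_sweep(const uint8_t *code, size_t n, bool *is_insn_start)
{
    size_t pc = 0;
    while (pc < n) {
        size_t len = insn_len(code[pc]);
        if (len == 0 || pc + len > n)
            break;
        is_insn_start[pc] = true;
        pc += len;
    }
}

/* Flow-following: only decode bytes that execution can actually reach. */
void follow_flow(const uint8_t *code, size_t n, size_t entry, bool *is_insn_start)
{
    size_t pc = entry;
    while (pc < n && !is_insn_start[pc]) {
        size_t len = insn_len(code[pc]);
        if (len == 0 || pc + len > n)
            break;
        is_insn_start[pc] = true;
        if (code[pc] == 0xC3)
            break;                                       /* RET ends this path */
        if (code[pc] == 0xEB)
            pc = pc + 2 + (size_t)(int8_t)code[pc + 1];  /* hop over the data  */
        else
            pc += len;
    }
}
```

Fed the bytes `EB 01 B8 90 90 C3` (a short jump over one data-byte that happens to equal the `MOV AX, imm16` opcode), the linear sweep swallows both NOPs as the MOV's operand and marks offsets 0, 2 and 5 as instructions, while the flow-following pass marks 0, 3, 4 and 5, which is what the CPU would actually execute.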

Anyone? (I dunno how to search-fu this one!)

-

FINALLY!

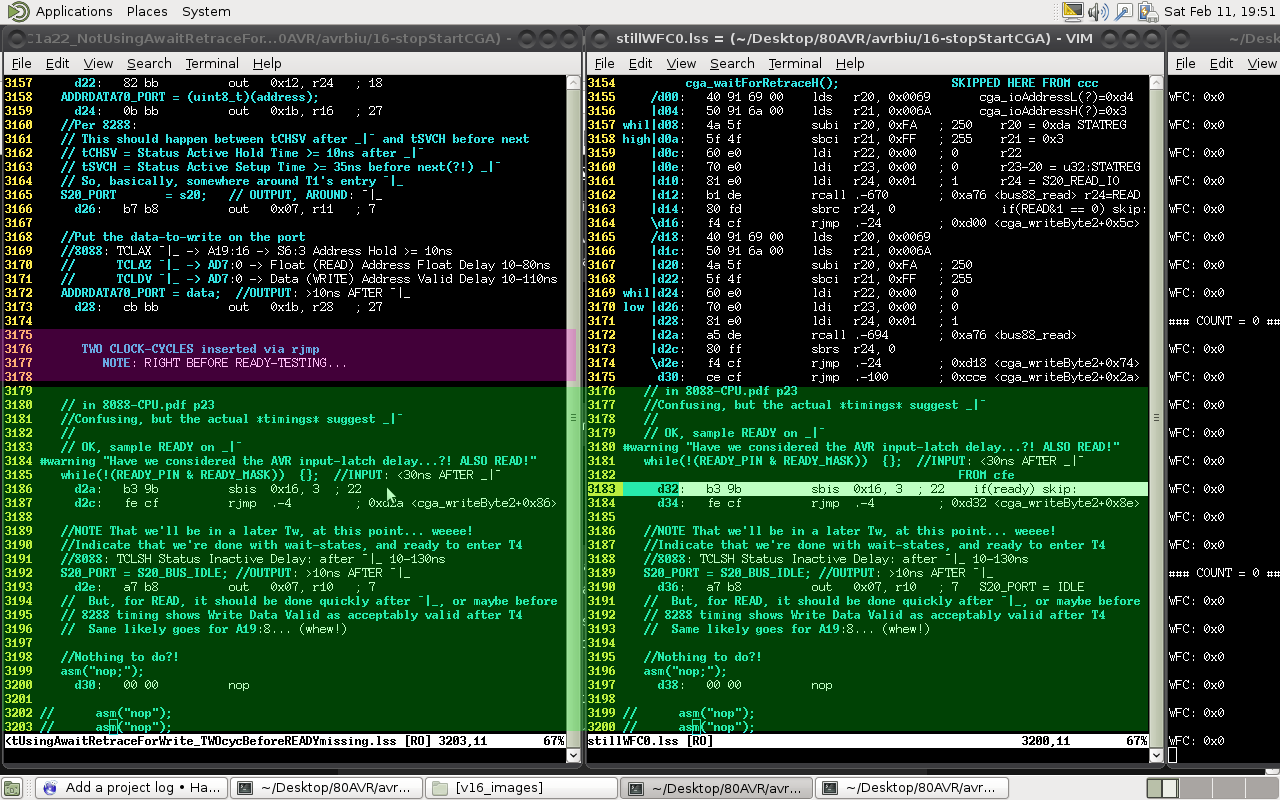

02/12/2017 at 04:29 • 4 comments

Seriously, look at the number of experiments...

![]()

The signs were all there:

    #warning "Have we considered the AVR input-latch delay...?!"

I like that one the best...

Yahknow, it only reminded me to look into the actual cause of the problem *every time I compiled* since the very beginning. (It's been years, but I still haven't gotten used to the new gcc warning-output... it's too friggin' verbose. Does it really need to show the column of the line that starts with \#warning, then show the \#warning *output* as well?)

------------

So, here's what it boils down to: early-on (when I wrote that warning) I figured there was basically no way in he** this could possibly be less than at least a few instructions... Read the port-input-register, AND it, invert it, set up a loop, etc...

    while(!(READY_PIN & READY_MASK)) {};

In reality it compiles to TWO instructions!

    sbis 0x16, 3
    rjmp .-4

And, technically, the read-input instruction grabs the value stored in its latch during a *previous* clock-cycle.

I had a vague idea it might need to be looked into, but I was *pretty sure* I had it right... but not *certain*.

Then the friggin' ORDEAL with h-retrace seemingly confirming various theories... And boy there were many... Friggin' rabbit-hole.

So, then... this last go-round I had it down...

After the rabbit-hole revealed its true nature, I found a place of utmost-oddity...

Inserting the following 'if(awaitRetrace)' before retraceWait() somehow magically fixed the problem, in its entirety. I mean, the rabbit-hole got me close. Visually I was getting no errors, but it was still taking about 500 retries (out of 2000 bytes) to get it there. But somehow inserting that if-statement before the already-running retraceWait() fixed it 100%.

So, at that point I'd inlined bus88_write() which didn't have any significant effect... maybe the error-rate dropped, slightly, that first-try, but whatever benefit was easily wiped-out by the uncanny results of various seemingly irrelevant changes, such as adding a nop between write and read-back.

But I left it inline, anyhow, thinking maybe it'd reduce the number of instructions between detecting the h-retrace and actually-writing. (In case, maybe, all those pushes/pops, jumps, returns might've taken longer than the actual h-retrace).

So, when I added the if(awaitRetrace), what I'd *planned* on doing was reusing the cga_writeByte() function for two purposes, I'd have two tests: one like I'd been doing--drawing while the screen is refreshing (waiting for retrace), and a new one, where I'd shut down the video-output, and only *then* write the data. Then compare the error-rates of the two. (Thus, in the second case, I'd have to disable retrace-waiting, since there would be no retracing).

That was the idea.

All I did was add an argument for awaitRetrace to cga_writeByte, and add that if-statement. Then called it with my normal code, (I hadn't written-up the stop-output portion yet) expecting it to function *exactly* the same (but figuring that'd be a lot to ask, since merely inserting a nop in random places was enough to cause dramatic changes).

Instead, 100% accurate. For the first time in this entire endeavor.

How the HECK could an if-statement whose argument is 1--wrapping a function which was previously called anyhow--fix the problem?!

So I looked into the assembly-listings side-by-side.

And, sure-enough, the optimizer did its thing. I'll come back to what it did.

Usually I'd write things like bus88_write in assembly to make *certain* the optimizer wouldn't interfere with timing. An easy example is

    uint8_t byteB = byteA&0xAA;
    PORTA = byteA;
    PORTB = byteB;

Which the optimizer might-well compile as, essentially:

    PORTA = byteA;
    byteA &= 0xAA;
    PORTB = byteA;

That way it doesn't have to use an additional register. But, the problem is, now PORTB's write occurs *two* cycles after PORTA's, rather than the *one* intended.

I haven't been in the mood to refresh myself on inline-assembly, lately, so instead I'd been keeping an eye on the assembly-output nearly every time I made a change to the bus88_write() function to make sure that sorta stuff didn't happen. And it didn't.

Until I inlined it... And even *then* it didn't.

Until I added that if-statement around a completely different function.

I'll spare *all* the details, but here's the important bit:

![]()

The key-factor is that the inlined h-retrace function, since it now belongs in an if-statement, is moved *below* its place in the C code, and jumped-to. It happens to be placed *exactly* before the inlined write-function's READY-testing routine. (Why there? Dumb-luck again, I guess).

![]()

So, the one on the *right* side is the "new" one that works. It has the if-test, and remapped the h-retrace functionality to literally right before the READY testing in bus88_write's functionality. And to get around that, it inserted a jump. And that jump takes two clock-cycles.

Thus, delaying the testing of the READY-pin by two bus-clocks, and thus, apparently, giving the CGA card enough time to output its "NOT READY! WAIT!" Flag.
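A toy timing model shows why two extra clocks before the first READY test can be the whole difference. Everything numeric here is hypothetical (the log never measured when the card actually pulls READY low), and the struct and function names are made up for the sketch. The one grounded figure is the poll period: the `sbis`/`rjmp` pair loops every 3 clocks, assuming the usual AVR costs of one clock for a non-skipping `sbis` and two for `rjmp`.

```c
#include <assert.h>
#include <stdbool.h>

/* All times are in CPU clocks, counted from the start of the bus write.
 * The values used with this struct are hypothetical. */
typedef struct {
    int not_ready_from;    /* clock at which the card pulls READY low      */
    int not_ready_until;   /* clock at which the card releases READY again */
} card_timing;

/* What the READY pin looks like at a given clock. */
bool ready_at(const card_timing *card, int clk)
{
    return clk < card->not_ready_from || clk >= card->not_ready_until;
}

/* Clock at which a polling loop that first samples at first_sample
 * sees READY high and lets the bus cycle finish. */
int cycle_ends_at(const card_timing *card, int first_sample, int poll_period)
{
    assert(poll_period > 0);
    int clk = first_sample;
    while (!ready_at(card, clk))
        clk += poll_period;
    return clk;
}

/* The write only lands if the CPU waited out the card's NOT-READY window. */
bool write_lands(const card_timing *card, int first_sample, int poll_period)
{
    return cycle_ends_at(card, first_sample, poll_period) >= card->not_ready_until;
}
```

With a made-up card that is NOT READY from clock 3 to clock 9, a first sample at clock 2 sees a stale "ready" and the write is lost, whereas a first sample at clock 4 (two clocks later, as with the inserted jump) catches the wait request and holds the cycle until clock 10.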

------------

So the be-all-end-all solution to literally a week's worth of harassment (and over 80 experiments!) is to insert a danged NOP (or two) where I once thought it might be wise to consider inserting a NOP (or two).

-----------

And all that stuff about h-retrace's seemingly fixing the problem at times...? Just dumb luck I guess. Worse than dumb-luck, BAD dumb-luck, until it *finally* sorta redeemed itself for all that harassment, here...

-

dumb-luck wins - Color Text is now reliable

02/11/2017 at 17:17 • 2 comments

UPDATE2: MWAHAHAHAHAHAHAHA. See the bottom...

UPDATE: DAGNABBIT DUMB-LUCK AGAIN. See the bottom.

-------------

Long story short: wait for horizontal-retrace between *every* character/attribute read/write. (Or don't... this is all wrong. See the updates at the bottom and the next log)

------------

So, it would seem...

(I tried to write this next paragraph as a single sentence... you can imagine how that went):

The last log basically covers the fact that I made a mistake in my implementing a test for the hypothesis that this clone CGA card ignores bus-read/writes while it reads VRAM to draw pixels. Despite the mistake (and it was a big one), the result was *exactly* what I was expecting, seemingly confirming my hypothesis. That "confirming"-result was, in fact, nothing but dumb-luck. And, in fact, it seems completely strange that it appeared *any* different than the earlier experiments for earlier hypotheses, let alone *confirmational* of the latest one.

(This is why I *hated* chemistry labs. "1-2 hours" usually took me two 8-hour days, or more).

So, yes, the results appear to have led to the *right* confirmation, but the way those results were acquired was no more confirmational than any other form of dumb-luck.

------------

So the end-result is that *every* read or write should be prefaced with a wait-for-horizontal-retrace. Once that's done, the write/read/verify/(repeat) process dropped from (at one point) 500% errors to less than 10% repeats, and no on-screen errors.

Still can't explain the need for verify/repeats, but it works.

Also can't explain why *errors* were coming-through on-screen despite the fact I had a write/read/verify/(repeat) loop. The only thing I can think is that maybe a bus-read that occurs at the same time as an on-card pixel-read might result in the PC's reading the byte requested by the *pixel-read* rather than the byte the PC requested. (and, since the memory was *mostly* full of the same data, a read of another location might return the value we're expecting... hmmm).

(Note I refer to "pixel-reads", but that's obviously not correct in text-mode, since the VRAM contains *character*/*attribute* bytes, not bytes of pixel-data. So, by "pixel-read" I mean the CGA card is reading the VRAM in order to generate the corresponding pixels.)

---------------

I should be excited about the fact it's working, and as-expected, no less.

Means my AVR-8088 bus-interface is working!

--------

In reality, I figured waiting-for-retrace was probably a good long-run idea, but I chose not to implement it, yet. I've read in numerous places that writing VRAM while the card is accessing it for pixel-data causes "snow." I didn't care about snow at this early stage. And, yahknow, the more code you put in in the beginning, the more places there are for human-error. This seemed like a reasonably-"educated" trade-off choice.

Also, I didn't just *avoid* it... I did, in fact, look into examples elsewhere... The BIOS assembly-listing shows its use in some places, but *not* in others. (Turns out, in many of those examples they shut-down the video-output altogether... man Assembly is dense!)

Though, upon implementing it, it hadn't occurred to me *just how often* it would be required... h-retrace-write-read is too much!

If I didn't take this path, I wouldn't've discovered that some cards don't respond the same as described ("snow" vs. ignored-writes/reads)... wooot!

----------

UPDATE: Dagnabbit! Dumb-Luck again!

New function:

    void cga_writeByte(uint16_t vaddr, uint8_t byte)
    {
       while(1)
       {
          cga_waitForRetraceH();
          bus88_write(S20_WRITE_MEM, cga_vramAddress+vaddr, byte);

          uint8_t readData;

          cga_waitForRetraceH();
          readData = bus88_read(S20_READ_MEM, cga_vramAddress+vaddr);

          if(readData == byte)
             break;

          writeFailCount++;
       }
    }

The contents of this function were, previously, copy-pasted where needed...

And it worked great.

So now it's in a function...

And now it's called in cga_fill() and numerous other places similarly:

    for(i=0; i<FILL_BYTES; i+=2)
    {
       cga_writeByte(i+1, attributes);
       cga_writeByte(i, FILL_CHAR);
    }

Worked great in most locations (writing numbers 0-9 8 times, indicating that I'm actually running in 80x25, rather than 40x25 like I thought), writing the attribute-value in hex in the upper-left corner... All these look great.

But filling the screen with cga_fill() shows a regular pattern of errors again.

And guess what affects it....

Inserting friggin' NOPs between the two cga_writeByte() calls.

Not just a minor effect, either... Pretty dramatic.

Adding one nop reduces the (visible!) error by half

Adding two nops reduces it to near-zero visible error, but we have 0x900 retries!

Adding three nops increases it again

Four makes it far worse...

Here's waitForRetrace: (Don't want return, etc. to slow it down)

    #define cga_waitForRetraceH() \
    ({\
       /* Wait while H-Retrace status is Active=1 (leave when inactive=0) */ \
       while \
          ((0x1&bus88_read(S20_READ_IO, cga_ioAddress+CGA_STATUS_REG_OFFSET)) ) \
       {} \
       /* TODO: Is this how DMA is prevented from interfering? */ \
       cga_RetraceH_CLI(); \
       /* Wait while H-Retrace status is Inactive=0 (leave when active=1) */ \
       while \
          (!(0x1&bus88_read(S20_READ_IO, cga_ioAddress+CGA_STATUS_REG_OFFSET)) ) \
       {} \
       /* No Return Value */ \
       {}; \
    })

So, dumb-luck strikes again. It worked as-expected earlier, and apparently-perfectly, actually... no fine-tuning/calibration necessary... and apparently only because of dumb-luck. Again, the only difference between now and then was that I moved multiple copies of this same routine into a single function (and the small amount of necessary math to do-so). So all that's changed was timing. And I guess the timing I had before was just lucky enough to be perfect without even trying.

------------

So, entirely-plausibly, there's too much time between detecting the horizontal-retrace and actually writing the bus (between function-calls, pushing, popping, writing the three address-bytes to the bus, whatnot). BUT, that should have no bearing whatsoever on putting NOPs between separate calls to cga_writeByte!

---------

OY!

And, frankly, I don't even know what the heck I'm going to use this for...

-----------------------------------------

UPDATE 2: MWAHAHAHAHAHAHA

In preparation for a more-scientific test, to determine whether it's actually related to pixel-accesses interfering with bus-writes... the goal was to turn the video-output *off* then use the same routine cga_writeByte() to count the errors.

The *only* changes I made were:

- rename cga_writeByte() to cga_writeByte2()

- Add a third-argument: awaitRetrace

- Add "if(awaitRetrace)" before each call to cga_waitForRetraceH()

- \#define cga_writeByte(arg1, arg2) cga_writeByte2(arg1, arg2, TRUE)

That's it.

Now we have 100% write/verify (no retries necessary)... and obviously the screen isn't showing any visible errors, either.

So then I started experimenting with nops... Because, certainly, the only difference should be that if(awaitRetrace (=1)) would insert a delay...

    #define cga_writeByte(vaddr,byte) cga_writeByte2(vaddr,byte,1)

    void cga_writeByte2(uint16_t vaddr, uint8_t byte, uint8_t awaitRetrace)
    {
       while(1)
       {
          // asm("nop"); asm("nop"); asm("nop");
          if(awaitRetrace)
             cga_waitForRetraceH();
          asm("nop"); asm("nop"); asm("nop"); asm("nop");
          bus88_write(S20_WRITE_MEM, cga_vramAddress+vaddr, byte);
          cga_RetraceH_SEI();

          uint8_t readData;
          asm("nop"); asm("nop"); asm("nop");
          // if(awaitRetrace)
             cga_waitForRetraceH();
          readData = bus88_read(S20_READ_MEM, cga_vramAddress+vaddr);
          cga_RetraceH_SEI();

          if(readData == byte)
             break;

          writeFailCount++;
       }
    }

As shown, it works perfectly. Removal of all those nops also works perfectly (that was what I originally tested to get to this point in the first place).

Addition of NOPs *between* write and read showed no difference.

Also, second "if(awaitRetrace)" is commented-out and still-functions (but note that cga_waitForRetraceH() is still called).

Additions of NOPs *above* write's "if(awaitRetrace)" seem functionless. Addition between waitForRetraceH() and bus88_write() do have some effect, but no number of them that I tried would repeat this 100% functionality.

I mean, this is just weird.

Oh, and if I remove the first "if(awaitRetrace)" (again, waitForRetraceH() is *still* called), and mess with nops, then we're quite-literally getting very similar randomness to the very beginning.... back when the errors weren't even vertically-aligned.

I mean, this is just weird.

(I've tried inlining bus88_read/write, as well... Actually, I think since the last time I updated this log. They're inlined now. There was a definite change, upon inlining them, just as adding a NOP here or there causes a definite change, but still pretty much the same. I've also verified the disassembly of that output, and verified that the optimizer didn't reorganize anything... so the actual timings on the bus, within a single transaction, should be identical, in *all* these cases.)

It'll be interesting to see how the universe--or who/what-ever's in charge of games like these--pulls this one together to anything explainable. Am I allowed to forfeit from boredom, or are there consequences? Do I get a fiddle made of gold if I win this one? And, how do I know if I've won... Am starting to think I already have. I've proven this shizzle impossible, as far as I'm concerned. That's *won* in my mind. Now where's my fiddle?

(We're going on 20minutes without one error).

-

weirdness revisited

02/11/2017 at 05:09 • 0 comments

UPDATE: Significant-ish rewriting...

---------

Last time I worked on it... a few days ago, now...

I was trying to determine what was the cause for odd-data. As you may recall, all I was doing was writing the letter 'A' to every position on the screen, along with a color-attribute, then repeating that process, cycling through the color-attributes, incrementing it every second or so.

The result was odd-data. Sometimes the new values would be placed as expected, other times it seems data was not being written to a location. The result was a screen with somewhat random data... Mostly 'A' everywhere, but with various attributes, apparently from previous "fill"-attempts.

In the log before last I wrote a lot on my attempts to explain *why* this was happening.

The first thing was the thought that maybe this cheap-knockoff CGA card was expecting data to *only* be written during the horizontal/vertical retraces... (since that seems to be how the BIOS handles it). The theory being that the card might not have the more-sophisticated RAM-arbitration circuitry of the original IBM CGA card, which would allow writes during pixel-reads (showing "snow" on those occasions). Instead, maybe, this cheaper card's pixel-reads *block* write-attempts from the bus.

Thus, I added a wait for horizontal-resync to the beginning of my process, and suddenly the data-errors aligned in vertical columns. Kinda makes sense... In fact, makes perfect sense.... In fact, exactly what I was expecting. Say the data-errors were caused by a card whose circuitry wouldn't allow write-access at the same time it was *reading* (to draw the next pixel in a line). Then there would be several writes which go-through, then a read of a pixel (and a failed write), then several more writes, then a read, etc. "beating". Makes some amount of sense.

Makes sense, as well, if you imagine that the dot-clock is faster than the bus-clock used for writing data... you'll get several read-pixels, but every once in a while [periodically] a write will come through. If that write happens at the time a pixel's being read, it would be ignored, otherwise they might be slightly misaligned and both would go through. AND, if you believe that to be the issue, that could very-well explain the newest problem which I called "even weirder" (which, upon revising this log-entry, I never really get around to explaining).
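The "beating" idea can be played with in a few lines. This is a hypothetical model only, with invented names and numbers: time is counted in ticks of some shared base clock, the card is busy reading VRAM for the first `read_width` ticks of every `read_period`, and the CPU issues one write every `write_period` ticks. A write that lands inside a read window is assumed lost, which is the theory above rather than anything confirmed about this card.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model; all three values are in ticks of a common base clock. */
typedef struct {
    unsigned read_period;    /* the card starts a VRAM read this often      */
    unsigned read_width;     /* ...and stays busy for this many ticks       */
    unsigned write_period;   /* ticks between successive CPU writes         */
} beat_model;

/* True if the k-th write arrives while the card is busy reading. */
bool write_blocked(const beat_model *m, unsigned k)
{
    assert(m->read_period > 0);
    unsigned t = k * m->write_period;
    return (t % m->read_period) < m->read_width;
}

/* Mark which of the first n writes are lost; returns how many were. */
size_t mark_lost_writes(const beat_model *m, bool *lost, size_t n)
{
    size_t count = 0;
    for (size_t k = 0; k < n; k++) {
        lost[k] = write_blocked(m, (unsigned)k);
        if (lost[k])
            count++;
    }
    return count;
}
```

With, say, a read every 4 ticks lasting 1 tick and a write every 9 ticks, exactly every fourth write is lost (writes 0, 4, 8, 12 of the first 16): several writes go through, then one fails, and the pattern repeats.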

And now the "errors" would be aligned in vertical columns because the write-procedure waits until a horizontal retrace... so all writes in each row would be aligned to the left of the screen... right? So I continued my experiments on this theory.

BUT: There are SEVERAL problems with this theory...

Problem One: I didn't write only a *row* of data after the horizontal-retrace signal. Nor did I verify we were still in the horizontal-retrace before writing each byte. In fact, I wrote the entire screen's worth of data. So looking back there should be *NO* inherent guarantee of vertical-alignment of the errors due to the addition of retrace-waiting. In fact, it really shouldn't've changed *anything* regarding error-alignment, except through luck.

320 pixels are drawn in each row and there's some horizontal-porch time, as well, before the next line is drawn. But 40*25*2=2000 data-locations are written after that first horizontal-retrace, to fill the screen's character-memory. Assuming the bus-clock and the pixel-clock were the same, we'd also have to consider that each bus-transaction is a minimum of 4 bus-clocks, so now we're at 8000 pixel-times' worth of data. (And, I think the pixel-clock runs faster than the bus-clock). We're talking each "fill"-process is at least an *order-of-magnitude* longer than a single row's being drawn. Probably more like *numerous* rows' being drawn, maybe even numerous frames. And, that entire fill occurs in one continuous burst after that first h-retrace. Thus, again, any vertical-alignment in the errors would amount to nothing more than luck that my character/attribute-writing routines, bus-transactions, and more just happen to be divisible by 320 pixel-clocks (plus h-refresh).
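Putting rough numbers on that: the sketch below uses only the figures from this log (2000 writes, at least 4 bus-clocks each, a 4.77MHz bus-clock) plus one outside assumption, a scanline period of roughly 63.7µs (an NTSC-style line rate of about 15.7kHz). The function names are invented, and the result is a lower bound, since the real write routine surely takes more than 4 clocks per byte.

```c
#include <assert.h>

/* Figures taken from the log. */
#define FILL_WRITES        (40 * 25 * 2)   /* character + attribute bytes        */
#define CLOCKS_PER_WRITE   4               /* minimum bus clocks per transaction */
#define BUS_CLOCK_MHZ      4.77

/* Shortest possible time for one full-screen fill, in microseconds. */
double fill_time_us(void)
{
    return (double)FILL_WRITES * CLOCKS_PER_WRITE / BUS_CLOCK_MHZ;
}

/* How many scanlines go by during one fill, for a given line period.
 * The line period is an assumption supplied by the caller. */
double scanlines_per_fill(double line_period_us)
{
    assert(line_period_us > 0.0);
    return fill_time_us() / line_period_us;
}
```

Calling `scanlines_per_fill(63.7)` gives a bit over 26 lines, so even in the best case a single fill spans a couple dozen scanlines, consistent with the "at least an order-of-magnitude" estimate above.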

There should be no guaranteed vertical-alignment of the errors. And yet, adding this horizontal-retrace checking *at the beginning* of the fill-process, caused exactly that. As I *mistakenly* expected it to.

I'm harping on this because, before I added that one mistakenly-placed singular h-retrace wait at the very beginning, the data was *definitely not* vertically-aligned. The purpose of adding testing of the h-retrace was to test my hypothesis that the errors were caused, as described earlier (in part at least), by the possibility of the CGA card's not allowing writes while it's reading. I knew it wouldn't solve *all* the errors, but the point was to test whether those errors aligned... With the understanding that how I *intended* to implement it was such that each *row* of data that I'd write would be synchronized with the h-retrace. But I Did Not Do That. I only synchronized *the beginning*.

In reality, that code-change should've had zero effect on vertical-error-alignment, as once that fill-process begins, it should take exactly the same number of clock-cycles to process as it did before (since, as-currently-implemented, there should be no wait-states inserted by the READY signal, no DMA, etc.). So, basically, it should just amount to nothing more than luck (good or bad?) that waiting for that first h-retrace happened to cause the fill-process to align the errors vertically, illegitimately "confirming" my hypothesis. In fact, it's something even weirder than luck, because, again, if it was a matter of exactly a certain number of writes corresponding to exactly some number of pixel-clocks, and repeating, then vertical-alignment of the errors should've happened before, as well, because, again, the code that actually *writes* the data was unchanged. It was just delayed slightly, at the beginning.

And, furthermore, it really *shouldn't* have caused a repeatable effect, in the first-place, as I mistakenly chose to look for the "in h-retrace" signal, rather than looking for the *edge* of it. AND even looking for the EDGE of the signal shouldn't cause a guarantee of alignment, at all, as that edge would come-through aligned on the *pixel* clock, but only sampled at a somewhat random rate aligned to the 4.77MHz *bus*-clock divided by some number larger than 4 (bus-clocks per transaction). "luck2".
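The difference between waiting for the "in h-retrace" *level* and waiting for its *edge* is easy to show on a recorded sequence of status polls. This is a host-side sketch with invented names, not the AVR code: `samples[i]` stands for bit 0 of the status register at the i-th poll (1 = in retrace), and the sample data in the usage note is made up.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NOT_FOUND ((size_t)-1)

/* Level wait: return as soon as the bit reads 1, however old the retrace is. */
size_t wait_level(const uint8_t *samples, size_t n, size_t start)
{
    for (size_t i = start; i < n; i++)
        if (samples[i] & 1)
            return i;
    return NOT_FOUND;
}

/* Edge wait: first let any retrace in progress finish, then catch the next
 * one as it begins. */
size_t wait_edge(const uint8_t *samples, size_t n, size_t start)
{
    size_t i = start;
    while (i < n && (samples[i] & 1))      /* wait while active   */
        i++;
    while (i < n && !(samples[i] & 1))     /* wait while inactive */
        i++;
    return i < n ? i : NOT_FOUND;
}

/* Polls of retrace time still left once a wait has returned at index `at`. */
size_t retrace_left(const uint8_t *samples, size_t n, size_t at)
{
    assert(at < n);
    size_t left = 0;
    while (at + left < n && (samples[at + left] & 1))
        left++;
    return left;
}
```

On the made-up sequence `1,1,1,0,0,0,0,1,1,1,0`, starting at index 2 (the tail end of a retrace), the level wait returns immediately with only 1 poll of retrace left, while the edge wait returns at index 7 with the full 3 polls still ahead. Even so, as noted above, the edge is only seen at whatever moment the bus happens to sample it.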

And, thereafter, if I make even the slightest change to the fill-process (even adding a single nop between each write), the entire alignment of write-errors should become completely skewed, and certainly not vertically-aligned.

"Luck3" happens to be, apparently, that darn-near every change I've implemented since (and I tried *many*, including in the fill process) still allows vertically-aligned errors. Again, good luck or bad luck? I'd say bad, in the end, as it led me down a rabbit-hole which had nothing to do with the reality of the situation. Presumptions of understanding based on a complete misunderstanding that'd been carried through numerous iterations of nothing but dumb-luck. (Why can't I win the friggin' lottery, instead?).

Another problem with the earlier theory: This vertically-aligned-error occurs regardless of whether I wait for the horizontal retrace *or* the vertical retrace (and again, not looking for the *edge*). ("luck 1a", maybe?).

----------------

Now I haven't even begun to scratch the surface of why the thing got "even weirder" thereafter... nor even mentioned what it was. But I haven't the energy at this point, and I briefly touched on it in a comment in the last log.

----------------

So, looking back, it would seem if I reimplemented the system with retrace-checking before *every* write, then it might well work as-expected. And, furthermore, the "even weirder" aspect may well disappear.

(That's another question, though... The explanation of the in-retrace/OK-to-write signals is *vague*... Just because it's OK-to-write at the instant that's sampled doesn't mean that it'll still be OK-to-write numerous bus-cycles later! What if it's sampled *right* before changing to false? What if the DMA DRAM-refresh routine kicked in immediately after sampling OK-to-write, stalling the actual write-procedure indefinitely? The only thing I see that *could* prevent this is the fact that the BIOS routines appear to look for the h-retrace *edge* AND once that edge is detected, the interrupts are disabled. Does the DMA controller send an interrupt before beginning its transfers? Doesn't seem right, it's already got a hold-request output. Does the *timer* cause an interrupt that the processor then uses to trigger the already-set-up DMA controller?)

And, seeing as how all this "dumb luck" occurred, it's entirely plausible the explanations above are *completely* wrong. Maybe the write-errors have nothing to do with the pixel-reads and everything to do with not having the 8088-bus timing accurate-enough, or any number of other factors. Many of which were discussed in that past log (two prior).

The fact the characters apparently are visually-distorted based on the foreground/background colors suggests there may be an electrical-error (short? open-circuit?) on the card's signals, as well... So depending on which signals are shorted, that too could have some effect on written data (except, would most-likely not happen so apparently-randomly!) And more.

-

If you thought it was weird before...

02/08/2017 at 18:18 • 5 comments

...It's gotten significantly weirder.

-

CGA clock and AVR->8088 Bus Interface

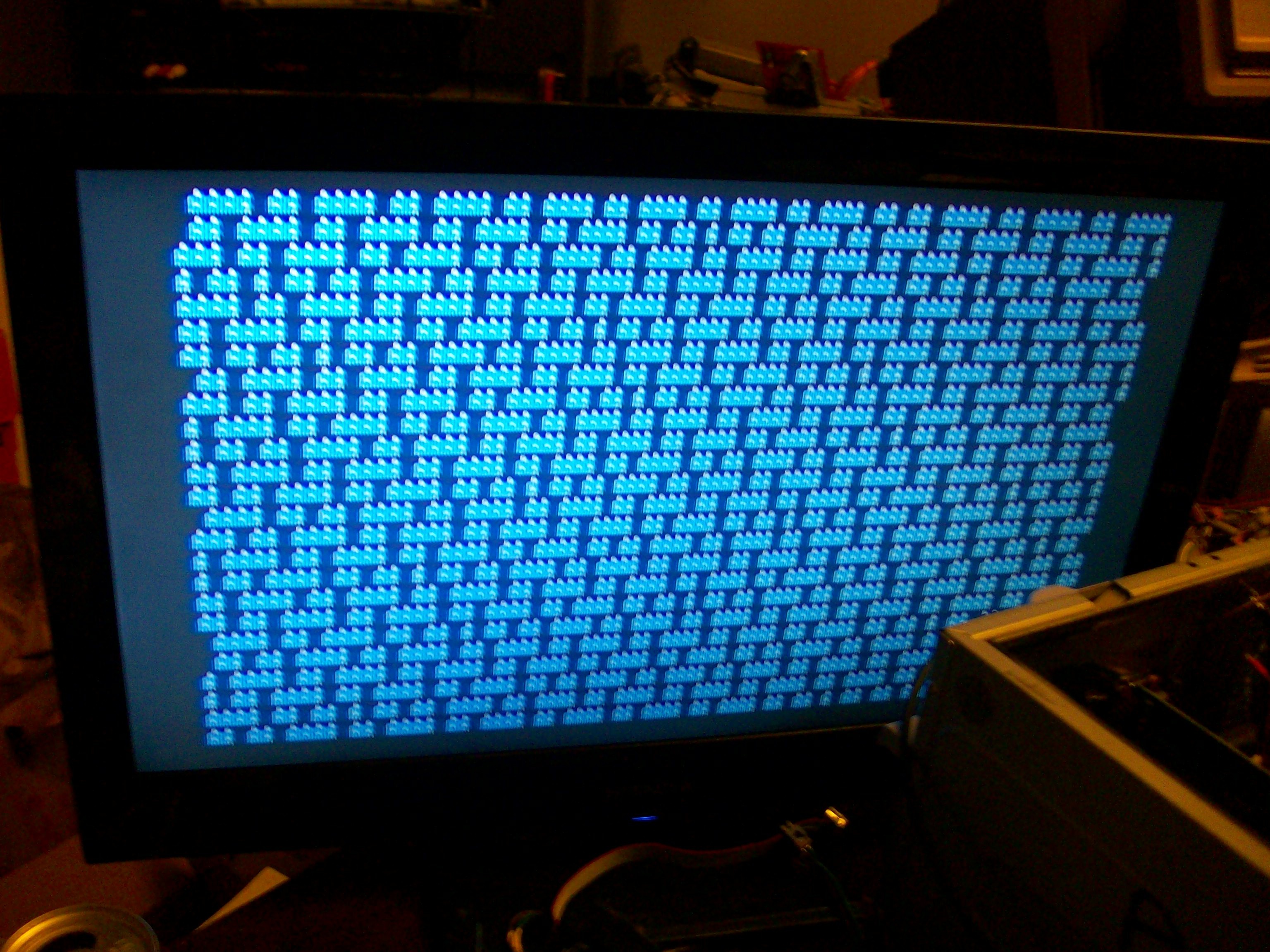

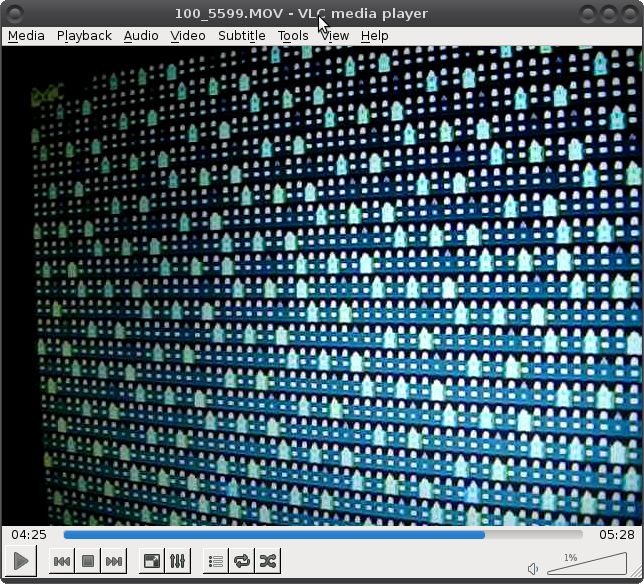

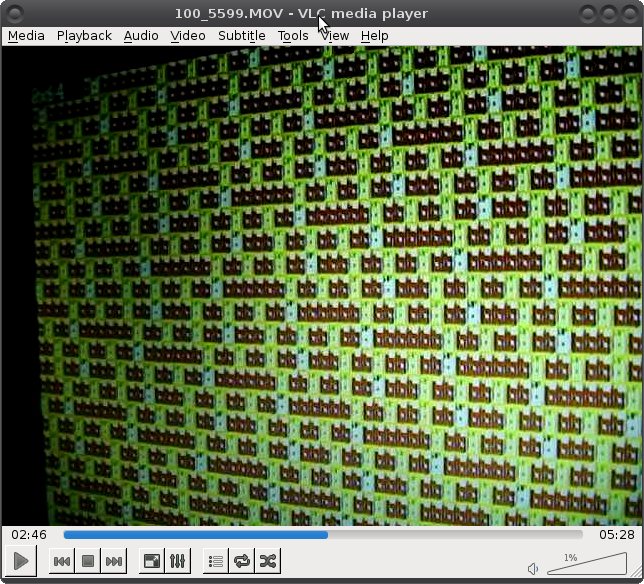

02/07/2017 at 07:09 • 0 comments

The most-recently-logged experiments with CGA resulted in some interesting patterns. This is the most-boring image, but most-explanatory:

In the image, I attempted to write the letter 'A' to every location. Instead what I get appears a bit like "beating" in the physics/music-sense. It's almost as if the timing of the CGA card's clock and the timing of the CPU (an AVR, in this case) clock are slightly out-of-sync. So, most of the time, the two are aligned well-enough for the 'A' to be stored properly in the CGA's memory, but sometimes the timing is misaligned so badly that the 'A' comes through as some other value.
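To make the "beating" idea concrete, here's a toy model in C. Everything in it is an assumption for illustration: the periods are arbitrary time units (not measured from the card or the AVR), and the "card is busy during the last part of each of its cycles" rule is just a guess at a mechanism. It only shows how two slightly different periods produce bursts of bad writes.

```c
#include <stddef.h>
#include <stdint.h>

/* Toy model: write number n is issued at time n * write_period.
   The card is assumed unable to accept a write during the last
   busy_window time units of each card_period
   (requires busy_window <= card_period).
   If map is non-NULL it must hold n_writes + 1 chars; it gets 'A' for a
   good write, '#' for a bad one, and a terminating '\0'.
   Returns the number of bad writes. */
size_t count_bad_writes(uint32_t write_period, uint32_t card_period,
                        uint32_t busy_window, size_t n_writes, char *map)
{
    size_t bad = 0;

    for (size_t n = 0; n < n_writes; n++) {
        uint64_t t = (uint64_t)n * write_period;
        uint32_t phase = (uint32_t)(t % card_period);
        int is_bad = phase >= card_period - busy_window;

        if (map) map[n] = is_bad ? '#' : 'A';
        bad += (size_t)is_bad;
    }
    if (map) map[n_writes] = '\0';
    return bad;
}
```

With identical periods the phase never moves, so either every write lands or none do. With periods of 101 and 100 the phase creeps by one unit per write, and the bad writes show up in bursts that repeat every 100 writes. If that repeat length doesn't divide evenly into a screen row, the bursts drift from row to row; if it does, they stack up in columns.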

Frankly, I kinda dig the patterns it creates... it's a lot more visually-interesting than ones I can think to program...

(And check out previous logs for other interesting examples)

Those, again, are the result of nothing more than writing the letter 'A' to every location, and choosing different background/foreground colors. The above is with a foreground color of red and a background color of white. Where it differs, it appears that the background/foreground "attribute" byte wasn't written properly.

(Also, interestingly, the font appears to be messed-up with different color-choices. That's an effect I think due to the CGA card's age/wear, as it's visible as well in DOS, via the actual 8088 chip, and described in previous logs, as well.)

But, I suppose in the interest of science and progress, the trouble--of data not being written properly--needs to be shot.

-----------

A brief review, covered in a bit more detail in past logs:

The CGA card has a space for an onboard clock-generator crystal and space for jumpers to select it, but that circuitry was not populated, and the jumper was hard-wired to use the ISA-slot's clock-signal. In early issues setting up the system and trying to debug the weird-color-font problem, I wound-up adding an onboard clock-generator, and those jumpers, and have swapped those jumpers numerous times since.

----------

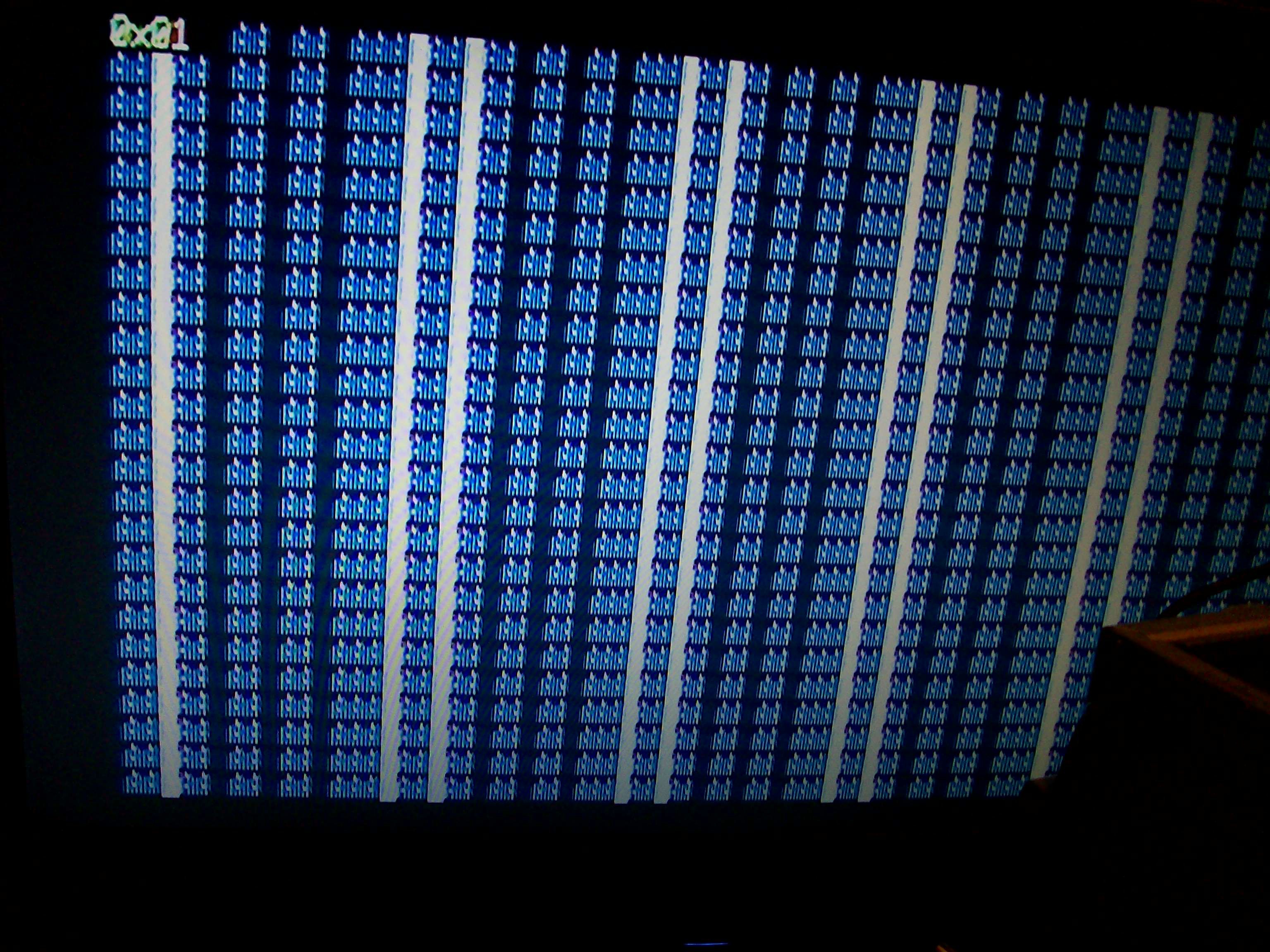

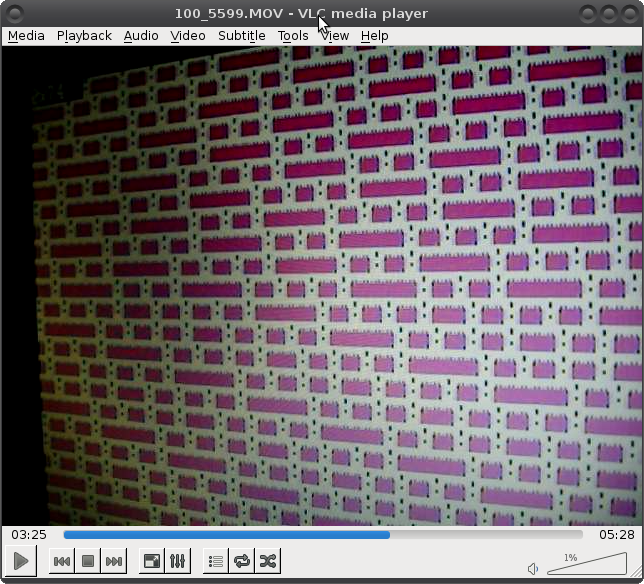

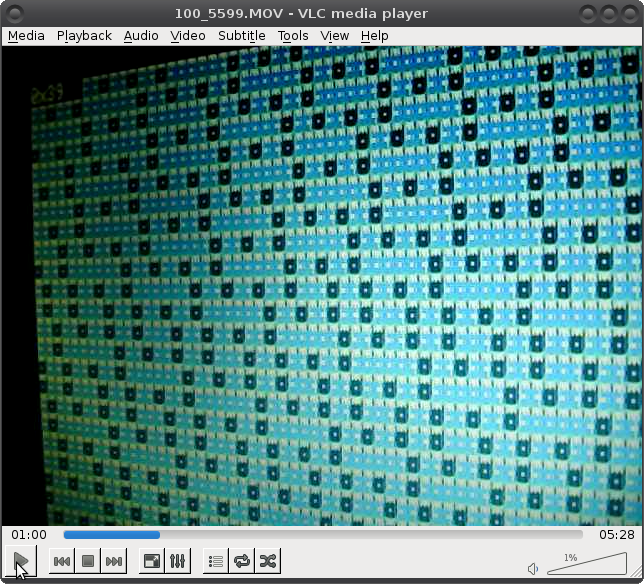

So, here's a result when the jumpers are set to use the ISA slot's clock:

This differs from the one at the top in several ways...

First, the white lines... My explanation for that is that those are "characters" that carried-over from a previous 'mode'. What I'm doing (now) is writing all the 'A's AND the attributes. The entire screen, currently, was supposedly filled with attribute=0x01 (=='mode 0x01'), which should be the letter 'A' in blue (if I recall correctly). But, in a previous mode it wrote 'A's with attribute=0x7f, which is white-on-white. So, again, it would appear these lines are the result of characters that weren't rewritten in this 'mode' (nor, apparently in mode 0x00).

OK. And that, too, might explain the black lines, as being carried-over from mode 0x00, which would be black-on-black.
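Since I keep saying "if I recall correctly" about what these attribute values mean, here's a small decoder sketch. The bit layout is the standard CGA text-mode one (low nibble = foreground, bits 4-6 = background, bit 7 = blink when blinking is enabled); the struct and function names are made up for this example.

```c
#include <stdint.h>

/* Decoded form of a CGA text-mode attribute byte. */
typedef struct {
    uint8_t foreground; /* 0..15, bit 3 is the intensity bit */
    uint8_t background; /* 0..7 */
    uint8_t blink;      /* 1 if bit 7 is set */
} cga_attr_t;

/* The sixteen standard CGA text colors, indexed by color number. */
static const char *const cga_color_names[16] = {
    "black", "blue", "green", "cyan",
    "red", "magenta", "brown", "light gray",
    "dark gray", "light blue", "light green", "light cyan",
    "light red", "light magenta", "yellow", "white"
};

cga_attr_t cga_decode_attribute(uint8_t attr)
{
    cga_attr_t a;
    a.foreground = attr & 0x0Fu;        /* bits 0-3 */
    a.background = (attr >> 4) & 0x07u; /* bits 4-6 */
    a.blink      = (attr >> 7) & 0x01u; /* bit 7 */
    return a;
}
```

So 0x01 decodes to blue on black, 0x00 to black on black, and 0x7f to (bright) white on light gray, which is near-enough "white-on-white" on my screen.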

Although, previously, I thought all bytes were being written, just incorrectly (e.g. the white/red 'brick' example, above). Maybe it's not that they're being written incorrectly, but that they're just not being written at all. Plausible, but questionable... So, the black characters in the 'brick' example are those that started either with attribute 0, or with a white-space character... the character and/or the attribute was not written to those slots. Then there's the 'A' which comes through on a black-background, where only the attribute wasn't written. OK. But then... this *is* the memory-initialization scheme (in that image), so those bytes likely held just random data, rather than having been set to attribute 7... I dunno. Let's pretend the power-up default is an initialized memory suited for text-display, white-on-black. OK-then.

The problem with the theory that it's only *not* writing bytes (as opposed to writing invalid ones)...? Maybe nothing. For some reason I thought it was mis-writing data, earlier... e.g. ASCII character '0xff' appears as a space... this might result from reading a floating-bus, if the timings are wrong. OTOH, I can't think of any examples from before where those "errors" couldn't be explained by *not*-written data (as opposed to *mis*-written data).

Oh yes I can.

When I write the mode-number in the upper-left corner. The first incarnation of that code simply wrote the mode number and attribute ten times, to assure *exactly* that *not* written data would be overwritten. And yet, there were numerous times I could actually see it change from the proper character/attribute to another. Thus, I explicitly rewrote that routine to write-then-verify-then-repeat until verified correctly.
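The write-then-verify-then-repeat idea, roughly sketched in C. This is not the actual routine: the read/write callbacks and the flaky `sim_*` memory are hypothetical stand-ins so it can be tried off-target.

```c
#include <stdint.h>

/* Hypothetical bus hooks (stand-ins for the real bus routines). */
typedef void (*mem_write_t)(uint32_t address, uint8_t data);
typedef uint8_t (*mem_read_t)(uint32_t address);

/* Write one byte, read it back, and repeat until it matches.
   Returns the number of attempts it took, or 0 if max_attempts
   writes all failed to verify. */
uint32_t write_verified(mem_write_t wr, mem_read_t rd,
                        uint32_t address, uint8_t data,
                        uint16_t max_attempts)
{
    for (uint32_t attempt = 1; attempt <= max_attempts; attempt++) {
        wr(address, data);
        if (rd(address) == data) return attempt;
    }
    return 0;
}

/* Off-target stand-in: a one-byte "memory" that silently drops the
   first sim_drop writes, like the not-written case described above. */
static uint8_t sim_cell;
static unsigned sim_drop;

static void sim_wr(uint32_t address, uint8_t data)
{
    (void)address;
    if (sim_drop > 0) { sim_drop--; return; }
    sim_cell = data;
}

static uint8_t sim_rd(uint32_t address)
{
    (void)address;
    return sim_cell;
}
```

One caveat: this trusts the read-back. If the read timing is off too (floating bus and all that), a "verified" result doesn't prove much, and a failed one doesn't say whether the write or the read was at fault.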

So, no, there should be cases where the attribute, at least, is *mis*-written. But I don't see that here (could that be a result of having switched to the ISA-clock?)

-------------

Oy, OK.

Observation Number 2. ALIGNMENT. Note how in this image all the errors are aligned vertically. In the earlier "brick" example, the errors were somewhat randomly staggered. This, initially, indicated to me that, indeed, the system-bus clock and the onboard CGA-clock were slightly off, and thus "beating".

But yet, why would we get perfectly vertical columns...? Or, let me step back further and start with: If they're no longer beating, and in fact perfectly-synchronized, then why isn't the screen, now, filled with 'A' all with the same foreground/background color...?

I suppose there are *numerous* other possible causes for "beating"... there's definitely the possibility that my AVR's bus isn't timed *exactly* right, and if it was *just* on the threshold of some setup/hold times, it might come through sometimes, but not others.

(I should interject: I've not noticed this effect when running DOS on the original 8088, and if it's as common as it appears here, DOS would probably be darn-near illegible).

There's another possibility I've just thought of... The BIOS assembly-listing explicitly waits until a horizontal-retrace (or vertical) before changing a character (in the cases I checked).

ACCORDING to the CGA-card reference from IBM, this shouldn't be *necessary* as the memory is multiplexed in favor of the 8088-bus (over the pixel-drawing), but the effect is allegedly that writing to the memory when a pixel is trying to read from it could cause visual artifacts *during that refresh*. No problem. But, these aren't visual artifacts that disappear after the next refresh! These are persistent artifacts.

OK. BUT the BIOS explicitly handles that... so, say... This CGA card is a clone... (I can't find any data on any of its markings). It was *probably* designed for PCs, which they probably knew to have retrace-testing. In which case, it's plausible, that the card isn't nearly as sophisticatedly-implemented as the original IBM one... in which case, if the pixel-drawer was trying to access the memory at the same time as a bus-access, it might just completely *block out* the bus-access. Plausible.

I think I had some other ideas, too... But let's stick with that one. THEN, that'd explain why I haven't seen this in DOS.

But then... what would explain why the "error" is perfectly aligned in columns...? I'm just looping through each memory-location writing the character and attribute, not paying *any* attention to the video-card's "safe to write" signal... Would seem utterly amazing if that loop just happened to align darn-near perfectly with horizontal-syncs.

------------

I'll leave it at that.

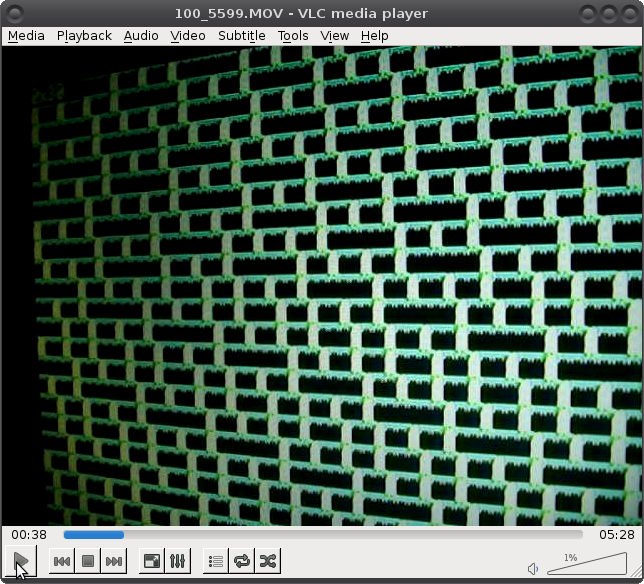

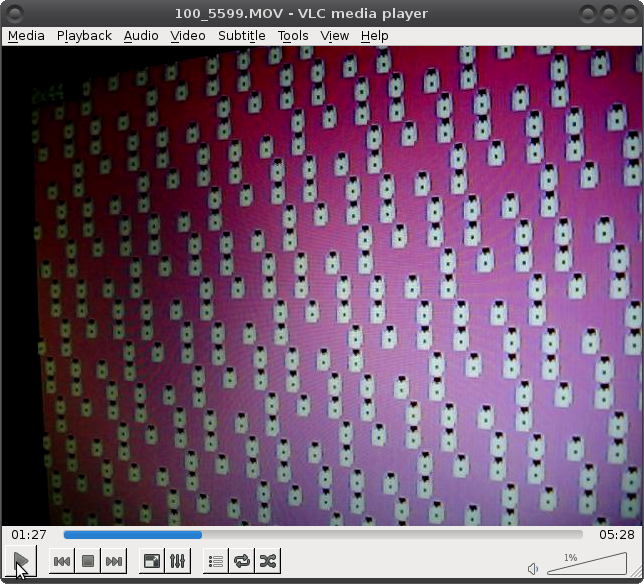

Here are a couple fun ones from the original completely unexplained groovy randomness (which may have as much to do with the as-yet unexplained timing/write-errors as with a bad trace or twelve on my CGA card). These are nothing but the letter 'A', background/foreground colors, and unknown-effects:

Graveyard:

![]()

Graveyard at night:

![]()

Brick wall:

![]()

Brick wall in a night-club:

![]()

Hello Kitty:

![]()

Scenes from Zelda:

![]()

Sea bubbles:

![]()

Lode Runner:

![]()

-

Check out this ridiculous finding...

02/06/2017 at 15:20 • 0 comments

If you've been following the recent saga of assembling the AVR->8088 adapter, you mighta caught that I did most of my calculations based on a 74S04 to delay (and invert) the clock input to the AVR so the timing would align properly...

Then, when assembling, I not only discovered that I have a *very* small supply of 74x04s, but no 74S04s. (I find both scenarios utterly surprising... Yah'd think I'd be pretty friggin' familiar with supplies I've had and used for 20+ years... But I guess that's the way things go these days).

So tonight, looking in "crap boxes" (or, in reverse and cropped: "AR-boxes", for those of a sensitive disposition, or those wondering why I've got so many boxes labelled "AR") for an old project, came across this:

74S04, the lone chip in the center of a huge slab of antistatic foam sitting right at the top of all that other un-foamed "crap."

I didn't see it before looking at the photo, but there seems to be a friggin' arrow pointing at it, too. Weee!

Well, I settled on the lone 74F04 I found in the sorted-7400s box, long before this discovery... and it seems to be doing the job, so I won't be changing it unless deemed necessary.

-

Scenes from an AVR-interfaced CGA card - In the Key Of A

02/04/2017 at 20:26 • 2 comments

![]()

The above are the results of the following code:

```c
void cga_clear(uint8_t attributes)
{
   uint32_t i;
   for(i=0; i < CGA_VRAM_SIZE; i+=2)
   {
      //Apparently it's Character, Attribute
      //(two bytes)
      bus88_write(S20_WRITE_MEM, cga_vramAddress + i+1, attributes);
      //Thought adding a buncha nops
      // might've allowed the
      // bus to stabilize,
      // but they seem to have no effect
      asm("nop;");
      bus88_write(S20_WRITE_MEM, cga_vramAddress + i, 'A'); //0);
      //Here too...
      asm("nop;");
   }
}
```

combined with my bus88_write() function, which attempts to interface an AVR physically socketted in place of an 8088 on a PC/XT clone motherboard.

And called with:

```c
while(1)
{
   mode++;
   mode &= 0x7f; //don't use blinking
   cga_clear(mode);
}
```

from main()

(Here's bus88_write(), but it kinda relies on the physical interface, as well)

```c
void bus88_write(uint8_t s20, uint32_t address, uint8_t data)
{
   ADDR1916_PORT = (uint8_t)(address>>16);
   ADDR158_PORT = (uint8_t)(address>>8);
   ADDRDATA70_PORT = (uint8_t)(address);
   S20_PORT = s20;
   ADDRDATA70_PORT = data;
   while(!(READY_PIN & READY_MASK)) {};
   S20_PORT = S20_BUS_IDLE;
}
```

The AVR is inserted in the 8088's socket, with an inverter (or a few, for delay-purposes) between the 8088-clock and the AVR's clock-input.

The CGA card is... in a questionable state. And its connection via composite to an LCD-TV probably accentuates that a bit.
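The character-then-attribute layout that cga_clear() steps through can be written down as a couple of small helpers. This is only a sketch: the helper names are made up, and the number of columns is passed in rather than assumed, since it depends on which text mode the card is in.

```c
#include <stdint.h>

/* Byte offset (from the start of text VRAM) of the CHARACTER byte for a
   given cell. The ATTRIBUTE byte sits right after it, at offset + 1. */
uint32_t cga_cell_offset(uint8_t row, uint8_t col, uint8_t columns)
{
    return 2u * ((uint32_t)row * columns + col);
}

/* The reverse direction: which screen column does a VRAM offset land in?
   Handy for checking whether a run of bad writes lines up vertically. */
uint8_t cga_offset_to_col(uint32_t offset, uint8_t columns)
{
    return (uint8_t)((offset / 2u) % columns);
}

/* ...and which row. */
uint8_t cga_offset_to_row(uint32_t offset, uint8_t columns)
{
    return (uint8_t)((offset / 2u) / columns);
}
```

For example, with 80 columns the cell at row 1, column 0 starts at offset 160, so any two offsets that differ by a multiple of 160 sit in the same column.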

-------------------------

If everything worked within-specs, what I *should* get is the letter 'A' filling the screen, with changing foreground and background colors.

What I get is much more interesting!

I've got some good names for some of these... e.g. "Zelda, in the Key of A", or "Goodnight Princess, in the Key of A", or "Zebra, in the Key of A", or... ok, the key of A is wearing out. We've got "Tetris Level 9," "Donkey Kong", and more!

Improbable AVR -> 8088 substitution for PC/XT

Probability this can work: 98%, working well: 50% A LOT of work, and utterly ridiculous.

Eric Hertz
