-

First Attempt at raw 8086/88 assembling...

01/12/2017 at 10:19 • 7 comments

Here we go! First attempt at assembling raw 8088/86 code...

The point, here, is to create raw binary machine-code that can be directly loaded into the BIOS/ROM chip... NOT to create an executable that would run under an operating system like linux, or DOS.

(Though, as I understand, technically this output is identical to a .COM file, in DOS, or could also plausibly be written to a floppy disk via 'dd').

This needs: 'bin86' and maybe 'bcc' both of which are available packages in debian jessie, so probably most linux distros...

Note that you can't just use the normal 'as' (GNU as, a.k.a. 'gas') assembler that goes along with gcc (if you're using an x86)... because, by default, it will assemble 32-bit (or 64-bit) code, rather than 16-bit code. (See note at the bottom)

---------

So, here's a minimal buildable example:

    # https://www.win.tue.nl/~aeb/linux/lk/lk-3.html
    # "Without the export line, ld86 will complain ld86: no start symbol."
    export _main
    _main:
    nop            ; -> 0x90 (XCHG AX?)
    xchg ax, ax    ; Yep, this compiles identically with as86 (not with gnu as)

Note that, technically, the 8088/86 doesn't have a "NOP" instruction... It's implemented by the (single-byte) opcode associated with 'xchg ax, ax'.

I got a little carried away with my makefile. Hope it's not too complicated to grasp:

    #This requires bcc and its dependencies, including bin86, as86, etc.
    target = test
    asmTarget = $(target).a.out
    ldTarget = $(target).bin

    default:
    	as86 -o $(asmTarget) $(target).s
    	ld86 -o $(ldTarget) -d $(asmTarget)

    clean:
    	rm -r $(asmTarget) $(ldTarget)

    hexdump:
    	hexdump -C $(ldTarget)

    #objdump86 only shows section-info, can't do disassembly :/
    objdump:
    	objdump86 $(asmTarget)

Note that you can't *only* use as86 (without using ld86), because, like normal ol' 'as', it'll compile an executable with header-information, symbols, etc... meant to run under an operating-system.

ld86 links that... or, really, in our case... unlinks all that header/symbol information, basically extracting a raw binary.

So, now, if you look at the output of hexdump (or objdump86) you'll see the file starts with 0x90 0x90, as expected.

I'm not yet sure why, but the file is actually four bytes... 0x90 0x90 0x00 0x00.

Guess we'll come back to that.

---------

So, my plan is to pop the original 8088 PC/XT-clone's ROM/BIOS chip, and insert a new one that contains nothing but a "jump" to one of the (many) other (empty) ROM sockets, where I'll write my own code in another chip.

Actually, what I think I'll do is copy the original ROM/BIOS and piggy-back another chip right atop the copy (keeping the original in a safe location). Then I'll put a SPDT switch between the /CS input from the socket and the two ROMs' /CS pins (and a couple pull-up resistors). That way I can easily choose whether I want to boot with the normal BIOS or whether I want to boot with my experimental code.

I guess I'll have to make sure that my secondary/experimental ROM chip does NOT start with 0xAA 0x55, as that's an indicator to a normal BIOS that the chip contains a ROM expansion (for those times when I want to boot normally). Maybe the easiest/most-reliable way would just be to start it with 0x90 (nop), then have my custom code run thereafter.

---------

So, so far this doesn't take into account the jumping. Note that x86s boot from address-location 0xffff0, where they expect a "jump" to [most-likely] the beginning of the ROM/BIOS chip's memory-space, where the actual code will begin.

So, next I'll have to learn how to tell 'as86' that I want [some of] my code to be located at 0xffff0... and I suppose that means I need to make sure my EPROM is the right size to do-so, based on whatever address-space the original ROM occupied... and, obviously, my EPROM won't be 1MB, so... what location would I actually have to direct it to...? Maybe 64KB - 0x10 (offset 0xfff0 in a 64KB chip, since 0xffff0 sits 16 bytes below the top of the address-space).
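A quick sanity-check of that offset, as a Python sketch (not part of the build). It assumes the EPROM is mapped so that its last byte lands at 0xfffff, which hasn't been verified for this board; `reset_offset_in_rom` is just a name made up for illustration.

```python
ADDRESS_SPACE = 0x100000   # the 8088 addresses 1MB (20 address bits)
RESET_VECTOR = 0xFFFF0     # where the 8088 fetches its first instruction

def reset_offset_in_rom(rom_size):
    """Offset of the reset vector inside a ROM that ends at the top of memory.

    Assumption: the ROM occupies the last `rom_size` bytes of the 1MB space.
    """
    rom_base = ADDRESS_SPACE - rom_size
    return RESET_VECTOR - rom_base

for size_kb in (8, 32, 64):
    offset = reset_offset_in_rom(size_kb * 1024)
    print(f"{size_kb:2d}KB ROM: reset vector at offset 0x{offset:04X}")
```

Whatever the chip size, the vector ends up 16 bytes before the end of the chip, so that's the offset to hand to the EPROM programmer.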

Or, probably easier, would be to compile the jump, just as I did above, and tell my EPROM programmer to load the resulting binary-file at that location. Not sure all EPROM programmers have that option, but mine does.

---------

SIDENOTE:

Attempt with normal 'as', had the following:

    nop
    mov ax, ax

This wouldn't compile... something about too many memory-operands (does it think 'ax' is a variable name?) So tried:

    nop
    mov %ax, %ax

Or something similar (was it 'ax' or 'AX'?)

Anyways, the final result was 0x90 0x66 0x90

0x66 isn't a valid (documented) opcode on the 8088/86... it's the operand-size prefix that came along with the 386.

Thought, maybe, since I was writing in assembly, I could go ahead with the normal 'as', and just limit my assembly-instructions to those I know are on the 8088/86, but I guess not.

-

A better idea...

01/11/2017 at 05:49 • 0 comments

#A 4$, 4ICs, Z80 homemade computer on breadboard

Yeahp... AVRs are chock-full of peripherals that older computers used to implement via separate dedicated chips... So... In the 8086/8088-realm, they could be kinda like "super-IO" controller-chips, or south-bridges...?

Hmmm... Maybe I'm going about this all wrong. Or not, since it's kinda been curbed.

-

Fast AVR intercommunication: Bus Interface Unit = coprocessor...?

01/06/2017 at 10:39 • 7 comments

1-26-17: This rambling was written a while back... apparently I never posted it (was a draft).

-----

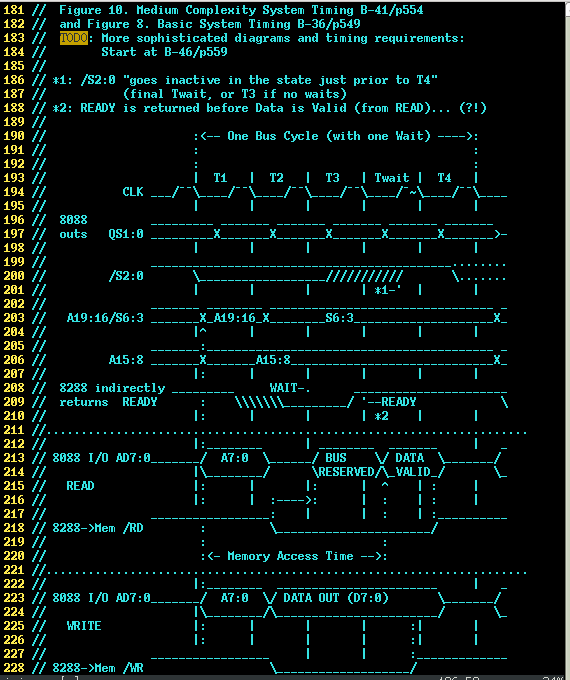

There're two "units" in the 8088/8086 that operate simultaneously.

The Execution Unit (EU) handles execution of instructions, etc.

The Bus Interface Unit (BIU) handles interfacing with the data/address bus, etc.

Wherein we come to a bit of a difficulty...

AVRs don't have DMA, so every change to the data/address/control bus requires processing on the part of the AVR. And, the bus-interface requires a lot of changes... basically a single 8088 read/write bus-transaction takes (at least) 4 bus-clocks. Now we're talking about running the AVR at 4-5x the clock-rate, so we're talking 16-20 AVR clock-cycles to handle a single bus-transaction (assuming there are no wait-states).

That doesn't sound like much, except consider that that time *can't* be used for other things (like actually *processing* an instruction).


And, consider that many instructions will take on the order of 20 AVR-instructions to execute, which means that the processor will spend nearly as much time *reading/writing* to the bus as it spends executing instructions...
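To put a rough number on "nearly as much time", here's a back-of-the-envelope sketch in Python. It assumes one bus-transaction per emulated instruction and about one AVR clock per AVR instruction; both are simplifications, and the names are made up for illustration.

```python
BUS_CLOCKS_PER_TRANSACTION = 4   # minimum, no wait-states (from the text)
EXEC_AVR_CLOCKS = 20             # "on the order of 20" AVR instructions per 8088 instruction

def bus_fraction(avr_clocks_per_bus_clock):
    """Fraction of AVR time spent on the bus, one transaction per instruction."""
    bus = BUS_CLOCKS_PER_TRANSACTION * avr_clocks_per_bus_clock
    return bus / (bus + EXEC_AVR_CLOCKS)

for multiple in (4, 5):
    print(f"{multiple}x clock: {bus_fraction(multiple):.0%} of the time on the bus")
```

Instructions that touch memory more than once would push that fraction higher still.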

Also, consider that the bus can't be accessed willy-nilly, transactions must align with the bus-clock, which could slow the thing down while waiting for a rising-clock-edge, up to an entire bus-clock for *every* transaction!

Also, consider that once the majority of the bus-changes have occurred (loading the address bytes), the remainder of the bus-transaction is pretty sparse. But, with only 4-5 AVR instructions per bus-clock, there's really no time for processing other things (e.g. using an interrupt, since entering/leaving an interrupt-handler, alone, would eat several AVR clocks: at least 4 to get in and another 4 for the return).

The bus is a bit of a bottleneck.

Also, the BIU is responsible for caching instruction bytes, whenever it can... which, in the case of an actual 8088 means that it can do things *while* the EU is not accessing the bus... a coprocessor, of sorts...

---------

I've an idea of adding a second AVR that will interface with the bus... not unlike the 8088's dedicated BIU.

Somehow these two AVRs have to communicate... and *fast*.

I haven't done *all the math* but it looks like something like 6 *bytes* have to be transferred from the EU to the BIU for each bus transaction (writes).

So, a simple thing might be to have a dedicated procedure, e.g. the first byte always indicates the type of transaction, the second is always the lowest address-byte, the third is the second address-byte, and so-on.
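A fixed byte-order like that could be sketched as below (Python, just to show the layout). The text only names the type byte and the address bytes, so the trailing data byte, the `WRITE_MEMORY` code and the function name are all assumptions; the "something like 6" byte count isn't broken down in the text, so this sketch stops at the five bytes it can account for.

```python
WRITE_MEMORY = 0x01   # hypothetical transaction-type code

def build_frame(kind, address, data=0):
    """Lay out one EU-to-BIU transfer: type, address low to high, then data."""
    if not 0 <= address < (1 << 20):
        raise ValueError("the 8088 only has 20 address bits")
    return bytes([
        kind & 0xFF,              # first byte: type of transaction
        address & 0xFF,           # second byte: lowest address-byte (A7:0)
        (address >> 8) & 0xFF,    # third byte: second address-byte (A15:8)
        (address >> 16) & 0x0F,   # fourth byte: A19:16
        data & 0xFF,              # assumed: the byte to write
    ])

frame = build_frame(WRITE_MEMORY, 0xFFFF0, 0x90)
print(frame.hex(" "))
```

Because the position in the sequence says what each byte means, no per-byte tagging is needed, but the receiver has to know where a sequence starts.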

But, somehow the two systems need to be synchronized. We won't *always* be transferring bytes to the BIU, only when a transaction is requested.

So, it might be nice to be able to send a "start-of-transaction" indicator... One idea would be to use a certain bit in the "type of transaction" field. But what when the very last byte transferred *also* has that bit set (e.g. an address-high byte)? Now I need to send a 7th byte just to indicate that the AVR-AVR bus is idle. (And, how's the BIU going to constantly monitor that input byte while it's also handling bus-transactions?)

Similarly, one could dedicate a GPIO (a 9th bit) to the process, to indicate when a transaction is starting. Again, this'd take one AVR clock-cycle to set, and another to clear.

These are all doable, maybe, but I've another idea to throw in the ol' tool-box.

What about setting up a timer to generate a (one-time) pulse at the start of a transaction? Then, technically, the AVR can output *9* bits *simultaneously* during the first transaction.

-

Output Timings are strict! Look at input-timings instead!

01/04/2017 at 06:24 • 0 comments

Guess my brain wasn't in it, when I drew up the timing-diagrams shown in the last log.


OF COURSE the timing-specifications from the 8088 manual are for the 8088's *outputs*... They're guarantees of what the 8088 chip, itself, will do... so that, when interfacing with other chips you can make sure those other chips' input-timing requirements are met.

---------------

There's a *huge* difference between what's required to be within-specs, and what's guaranteed by the 8088.

---------------

SO... Again, there's basically no way my 8-bit AVR can possibly change 20 address-bits (three ports) in less than the 110ns guaranteed by the 8088, when a single AVR instruction (at 20MHz) is already 50ns, and that'd only handle *one* byte, of the three.

So far I've only dug up specs for the 8288 (which converts /S2:0 into /RD, /WR, etc.) and the 8087 FPU (which I don't intend on using in this early stage, BUT, should probably be a decent resource for expectations of the other 8088 outputs, like A19:0).

And...

Yep, those specs are *way* more lenient.

FURTHER, they're *MUCH* more indicative of what's going on...

I couldn't figure out from the 8088's timing-diagrams *when* these signals were supposed to be *sampled*... Falling-edge? Rising-edge? (are they level-sensitive? E.G. A15:8 being fed directly to a memory-device?)

But e.g. the 8288 datasheet shows, for the /S2:0 signals, very clear "setup" and "hold" times, very clearly surrounding a specific clock-edge. Similarly of the 8087's datasheet showing which *edge* the Address-bits need to be setup for (and held after).

Those setup/hold times are shown as minimums, with no maximums... and worst-case we have a minimum setup-time of 35ns.

One 8088 bus clock-cycle is 1/4.77MHz=210ns, leaving a whopping 175ns of extra potential setup-time in many cases!

So, then, rather than having to switch all the Address inputs (three bytes) within the 8088's spec'd 110ns, we actually have 175ns to work with. That's doable.
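The arithmetic behind that, as a small Python sketch. It only uses numbers quoted in this log (4.77MHz bus-clock, 20MHz AVR, 110ns output-spec, 35ns worst-case setup-time) and assumes one port-write per AVR clock with nothing else in between.

```python
BUS_CLOCK_HZ = 4.77e6
AVR_CLOCK_HZ = 20e6
OUTPUT_SPEC_NS = 110   # what the 8088 itself guarantees for its address outputs
SETUP_MIN_NS = 35      # worst-case minimum setup-time from the slave datasheets

bus_period_ns = 1e9 / BUS_CLOCK_HZ        # one bus-clock, about 210ns
avr_cycle_ns = 1e9 / AVR_CLOCK_HZ         # one AVR instruction, 50ns
port_writes_ns = 3 * avr_cycle_ns         # three address-bytes, three ports
slack_ns = bus_period_ns - SETUP_MIN_NS   # time available before setup must be met

print(f"bus-clock period:      {bus_period_ns:.0f}ns")
print(f"three port-writes:     {port_writes_ns:.0f}ns")
print(f"fits 8088 output-spec: {port_writes_ns <= OUTPUT_SPEC_NS}")
print(f"fits setup budget:     {port_writes_ns <= slack_ns} ({slack_ns:.0f}ns available)")
```

So three back-to-back writes miss the 8088's own output-spec, but land inside what the receiving chips actually ask for, with only a little margin left for clock misalignment.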

And, further, some of those signals may not even be sampled at every clock, so might be changed in a prior clock-cycle than the one where it's needed.

E.G. If it can be determined that A15:8 are only paid-attention-to after the latching of A19:16 and A7:0, and only until, e.g., the end of Data-Out, then it might be possible to change the address-bits *before* the next cycle, e.g. in T4 alongside the change of /S2:0...

Similarly, it might be possible to stretch those timings a bit:

A prime example might be, again, A15:8. The higher and lower address-bits are time-multiplexed with status-signals and data, but A15:8 aren't multiplexed at all. Since the others are Muxed, and must therefore be *latched* through a separate latch-chip, that means the entirety of A19:0 won't be available to devices until those latches are latched *and* their propagation-delays... So, then, realistically... it's probably reasonable to assume that no devices attached to the address-bits actually look at the address until *after* that time... so then A15:8 could plausibly be changed even slightly after the other bits' latch-clock-edge. (Or, at the very least, should probably be the *last* bits written, when writing the address-bits).

Anyways, it's starting to seem less implausible. And maybe even possible without synchronizing the AVR clock to the 8088 clock too accurately.

-

Secret-Revealed, and Too Many I/O!

01/02/2017 at 03:52 • 18 comments

UPDATE: Re: Project "Reveal" and realistic goals in the following few paragraphs...

------

This should've been two separate project-logs... The "secret-reveal" was supposed to be a minor bit merely as lead-up to the current state-of-things (interfacing with the bus).

-------

In case you haven't figured out "the secret" of this project... the idea is to use an AVR to emulate an 8088 *in circuit*... To pop out the original 8088 in my (finally-functioning) PC/XT clone, and pop-in my AVR, and see what the blasted thing's capable of.

A key observation, here, is that AVRs run at roughly 1 instruction per clock-cycle, while 8088's can take *dozens* of clock-cycles for a single instruction. I read somewhere that the 4.77MHz 8088 in a PC/XT runs at something like 500*K*IPS. Whereas, an AVR running at 20MHz would run at something like 20MIPS! FORTY TIMES as many instructions-per-second!

(albeit, simpler instructions, only 8-bit, and I imagine much of the limitation of an 8088 is the fact it's got to fetch instructions from external memory at a rate of 1/4 Byte per clock-cycle... which of course an AVR wouldn't be immune to, either.)

Anyways, I think it's plausible an AVR could emulate an 8088 *chip* at comparable speed to the original CPU. (Yahknow, as in, taking minutes, rather than hours, to boot DOS). Maybe even play BlockOut! (3D Tetris).

But those are *Long Term* goals, and it's entirely likely I'll lose steam before even implementing the majority of the instruction-set. For now, I intend to pull out the BIOS ROM, and replace it with a custom "program" using an *extremely* reduced instruction-set...

First goal is to output "Hello World" via the RS-232 port... I think that wouldn't take much more than a "jump" and a bunch of "out"s... so should be doable, even by the likes of me.

...And, maybe, just maybe, I could fit that and the reduced "emulator" in less than 1K of total program-memory on the two systems (and not making use of other ROM sources such as LUTs for character-drawing on the CGA card)... A bit ridiculous, there's only a few days left for the https://hackaday.io/contest/18215-the-1kb-challenge, and I haven't even decided on which AVR to use....

(Regardless of the contest, it's an interesting challenge to try to keep this as compact as possible. You can probably see from the previous logs I've already been trying to figure out how to minimize the code-space requirements for *parsing* instructions... *executing* them is still a *long* ways off ;)

------------

I was planning to use my trusty Atmega8515, since I've a few in stock...

I think it has *exactly* as many I/O pins as I need...

But... it gets complicated because:

![]()

The 8088 clock runs at 4.77MHz, this is derived from a crystal running at 14.318MHz (divided by 3), but that signal's not available at the processor.

8-bit AVR clocks generally max-out around 16-20MHz (but can be overclocked a little, and sometimes even quite a bit).

The 8515 is rated for 16MHz. And, worse for this project, its internal (and *calibratable*) clock is limited to somewhere around 8MHz.

So, let's say I could bump that up to 4.77*2=9.54MHz via the OSCCAL (oscillator calibration) register... That means I'd only be able to execute *two* instructions between each of the 8088's clock-cycles.

And, seeing as how the AVR is only an 8-bit processor, there's no way it'd come close to the 8088's bus-timing, what with needing to write 20 bits in a single 8088 cycle (A19:16, A15:8, A7:0, at the beginning of T1).

In my initial estimates, I was planning on having 4 AVR clock-cycles for every 8088 clock-cycle. That's not far-fetched... 19.09MHz. (Again, most AVRs these days are rated for 20MHz, and the 8515-16MHz could probably handle 19MHz). Doable, but I'd need an external clock for the 8515, and somehow would need to calibrate it to closely-match the 8088's (which, too, can be adjusted via a variable-capacitor).

-------------

I also have a couple ATmega644's, MUCH more sophisticated, in general, than the 8515, (mostly due to increased memory, but also a few additional instructions) but these guys have a similar internal clock, and fewer I/O pins...

-------------

I have a bunch of ATtiny861's... These guys have an internal R/C (calibratable) oscillator that runs at ~8MHz, as well.

BUT, it can be bumped-up to a higher system-frequency with the internal PLL.

So, I've some vague theory that if I take the 8088's 4.77MHz clock and feed it into a Timer Input-Capture pin... I might just be able to create .... Can the PLL be configured to sync with an external clock?

(Update: NOPE)

The thought is... using the Timer's Input-Capture pin, I could write a little routine that runs at Power-Up, that adjusts OSCCAL to match the 8088's clock, except at a higher multiple... e.g. 4x (19.1MHz), or maybe even 5x (23.9MHz).

And then... I'd be able to execute 4 or 5 AVR instructions per every 8088 clock-cycle... which should be *just* enough to handle writing *all the* address-bytes in a single bus-clock. (NOT within the 8088 timing-specs of something like 100ns from the clock-edge to the 20-bit address-output, but we'll see where that goes. I should probably look into the 8288 and other "slave"-devices' expectations, since they're what really matter).

.......
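The clock-multiples mentioned above, worked out in a few lines of Python. The only inputs are the 14.318MHz crystal and the divide-by-3 from this log; `avr_clock_mhz` is a made-up helper name, and "instructions per bus-clock" assumes single-cycle AVR instructions.

```python
CRYSTAL_MHZ = 14.31818             # the PC/XT crystal (14.318MHz in the text)
BUS_CLOCK_MHZ = CRYSTAL_MHZ / 3    # the 8088's 4.77MHz clock

def avr_clock_mhz(multiple):
    """AVR clock needed to get `multiple` AVR cycles per 8088 bus-clock."""
    return BUS_CLOCK_MHZ * multiple

for multiple in (2, 4, 5):
    print(f"{multiple}x: {avr_clock_mhz(multiple):.2f}MHz "
          f"-> {multiple} single-cycle instructions per bus-clock")
```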

Thing is, the Tiny861 only has TWO 8-bit ports, and no other pins for I/O.

(Trying to do the work of a 40pin 16-bit CPU with a 20-pin 8-bit microcontroller!)

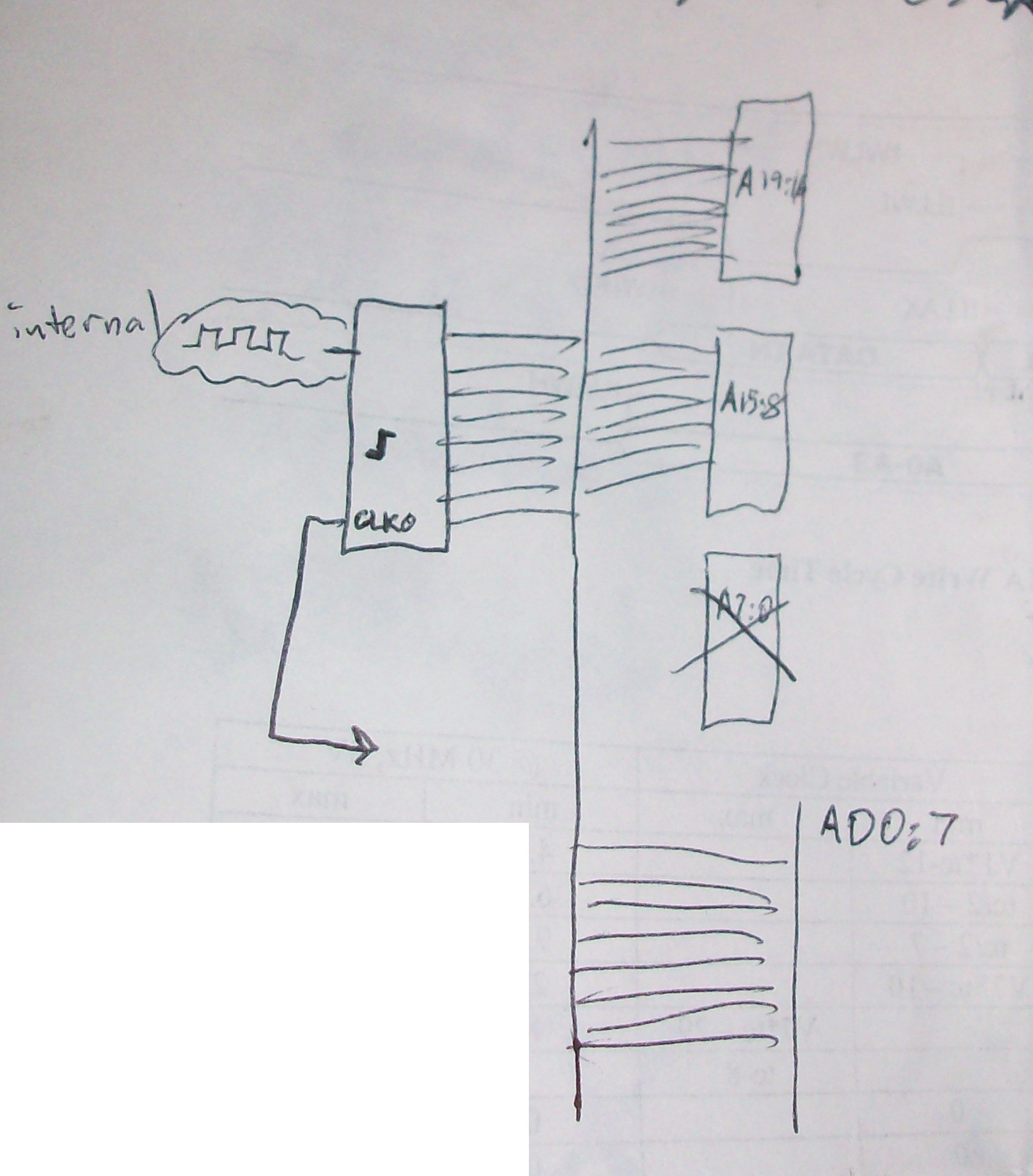

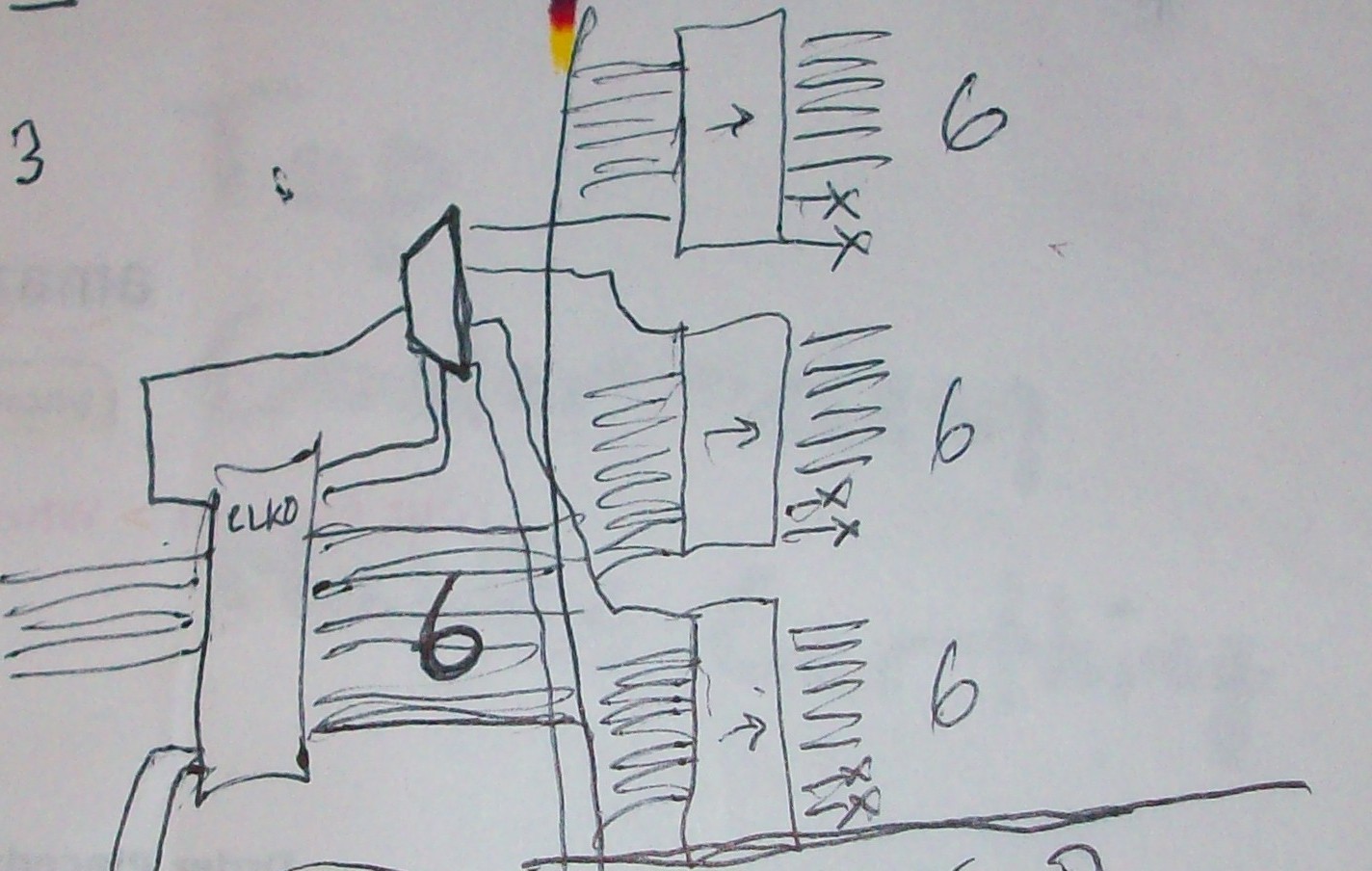

You can see from the above diagram, for bus-interfacing alone, I need 18 outputs (A19:16, A15:8, /S2:0, QS1:0 and /RD) *as well as* 8 I/Os (AD7:0) (which I'll come back to later).

-----------

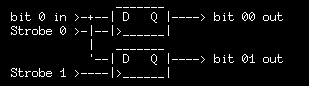

Say for those 18 outputs I use 3 8-bit latches such as the 74AC574's in my collection. Those latch on the rising-edge of their clock-input.

Alrighty... Something like:

Here's a single bit routed to two latches:

![]()

This would take 3 AVR clock-cycles to write each byte-wide latch. (Write-Data to Latch-Inputs, Strobe-high, Strobe-Low).

Short of pins, the strobe inputs could be reduced via demuxing:

![]()

Now I can handle four bytes with only 3 additional GPIOs.

This might take an additional AVR clock-cycle to write a byte-wide latch. (Select, Write-Data, Strobe-high, Strobe-Low).

But we got here because this system's already too slow! It was already too slow when I could write each byte-wide port on the original 8515 with a single instruction! (Three address-bytes, three ports, three AVR-instructions, needed to fit within one 8088 bus-clock).

So, these muxing-schemes would make the system *even slower*. No good.

---------

The Tiny861 has an optional clock-output that matches its internal clock...

And Tiny861 outputs are toggled on the rising-edge of the internal clock. What if I use that output-clock as the "strobe" for my latches?

Then I wouldn't need a GPIO to toggle twice for each latch operation, reducing the latch-write-instruction-count to 2 (select, write).

(This may introduce a glitch between select/write, but that can be dealt-with).

And I reduce the GPIO-usage by one pin.

But, we'd have the same problem... I'd first have to select which latch, then write the 8-bits of data... 6 instructions to write three bytes. (select, write, repeat, repeat), and still only 4, maybe 5 instructions can fit within an 8088 bus-clock.

-------------------

3x 8-bit latch chips would be 24 outputs.

Ignoring AD7:0, for now, I need 18 outputs to transition during a single bus-clock...

And 18/3 just happens to be 6...

So, if I only used 6 of the 8 latches in each chip, and only wrote 6 bits each time, I'd have two remaining pins on the AVR's 8-bit port.

Two pins which could be fed into the select-inputs of a 1-in-4-out demux, for instance! (Random luck, here!)

AND, Importantly, those two pins, on the same port, would be written at the same time as the 6 address-bits-to-be-latched, so only *one* instruction must be executed to both *select* a latch *and write* data to it!

![]()

Now, I haven't *fully* wrapped my head around this, yet... It might be necessary to insert some delays between the AVR's Clock-Output and the demux's input, such that the data arrives at the latches' inputs *just before* the clock-output reaches the selected latch's clock-input. But I think that can be handled with little more than a few gates inserted for the sake of adding a delay. OR, maybe... inserting a (fast) inverter inbetween, so the latches' inputs are loaded with data on the rising-edge of the AVR clock, but the latches are clocked on the following (inverted) falling-edge of the AVR-clock.

Should be doable.

AND, amazingly, will take *exactly* the same number of AVR instruction-cycles to write these three external latches as it would've to write 3 dedicated I/O ports (on a chip which has that many).

(Of course, a little preprocessing would have to be done, to merge the data and select info. But, the actual writing of the three latches will take exactly 3 instruction-cycles. And that's what's important, here... that the changes all occur within a single 8088 bus cycle).

=====================
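The "little preprocessing" could look something like this Python sketch (the real thing would be AVR code; this only shows the bit-shuffling). It assumes the two demux-select bits sit on port-bits 7:6 and the six latched bits on 5:0, and that the 18 outputs are simply numbered 0 to 17; the actual pin-assignment hasn't been decided anywhere above.

```python
def pack_latch_writes(outputs18):
    """Split 18 output-bits into three port-bytes: select in bits 7:6, data in 5:0."""
    if not 0 <= outputs18 < (1 << 18):
        raise ValueError("expected an 18-bit value")
    writes = []
    for latch in range(3):                        # demux outputs 0..2, one per latch
        data6 = (outputs18 >> (6 * latch)) & 0x3F
        writes.append((latch << 6) | data6)       # one byte = one port-write
    return writes

def unpack_latch_writes(writes):
    """What the three latches would hold after those port-writes."""
    latches = [0, 0, 0, 0]
    for byte in writes:
        latches[byte >> 6] = byte & 0x3F          # the demux routes the strobe by bits 7:6
    return latches[0] | (latches[1] << 6) | (latches[2] << 12)

writes = pack_latch_writes(0x2A5F3)
print([f"0x{b:02X}" for b in writes])
```

The packing can be done ahead of time, so the time-critical part is still just three back-to-back port-writes; select-value 3 stays free for the AD7:0 byte.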

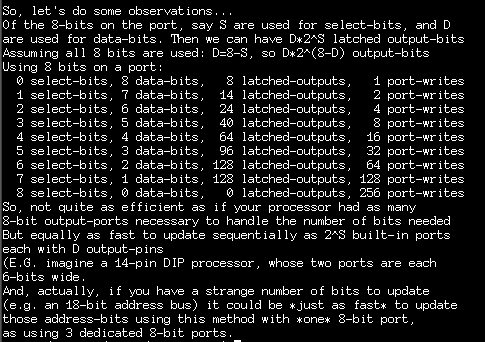

I wrote a program to calculate the possibilities for future-endeavors:

![]()

------------------

TODOs:

For the I/O byte (AD7:0), I'll use the fourth output from the demux. I've some ideas regarding that, including some that've been well-thought-out in #sdramThingZero - 133MS/s 32-bit Logic Analyzer, making use of resistors as data-paths (and virtual "open-circuits") without necessitating additional Output-Enable or Direction signals (which would have to be driven by GPIOs). I'll come back to that later.

So, assuming I run my AVR (Tiny861?) at 4x the 8088's bus-clock, we'll *just* fit in all the necessary transitions. With QS1:0 changing midway, that might add a 5th transition (5x clock is pushing it, but plausible).

The 8088 timing specifications show those transitions happening within a very short time-period... E.G. ALL the address-bits (20!) are supposed to be written within 100ns of the bus-clock's edge. Again, doubtful an 8-bit AVR could handle all that in 100ns. But I've some ideas for how to make it work if it really needs to be *that* precise. Good thing I ordered so many 74AC574's!

-

It Has Begun!

12/30/2016 at 12:09 • 0 comments

It has finally begun... *This* project, rather'n the slew of random-tangents that this project took me on.

I think it'll be a *tremendous* accomplishment if somehow I manage to fit this within 1K... As in, I think it's pretty much unlikely. But I am definitely designing with minimal-code-space in mind... (which probably serves little benefit to the end-goals, besides this contest, heh! Oh well, an interesting challenge nonetheless).

Amongst the first things... I needed a 4BYTE circular-buffer... and decided it would actually be more code-space efficient (significantly-so) to use a regular-ol' array, instead of a circular-buffer. Yeahp, that means each time I grab something from the front of the array, I have to shift the remaining elements. Still *much* smaller. And, aside from the "grab()" function, significantly faster, as well.
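The shift-on-grab idea, sketched in Python just to show the mechanics (the code-size argument only holds for the actual AVR build, and can't be demonstrated here). The class and method names other than "grab" are made up.

```python
class ShiftBuffer:
    """Plain array used as a queue: append at the end, shift everything on grab."""

    def __init__(self, capacity=4):
        self.slots = [0] * capacity
        self.count = 0

    def put(self, byte):
        if self.count == len(self.slots):
            raise OverflowError("buffer full")
        self.slots[self.count] = byte     # no head/tail indices, no wrap-around
        self.count += 1

    def grab(self):
        if self.count == 0:
            raise IndexError("buffer empty")
        front = self.slots[0]
        for i in range(1, self.count):    # shift the remaining elements forward
            self.slots[i - 1] = self.slots[i]
        self.count -= 1
        return front

buf = ShiftBuffer()
for b in (0x90, 0xEA, 0x00):
    buf.put(b)
print(hex(buf.grab()), hex(buf.grab()))
```

Everything except grab() is a plain indexed access, which is where the size and speed win would come from.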

Also amongst the first things... I need to sum a bunch of values located in pointers. Except, in some cases those pointers won't be assigned. But you can't add *NULL and assume it'll have the value 0, so the options are to test for p==NULL, or assign a different default.

---------

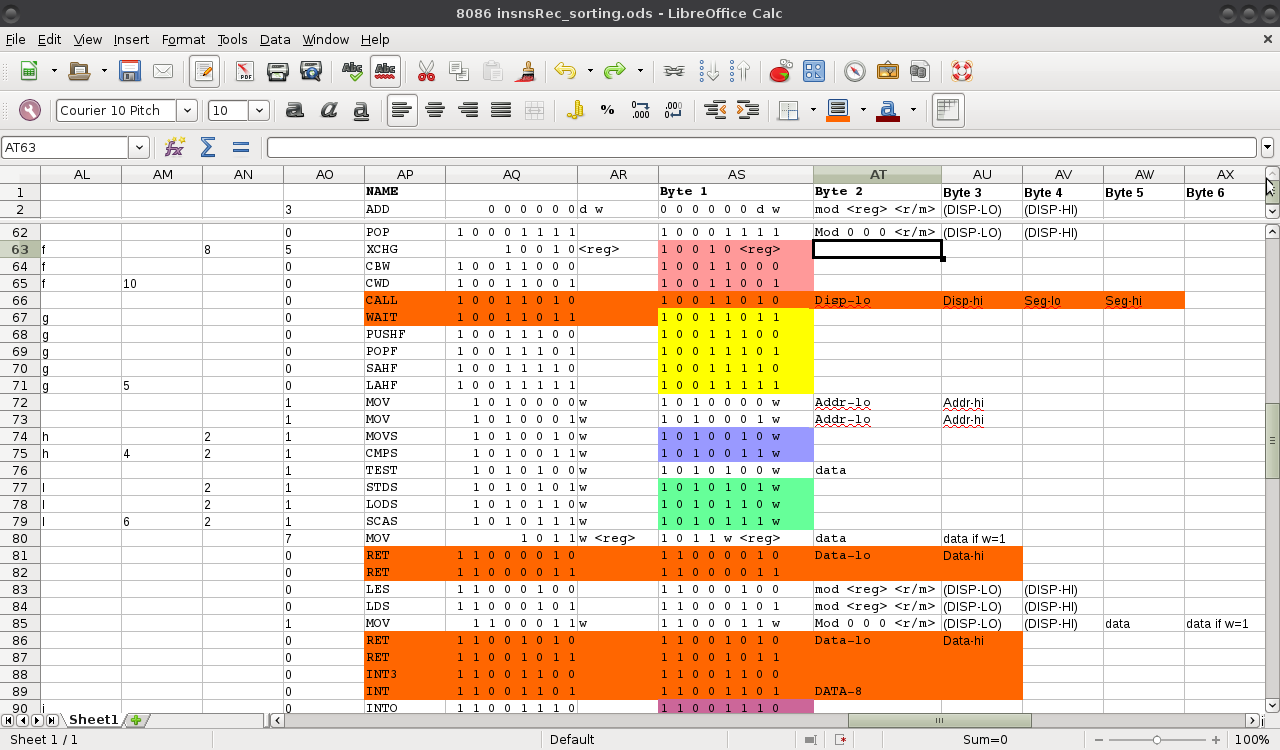

Now... I'm on trying to figure out how to take an input Byte and route it appropriately. The fast-solution is a lookup-table. But we're talking 256 elements in that table, alone! That's already 1/4th of the 1KB limit!

One observation, so far: One of the things I need to "look up" is a simple true/false value. It turns out, apparently, except for 3 cases (in red), the lowest-bit doesn't affect the true-ish-ness/false-ish-ness of the particular characteristic I'm looking for. Alright! So I only need a 128-element look-up-table (and a handful of if-then statements).

![]()

Now, better-yet... Currently I'm only looking up a True/False value. That's only a single bit. So, shouldn't I be able to divide that 128-lookup down to 128/8=16 BYTES? Now we're talking.

First idea: Simply use the first four-bits of the value to address the LUT. Then, the remaining three bits (8 values) will select one of the 8 bits in the LUT's returned byte.

Simple!
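Here's that indexing as a Python sketch. The real table contents come from the spreadsheet, which isn't reproduced here, so `placeholder_predicate` is a made-up stand-in that (like the real characteristic, mostly) ignores the lowest bit; the three exceptions would still need their if-then statements in front of the lookup.

```python
def placeholder_predicate(value):
    """Hypothetical true/false characteristic; deliberately ignores bit 0."""
    return ((value >> 1) * 37) % 5 < 2

def build_packed_lut(predicate):
    """Pack 128 true/false values into 16 bytes."""
    lut = bytearray(16)
    for value in range(0, 256, 2):                 # bit 0 doesn't matter, so step by 2
        if predicate(value):
            lut[value >> 4] |= 1 << ((value >> 1) & 0x07)
    return bytes(lut)

def lookup(lut, value):
    """Upper four bits pick the byte, the next three bits pick the bit within it."""
    return bool(lut[value >> 4] & (1 << ((value >> 1) & 0x07)))

LUT = build_packed_lut(placeholder_predicate)
print(len(LUT), "bytes:", LUT.hex())
```

On the AVR the cost is one table read plus a shift-and-mask, against a single read for the unpacked 256-entry table.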

---------

The thing is, *that one* true/false value is only *one* of the *many* characteristics I'll be needing to look-up. So... The question becomes... Does this method save any space...? That has yet to be determined.

On the one hand, this particular true/false value is necessary early-on. On the other hand, if I used a 256-element LUT, I could determine *many* characteristics, simultaneously. On the other other hand, a 256-Byte LUT isn't quite enough to determine *all* the characteristics I'll be needing to determine... as, in many (but not all) cases a *second* byte is necessary.

So, the first 16-byte LUT will tell us whether a second byte is required (the true/false value I'm looking for, currently).

So, let's just pretend there was no second-byte required to determine the necessary characteristics, and the original 256-element LUT would give us all the info we'd need. I'd still need to know whether additional-bytes are necessary (in this imagining: not to determine the characteristics, but to determine *arguments*). So, somewhere in that 256-element LUT's output, I'd *still* need an indicator of whether additional bytes are necessary. Which would mean one bit in each of 256 elements (32 bytes). Which, I've already managed to reduce to 128 bits (16 bytes), and a handful of if-then statements (~10? bytes).

Now that wouldn't sound like much... I've managed to save something like 6 bytes... And, in the end, it's quite plausible the 256-element LUT will still be necessary to determine the *other* characteristics.

So, here's a reality-check... I *do* need to know whether the input requires a second byte. So, I *do* need that bit of data for each element in the 256-element LUT. But, one of the other characteristics I need to "look up" happens to have something like 130 values. Which is *just barely* too many for 7 bits. Which means I'd have to throw another bit, at the least, to the 256-element LUT to keep track of whether a second byte is required. So, now, we're talking 256 2Byte elements (HALF of the 1K limit!), rather than 256 1Byte elements. Or, even if I was able to create a 256x9bit-element LUT, I'd still be wasting 128 bits on redundant-data (seeing as how I determined that the lowest bit *seldom* affects the true/false-nature of whether a second-byte is necessary from the input).
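The table sizes being juggled in the last few paragraphs, tallied in Python so they can be compared side by side. The ~10 bytes for the if-then statements is the rough guess from above, not a measurement, and `lut_bytes` is a made-up helper.

```python
def lut_bytes(entries, bits_per_entry):
    """Bytes needed for a bit-packed table, rounded up to whole bytes."""
    return (entries * bits_per_entry + 7) // 8

IF_THEN_GUESS = 10   # "~10? bytes" for the handful of if-then statements

sizes = {
    "256 x 1 bit (flag for every input)": lut_bytes(256, 1),
    "128 x 1 bit + if-thens":             lut_bytes(128, 1) + IF_THEN_GUESS,
    "256 x 1 byte":                       lut_bytes(256, 8),
    "256 x 2 bytes":                      lut_bytes(256, 16),
    "256 x 9 bits":                       lut_bytes(256, 9),
}
for name, size in sizes.items():
    print(f"{name:36s} {size:4d} bytes ({size / 1024:.0%} of 1K)")
```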

(And, again, even 9 bits wouldn't be enough to handle all the characteristics I need to look-up... fitting this in 1K will be *quite* the challenge; improbable, if not impossible).

So... It seems to me... This path may reduce the overall LUT-code-space-usage by more than just 16 bytes (or 6?), if I continue with this logic with the other characteristics.

E.G. look again at that spreadsheet, above. Each color-grouping (besides red) indicates groupings of sequential input-values which have *very similar* characteristics. They're sparse, and for the most-part irregular. But there *are* some regularities to them, which might correspond to an input-bit-value or at least some range of input-values.

One of these sequential-groupings (not shown in the image) is actually 32 Bytes long. That means, if I use a 256-element LUT, I might be able to throw an *entirely different* LUT in that same memory-space... For instance, the 16-byte LUT I've come up with earlier, and a bit more. By throwing a few if-then statements before the 256-element look-up, I can skip those values. Now I have *only minorly-degraded* the speed-efficiency of a LUT, and can use that same space for other purposes.

(Note to drunken-self: Is a few if-then statements and a couple merged LUTs any more efficient than a couple if-then statements combined with a tiny bit of math, and separate LUTs?)

-

Open Collector FAIL. The ATX power-switch saga continues.

12/24/2016 at 03:21 • 27 comments

Update 1-16-17: It's NOT Friggin' Diodes! AND I FOUND A USE!

---------------------

Update 1-15-17: FRIGGIN DIODES! (see the bottom)

Update II, vague recollection of BJTs used instead of diodes for reverse-polarity-protection. Notes and excessive ramblings at the end.

Update 1-2-17: Found some discussion about misuse of transistors, similar to what I experienced, here. Notes and ramblings and no conclusions added to the bottom.

--------------------

In the interest of making this blasted thing even more robust, yahknow, by being in-spec, rather'n just "it works" and moving on... And, since I was already modifying the circuit to lower the pull-down resistor (see last log), and add a Power LED...

I decided to add a transistor to the output of the Flip-Flop. Thus, the connection to the /PWR_ON signal would be open-collector, and capable of sinking more current, if necessary (e.g. on another Power Supply, should this one go flakey).

Simple!

     FlipFlop
     Output           C .-----> /PWR_ON
     Buffer            /         Input
       v             |/
     --|>---/\/\/\---|    NPN
                   B |\
                       v E
                       |
                       v
                      GND

The /PWR_ON signal powers up the system when grounded, and the system is OFF when that pin is floating/unconnected. So, obviously, it must have a pull-up resistor somewhere in the circuit (if necessary).

--------

I powered it up (works great! Finally!)

But the stupid LED wasn't lighting. Alright, musta reversed it... Swapped the polarity, and sure 'nough, it's lit. Excellent!

No, wait... WTF... The fan's not spinning. push the button and the LED goes off but the Fan starts up.

WTF?

....

The LED is connected to the Q (non-inverted) output of the FlipFlop, anode tied high through a resistor (since TTL has much greater drive-strength when driving low).

The /Q output drives the transistor when high.

(Note To Self: drive-strength??? hFE measured at 220... is that better than just driving low from the TTL output? Heh, barely... IOH <= 0.8mA, IOL <= 16mA. And, frankly, there's no way the /PWR_ON input could draw 16mA, right?)

So the LED should activate (cathode driven low) when the /Q output is high, which also drives the transistor, pulling the /PWR_ON signal low, powering the system.

So... what's wrong now...?

Ultimately... Turns out I grabbed a PNP by mistake.

But now I'm *really* confused. How the heck can this thing work at all?!

     FlipFlop
     Output           C .-----> PWR_ON
     Buffer            /         Input
       v             |/
     --|>---/\/\/\---|    !!!!PNP!!!!
        (A)        B |^
                       \ E
                       |
                       v
                      GND

VBE will never be forward-biased!

-----------------

Measurements (between point and GND):

SYSTEM OFF:

VA = 3.9V

VB = (not measured)

VC = 4.5V

(VE = 0.0V)

SYSTEM ON:

VA = 0.1V

VB = 0.2V

VC = 0.8V

(VE = 0.0V)

--------------

Alright, so, have we established, yet, that I'm certainly no BJT expert? This was mentioned in the last log, as well as a few logs on other projects (e.g. the Transistor-based RS-232 inverter, which wound up acting as an amplifier)...

But, here I think I can see something that *sort of* makes sense...

Note the voltage difference when ON: VC - VB (VBC) = 0.6V... A transistor-bias voltage, if I've ever seen one... Except, VBC, not VBE.

And, similarly, when OFF: VC - VA = 4.5-3.9 = 0.6V... (Imagining not much drop across that resistor, since there's little current flowing between the Emitter and Collector.)

But... WEIRD.

OK, I'm pretty sure I've read somewhere that swapping E for C will still function, but at a *really low* hFE... So I plug one in the ol' transistor-meter backwards, and sure-enough hFE = 2 (when forward, hFE=220).

---------

But, I can't let this go... this just doesn't seem right to me. If you can turn on a transistor with VBC, then how come all those open-collector circuits don't turn themselves on...?

9V ^ | light-bulb, -> ( ) solenoid, etc. | 5V Buffer C | Output / v |/ --|>---/\/\/\---| NPN B |\ v E | v GND

VBC is always greater than 0.6V, in this case... OK, so it's not the *voltage* that turns it on, but the *current*, right? But, we've already seen that current through VBC can turn on the transistor... right?

So then, I guess, in this circuit IBE may be lower than IBC... And with hFE, it...

Oh, NO... In this case, VBC is < -0.6V. Reverse-Biased. OK.

But then what if you've got:

3V ^ | light-bulb, -> ( ) solenoid, etc. | 5V Buffer C | Output / v |/ --|>---/\/\/\---| NPN B |\ v E | v GND

Ah, right, so... When the buffer outputs 5V, BOTH VBE and VBC are forward-biased, the switch turns on. Right. When the buffer outputs 0V, I guess we rely on the hFE to determine how "off" the transistor will be... because ... NO. VBC is reverse-biased, it'll inherently be off.

Man, once these things seemed so intuitive to me...

Lesson repeatedly-learned the hard way: Transistors Are NOT Switches. They're more like Teeter-Totters.

------------

And... This is just another in the *LONG* list of things that should've been easy that have turned into *huge* ordeals prepping for *this* project, which *still* hasn't even started.

----------

Found an interesting discussion kinda touching on my PNP-resistor experience...

And some really weird linking-system making it impossible to show the image here without my re-uploading it. Weee: (not my work):

![]()

Except, actually, this one's a lot more obvious to me... In fact, it's pretty much normal BJT-biasing. In fact, it's not at all similar to my circuit, now that I look further. (VCE is reverse-biased, VBE is forward-biased like a regular-ol' BJT circuit). Though it's interesting, nonetheless.

Again, what I had was:

: 5V : ^ : | : / : \ PRESUMED 7400-series *TTL* : / FlipFlop : | Output + C .->:>-+--|>--- Buffer 0.6V / : Gate(?) v - |/ :............... --|>---/\/\/\---| !!!!PNP!!!! (A) B |^ + : + \ E : 0.2V | 0.1V : REVERSED v - : - GND

Measurements (between point and GND):

SYSTEM OFF:

VA = 3.9V, VB = (not measured), VC = 4.5V, (VE = 0.0V)

SYSTEM ON:

VA = 0.1V, VB = 0.2V, VC = 0.8V, (VE = 0.0V)

So, when on, this is acting as a non-inverting *buffer* rather than the typical *inverter* I'd've experienced if this were the NPN I intended.

And, when on, I'm getting:

VBE = 0.2V (VB - VE; NOT enough to turn on the diode, nevermind being *reverse* biased for a PNP)

VBC = -0.6V (a diode-drop... also properly-biased for a PNP)
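Those two junction voltages fall straight out of the node measurements (base minus emitter, base minus collector). Here's a minimal sketch of that arithmetic, assuming a 0.6V silicon diode-drop and a small tolerance I picked for the comparison; the function names are just illustrative.

```python
# Node voltages measured against GND with the system ON, taken from the log above.
SYSTEM_ON = {"A": 0.1, "B": 0.2, "C": 0.8, "E": 0.0}

DIODE_DROP = 0.6  # assumed silicon junction drop, in volts


def junction_voltages(nodes):
    """Return (V_BE, V_BC): base minus emitter, and base minus collector."""
    return nodes["B"] - nodes["E"], nodes["B"] - nodes["C"]


def pnp_junction_forward(v_junction, drop=DIODE_DROP, tol=0.05):
    """For a PNP, a junction conducts when the base sits roughly one
    diode-drop BELOW the other terminal (tol is an arbitrary margin)."""
    return v_junction <= -(drop - tol)


v_be, v_bc = junction_voltages(SYSTEM_ON)
print(f"V_BE = {v_be:+.1f} V, forward-biased: {pnp_junction_forward(v_be)}")
print(f"V_BC = {v_bc:+.1f} V, forward-biased: {pnp_junction_forward(v_bc)}")
```

With these numbers only the base-collector junction counts as forward-biased, which matches the "running backwards" reading of the circuit.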

And... random-observation: *exactly* the output-voltage a regular ol' TTL considers VIL-Max. (Hmmmm). Some discussion I read, either at that link, or another it linked, suggests that TTL actually used this design(?).

So, plausibly, if I hadn't done this with a regular ol' TTL 7400-series chip, and instead used CMOS or something of this era, this wouldn'ta worked, since the drive-strength and voltage of the output, when high, would probably be enough to assure even VBC would be zero, rather'n forward-biased... unless, somehow, ... nah, plausibly even "weirder" than that... if the output driving the base is *only* tied-high (when high), then no current could flow, the transistor would never turn on. Huh...

![]()

![]()

See, Q3 and Q4 can both be on simultaneously, depending on the load. But in the MOSFET case...? Hmmmm...

So many oddities!

---------------------------------

It's finally come back to me: Somewhere 'round this site, a year+ish ago, somehow it came up to use a BJT instead of a diode to protect a circuit from reverse-polarity...

V+ ----. .-----> Circuit V+ \ / C \ v E ------- | B \ / \ | GND ------+--------> Circuit GND

(From Memory)

Yep. That's with the emitter connected to the LOAD, and V+ connected to the collector.

This guy turns on when VBC (not VBE) is forward-biased. As I recall, the purpose is that VEC is typically *smaller* than VCE. And an experiment here states the same. (Something like 1/4!)

Unlike my circuit, VBE will *also* be forward-biased in both of these circuits, but less-so than a full diode-drop.

So, I've still no idea what this thing I've run into could be useful for... I had *an* idea, then started realizing all the inherent oddities I keep running into with various circuit elements' not working the way I'm used to.

So, one idea is similar to the reverse-polarity protection, except with an NPN instead of PNP.

12V ------. | \ / \ | B ------- / \ / v 5V ----' '-----> Circuit 5V GND ---------------> Circuit GND

A bit ridiculous, because it requires your 12V to be correctly-polarized... But, maybe, it could be used with/as e.g. a power-switch for some ridiculous reason...

BEWARE: I found out the hard-way... This will *NOT* work if you use a linear voltage-regulator to output that 5V (maybe from the 12V rail?).

Why...? Because, at the fundamental-level, of the same friggin' principle that got me into this mess in the first place, I think. Linear voltage-regulators are designed to *source* current, not to *sink* it, so, when the load's too small, this circuit would pull the 5V "regulated" output up to around 12V. HAHAHA.

A more obvious alternative is to do the same reverse-polarity circuit as earlier, except with an NPN on ground. But, yahknow, switching ground isn't such a great idea.

And, again, don't forget that hFE is something like 2 (not 200) when turning on with VBC, so... if the circuit uses 1A, does that mean this thing would require 0.5A into the base? But, then, maybe that's irrelevant, because, again, once the circuit turns on, *both* VBC and VBE will be forward-biased... no, wait, forward-biased, but VBE's not going to reach the diode voltage-drop, so not *on*, right? No, wait... LOL this is ridiculous.

VBC will be, say, 0.6V, then VBE will be 0.595V! So, then... does that mean *both* sides are active, and hFE is something like the average of 200 (C->E) and 5 (E->C) ?

This is *SO WEIRD*. 'Spose I should just dig out the ol' text-book and refresh myself. But apparently I'm in a rambling mood.
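For the "1A load, how much base current?" question above, a first-order sketch is just I_B = I_C / hFE. This assumes the simple current-gain model holds (it ignores saturation, where the effective gain drops further), and uses the two hFE readings from the transistor-meter: 220 forward, 2 reversed.

```python
def base_current_needed(load_current_a, hfe):
    """First-order estimate: base current = collector (load) current / hFE."""
    return load_current_a / hfe


LOAD_A = 1.0        # the 1A example load from the text
HFE_FORWARD = 220   # measured with the transistor the right way 'round
HFE_REVERSE = 2     # measured with E and C swapped

forward = base_current_needed(LOAD_A, HFE_FORWARD)
reverse = base_current_needed(LOAD_A, HFE_REVERSE)

print(f"forward: {forward * 1000:.1f} mA into the base")
print(f"reverse: {reverse * 1000:.0f} mA into the base")
```

So, under this model, yes: the reversed transistor would want about half the load current shoved into its base.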

----------

Anyways, it's not so much that I want to find *a use* for this thing (though that's always nice), but that I'm almost certain there must be somewhat common cases where this situation is actually an oft-neglected side-effect... Just can't seem to think of one.

----------

UPDATE: DIODES:

Hah, I have no idea how I didn't see it before... the circuit's basically nothing more than diodes... (Oh, and I came up with a groovy AND gate from 'em... over at #Random Ridiculosities and Experiments: https://hackaday.io/project/8348-random-ridiculosities-and-experiments/log/51939-transistor-oddities).

![]()

So, it's *slightly* better than using diodes... about twice. Since I measured the reverse-hFE as 2.

-

Casing + TTL-fail.

12/23/2016 at 22:35 • 0 comments

It's time to case-up the 8088 system so I can reclaim my coffee/dinner-table. (I found this motherboard in a "scrap PCB's" box. Amazing it survived all those years of storage, nevermind the countless diggings-through-those-boxes!).

I've settled on an old ATX case, for now. So did some drilling because the screw-holes/posts aren't in the same positions... And found an old AT power-supply that fits in the spot. Had to do a little bit of nibbling, because the power-connectors interfered with the cutout, but that was easy-enough (man it's nice having a nibbler-tool again!).

Screwed everything down then realized the AT power-supply's cables were *way* too short.

Obvious solution: steal cables from another AT power-supply and use wire-nuts. And I even found an old AT power-supply that'd been de-cased with *really long* cables. So... simple, right?

Nah, for some reason I didn't go that route. I think it had something to do with a lack of interest in more nibbling, drilling, etc. to mount the Power Switch.

Well, besides that... this particular AT power-supply is clearly marked (in probably decade+ old marker-scrawl) "Runs Without A Load", which is something rare-enough that I like to save these guys for workbench power-supplies.

And... I have a box marked "computer power-supplies" where I thought I'd find a bunch of others, but instead discovered that it's full of ATX supplies. WTF. Just a few months ago, when my main computer's supply blew out, I couldn't find a friggin' ATX supply without stealing one from another half-assembled ATX computer, and yet here's a box full of 'em.

So, there's another box marked "Power Supplies" which is mostly oddities like 24V switchers and things pulled from old printers... but it also has a couple AT/XT supplies... but those things... well, they're *beasts* and would be darn-near incredible if I could fit 'em in an ATX case, let alone mount 'em.

OK, so... I didn't go the easy route of cutting the ATX-sized AT power-supply's cables and wire-nutting the *really long* cables I found on the long-scrapped AT power-supply (without a case). That'd've been easy.

--------------

Instead I decided to use an ATX supply. This isn't too difficult... Right?

Pinouts found. No problem. Scrapped an old connector from an old (fried) ATX mobo so I don't even have to modify the supply and can easily swap it out if, for some reason, e.g. I happened to have one in my collection which I'd forgotten to mark "bad"... (which surely most are, otherwise why would I have acquired so many?).

Power-On is pretty simple, tie that wire to ground when you want the system to be powered-on. Allow it to float (or be 5V) for power-off.

Simple-solution... Just throw a flip-flop in there... There's a 5V-standby voltage which is always-on, so that can drive my *really simple* TTL flip-flop circuit.

5Vsb ^ .--------------+-->/PWR_ON | | _________ | _|O '-| D Q | | |O | _ | | | | _| /Q |--' +----|>CLK | | |_________| \ / \ | v GND

Went to reach for my trusty ol' 74x74's but came across the 74x175's first... and have *a lot* of them. Sure-'nough they're D flip-flops with /Q outputs. Perfect.

Wired the whole thing up on an old scrap PCB...

Oh, wait... I decided to throw in some debouncing:

5Vsb ^ | \ / \ .--------------+-->/PWR_ON | | _________ | _|O '-| D Q | | |O | _ | | | | _| /Q |--' +--+-|>CLK | | | |_________| \ | / === \ | | | v V GND

Alright. 1K up-top, and 10K down-below... seems totally reasonable.

Grabbed the first 74x175 in the tube, happened to be 74LS175.

No Workie.

WTF... this is a *simple* circuit.

Troubleshooting for what seemed like hours, rechecking my scrap PCB had all the necessary traces cut, and rerouted. Whoops, forgot a wire... That should do it!

Nope.

Recheck again.

Nope, now I'm pretty certain it's wired right. Still not working.

Multimetering all the pins... This stupid LS175's pins were a bit corroded, didn't have the patience to thoroughly clean 'em, the solder-joints were a bit ugly... But, metering shows they're all making contact.

Finally power the thing up again and measure voltages...

The clock-input is sitting at something like 1.2V, well above Vil-max=0.8V. WTF?

Meh, I know TTL draws a bit of current through its inputs... maybe 10K was too much, but surely 5K would be *plenty*. Should cut that 1.2V down to 0.6V, which is lower than Vil-max=0.8V, right...? Just solder another 10Ker in parallel.

Nope. 1.1V, now. Something's seriously wrong with this circuit.

Rechecking traces... Again and again. And again, and again.

Finally decide that... since the solder-joints were so bad I'd used a *really hot* temperature, and *really long* durations to try to burn off that corrosion... Maybe I literally fried the chip.

Cut it off, dug out another from the tube, this 74175 (not LS/HC...) looks much cleaner. Soldered it up, much more carefully this time.

Now the clock-input is 1.7V. WTF?

WTF. I mean, I know these aren't CMOS, but this is getting ridiculous.

5K isn't enough?!

I check the datasheets... Iil-max ~= -0.5mA... I mean, we're *well* within specs, right? V=IR, 5 = 0.0005 * R, R=5/0.0005=10000 or something, right...? OK, so maybe 10K was cutting it close, but it should've worked, right?

Something's wrong somewhere on the board... but I can't spot it.

Another parallel resistor, this time 2.2K... So we're measuring something like 1.5K. And... It works!

Alright, finally!

But now the clock-input is measuring 1.4V... which is *way* above Vil-max = 0.8V.

And it's toggling between that and something like 2.8V.

Mind completely boggled. And, still, the math doesn't make sense, it should've been *well* within specs, at this point... right? I *know* I've used 4.7K pull-up resistors with LS and other non-CMOS TTLs before...

---------

I vaguely recall something about pull-downs being less-common than pull-ups for a reason, but no idea what...

Search-fu...

I come across this comment: "Connecting a 10k resistor from an input to 0V will not necessarily pull the input low because TTL/LS inputs source current" (from here)

WTF?!

Am not wrapping my head around this...

I looked at the specs... something like 0.4mA leakage-current when low, and significantly less when high... 0.4mA isn't much. And I did the math... 10K should be *plenty* within-range... instead it's *way off*. And halving that didn't halve the voltage, in fact barely made a dent. Even weirder...

Still search-fu-ing... I really just expected there to be some sort of simple page explaining the use of pull-downs with TTL, like a tutorial, or a section in a chapter of a digital-logic book (and how is it I don't recall this from all my digital-logic studies? Must've lost that knowledge to time and lack-of-use). But instead, all I'm finding is forum-posts. I guess that works. Here's another.

"use low-ohm pull-down resistor; For TTL-LS gates (Iil<400uA, Vil<0.4V) the pull-down resistor can be 1kΩ .."

Jeeze that's low... What'm I missing?

And further comments suggest values as low as 470ohms. WTF? That's like the value you'd use when driving an old-school (pre today's high-brightness) LED from a 5V source (or tying one to an active-low TTL output). Those old LEDs take *way* more current than a TTL-input, certainly more than 400uA!

Now, an aside, y'all probably already figured out my fail... but at this point I'm *still* not getting it. And I've done a lot of digital-circuitry over the years. Certainly no expert, but yahknow well-trained over a decade ago, built a friggin' TTL raster-scan LCD driver several years prior to that... But, again, at this part in the story last-night, I am just not getting how 470 ohms could possibly be necessary to pull down an input. And, frankly, at this point I'm pretty certain the world's turned upside-down and I should start believing in magic over science.

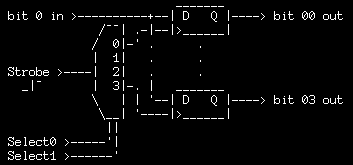

So, after cursing the field of science for a while, for misleading me for so many years, it strikes me... Back In The Day TTL datasheets often showed internal circuit-diagrams... Of the actual BJT-layout (or an equivalent) used to create those gates. (I don't think you see this very often on parts these days).

Whelp, I didn't find the *entire* schematic, this time, but I did find this:

![]()

(from here)

WTF... the inputs aren't connected to the transistor's Base, but to the Emitter?!

Now I'm no BJT-expert, at all... but as i see it, there's a definite current-path from whatever circuitry's on the right to the input. (Is this an Emitter Follower?) Whatever...

Say the circuitry on the right looks like a resistor tied to the VCC rail... then, ignoring the diodes for a second (the two together would be greater than Vil-max=0.8V, anyhow)... Then, when you tie the input straight to ground (not through a resistor!), there's only one path for the current to flow through that imaginary pull-up resistor on the right-side.... And that is straight out the input terminal.

If the imaginary pull-up resistor happens to be 10K, then using the 10-1 rule-of-thumb, you'd have to have a 1K resistor on that input tied to ground to divide the voltage down low enough to be reliably registered "low".
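That "imaginary pull-up" picture is nothing more than a voltage divider, so it can be sketched in a few lines. To be clear about the assumptions: the 10K internal resistor is the made-up value from the paragraph above (not a datasheet number), the diodes are ignored, and the function name is hypothetical. Real inputs won't match these numbers exactly.

```python
VCC = 5.0
V_IL_MAX = 0.8           # TTL input-low threshold mentioned in this log
R_INTERNAL = 10_000.0    # the *imaginary* internal pull-up, purely illustrative


def divider_voltage(vcc, r_pullup, r_pulldown):
    """Voltage at the input pin: internal pull-up on top, external pull-down below."""
    return vcc * r_pulldown / (r_pullup + r_pulldown)


for r_pulldown in (10_000.0, 5_000.0, 1_000.0):
    v_in = divider_voltage(VCC, R_INTERNAL, r_pulldown)
    verdict = "low" if v_in <= V_IL_MAX else "NOT reliably low"
    print(f"{r_pulldown:>7.0f} ohm pull-down -> {v_in:.2f} V ({verdict})")
```

Under this simplified model only the 1K pull-down (the 10-to-1 ratio) gets the pin under Vil-max; the 10K and 5K attempts leave it floating well above.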

And there yah have it. The danged input is *sourcing* current, just as the guy said.

----------

So where'd I go wrong in my hand-waving calculations...?

Somehow I considered V=IR where V=5V-0.8V.

V is, in fact, 0.8V. I=0.4mA... V=IR -> R=V/I = 0.8/0.0004 = 2000 ohms. My 5K was too great for my TTL-LS. The ~1.5K I ended up with (after throwing the 2.2K in parallel) woulda been within-specs for the TTL-LS, but by that point I'd switched to the regular ol' TTL... which has: Iil-max = -1.6mA. So, recalculating: R=V/I = 0.8/0.0016 = 500 friggin' ohms.
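The corrected calculation, as a small sketch: the largest usable pull-down is Vil-max divided by the magnitude of Iil-max. The two current figures are the ones quoted in this log (0.4mA for LS, 1.6mA for standard TTL); the dictionary keys and function name are just labels I made up.

```python
V_IL_MAX = 0.8  # volts: the input must stay at or below this to read "low"

# Magnitude of I_IL-max (current flowing OUT of the input), per family, in amps.
I_IL_MAX = {
    "74LS": 0.4e-3,
    "74 (standard TTL)": 1.6e-3,
}


def max_pulldown_ohms(v_il_max, i_il_max):
    """Largest pull-down that still holds the pin at v_il_max while the
    input sources i_il_max through it (R = V / I)."""
    return v_il_max / i_il_max


for family, current in I_IL_MAX.items():
    print(f"{family}: pull-down <= {max_pulldown_ohms(V_IL_MAX, current):.0f} ohms")
```

Note the voltage in the formula is the 0.8V across the resistor, not 5V and not 5V - 0.8V, which is exactly where the earlier estimate went off the rails.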

The fact it works at all, with 1.5K (and toggling between 1.2V and 2.4V) is just luck, I guess... Bad luck, maybe, along with Good luck. Good in that it got it working, so at least I knew my digital design was sound (D=/Q, button->CLK, debounce-circuit)... Bad luck in that, maybe I've been throwing resistors at circuits for years that might've been out of spec but just happened to work, and might've gotten a bit too reliant on trusting these ol' rules-of-thumb (yahknow, "10K should be fine for a pull-up/down").

I shoulda just seen it when they used a negative-sign in front of the Iil-max ratings... Negative indicating that current is leaving the input. Somehow that escaped me.

Really, this is all pretty basic ohms-law... I just failed. At the most-basic electronics fundamentals. Fail.

On the plus-side, It works... I'll throw yet another parallel resistor (four now) in there to make sure it's within-specs... And then I'll have a lovely circuit to remind me of this day. (Oh, I should probably throw in a header for the power-LED).
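For sizing that fourth resistor, here's a rough sketch using the values from this log: 10K, another 10K, then 2.2K, all in parallel, with a 500 ohm target for standard TTL. The 680 ohm part at the end is only a hypothetical standard value I picked to check against; it's not what's actually on the board.

```python
def parallel(*resistors):
    """Equivalent resistance of resistors wired in parallel."""
    return 1.0 / sum(1.0 / r for r in resistors)


def max_extra_resistor(existing_ohms, target_ohms):
    """Largest resistor that, placed in parallel with existing_ohms,
    brings the combination down to target_ohms."""
    return 1.0 / (1.0 / target_ohms - 1.0 / existing_ohms)


existing = parallel(10_000.0, 10_000.0, 2_200.0)   # the three already soldered in
TARGET = 500.0                                     # standard-TTL limit worked out above

r4_max = max_extra_resistor(existing, TARGET)
print(f"existing pull-down: {existing:.0f} ohms")
print(f"fourth resistor must be <= {r4_max:.0f} ohms")

candidate = 680.0  # hypothetical standard value
print(f"with {candidate:.0f} ohms added: {parallel(existing, candidate):.0f} ohms")
```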

------

Update 12-24-16: Found a chapter in a book that makes mention of it... but again, very brief...

"The value of the pull-down resistor is relatively low because the input current required by a standard TTL gate may be as high as 1.6 mA"

See Figure 5-11 here, where they use a 330ohm pull-down resistor.

Of course, all these brief explanations are detailed-enough to get it right, if you don't make the stupid mistake I did. (V != 5 - 0.8).

------

Also, see the next log where I experience a very similar situation in an entirely different part of the circuit, mistakenly using a BJT in a weird way.

-

It Boots!

12/21/2016 at 08:06 • 0 comments

I now have a booting 8088-machine!

(Can it be called a "machine" if its guts are strewn across a table?)

---------

The ridiculous adventures were countless (see a previous log where I go into a fraction of 'em... I've been meaning to throw up some more photographs from the experiences).

A brief answer to an earlier question: Yes, somehow, beyond my grasp, it is in fact possible to use a 1.44MB 3.5in floppy drive connected to an XT's floppy-controller using the system's regular-ol' BIOS (which was designed exclusively for 360K 5.25in drives). You allegedly can only use 720K 3.5in floppies in the drive (I didn't try others).

Oddly, despite my 1.2MB 5.25in floppy drive having somewhat extensive jumper-options and decent documentation, even mentioning the XT, specifically... no combination of jumpers could get the blasted thing to read a disk, when connected to the XT. I tried 1.2MB disks, 360K, and even 180K to no avail.

But all that's somewhat moot, because I also installed a SCSI card, with a 1GB hard-disk... onto-which I installed/copied almost every old DOS-based program I could find in my collection, as well as installing DOS, while temporarily inserted in a Pentium-120.

Took quite a bit of effort, but I managed to replace my SCSI card's v8.5 ROM with a v8.2 found in a forum... Apparently after v8.2 they changed something so that it no longer would boot XT systems.

And let's discuss that ordeal! Sheesh. So there was my EEPROM-programmer's lost-personality-module, mentioned in the last log... And a "miracle" of sorts.

Then a full day or so trying to figure out how to etch a board with an edge-connector with similar spacing as PCI slots, lacking a laser-printer....

Then the PLCC FLASH chip had to be broken-out, so I spent a day soldering that stupid thing up...

Then I tried to program it, and it failed. Which wasn't surprising, because... There's No "Erase" nor "Blank Check" function for this particular chip (?!). Maybe they didn't complete support for it, or something, by the time they stopped releasing updates?

Wasn't 'bouts to solder up another one of these things unless I was darn-near certain it'd work...

So then I had a brilliant idea... I'm lacking a UV-EPROM-eraser, right? But!

When you program an EPROM, it starts with 0xff's and the only bits that are "written" are those that are low... So I found an old/used EPROM which had enough consecutive blank-space to fit the SCSI card's ROM (which is pretty small)... And lucked out enough that all I'd have to do is tie a few address lines low and remap a couple others.

So I worked it out, read-in the old EPROM data (whatever it was, no idea) overwrote the necessary 0xff's in the buffer, then wrote back the modified version over the non-eraseable contents already on the chip...

It got about 60% complete (well into the "new" data) then failed verification and stopped programming.

"Read 0x54, expected 0x55"

SHIT! A bit was somehow written low that shouldn't've... there's no fixing this without an "ultraviolent eraser" (who was it that coined that?)...
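The overwrite-without-erasing trick boils down to one rule: programming can only pull bits from 1 to 0, so new data fits over old data only where the old byte already has a 1 everywhere the new byte needs one. A minimal sketch of that rule, plus a check on the failed byte; the helper names are hypothetical and this is not my programmer's actual software.

```python
ERASED = 0xFF  # an erased EPROM byte reads as all ones


def can_overprogram(old: bytes, new: bytes) -> bool:
    """True if `new` can be burned over `old` with no erase,
    i.e. every change is a 1 -> 0 transition."""
    return len(old) == len(new) and all((o & n) == n for o, n in zip(old, new))


def find_blank_run(image: bytes, length: int) -> int:
    """Offset of the first run of `length` erased bytes, or -1 if none."""
    return image.find(bytes([ERASED]) * length)


def stuck_low_bits(read_back: int, expected: int) -> int:
    """Mask of bits that read 0 but should have been 1."""
    return expected & ~read_back & 0xFF


old_image = bytes([0x12, 0xFF, 0xFF, 0xFF, 0xFF, 0x34])  # made-up chip contents
print(find_blank_run(old_image, 4))
print(can_overprogram(b"\xff\xff", b"\x55\xaa"))
print(f"{stuck_low_bits(0x54, 0x55):#04x}")
```

For the verify error above, the mask comes out as bit 0: a single bit landed low that should've stayed high, and nothing short of a UV erase can lift it back.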

(Wow, I really only intended on writing that I got the thing to boot, if I'da intended this to be this detailed, I'd've started being-so much earlier, and covered *quite a bit more*... 'cause this whole venture has basically been nothing but ridiculous-experiences/troubleshootings like these)

...

OK, checking the remainder of the EPROMS in my collection... none have a consecutive-blank-space large enough. I guess I "lucked out" with that first one...?

...

Losing writing-steam... turns out my friggin' EEPROM/FLASH programmer had 2 dead transistors.

(Interestingly, the paper manual mine came with, from an "OEM" (doesn't that mean original equipment manufacturer? I'm almost certain, the "OEM" relabelled it with their own brand/part-number, especially considering the PCB is marked by who *I*'d've considered the "original" manufacturer, which differs). Anyways, the OEM's paper-manual was more in-depth than the latest found online... and just happened to have a section regarding pin-failures. Yes, two of my transistors were blown... Determined by a "pin-test" in the application. But, after I replaced those transistors, suddenly *another* pin, which *wasn't* in the failure-list previously, was showing up as the *only* failure... And, anyways, that manual made mention of a "parallel-port delay" which should be increased for faster computers... And... now it works like a charm.)

And, turns out, the original "flash" chip I was planning to use works fine, as well. Apparently "Erase" and "Blank Check" are unnecessary for it, this thing can actually be written byte-for-byte(?!) without needing an erase-cycle. Impressive.

---------

Alright, so that was just a handful of the unexpected-troubleshooting and workarounds... but it works, now!

I've even run a 3D CAD program, on my 8088 (!?) "Generic 3D" from autodesk, originally on several 5.25in floppies.

And, of course, my go-to DOS game from my 8088/86 days: Block-Out... 3D tetris.

---------

Also, my first experience with x86 assembly... running "debug.exe" and even wrote a really small .COM program.

-----

But, none of this is really particularly-relevant to *this* project's goals...

Well, indirectly, for sure... learning a lot about the architecture, which is a big part of the goal.

But, all that much more aware that trying to fit this project's original goals into 1KB would be darn-near impossible.

-------

I keep meaning to throw up photographs. Really, if you saw this thing it'd make a heck of a lot more sense just how ridiculous, and ridiculously-difficult, this whole endeavor has been.

------

and... a new fixation on overcoming the 640KB memory limitation in weird ways (again, not at all related to *this* project)...

I think I can do it with two previously-used (and *not* UV-erased) EPROMs as address-decoders, a custom ROM (only a few bytes, and maybe even within the "address-decoder" ROMs) and some SRAMs. 736KB, yo!

-

EEPROMer-Adventures and more-realistic goals

12/16/2016 at 03:58 • 0 comments

As described in the last log I tangentially (of a tangent) came to the conclusion that I need to program a parallel-interfaced EEPROM/FLASH... as a sort of tangential tangent to a tangent of this project.

And, ridiculously, I realized my EPROM programmer couldn't do-so. So came to another tangential-conclusion that I needed to build a FLASH/EEPROM programmer, with an AVR, maybe. So, mulled that over a bit, was getting into the mindset. Was kinda looking forward to some of the side-benefits of having those FLASH read/write libraries available for other projects... but again, a tangent of a tangent of a tangent.

So, while I was writing about this ridiculous conclusion, I realized I have an EEPROM-programmer, in my possession. In addition to my EPROM programmer.

The thing is, I gave up on my EEPROM programmer about a year ago when I lost its EEPROM "personality module" (A little card that tells it which pinout to use). I gave up on it so hard that I was quite literally staring at its diskettes (as another tangent of a tangent of this project) and it *still* didn't occur to me that I have an EEPROM-programmer, in my possession.

----------

Skip forward. I once tried to contact a few distributors of those "personality modules" regarding a replacement for my lost personality ... but didn't receive a response. This thing was EOL'd 16 years ago, after all. Whattya expect?

I dunno what changed this time 'round, because I did have this idea long ago, just never actually tried it out...

The thing is, the personality-modules are pretty much nothing more than a PCB with some traces shorting some edge-connect pins. Most don't even have *any* components on them. Could easily be reproduced, with the right knowledge.

So I set out to find other users of this EEPROM-programmer, to see if I could find one kind enough to send me photographs, or scans, of both sides of their personality-modules.

This seemed an uphill battle. Really, I didn't expect any response. The best contact I found was someone who posted a barely-tangentially-related message (which no one responded to!) in a forum 17 years ago.

But I contacted him... and... within two hours he sent me *exactly* the info I needed to reproduce my lost personality [module].

No shit.

He said he'd already had the scans on-hand, even.

It was like a miracle, or something.

And in a few hours' work, I should have my replacement-personality[module] up-and-going, rendering something I once thought a lost-cause now once-again completely functional!

-------

So, in light of the fact that this battle seemed *near-impossible*--so much so that even the optimistic side of me was extremely pessimistic--I've decided that there may in fact be others out there--only slightly-less optimistic than myself--who would give up on the idea as soon as they realized just how few folk even talk about *using* this thing. Let-alone there being any webpages related to it.

Maybe this'll save a few of these things from the trash, and save some frustrated folk from having to reinvest in something they already owned.

https://sites.google.com/site/geekattempts/misc-notes/megamax-programmer

Now, scaling-back my goals for this project...

It occurred to me that as a proof-of-concept for this project, I really only need to implement the *bare-minimum*... see how it functions...

And, realistically, *That* is my interest in doing this project, in the first place. Not to *complete* it, but to have a test-bed for comparison, and maybe further-implementation (plausibly to the point of completion) if it seems doable.

So, as far as fitting this in 1K... I think it's definitely plausible to fit this bare-minimum "test-bed" within 1K. And that, then, would be the "framework" for the rest of the implementation, should I feel motivated to "complete" it. And... having gone to the effort to fit that "framework" within 1K, the remainder of the implementation should *definitely* fit within today's lowish-end devices (probably 8K would be enough?).

.........

Interestingly(?) I think my having gone on this ridiculous path of tangents-of-tangents ("pro-crastination", maybe? and what the heck is "to crast[inate]" anyways? LOL check this out.) will help cement (or at least rubber-cement) the idea of bringing this thing to "completion"...

Improbable AVR -> 8088 substitution for PC/XT

Probability this can work: 98%, working well: 50% A LOT of work, and utterly ridiculous.

Eric Hertz
