Noted every time you lxc-stop & lxc-start a certain container, it creates a new virtual eth device. Eventually ifconfig & brctl show fill with dead virtual eth devices. These dead devices cause networking in the container to fail with TLS errors, dropped connections, while DNS & ping continue to work.

The leading theory is these containers start processes which aren't killed by lxc-stop. kill -9 instead of lxc-stop doesn't release the devices.

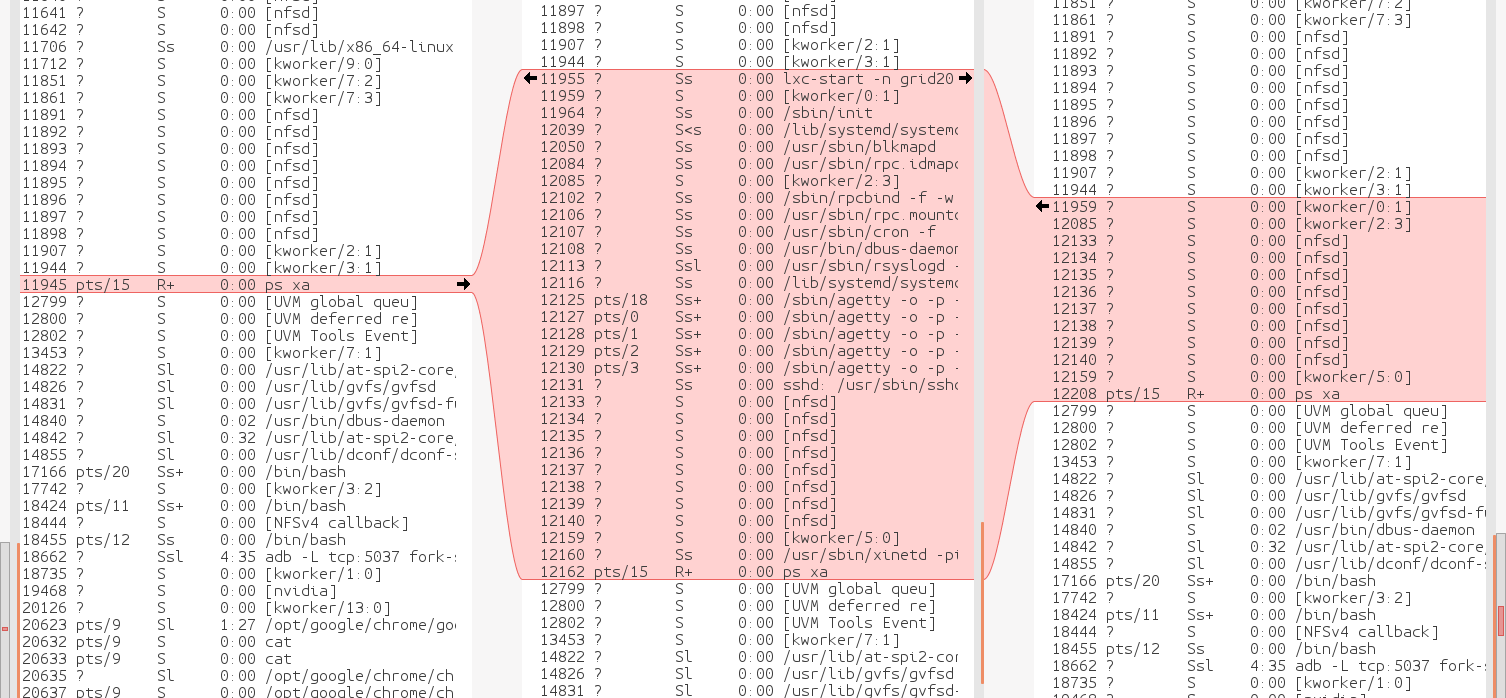

meld shows an interesting evolution of the process table during the lifecycle of the container.

The container creates a bunch of nfsd & kworker which aren't visible inside the container. These are left behind after lxc-stop or kill -9

killall -9 nfsd gets rid of all the dangling eth devices & processes.

The nfsds are all created outside of the container by lxc-start & bound to the virtual eth.

For some reason, the offending container has a bunch of rpc programs running inside the container & these cause nfsd to run outside the container. There's no reason to use nfsd in a container since the filesystem is a subdirectory on the host. A quick disabling of RPC solves the dangling network interfaces.

mv /usr/sbin/rpc.idmapd /usr/sbin/rpc.idmapd.bak

mv /usr/sbin/rpc.mountd /usr/sbin/rpc.mountd.bak

mv /usr/sbin/rpcbind /usr/sbin/rpcbind.bak

That fixed the connection errors. As in all things, containers introduce many new problems in exchange for solving old problems with virtual machines. The hope is the new problems are less debilitating than the old problems but there can never be a perfect solution.

lion mclionhead

lion mclionhead

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.