I started this project to measure the input delay of the TV that I use for gaming. Specifically, I wanted to know what kind of delay I was experiencing when playing an FPGA-based NES emulator on the same FPGA and DVI output hardware. I'm pleased with the end results, and I think this project could serve as the basis for a more advanced input delay measurement tool.

For an overview and demo, check out the video on YouTube:

Components

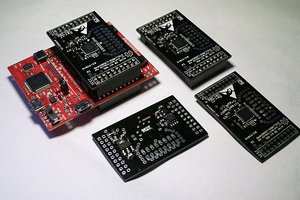

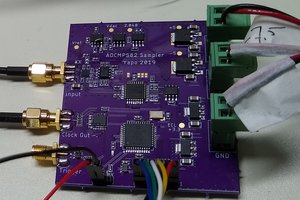

The project uses a Digilent Nexys A7-100T FPGA development board as its FPGA platform. The HDL code is written in SystemVerilog, and all of the demos were synthesized in Vivado 2022.1. The DVI output is generated with the 24bpp-DDR version of the PMOD Digital Video Interface from 1BitSquared, which occupies two adjacent PMOD expansion ports on the FPGA board. The light sensor circuit is built from an Everlight PD204-6C photodiode, a Microchip MCP6291 op amp, and a Microchip MCP6541 comparator. It connects to the FPGA board through a ribbon cable and a hand-soldered, 3-pin PMOD expansion board.

The FPGA board and PMOD DVI chip were chosen because I already own them and have used them on much larger projects. The photodiode was chosen for its low cost, its visible-spectrum response (be careful not to pick an infrared-only part!) and its relatively fast response time. The Microchip ICs were chosen for their low cost, low voltage and power requirements, CMOS inputs (it's especially important for the op amp to have virtually no input bias current) and relatively high speed. The ICs also had to be through-hole PDIPs for easy use on a breadboard or prototype board.

Design Goals

I jumped into this project without researching how other projects or commercial products perform these kinds of measurements. My goal was to measure the time elapsed from the moment a pixel's data is sent to the DVI chip to the moment the light sensor is triggered. To meet an overall margin of error of +/- 100 microseconds, I designed the light sensor circuit to respond within about 25 microseconds, and I designed the pixel pattern generation and timing algorithm to have a margin of error of +/- 55 microseconds. I planned to support measurements at nine positions around the screen, selected through a simple pushbutton and seven-segment display interface on the FPGA board. My final design goal was to power the light sensor circuit from the FPGA board's 3.3V supply, i.e. without a separate power supply.
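Because the measurement starts when a specific pixel's data is sent, not when the frame starts, each of the nine positions is sent at a different time within the frame. The small Python model below illustrates this. It is a sketch under assumptions that do not come from the project's HDL: standard CEA-861 1280x720 @ 60 Hz timing (74.25 MHz pixel clock, 1650 total pixels per line, 750 total lines), blanking placed after the active region on each line, and a hypothetical 3x3 grid of target positions centered in each ninth of the screen.

```python
# Hypothetical model: standard CEA-861 720p60 timing (an assumption, not
# taken from the project's SystemVerilog).
PIXEL_CLOCK_HZ = 74_250_000
H_ACTIVE, H_TOTAL = 1280, 1650   # visible pixels / total pixels per line
V_ACTIVE, V_TOTAL = 720, 750     # visible lines / total lines per frame


def grid_positions(cols=3, rows=3):
    """Centers of a cols x rows grid over the visible area (hypothetical layout),
    listed row by row from the top-left."""
    xs = [H_ACTIVE * (2 * i + 1) // (2 * cols) for i in range(cols)]
    ys = [V_ACTIVE * (2 * j + 1) // (2 * rows) for j in range(rows)]
    return [(x, y) for y in ys for x in xs]


def send_time_us(x, y):
    """Microseconds from the first active pixel of a frame until pixel (x, y)
    is sent, assuming each line's blanking follows its active pixels."""
    pixel_index = y * H_TOTAL + x
    return pixel_index * 1e6 / PIXEL_CLOCK_HZ


if __name__ == "__main__":
    for x, y in grid_positions():
        print(f"({x:4d}, {y:3d}) sent {send_time_us(x, y):8.1f} us into the frame")
```

Under these assumptions, the center of the bottom row is sent roughly 13 ms after the top-left pixel, so using the start of the frame as the time reference would skew the lower positions by most of a frame period.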

Uncertainties and Challenges

My educational background is in digital design, and my career is in software engineering. I've only dabbled in analog circuitry as a minor hobby, so my confidence in the light sensor design is not very high. I think the strategy of amplifying the small photodiode signal and converting it to a digital signal with a comparator is a decent, textbook approach, but I don't have the experience to know whether it's totally sound. I'm also not entirely confident in the response time estimates. It would be much better to measure the circuit's actual response time with the one-shot feature of a digital scope. Unfortunately, I only have a 20MHz analog scope, and using it to observe the fast switching time of this circuit was difficult, if not comical.

I do, however, feel confident about the rest of the design. I had already developed the 720p output circuitry for my NYDES (Not Your Dad's Entertainment System) project, and the remaining components would make appropriate assignments for a first undergraduate digital design lab course.

Features Demonstrated

For readers interested in learning FPGA development or digital design, this project demonstrates several basic techniques:

- Accessing the development board's I/O resources and GPIO pins (PMOD ports)

- Debouncing noisy switching signals from pushbuttons or sensors

- Safely synchronizing 1-bit signals from I/O pins or across clock domains (to avoid flip-flop metastability)...
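The synchronizing and debouncing techniques above can be sketched as a cycle-level Python model. This is not the project's SystemVerilog. Each call to `clock()` stands for one rising clock edge, the class names are hypothetical, and the debouncer is a common counter-based scheme that may differ from the one the project uses. A real design would set `stable_cycles` to several milliseconds' worth of clock ticks.

```python
class TwoFlopSync:
    """Cycle-level model of a two flip-flop synchronizer.

    In hardware the first flop may go metastable; the second flop gives it a
    full clock period to settle. This model only captures the added latency.
    """

    def __init__(self):
        self.ff1 = 0
        self.ff2 = 0

    def clock(self, async_in):
        # Both flops update on the same edge: ff2 takes ff1's previous value.
        self.ff2, self.ff1 = self.ff1, async_in
        return self.ff2  # the input as sampled one clock edge earlier


class Debouncer:
    """Counter-based debouncer: the output changes only after the synchronized
    input has held a new value for `stable_cycles` consecutive clocks."""

    def __init__(self, stable_cycles):
        self.stable_cycles = stable_cycles
        self.count = 0
        self.state = 0

    def clock(self, sync_in):
        if sync_in == self.state:
            self.count = 0  # input agrees with the output: restart the count
        else:
            self.count += 1  # input differs: count how long it has differed
            if self.count >= self.stable_cycles:
                self.state = sync_in
                self.count = 0
        return self.state


def run(samples, stable_cycles):
    """Feed raw samples through the synchronizer, then the debouncer."""
    sync, deb = TwoFlopSync(), Debouncer(stable_cycles)
    return [deb.clock(sync.clock(s)) for s in samples]
```

For example, `run([0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1], 4)` ignores the initial bounces and switches to 1 only after the input has been steady long enough.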

Mike Kibbel

helge

jaromir.sukuba

Ted Yapo

Thanks for sharing these ideas! I didn't know about the Parallax Propeller II, and I look forward to exploring it some more. I see that the RP2040's programmable I/O (PIO) also has a lot of potential and is even available in the Pico.

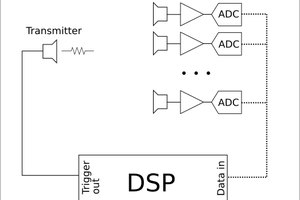

Having detailed control over parallel I/O is what makes the FPGA so simple to use for this kind of application. In this project, it's easy to count exactly how many clock ticks (at 10 ns each) occur between outputting a pixel's data and detecting it on the screen. I would expect that one of the biggest challenges with using microcontrollers is synchronizing these events against a common reference clock.
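With a 10 ns tick, turning a raw tick count into something readable on the board is simple integer arithmetic. The following Python sketch is illustrative only. The function names are hypothetical, and the eight-digit width is an assumption about the seven-segment display rather than the project's actual display format. It converts ticks to whole microseconds and splits the result into decimal digits, as a seven-segment driver would need.

```python
CLOCK_PERIOD_NS = 10  # 100 MHz system clock -> 10 ns per tick


def ticks_to_us(ticks):
    """Convert a tick count into whole microseconds (truncating)."""
    return ticks * CLOCK_PERIOD_NS // 1000


def decimal_digits(value, num_digits=8):
    """Split a non-negative integer into decimal digits, most significant
    first, e.g. to drive a multi-digit seven-segment display."""
    if value < 0 or value >= 10 ** num_digits:
        raise ValueError("value does not fit in the display")
    digits = []
    for _ in range(num_digits):
        digits.append(value % 10)
        value //= 10
    return digits[::-1]
```

For instance, a measured count of 1,234,567 ticks is 12,345 microseconds (about 12.3 ms), which `decimal_digits` splits into `[0, 0, 0, 1, 2, 3, 4, 5]`. In hardware, the same split is usually done with a binary-to-BCD converter instead of division.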

If these chips provide a high-resolution clock, if their I/O systems can generate interrupts at predictable points in their output, and if those interrupts can be preemptive, I think we'd be able to write synchronization code that reliably runs within a known margin of error. For example, if we could configure preemptive interrupts for the moment the video vertical blank is sent and for when a timer fires to sample the ADC, then each interrupt handler could start by storing the current high-resolution timer value. A separate thread could then process the timestamped data generated by the interrupt handlers and stitch together the output results. The details would certainly be tricky, but it sounds like fun to try!
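The stitching step in that idea can be sketched in Python. This is only the post-processing a separate thread might perform, and it leaves out the interrupt handlers and threading. It assumes every timestamp comes from the same high-resolution timer, that the stimulus timestamps mark the vertical blanks at which a test patch was switched on, and that a dark-to-light transition shows up as the ADC reading crossing a hypothetical `threshold`. All names are illustrative.

```python
from bisect import bisect_right


def rising_edges(sample_ts, sample_vals, threshold):
    """Timestamps of samples where the reading reaches `threshold` after the
    previous sample was below it (a dark-to-light transition)."""
    return [t for i, t in enumerate(sample_ts)
            if i > 0 and sample_vals[i - 1] < threshold <= sample_vals[i]]


def stitch_latencies(stim_ts, sample_ts, sample_vals, threshold):
    """For each stimulus timestamp, return the delay to the next
    dark-to-light edge. All timestamp lists must be sorted and share one
    timer. Stimuli with no later edge are skipped."""
    edges = rising_edges(sample_ts, sample_vals, threshold)
    latencies = []
    for t0 in stim_ts:
        k = bisect_right(edges, t0)  # index of the first edge strictly after t0
        if k < len(edges):
            latencies.append(edges[k] - t0)
    return latencies
```

Searching for the next edge, instead of requiring it to arrive before the following vertical blank, allows for displays whose latency exceeds one frame period.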