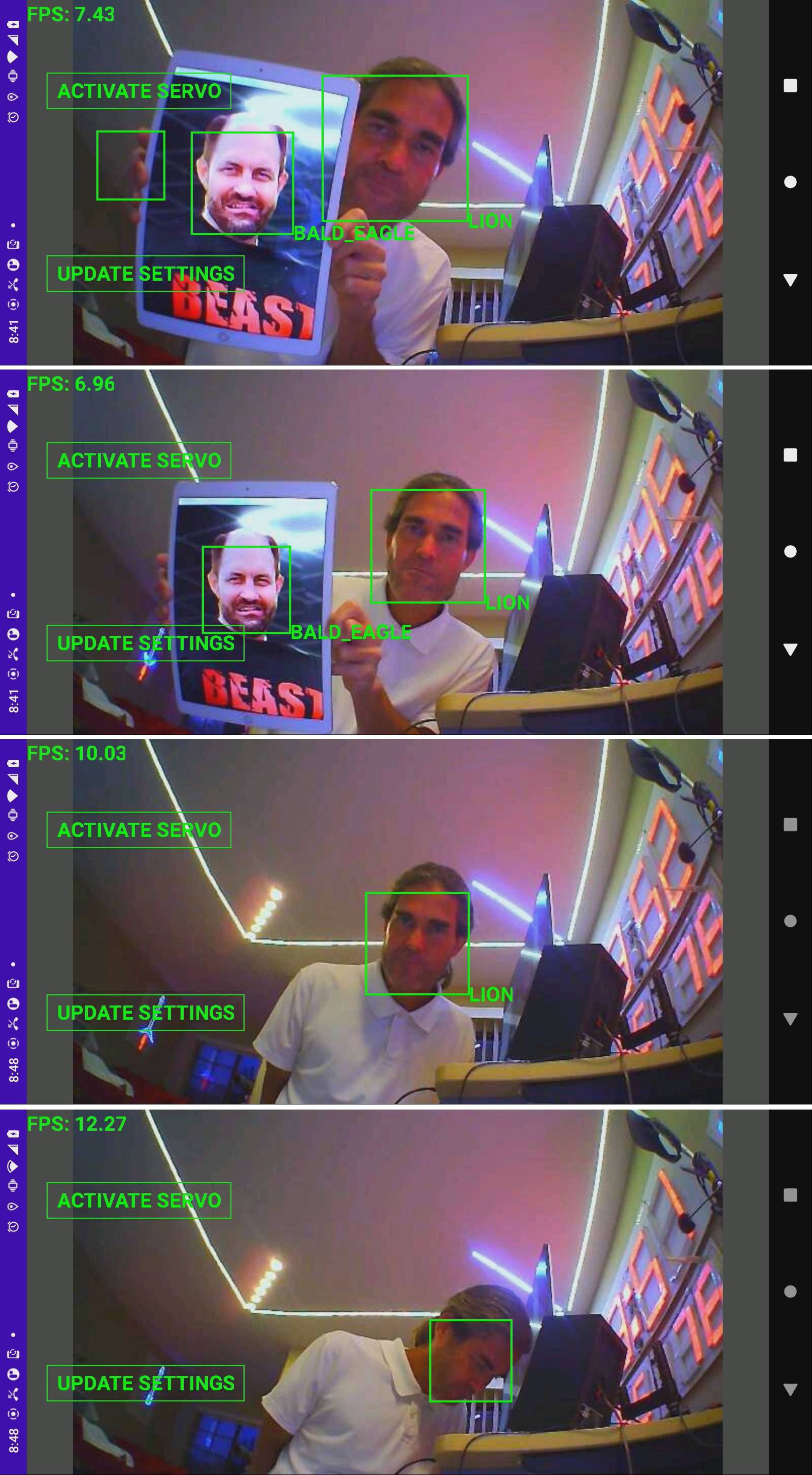

MTCNN on the Jetson Nano did much better at 1280x720, with no changes to the original parameters. The second problem was the byte ordering: it expects BGR. The amount of upscaling doesn't matter. Running in FP16, it's quite robust. With full face matching, the frame rate depends on how many faces are visible and on the shutter speed. With 1 face in indoor lighting, it runs at 11fps, while the Raspberry Pi managed 3fps.

https://hackaday.io/project/176214/log/202367-face-tracker
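
Since the detector expects BGR, frames that arrive as RGB need their channels swapped before inference. Below is a minimal sketch with NumPy. It assumes the capture path delivers an interleaved H x W x 3 uint8 array in RGB order; the function name `rgb_to_bgr` is hypothetical and not from the project's code.

```python
import numpy as np


def rgb_to_bgr(frame: np.ndarray) -> np.ndarray:
    """Reverse the channel order of an H x W x 3 frame (RGB -> BGR).

    Assumes interleaved RGB bytes; the same operation also turns BGR back into RGB.
    """
    if frame.ndim != 3 or frame.shape[2] != 3:
        raise ValueError("expected an H x W x 3 array")
    # Reverse the last (channel) axis, then copy into a contiguous buffer,
    # since inference wrappers often pass the raw buffer straight to C code.
    return np.ascontiguousarray(frame[..., ::-1])


if __name__ == "__main__":
    # A 1280x720 test frame that is pure red in RGB order.
    rgb = np.zeros((720, 1280, 3), dtype=np.uint8)
    rgb[..., 0] = 255
    bgr = rgb_to_bgr(rgb)
    print(bgr[0, 0])  # red now sits in the last channel
```

If OpenCV is already in the pipeline, `cv2.cvtColor(frame, cv2.COLOR_RGB2BGR)` does the same swap.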

The original notes found that color gave better results than B&W, but changing the saturation or brightness had no further effect.

https://hackaday.io/project/176214/log/202565-backlight-compensation

Much effort went into migrating the phone app to the Jetson codebase. Its key feature is a synthetic landscape mode: the app stays in portrait mode and draws its own landscape widgets, which gives it more screen space than it would have in auto-rotate mode.

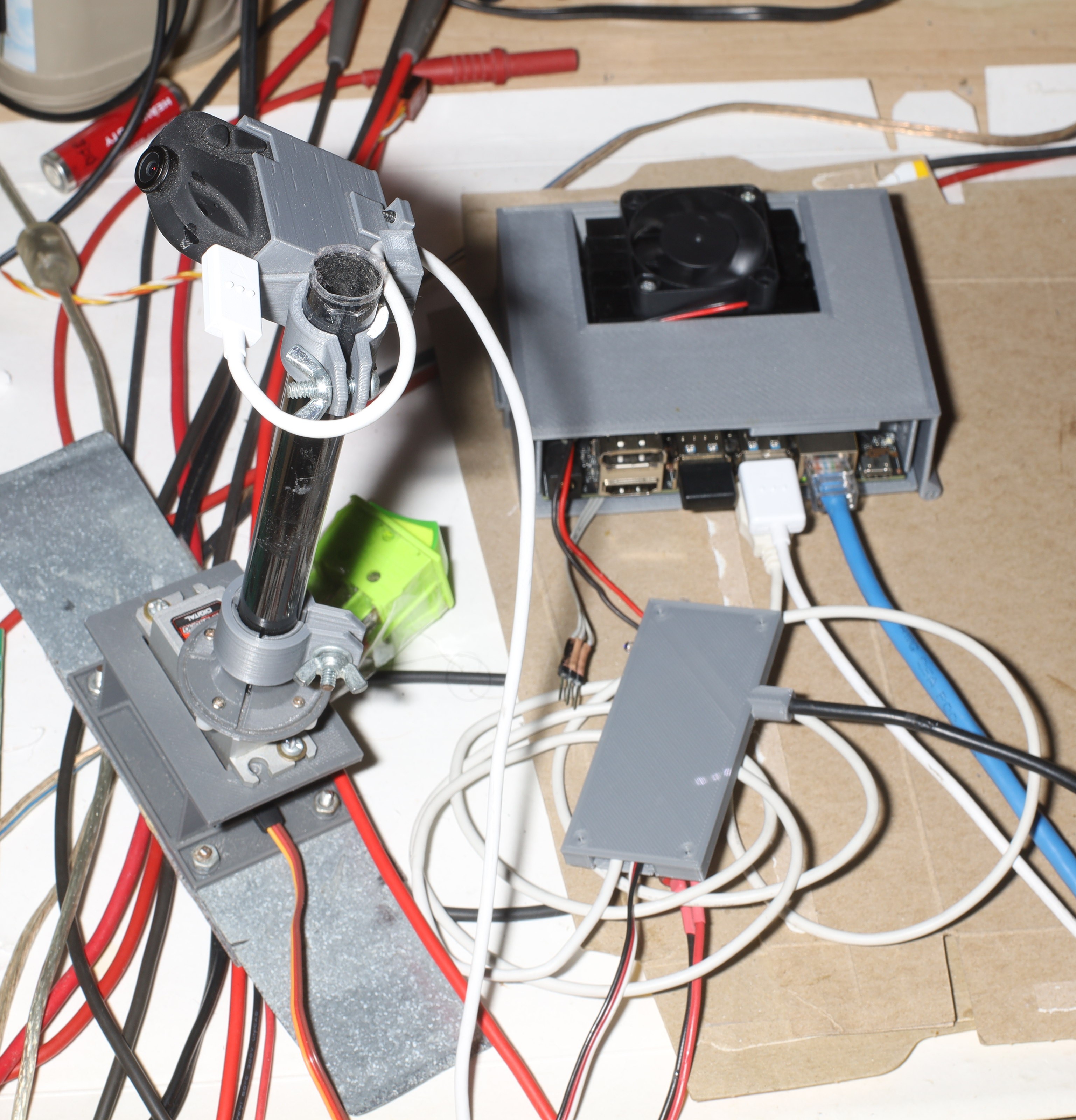
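
One way to implement a synthetic landscape mode is to keep the screen locked to portrait and rotate drawing and touch coordinates yourself. Below is a minimal sketch of that coordinate mapping. It assumes a 90 degree clockwise rotation, so the landscape top edge lands on the portrait screen's right edge (phone held with its top pointing left). The function names and the 720-pixel width are hypothetical, not taken from the app.

```python
from typing import Tuple


def landscape_to_portrait(lx: int, ly: int, portrait_w: int) -> Tuple[int, int]:
    """Map a pixel in the synthetic landscape canvas to the portrait screen.

    The landscape canvas is portrait_h pixels wide and portrait_w pixels tall.
    Rotating 90 degrees clockwise puts the landscape top edge on the portrait
    screen's right edge.
    """
    return portrait_w - 1 - ly, lx


def portrait_to_landscape(px: int, py: int, portrait_w: int) -> Tuple[int, int]:
    """Inverse mapping, e.g. to route portrait touch events to landscape widgets."""
    return py, portrait_w - 1 - px


if __name__ == "__main__":
    W = 720  # hypothetical portrait screen width in pixels
    # The landscape top-left corner ends up at the portrait top-right corner.
    print(landscape_to_portrait(0, 0, W))  # (719, 0)
    # Round trip: a touch at portrait (100, 200) maps back to the same point.
    lx, ly = portrait_to_landscape(100, 200, W)
    print(landscape_to_portrait(lx, ly, W))  # (100, 200)
```

Keeping both directions as pure functions makes it easy to check that drawing and touch handling agree. The only state they need is the portrait width.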

With face tracking working, the next step was moving the EfficientDet model trained on lions to the Jetson.

lion mclionhead
