It's been 6 months with the jetson, with only the openpose based 2D tracker & the face recognizer to show for it. 1 problem is it takes eternity to train a model at 17 hours. The conversion to tensorrt takes another 2 hours, just to discover what doesn't work.

It reminds lions of a time when encoding a minute of video into MPEG-1 took 24 hours so no-one bothered. The difference is training a network is worth it.

The jetson nano predated efficientdet by a few years. The jetbot demo used ssd_mobilenet_v2. That might explain the lack of any ports of efficientdet.

The detection failures were narrowed down to num_detections being 0, which can be tested after only 10 epochs.

Trying num_classes=2 didn't work either. 1 hit said 1 class was the background so the minimum number was 2. A higher than necessary number might dilute the network but it should eliminate it as a factor.

num_detections is always 100 with the pretrained network & always 0 with the lion network. The 100 comes from tflite_max_detections in the hparams argument. The default hparams are in hparams_config.py. hparams_config.py contains names & resolutions of all the efficientdets.

Another hit left out all the val images, starting checkpoint & threw in a label_map:

time python3 main.py \ --mode=train \ --train_file_pattern='../../train_lion/*.tfrecord' \ --model_name=efficientdet-lite0 \ --model_dir=../../efficientlion-lite0/ \ --train_batch_size=1 \ --num_examples_per_epoch=1000 \ --hparams=config.yaml \ --num_epochs=300

config.yaml:

num_classes: 2

label_map: {1: lion}

automl/efficientdet/tf2/:

time OPENBLAS_CORETYPE=CORTEXA57 PYTHONPATH=.:.. python3 inspector.py --mode=export --model_name=efficientdet-lite0 --model_dir=../../../efficientlion-lite0/ --saved_model_dir=../../../efficientlion-lite0.out --hparams=../../../efficientlion-lite0/config.yaml

TensorRT/samples/python/efficientdet:

time OPENBLAS_CORETYPE=CORTEXA57 python3 create_onnx.py --input_size="320,320" --saved_model=/root/efficientlion-lite0.out --onnx=/root/efficientlion-lite0.out/efficientlion-lite0.onnx

time /usr/src/tensorrt/bin/trtexec --fp16 --workspace=2048 --onnx=/root/efficientlion-lite0.out/efficientlion-lite0.onnx --saveEngine=/root/efficientlion-lite0.out/efficientlion-lite0.engine

That got it down to 10 hours & 0 detections. Verified the pretrained efficientdet-lite0 got num_detections=100.

https://storage.googleapis.com/cloud-tpu-checkpoints/efficientdet/coco/efficientdet-lite0.tgz

That showed the inspector, onnx conversion, & tensorrt conversion worked. Just the training was broken.

A few epochs of training with section 9 of the README & the original VOC dataset

https://github.com/google/automl/blob/master/efficientdet/README.md

yielded a model with num_detections 100, so that narrowed it down to the dataset. The voc dataset had num_classes 1 higher than the number of labels. A look with the hex editor showed the tfrecord files for lions* had no bbox or class entries.

The create_coco_tfrecord.py command line was wrong. This one had no examples.

in automl-master/efficientdet

PYTHONPATH=. python3 dataset/create_coco_tfrecord.py --image_dir=../../train_lion --object_annotations_file=../../train_lion/instances_train.json --output_file_prefix=../../train_lion/pascal --num_shards=10

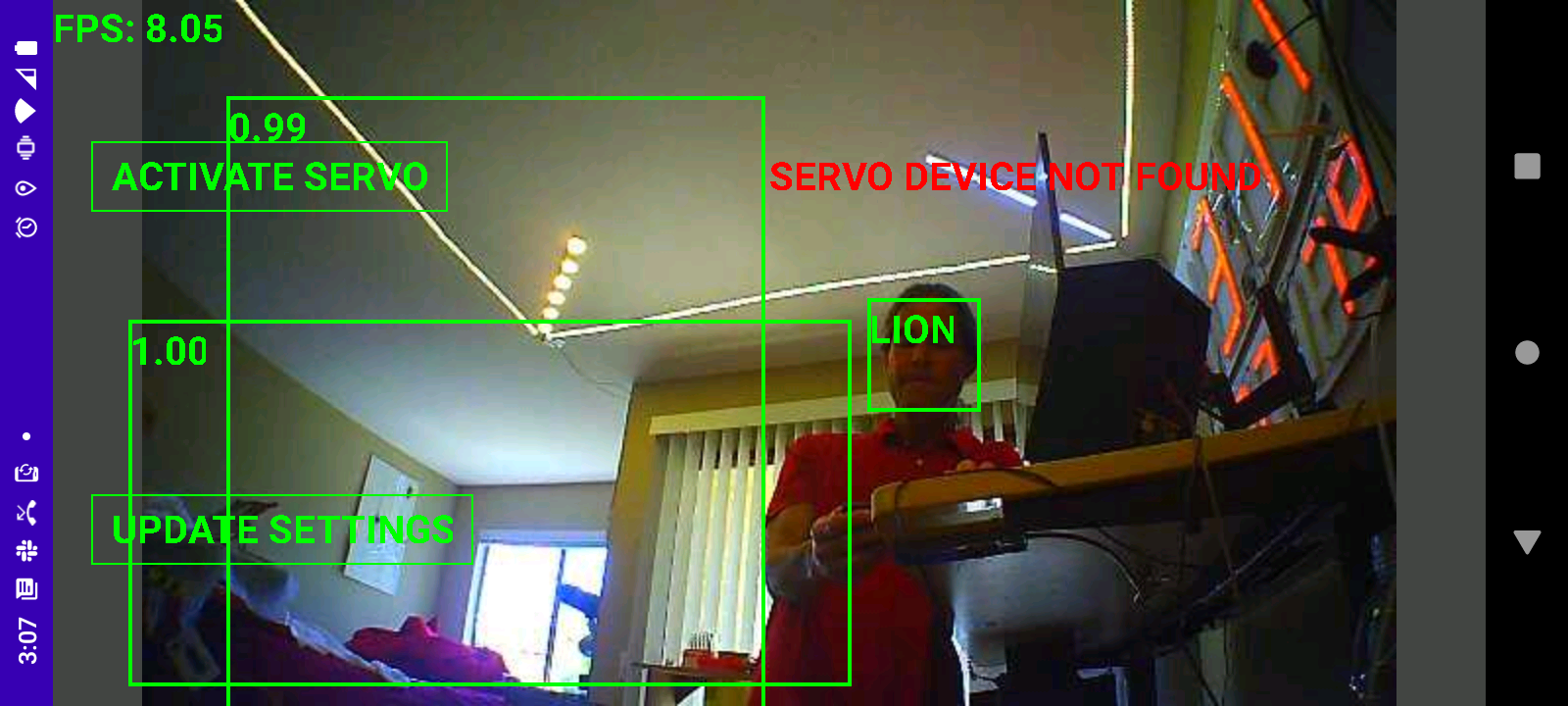

That finally got num_detections 100 from the lion dataset, with 2 classes. Sadly, the hits were all garbage after 300 epochs.

Pretrained efficientdet-lite0 wasn't doing much better. It gave bogus hits of another kind.

So there might be a break after the training. A noble cause would be getting the pretrained version to work before training a new one. The gootube video still showed it hitting valid boxes.

lion mclionhead

lion mclionhead

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.