In the previous tests, we obtained the sound image. Next, we will capture a camera image, combine it with the sound image, and output the result to the display.

When combining the sound image and the camera image, it is important to match the field of view (FoV) of the two images and to bring their sensing axes as close together as possible. Ideally, the camera should be placed at the center of the microphone array.

In this build, however, we simply place the two sensors as close together as possible and then adjust them. Because the two centers of view are slightly offset from each other, it is recommended to tilt the sensors slightly inward so that their center lines intersect at the expected distance to the target.
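As a rough guide to how much tilt is needed, the inward (toe-in) angle follows from simple trigonometry. The sketch below assumes the sensors sit side by side with a measured center-to-center spacing `baseline_m` and that the target lies on the midline between them; the function name and parameters are illustrative and not taken from the original project.

```python
import math


def toe_in_angle_deg(baseline_m, target_distance_m, tilt_both=True):
    """Return the inward tilt (degrees) that makes the two sensing axes
    cross at target_distance_m.

    baseline_m: assumed center-to-center spacing between the camera lens
    and the microphone-array center (measure this on your own board).
    tilt_both: if True, both sensors are tilted equally toward the midline,
    so each covers half the spacing; if False, only one sensor is tilted
    and it must cover the full spacing.
    """
    if baseline_m < 0 or target_distance_m <= 0:
        raise ValueError("baseline must be >= 0 and distance must be > 0")
    lateral = baseline_m / 2 if tilt_both else baseline_m
    return math.degrees(math.atan2(lateral, target_distance_m))


# Hypothetical example: sensors 4 cm apart, target 2 m away.
print(toe_in_angle_deg(0.04, 2.0))         # each sensor tilted
print(toe_in_angle_deg(0.04, 2.0, False))  # only one sensor tilted
```

With these example numbers the required tilt is about 0.57° per sensor (about 1.15° if only one sensor is tilted), so at typical viewing distances the adjustment is very small.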

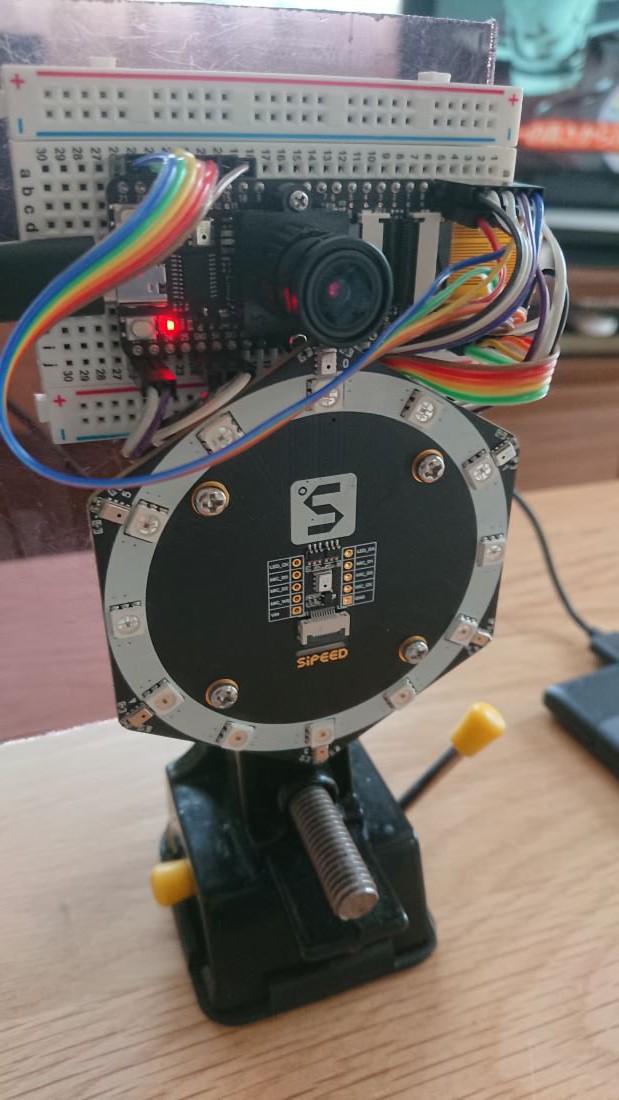

For this test, the camera and the microphone array were fixed to a polycarbonate board.

Combining the images from the camera and the microphone array gives the result shown below. The sound image is enlarged to a suitable size and then cropped to align with the camera image. With MicroPython on OpenMV, the code for this is very simple.
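The original OpenMV code is not shown here, so the following is only a plain-Python sketch of the enlarge, crop, and blend steps, assuming both images are 8-bit grayscale stored as nested lists. The scale factor, crop offsets (`x0`, `y0`), and blend weight `alpha` are hypothetical calibration values; on the camera itself, OpenMV's built-in image functions would normally do this work much faster.

```python
def upscale_nearest(grid, scale):
    """Enlarge a 2-D list by an integer factor using nearest-neighbour copying."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(scale)]  # repeat each pixel horizontally
        for _ in range(scale):                         # repeat each row vertically
            out.append(list(wide))
    return out


def crop(grid, x0, y0, width, height):
    """Cut a width x height window whose top-left corner is (x0, y0)."""
    return [row[x0:x0 + width] for row in grid[y0:y0 + height]]


def blend(camera, sound, alpha):
    """Per-pixel weighted sum of two equally sized grayscale images (0-255)."""
    return [[int(round((1 - alpha) * c + alpha * s)) for c, s in zip(crow, srow)]
            for crow, srow in zip(camera, sound)]


def overlay_sound_on_camera(camera, sound_grid, scale, x0, y0, alpha=0.5):
    """Enlarge the sound image, crop it to the camera frame, and blend the two.

    scale, x0, y0 and alpha are hypothetical values found during alignment.
    """
    height, width = len(camera), len(camera[0])
    big = upscale_nearest(sound_grid, scale)
    if y0 + height > len(big) or x0 + width > len(big[0]):
        raise ValueError("scaled sound image does not cover the camera frame")
    return blend(camera, crop(big, x0, y0, width, height), alpha)


# Tiny example: a 2x2 sound map overlaid on a flat 2x2 camera frame.
camera = [[100, 100], [100, 100]]
sound = [[0, 255], [255, 0]]
print(overlay_sound_on_camera(camera, sound, scale=2, x0=1, y0=1))
```

Nearest-neighbour enlargement keeps the sketch short; a smoother result would come from bilinear interpolation, at some extra cost on a microcontroller.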

TV audio visualization example.

You can also visualize the music playing from the audio speakers.

AIRPOCKET