How does it work

Starting from a central location, a destination at a specific distance is calculated randomly with a trigonometric formula. With an origin and a destination, walking directions are requested from the Google Maps API. At this point the feet start moving towards the destination, pictures are taken with the Google Street View API, and on arrival a diary entry is created with generative artificial intelligence.

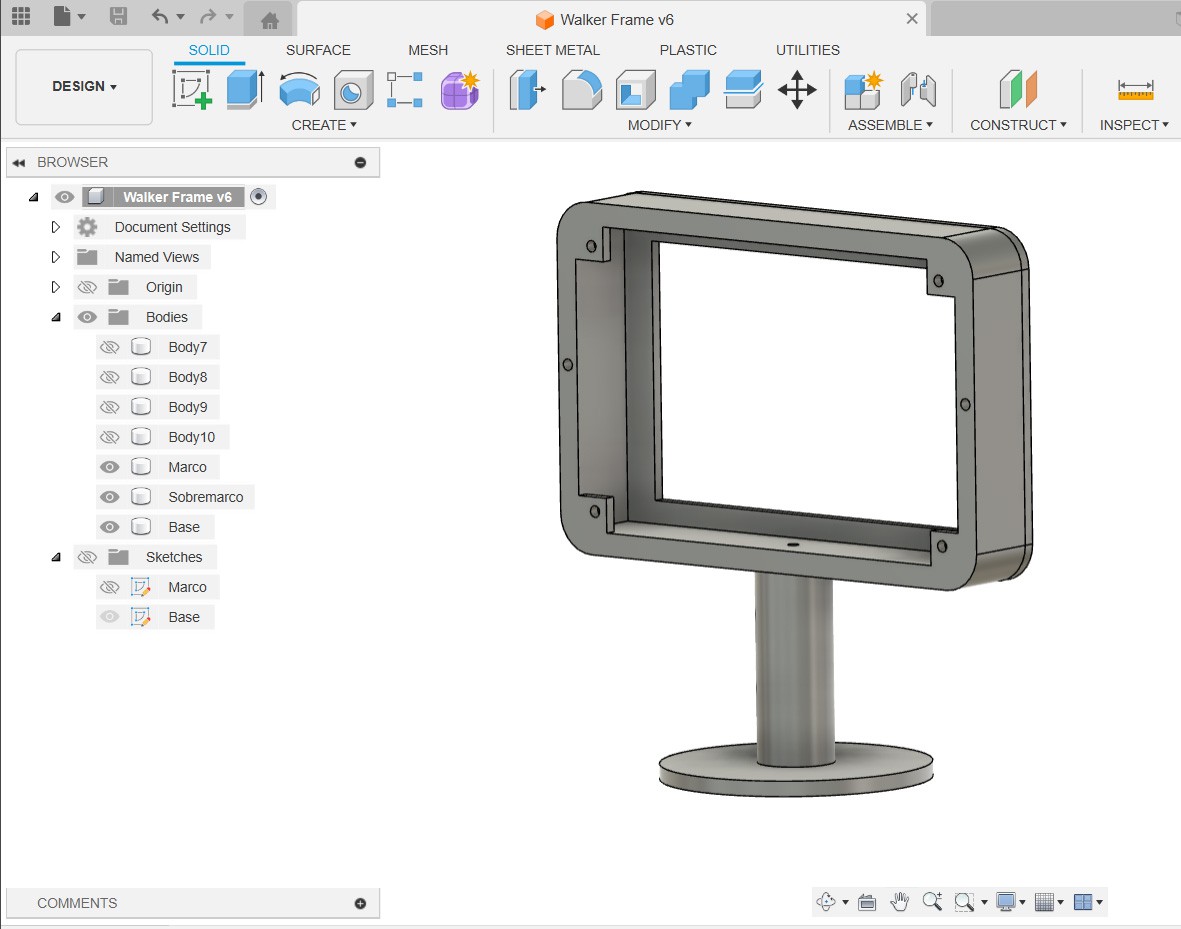

Parts used

• Raspberry Pi 2 with USB WiFi dongle

• DFRobot TFT screen 800x468

• 2 SG90 Servo motors

• 2 rubber feet

• 3D printed parts

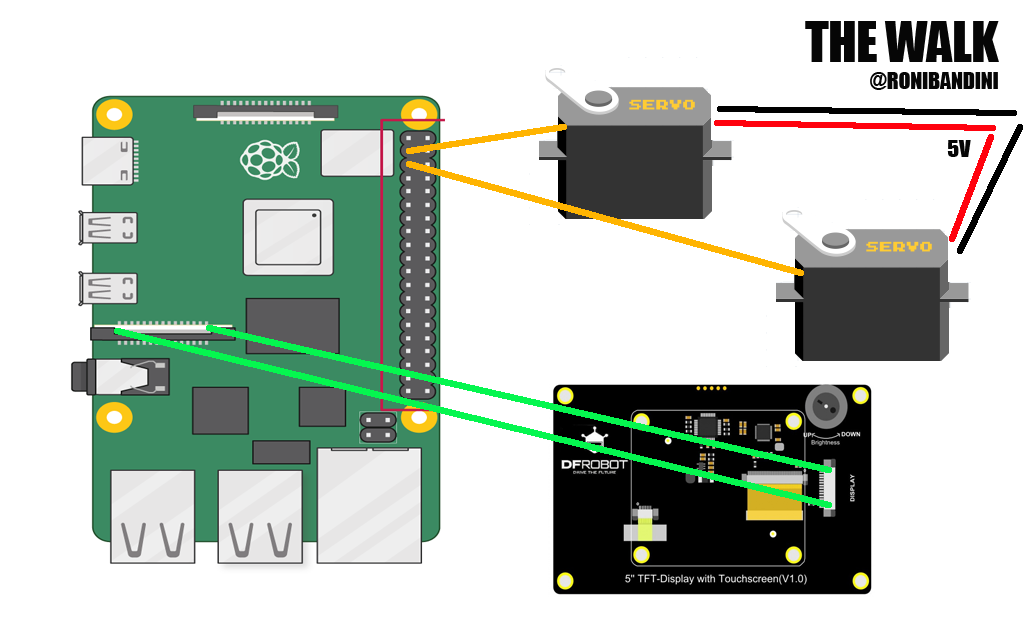

Circuit

There is not much electronics in this project: just the servo motors, connected to GPIO2 and GPIO3, and an external power supply. The DFRobot screen is connected to the Raspberry Pi with a simple ribbon cable and does not even require libraries or special software.

Software APIs

The Walk uses 3 APIs.

• 1. Google Maps API https://developers.google.com/maps for reverse geocoding (getting an address from lat-lng), walking directions, map plotting and Street View snapshots.

• 2. OpenWeatherMap API https://openweathermap.org/api to get the weather for a given lat-lng. This is used to create the journey entry.

• 3. OpenAI API https://platform.openai.com/docs/api-reference to write journey entries
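All three services are plain HTTPS/JSON APIs. The following is a minimal sketch, not the code from the repository, of how the Google Maps and OpenWeatherMap requests could be built and their responses parsed with the standard library only. The helper names and the `GOOGLE_KEY`/`OWM_KEY` placeholders are hypothetical; the endpoints and the response fields used (`formatted_address`, `weather[0].description`) are the ones documented by Google and OpenWeatherMap.

```python
from urllib.parse import urlencode

# Hypothetical placeholders: replace with your own keys
GOOGLE_KEY = "YOUR_GOOGLE_MAPS_KEY"
OWM_KEY = "YOUR_OPENWEATHERMAP_KEY"


def geocode_url(lat, lng, key=GOOGLE_KEY):
    """Reverse geocoding request: lat-lng -> street address."""
    query = urlencode({"latlng": f"{lat},{lng}", "key": key})
    return "https://maps.googleapis.com/maps/api/geocode/json?" + query


def streetview_url(lat, lng, key=GOOGLE_KEY, size="640x640"):
    """Street View Static API request for a snapshot taken at lat-lng."""
    query = urlencode({"size": size, "location": f"{lat},{lng}", "key": key})
    return "https://maps.googleapis.com/maps/api/streetview?" + query


def weather_url(lat, lng, key=OWM_KEY):
    """OpenWeatherMap current-weather request for lat-lng."""
    query = urlencode({"lat": lat, "lon": lng, "appid": key})
    return "https://api.openweathermap.org/data/2.5/weather?" + query


def address_from_geocode(response):
    """First formatted address in a parsed Geocoding API response."""
    return response["results"][0]["formatted_address"]


def description_from_weather(response):
    """Short weather description, such as 'clear sky'."""
    return response["weather"][0]["description"]
```

With real keys, a response can be fetched with `json.load(urllib.request.urlopen(weather_url(lat, lng)))` and passed to `description_from_weather`, which yields strings like the `'clear sky'` stored in the log.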

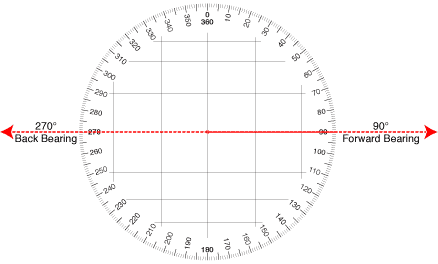

Random location at a distance

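To get a random location at a given distance, a random bearing is chosen first. A bearing is the angle, measured clockwise from north, between the meridian (the north-south line) through the reference point and the line connecting the reference point to the target. With the origin, the bearing and the distance, the destination is computed with the spherical destination-point formula: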

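```python
import math
from random import randint

# Random bearing in degrees, clockwise from north
myBearing = randint(1, 360)

def get_point_at_distance(lat1, lon1, d, bearing, R=6371):
    # d and R share units: R=6371 is the Earth's mean radius in km, so d is in km
    lat1 = math.radians(lat1)
    lon1 = math.radians(lon1)
    a = math.radians(bearing)
    # Destination point on a sphere; d/R is the angular distance travelled
    lat2 = math.asin(math.sin(lat1) * math.cos(d/R) + math.cos(lat1) * math.sin(d/R) * math.cos(a))
    lon2 = lon1 + math.atan2(math.sin(a) * math.sin(d/R) * math.cos(lat1), math.cos(d/R) - math.sin(lat1) * math.sin(lat2))
    return (math.degrees(lat2), math.degrees(lon2))
```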
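Because `R=6371` is in kilometers, `d` must be given in kilometers too (2 km for a 2000-step walk). A quick way to sanity-check the result is to measure the great-circle distance back to the origin with the haversine formula. The project installs the `haversine` package; here the formula is written out with `math` so the sketch is self-contained, and it repeats `get_point_at_distance` for the same reason. `haversine_km` is a hypothetical helper name.

```python
import math
from random import randint


def get_point_at_distance(lat1, lon1, d, bearing, R=6371):
    """Destination point d km from (lat1, lon1) along bearing (degrees)."""
    lat1, lon1, a = math.radians(lat1), math.radians(lon1), math.radians(bearing)
    lat2 = math.asin(math.sin(lat1) * math.cos(d / R)
                     + math.cos(lat1) * math.sin(d / R) * math.cos(a))
    lon2 = lon1 + math.atan2(math.sin(a) * math.sin(d / R) * math.cos(lat1),
                             math.cos(d / R) - math.sin(lat1) * math.sin(lat2))
    return math.degrees(lat2), math.degrees(lon2)


def haversine_km(lat1, lon1, lat2, lon2, R=6371):
    """Great-circle distance in km between two lat-lng points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    h = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * R * math.asin(math.sqrt(h))


# A random point 2 km away from the origin used in the TheWalkLog.csv example row
origin = (-34.561641, -58.4819516)
dest = get_point_at_distance(*origin, 2, randint(1, 360))
print(dest, round(haversine_km(*origin, *dest), 3))  # second value should be 2.0
```

Both functions assume a spherical Earth with the same radius, so the round trip agrees to floating-point precision; the walking route returned by Google will usually be longer than this straight-line distance.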

Directions and steps

With a starting point, a random bearing and the new point at the specified distance, a Google Maps Directions API call is made. The JSON response is parsed, the route distance is extracted and the number of steps is calculated. The basic rule is one meter per step, so for a 2000-meter route (about 6,560 ft) we will have to wait around 2000 steps to get the picture.
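A minimal sketch of that step, assuming the request uses `walking` mode and the route has a single leg (no waypoints). `directions_url` and `steps_from_directions` are hypothetical helper names; `routes[0].legs[0].distance.value` is where the Directions API JSON reports the leg length in meters.

```python
from urllib.parse import urlencode


def directions_url(origin, destination, key):
    """Walking directions request between two (lat, lng) tuples."""
    query = urlencode({
        "origin": "%s,%s" % origin,
        "destination": "%s,%s" % destination,
        "mode": "walking",
        "key": key,
    })
    return "https://maps.googleapis.com/maps/api/directions/json?" + query


def steps_from_directions(response, meters_per_step=1):
    """Route distance in meters and the number of steps needed to walk it."""
    if response.get("status") != "OK":
        raise ValueError("Directions request failed: %s" % response.get("status"))
    meters = response["routes"][0]["legs"][0]["distance"]["value"]
    return meters, round(meters / meters_per_step)


# Trimmed, illustrative response body (only the fields used above)
sample = {"status": "OK",
          "routes": [{"legs": [{"distance": {"text": "0.6 km", "value": 592}}]}]}
print(steps_from_directions(sample))  # (592, 592)
```

With a live key, the response would come from `json.load(urllib.request.urlopen(directions_url(origin, destination, key)))`. Checking `status` first avoids walking towards a destination Google could not route to.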

To move the feet, the GPIO-connected servos alternate between their min and mid positions; every min/mid cycle counts as one step:

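```python
from time import sleep

from gpiozero import Servo  # assumption: gpiozero, whose Servo has the min()/mid() methods used below

# Servos on GPIO2 and GPIO3 (which foot goes on which pin is an assumption)
rightFoot = Servo(2)
leftFoot = Servo(3)

# myDistance: steps left to walk (1 meter = 1 step, from the Directions call)
while myDistance > 0:
    rightFoot.min()  # both feet to the minimum position
    leftFoot.min()
    sleep(0.5)
    rightFoot.mid()  # and back to the middle position
    leftFoot.mid()
    sleep(0.5)
    myDistance = myDistance - 1  # one step, about 1 second, done
```

CSV Files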

Coordinates.csv stores the current location: just one record with lat-lng.

TheWalkLog.csv stores every movement: timestamp, origin, destination, distance, map, snapshot and weather conditions.

Row example:

2023-09-04 09:27:22.558367,-34.561641,-58.4819516,-34.564203,-58.48763710000001,592,'/home/roni/images/route-500835d3-b13b-4a17-b7d2-546fcdc605e9-5.jpg','/home/roni/images/snap-500835d3-b13b-4a17-b7d2-546fcdc605e9-5.jpg','clear sky'

TheWalkJournal.csv is used to store the diary.

The latter two CSV files will be imported into a database and used in the future to plot The Walk's complete journey.
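A minimal sketch of reading and updating the current location and appending a movement to the log with Python's `csv` module. The column order follows the row example above; the helper names are hypothetical, the sketch assumes the files have no header row, and `csv.writer` will not reproduce the single quotes around the image paths seen in the example.

```python
import csv
from datetime import datetime


def read_current_location(path="Coordinates.csv"):
    """Return the single (lat, lng) record stored in Coordinates.csv."""
    with open(path, newline="") as f:
        lat, lng = next(csv.reader(f))[:2]
    return float(lat), float(lng)


def save_current_location(lat, lng, path="Coordinates.csv"):
    """Overwrite Coordinates.csv so it keeps just one record."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerow([lat, lng])


def append_log(origin, destination, distance, map_path, snap_path, weather,
               path="TheWalkLog.csv"):
    """Append timestamp, origin, destination, distance, map, snapshot and weather."""
    row = [datetime.now(), *origin, *destination, distance, map_path, snap_path, weather]
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow(row)
```

After each walk, `append_log` records the movement and `save_current_location(*destination)` makes the destination the origin of the next walk.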

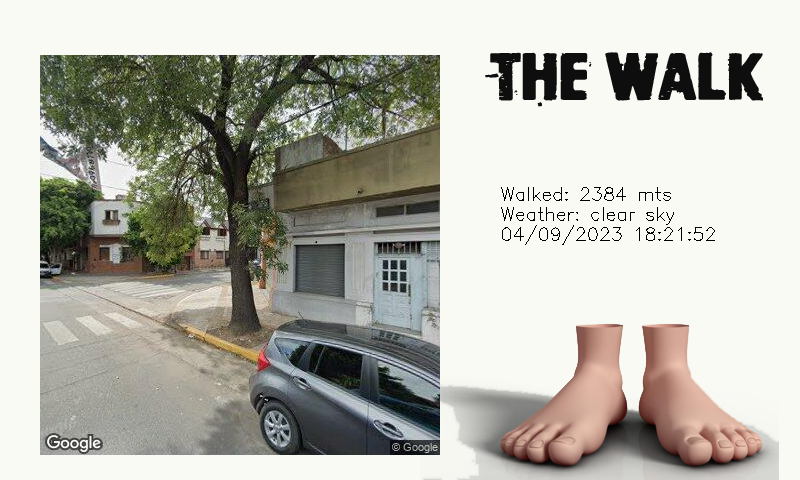

Screens

For many Python projects, text output is enough. In this case more appealing screens were required, so the OpenCV (cv2) library is used. The catch is that the screen is 800x468 while the Google Maps images are square. In the first versions of The Walk I just resized the images, but then I found a better option: using a full-screen template and pasting the maps and dynamic text onto it.
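A minimal sketch of the template approach using NumPy slicing; images loaded with `cv2.imread` are NumPy arrays, so they can be passed in directly. The 800x468 canvas matches the screen, while the map position, the 468-pixel map size and the `paste` helper name are assumptions for illustration.

```python
import numpy as np

SCREEN_W, SCREEN_H = 800, 468


def paste(template, image, x, y):
    """Return a copy of template with image placed at top-left corner (x, y)."""
    h, w = image.shape[:2]
    if x < 0 or y < 0 or y + h > template.shape[0] or x + w > template.shape[1]:
        raise ValueError("image does not fit in the template at that position")
    screen = template.copy()
    screen[y:y + h, x:x + w] = image
    return screen


# Stand-ins for cv2.imread("template.png") and a square Google Maps image
template = np.full((SCREEN_H, SCREEN_W, 3), 255, dtype=np.uint8)
square_map = np.zeros((SCREEN_H, SCREEN_H, 3), dtype=np.uint8)
screen = paste(template, square_map, SCREEN_W - SCREEN_H, 0)  # map on the right side
print(screen.shape)  # (468, 800, 3)
```

Dynamic text can then be drawn on the result with `cv2.putText(screen, text, (x, y), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 0, 0), 2)` and shown with `cv2.imshow`. Copying the template keeps the blank original available for the next screen.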

Files

I have uploaded a command-line version of The Walk to GitHub.

Just download the .py, CSV and image files, sign up for the 3 APIs, configure the API keys inside the code and install the dependencies:

```bash
sudo apt-get install build-essential cmake pkg-config libjpeg-dev libtiff5-dev libjasper-dev libpng-dev libavcodec-dev libavformat-dev libswscale-dev libv4l-dev libxvidcore-dev libx264-dev libfontconfig1-dev libcairo2-dev libgdk-pixbuf2.0-dev libpango1.0-dev libgtk2.0-dev libgtk-3-dev libatlas-base-dev gfortran libhdf5-dev libhdf5-serial-dev libhdf5-103 python3-pyqt5 python3-dev -y
pip3 install opencv-python==4.5.3.56
pip3 install -U numpy
pip install haversine
pip install screeninfo
pip install openai
```

To execute The Walk, you can add a cron job or run it manually with a USB-connected keyboard:

```bash
$ python thewalk.py
```
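As an alternative to typing the keys into the source, they could be read from environment variables, which keeps them out of the files uploaded to GitHub. A minimal sketch; the variable names are hypothetical and must match whatever you export (for example in the cron job entry).

```python
import os

# Hypothetical environment variable names; export them before running thewalk.py
REQUIRED_KEYS = ("GOOGLE_MAPS_API_KEY", "OPENWEATHERMAP_API_KEY", "OPENAI_API_KEY")


def load_api_keys(environ=os.environ):
    """Return a dict with every API key, failing early if any is missing."""
    missing = [name for name in REQUIRED_KEYS if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing API keys: " + ", ".join(missing))
    return {name: environ[name] for name in REQUIRED_KEYS}
```

Failing at startup is friendlier than discovering a missing key after the feet have already walked 2000 steps.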

3D printed parts

There are files for the screen stand and for the servo supports. Print with PLA; no supports are required, but you will have to rotate some parts before printing.

https://cults3d.com/en/3d-model/gadget/the-walk-a-machine-to-walk-maps

ChatGPT for journey entries

To write the journey diary, a simple prompt is sent to the OpenAI API, including parameters such as the date, word count, distance, weather and destination.

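```python
import openai  # legacy interface: Completion.create needs openai < 1.0

# myFecha = date, myCantidadDePalabras = word count, myDistancia = distance,
# myClima = weather, myDestino = destination, temperatura = temperature
prompt = (f"Write a personal diary entry for {myFecha} date using {myCantidadDePalabras} words "
          f"talking about a {myDistancia} meters walk under {myClima} weather until reaching {myDestino}")

completion = openai.Completion.create(
    engine=model_engine,
    prompt=prompt,
    max_tokens=1024,
    n=1,
    stop=None,
    temperature=temperatura,
)
diary_entry = completion.choices[0].text.strip()  # the generated journey entry
```

Examples obtained: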

“I have finally reached my destination with a feeling of great relief. After my 2705 meter walking journey, I am feeling exhausted but satisfied and content. The clear sky weather was glorious and the sun shone brightly on my journey, yet the air was still cool and refreshing. The sight of Gral. José Gervasio Artigas 469, C1406ABE CABA, Argentina was a beautiful sight and I am so grateful to have made it here in one piece”

“Today was a beautiful day. The sun was shining brightly in the clear sky and the temperature was just perfect. I decided to go for a walk from my house to Alberti 1685, C1247AAI CABA, Argentina. It was a long walk of 2637 meters, but it was worth it. The scenery was breathtaking, with lush green trees and flowers everywhere. The birds were singing and the air was fresh and fragrant. I felt so energized and alive as I walked along. The view of the cityscape was incredible, and I was in awe of the architecture, the bustling streets, the people and the culture. I felt so fortunate to be able to experience this. Walking back home, I had a spring in my step and a smile on my face.”

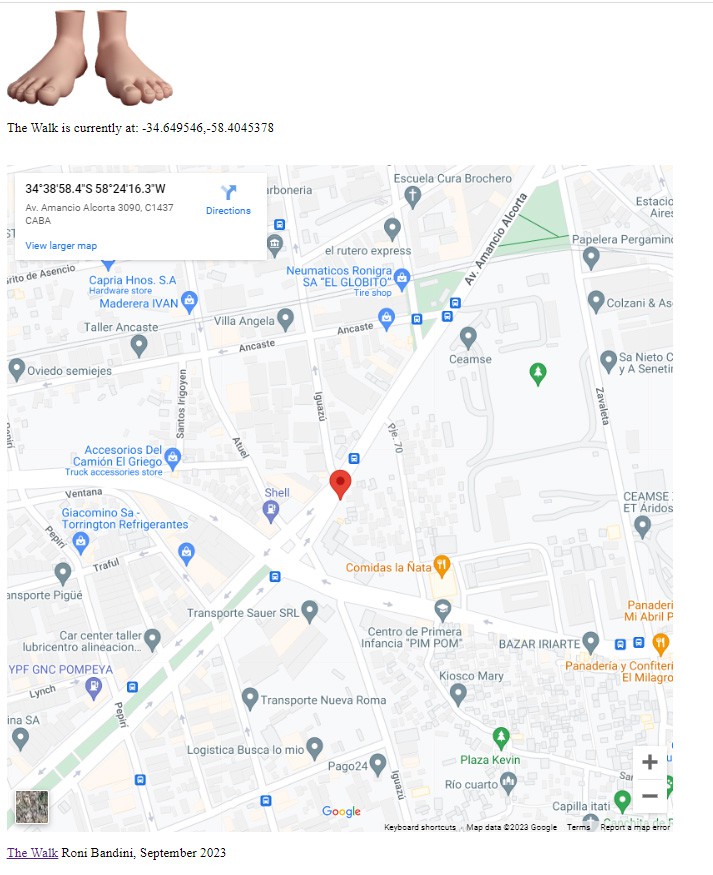

Web site to follow The Walk

On arrival, the Python code also calls a PHP web page to store the coordinates. An embedded Google Map then displays The Walk's current location.

The PHP files are also included in the GitHub repository for this purpose.
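The parameters the PHP page expects are not described here, so the following is only a sketch of what such a call could look like with the standard library: a GET request carrying the new coordinates. The URL, the `lat`/`lng` parameter names and the helper names are hypothetical and must be matched to the PHP files in the repository.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint: use the address where the PHP files are hosted
TRACKER_URL = "https://example.com/thewalk/save.php"


def tracker_url(lat, lng, base=TRACKER_URL):
    """Build the GET request that stores the current coordinates."""
    return base + "?" + urlencode({"lat": lat, "lng": lng})


def report_location(lat, lng, timeout=10):
    """Send the coordinates to the web page and return the HTTP status code."""
    with urlopen(tracker_url(lat, lng), timeout=timeout) as response:
        return response.status
```

Using a timeout keeps a slow or unreachable web server from blocking the next walk.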

Final notes

There is an irresistible allure in observing kinetic, yet seemingly static feet, all the while recognizing that the true journey unfolds within a different realm: one that is virtual, simulated, and oddly genuine.

Roni Bandini
