Nothing has happened for a while in this project. The reason is somewhat obvious from the previous entry: there are many solutions for edge inference, but none that really fits my purpose.

To be honest, this is not an easy problem to solve, because a lot of flexibility (aka complexity) is required to address all the types of NN models people come up with. In addition, there is a tendency to try to hide all that complexity - and this adds even more overhead.

When it comes to really low-end edge devices, it seems simpler to build your own inference solution and hardwire it to your NN architecture.

... and that is what I did. You can find the project here:

https://github.com/cpldcpu/BitNetMCU

Detailed write-up:

https://github.com/cpldcpu/BitNetMCU/blob/main/docs/documentation.md

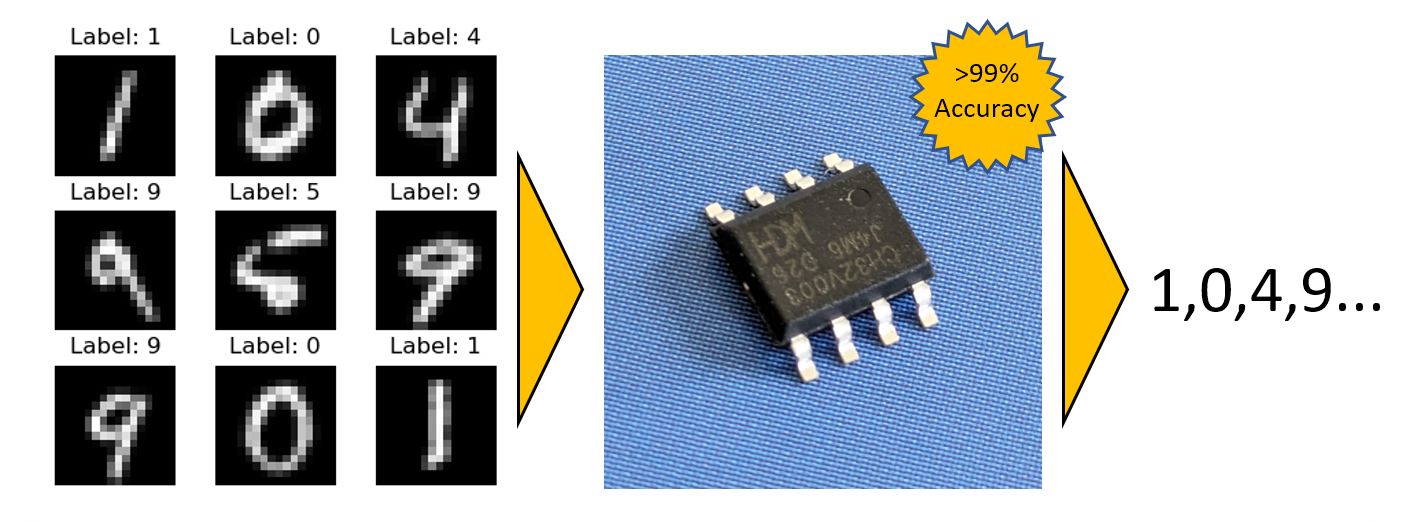

I learned a lot about squeezing every last bit out of the weights during training and making the inference as lean as possible. I used the well-known MNIST dataset and a small CH32V003 microcontroller as a test vehicle and achieved >99% test accuracy. This is not a world record, but it beats most other MCU-based applications I have seen, especially on an MCU with only 16 KB of flash and 2 KB of SRAM (less than an Arduino UNO).

So far, I got away with implementing only fully connected (FC) layers, normalization and ReLU. But to address "GenAI", I will eventually have to implement other operators as well. We'll see...
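To give a rough idea of how little code such a hardwired layer needs, here is a minimal sketch in C. It is not the BitNetMCU implementation (see the repository for that). The function name `fc_relu_layer`, the int8 weight format and the power-of-two rescale standing in for normalization are all assumptions made for illustration; the real project packs the weights far more tightly.

```c
#include <stdint.h>

/* Hypothetical sketch, NOT taken from BitNetMCU:
 * one fully connected layer followed by ReLU and a rescale step.
 * Assumptions: int8 activations, int8 weights stored row-major
 * (one row of n_in weights per output), and a right shift as a
 * cheap stand-in for normalization. */
void fc_relu_layer(const int8_t *in, const int8_t *weights, int8_t *out,
                   uint32_t n_in, uint32_t n_out, uint8_t shift)
{
    for (uint32_t o = 0; o < n_out; o++) {
        const int8_t *w = &weights[o * n_in];
        int32_t acc = 0;

        /* Multiply-accumulate over all inputs of this output neuron. */
        for (uint32_t i = 0; i < n_in; i++) {
            acc += (int32_t)in[i] * (int32_t)w[i];
        }

        /* ReLU: clamp negative sums to zero. */
        if (acc < 0) {
            acc = 0;
        }

        /* Rescale by a power of two, then saturate to the int8 range. */
        acc >>= shift;
        if (acc > 127) {
            acc = 127;
        }

        out[o] = (int8_t)acc;
    }
}
```

Because everything is integer arithmetic with fixed array sizes, a network built from such layers needs no heap, no floating point and no runtime graph interpreter, which is what makes it fit on a device this small.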

Tim

Discussions

Holy cow, man. I look at the progress you've made and wonder... Have you considered designing a high-concept virtual pet? I'm just imagining one with generative facial expressions and some kind of pseudo-dream watching routines.

Interesting idea! But this sounds quite complex. I would probably pick a simpler project as the next step.
