I got the second camera and two 170-degree wide-angle lenses (1.8 mm focal length). After calibration, the disparity was terrible. I figured this was because I had used the clips on the back of the cameras to hold them in place, and indeed even a small movement of a lens is enough to make the calibration obsolete.

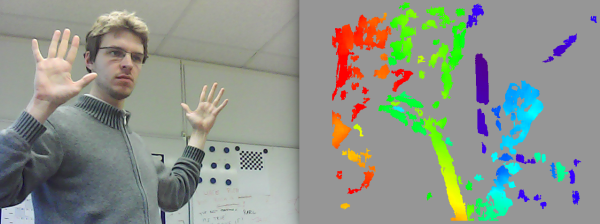

I printed a support that holds the two cameras 100 mm apart, and the results were slightly better.

I think there is still room for improvement, but this demonstrates the general feasibility of the idea.

Lessons learned:

- Disparity only starts working at about 1.5 m from the cameras. Moving the cameras closer together (a shorter baseline) might give better results at close range.

- The lenses are not 170 degrees: I barely get 35 degrees, perhaps because of the tiny sensor.

- I ordered the second camera from the same eBay seller, but its manufacturer is reported as "Generic" instead of "Etron Technology, Inc." like the first camera. The PCB and the image are also slightly different. Two identical cameras might give better results.

- The images are slightly unsynchronized, yet the disparity still looks like the one above, even on moving objects (at 30 fps).
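On the FOV question: for a fixed 1.8 mm focal length, how much of the scene you see depends on how wide the sensor is and on how the lens maps angles onto it. The minimal sketch below compares two idealized mappings, the pinhole (rectilinear) model and an equidistant fisheye model r = f·θ. Whether these lenses follow either model is an assumption, the sensor widths are hypothetical, and the function names are illustrative only.

```python
import math

# Rough check of how sensor width limits field of view at a fixed focal length.
# Assumption: two idealized lens models; a real lens only approximates either one.

def fov_rectilinear_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Full FOV of an ideal pinhole/rectilinear lens: FOV = 2 * atan(w / (2f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))

def fov_equidistant_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Full FOV of an ideal equidistant fisheye (r = f * theta): FOV = w / f radians."""
    return math.degrees(sensor_width_mm / focal_mm)

def width_needed_equidistant_mm(fov_deg: float, focal_mm: float) -> float:
    """Image width an ideal equidistant fisheye needs to cover fov_deg."""
    return focal_mm * math.radians(fov_deg)

if __name__ == "__main__":
    f = 1.8  # mm, from the lens listing
    for w in (1.0, 2.0, 3.6):  # hypothetical sensor widths in mm
        print(f"w={w} mm: rectilinear {fov_rectilinear_deg(w, f):.1f} deg, "
              f"equidistant {fov_equidistant_deg(w, f):.1f} deg")
    print(f"170 deg (equidistant) needs ~{width_needed_equidistant_mm(170, f):.2f} mm")
```

Under the pinhole model the FOV can never reach 180 degrees and approaches it only as the sensor grows without bound, which is why lenses sold as 170 degrees are typically fisheyes. Under the equidistant assumption the last line gives the image width needed for the advertised 170 degrees at 1.8 mm (roughly 5.3 mm), which can be compared against the sensor's datasheet.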
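One plausible reading of the ~1.5 m close-range limit comes from the pinhole stereo relation Z = f·B/d (focal length f in pixels, baseline B, disparity d in pixels). A block matcher only searches disparities up to some maximum d_max, so nothing closer than f·B/d_max can be matched, and that limit shrinks in proportion to the baseline. The sketch below also computes where the two fields of view start to overlap for parallel optical axes. The 640 px width and 64 px search range are hypothetical values, not settings from this build, and the function names are illustrative.

```python
import math

# Close-range limits of a parallel (rectified) stereo pair, pinhole model.
# Assumptions (hypothetical, not measured): image width, disparity search range.

def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels: f = (W / 2) / tan(FOV / 2)."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

def depth_m(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity: Z = f * B / d."""
    return f_px * baseline_m / disparity_px

def min_depth_from_search_range_m(f_px: float, baseline_m: float, max_disp_px: int) -> float:
    """Closest depth the matcher can report, reached at the largest searched disparity."""
    return depth_m(f_px, baseline_m, max_disp_px)

def overlap_start_m(baseline_m: float, hfov_deg: float) -> float:
    """Distance at which the two fields of view begin to overlap (parallel axes)."""
    return baseline_m / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))

if __name__ == "__main__":
    width = 640      # px, hypothetical capture width
    hfov = 35.0      # deg, the FOV observed in this log
    max_disp = 64    # px, hypothetical disparity search range
    f = focal_length_px(width, hfov)
    for baseline in (0.10, 0.06, 0.03):  # m; 0.10 is the printed support
        print(f"B={baseline * 1000:.0f} mm: "
              f"min depth {min_depth_from_search_range_m(f, baseline, max_disp):.2f} m, "
              f"overlap starts {overlap_start_m(baseline, hfov):.2f} m")
```

With these guessed numbers the 100 mm baseline gives a minimum depth of about 1.6 m, in the same ballpark as the observed 1.5 m, but that agreement depends entirely on the assumed resolution and search range. Halving the baseline or doubling the search range each halves the minimum depth; a larger search range costs more computation per frame.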

Discussions


Have you computed intrinsics and distortion coefficients for the lenses? It's a lot easier to get higher-quality parallax data if you do. And yes, you have to work out orientation and FOV overlap to determine the closest measurable distance. All you know about an element within a single camera's field of view is that it's either outside the overlap region or inside it. If it's outside, you get nothing; if it's inside, you only know its maximum possible distance. A bit of trig will answer those questions to the degree possible.
