-

A first look at the DHD D5

09/23/2017 at 11:43 • 0 comments

I recently discovered the DHD D5, which looks like a clone of the JJRC Elfie but sells for a slightly lower price (£15 each, which is a serious bargain). I got a pair of them hoping I'd be able to control them from my scripts straightaway, but found out that, at least from the software point of view, they are significantly different.

What I've learned so far:

- The IP of the quad is 192.168.0.1 and it assigns the tablet the 192.168.0.100 (I haven't checked if there's a DHCP server that keeps a list of devices with different IPs)

- The control signal from the control app is sent by UDP to port 8080 every 100 ms but the message seems to be different. While in standby, most messages are a single byte 0x25 ("%"), with a longer 5-byte message (0x08c0a80164) sent every second or so. When the on-screen controls are on, the pattern is similar but you see 11-byte messages from time to time. I haven't captured the traffic while flying yet.

- Video is sent by UDP through a connection started by the app on ports 55934 or 577-something. The quad sends a chain of messages containing the video every 46 milliseconds. Assuming these are single frames, it gives some 21-22 fps, which is more or less the same framerate as the video from the JJRC Elfie.

- The video link is negotiated through a brief TCP conversation through port 7070 that lasts for some 200 ms and contains the following:

    >> (from App to Quad)
    OPTIONS rtsp://192.168.1.1:7070/webcam RTSP/1.0
    CSeq: 1
    User-Agent: Lavf56.40.101

    << (from Quad to App)
    RTSP/1.0 200 OK
    CSeq: 1
    Public: DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE

    >>
    DESCRIBE rtsp://192.168.1.1:7070/webcam RTSP/1.0
    Accept: application/sdp
    CSeq: 2
    User-Agent: Lavf56.40.101

    <<
    RTSP/1.0 200 OK
    CSeq: 2
    Content-Base: rtsp://192.168.1.1:7070/webcam/
    Content-Type: application/sdp
    Content-Length: 122

    <<
    v=0
    o=- 1 1 IN IP4 127.0.0.1
    s=Test
    a=type:broadcast
    t=0 0
    c=IN IP4 0.0.0.0
    m=video 0 RTP/AVP 26
    a=control:track0

    >>
    SETUP rtsp://192.168.1.1:7070/webcam/track0 RTSP/1.0
    Transport: RTP/AVP/UDP;unicast;client_port=19398-19399
    CSeq: 3
    User-Agent: Lavf56.40.101

    <<
    RTSP/1.0 200 OK
    CSeq: 3
    Transport: RTP/AVP;unicast;client_port=19398-19399;server_port=55934-55935
    Session: 82838485868788898A8B8C8D8E8F90

    >>
    PLAY rtsp://192.168.1.1:7070/webcam/ RTSP/1.0
    Range: npt=0.000-
    CSeq: 4
    User-Agent: Lavf56.40.101
    Session: 82838485868788898A8B8C8D8E8F90

    <<
    RTSP/1.0 200 OK
    CSeq: 4
    Session: 82838485868788898A8B8C8D8E8F90

- Some kind of "heartbeat" is sent to another UDP port (55935, which is the video port + 1). A short message is sent there every second. I assume this is a way for the quad to know that there's an application reading the video stream. The TCP conversation above is plain RTSP negotiating an RTP stream, and in RTP the port right after the media port is conventionally the RTCP port, so this heartbeat is most likely just RTCP reports rather than anything vendor-specific.
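The 5-byte standby message is short enough to poke at by hand. As a minimal sketch (the function name `decode_standby` is hypothetical, and treating the last four bytes as an IPv4 address is only a guess), reading it this way gives an address on the same subnet as the `rtsp://192.168.1.1` URLs in the capture, which is different from the 192.168.0.x addresses noted in the first bullet:

```python
import ipaddress


def decode_standby(msg: bytes):
    """Split the 5-byte standby message into a leading byte and an IPv4 guess."""
    if len(msg) != 5:
        raise ValueError("expected exactly 5 bytes")
    # Assumption: byte 0 is some kind of type/flag, bytes 1-4 are an IPv4 address.
    return msg[0], ipaddress.IPv4Address(msg[1:5])


kind, addr = decode_standby(bytes.fromhex("08c0a80164"))
print(hex(kind), addr)  # 0x8 192.168.1.100
```

If that reading is right, the app may simply be telling the quad which address to talk to, but nothing in the capture confirms it.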

I'm a bit too lazy to go through the process of reverse-engineering all this stuff, but this quad seems to be more advanced and cheaper than the JJRC, which is an incentive to give it a go. I may start a new project if I have the strength.

-

Recording the video while watching it

07/29/2017 at 17:05 • 0 comments

Quick update. Dumping the raw h264 video from the quadcopter into a file is something I managed to do very soon after I got the "magic word" the quadcopter was expecting. The raw file has the .h264 extension and can be played by most software available. Still, it seems ffmpeg, vlc and gstreamer all fail to play it at the right framerate. One way I found to convert the raw .h264 into mp4 without reencoding (the quality of the video is bad enough without any reencoding) is to specify the frame rate in ffmpeg:

$ ffmpeg -r 24 -f h264 -i dump1.h264 -c copy dump1.mp4

I'm not 100% sure the actual frame rate is 24 frames/second [EDIT: I first thought it was 15] but the length of the video this gives more or less matches the real duration of the recording that I logged.

The video stream weighs in at about 63 kB/s.

-

Eureka! It works

07/09/2017 at 21:16 • 0 comments

I've been quite busy lately and I hadn't touched this project since March. This weekend I finally found the time to give a few ideas a try and I managed to get everything working! weee!

My original idea was to modify my early Python script, which dumps the video stream to stdout, so that it would send the video through a UDP or TCP socket to ffplay or VLC instead. This didn't work very well and made the aforementioned software crash or not play video at all (the video stream from the quadcopter is raw, it isn't packed in RTP or anything). Following these tests, I tried all sorts of things (mplayer, ffplay, gstreamer) and after a thousand random tries and lots of frustration I finally found a solution that works.

The solution is to dump the video stream to stdout using my original script and to pipe it into GStreamer, which decodes and plays the stream. The magic GStreamer pipeline looks like this:

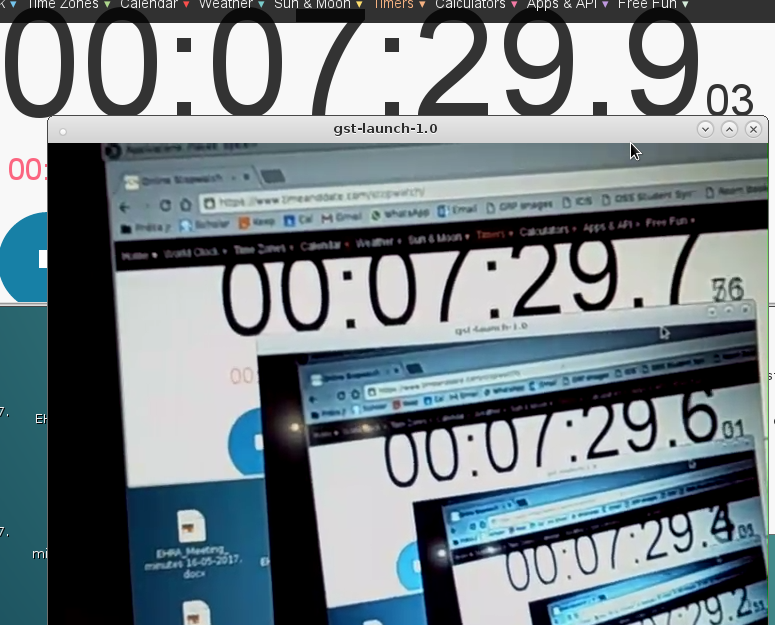

    python ./pull_video.py | gst-launch-1.0 fdsrc fd=0 ! h264parse ! avdec_h264 ! xvimagesink sync=false

This works like a charm and the solution is so simple that I feel a bit stupid for not having tried it earlier.

I did a "feedback" test in order to measure the time delay between REALITY and the video output and this is what I got:

As you can see from the picture, the lag is somewhere between 100 and 300 ms with a frame rate of about 5-7 frames per second. Not perfect but way way better than any video stream I've ever managed to set up :)

I believe I've achieved what I wanted to achieve when I started this project and therefore I will soon update the text in the description of the project and mark it as completed. I will perhaps make another "final words" post and point at other interesting "research" ideas for us JJRC Elfie nerds.

-

Will I ever be able to play the video stream?

03/26/2017 at 22:36 • 2 comments

I decided to write a bit more about my attempts to decode the video stream from the quadcopter. So far, I haven't achieved much but I learnt a bit about h264 and captured some video for experimenting. Writing a little program that reads the stream from the quadcopter and plays it in real time isn't rocket science; it should be easy for anyone with the patience to learn about libavcodec, but it isn't working for me yet.

I used the following code to dump the video from the quadcopter:

    import socket
    import sys

    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.connect(('172.16.10.1', 8888))
    # 106-byte "magic word" the app sends before the quadcopter starts streaming
    magicword = bytes.fromhex('495464000000580000009bf89049c926884d4f922b3b33ba7eceacef63f77157ab2f53e3f768ecd9e18547b8c22e21d01bfb6b3de325a27b8fb3acef63f77157ab2f53e3f768ecd9e185eb20be383aab05a8c2a71f2c906d93f72a85e7356effe1b8f5af097f9147f87e')
    s.sendall(magicword)
    data = s.recv(106)  # discard the 106-byte reply from the quadcopter
    n = 0
    while n < 10000:  # replace with "while True" if you want this to not stop
        data = s.recv(1024)
        sys.stdout.buffer.write(data)  # raw bytes, so write to the binary stdout
        n = n + 1
    s.close()

This operation takes about 107 seconds to complete. The generated file weighs 5.9 MB and contains about 1,364 frames (according to VLC). This means that the video stream of the quadcopter is about 54.35 KiB/s with an approximate frame rate of 12.76 fps. I recorded a video with the quadcopter facing a timer running on my tablet in order to measure the time. The video contains raw h264, which is made of a series of so-called NAL units. This can be played using VLC by telling VLC to use the h264 demuxer:

$ vlc video.bin --demux h264

The next thing I wanted to do was to see how many NAL units the video contained and what different types of units I would find in it. I ran the following script to search the recorded video for NAL units and list the headers of those units:

    with open('recording.bin', 'rb') as f:
        dump = f.read()

    start_code = bytes.fromhex('000001')
    p1 = dump.find(start_code)
    while p1 != -1:
        # print the 3-byte start code plus the 2 bytes that follow it
        print(dump[p1:(p1 + 5)].hex())
        p1 = dump.find(start_code, p1 + 1)

The output of this program shows the following: there are 3039 NAL units in my video, that's about 28.43 NAL units per second, which is about two times the number of frames per second. The next question I wanted to ask was how many different types of NAL units were in the video (because I know close to nothing about h264 and therefore I wonder about this type of thing). I counted the different NAL unit types using the following command:

    $ cat log.txt |sort |uniq -c |sort -nr

This gives the following output:

    1286 000001a000
    1286 000001419a
     116 000001a100
     116 00000168ee
     116 000001674d
     116 0000016588
       1 0000011600
       1 0000011200
       1 0000010600
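The search script and the `sort | uniq -c` step can also be folded into one pass. This is only a sketch of the same idea, not the code used for the numbers above; `count_nal_headers` is a hypothetical name and the sample bytes are made up for the demonstration:

```python
from collections import Counter

START_CODE = b"\x00\x00\x01"


def count_nal_headers(data: bytes, width: int = 5) -> Counter:
    """Count each distinct `width`-byte prefix that begins with a start code."""
    counts = Counter()
    pos = data.find(START_CODE)
    while pos != -1:
        counts[data[pos:pos + width].hex()] += 1
        pos = data.find(START_CODE, pos + 1)
    return counts


# Made-up sample: four start codes, two of them followed by 41 9a.
sample = bytes.fromhex("000001674d" "00" "00000168ee" "000001419a" "ff" "000001419a")
for header, n in count_nal_headers(sample).most_common():
    print(n, header)
```

On a real capture, `data` would be the contents of the recorded file read in binary mode.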

All relevant information about how h264 works can be found in this document: https://www.itu.int/rec/T-REC-H.264

In brief, the first byte after 01 contains the basic information about what type of NAL unit it is. The most significant bit of the byte is the forbidden bit and it should be 0, otherwise something is wrong with the encoder or the NAL unit is expected to be ignored. The next 2 bits are the nal_ref_idc and they have different meanings depending on the type of NAL unit. The least significant 5 bits are the nal_unit_type and they show what type of NAL unit we are facing. Going back to our list above, we get the following:

    1286 000001a000 --> a0 = 1010 0000 meaning forbidden=1, ref_idc=1 and unit_type=0
    1286 000001419a --> 41 = 0100 0001 meaning forbidden=0, ref_idc=2 and unit_type=1
     116 000001a100 --> a1 = 1010 0001 meaning forbidden=1, ref_idc=1 and unit_type=1
     116 00000168ee --> 68 = 0110 1000 meaning forbidden=0, ref_idc=3 and unit_type=8
     116 000001674d --> 67 = 0110 0111 meaning forbidden=0, ref_idc=3 and unit_type=7
     116 0000016588 --> 65 = 0110 0101 meaning forbidden=0, ref_idc=3 and unit_type=5
       1 0000011600 --> 16 = 0001 0110 meaning forbidden=0, ref_idc=0 and unit_type=22
       1 0000011200 --> 12 = 0001 0010 meaning forbidden=0, ref_idc=0 and unit_type=18
       1 0000010600 --> 06 = 0000 0110 meaning forbidden=0, ref_idc=0 and unit_type=6

I gave a quick look at the position of these NAL units in the timeline of the video, and more or less it can be summarised as follows:

- the a0 is a (wrong) short NAL unit and it always appears before 41. Their frequency of appearance seems to be constant and more or less matches the frame rate.

- a1, 68, 67 and 65 always appear together and they seem to appear regularly every 11-12 frames (every second?).

- 16, 12 and 06 appear rarely at what looks like random times in the video at a frequency that is less than once every minute.
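The bit-splitting described above is easy to check in a few lines. This is a minimal sketch with a hypothetical helper name (`parse_nal_header`); the type names come from the NAL unit type table in the H.264 specification and only the handful seen in this capture are listed:

```python
# Names for the nal_unit_type values that show up in the capture (H.264, Table 7-1).
NAL_TYPE_NAMES = {
    1: "coded slice, non-IDR",
    5: "coded slice, IDR",
    6: "SEI",
    7: "sequence parameter set",
    8: "picture parameter set",
}


def parse_nal_header(byte: int):
    """Return (forbidden_zero_bit, nal_ref_idc, nal_unit_type) for a header byte."""
    forbidden = (byte >> 7) & 0x01   # most significant bit
    ref_idc = (byte >> 5) & 0x03     # next two bits
    unit_type = byte & 0x1F          # least significant five bits
    return forbidden, ref_idc, unit_type


for b in (0xA0, 0x41, 0xA1, 0x68, 0x67, 0x65, 0x16, 0x12, 0x06):
    forbidden, ref_idc, unit_type = parse_nal_header(b)
    name = NAL_TYPE_NAMES.get(unit_type, "not named here")
    print(f"{b:02x}: forbidden={forbidden} ref_idc={ref_idc} unit_type={unit_type} ({name})")
```

Read this way, 67, 68 and 65 showing up together would be a parameter-set pair followed by an IDR (key) frame, and 41 would be the ordinary frames in between, which fits the "every 11-12 frames" pattern.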

Having looked at all this, the next logical step seems to be to use libavcodec and pass the data from the NAL units to the functions in this library upon arrival. I found a few examples online that use SDL to handle the graphical windows but I still need to figure out how to tell the software what codec to use. I'll keep you posted.

-

First attempts at reading the video stream

03/02/2017 at 23:33 • 0 comments

Today I played a bit more with the quadcopter. I wanted to dump the video stream somehow and find out how the video was encoded. My idea was to create a TCP socket to port 8888, write the 106-byte magic message that I saw the mobile app send before the quadcopter starts spitting out what looks like a video stream and then read whatever comes from the quadcopter. The following Python script can do this:

    import socket
    import sys

    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.connect(('172.16.10.1', 8888))
    # 106-byte message the app sends before the video starts
    magicword = bytes.fromhex('495464000000580000009bf89049c926884d4f922b3b33ba7eceacef63f77157ab2f53e3f768ecd9e18547b8c22e21d01bfb6b3de325a27b8fb3acef63f77157ab2f53e3f768ecd9e185eb20be383aab05a8c2a71f2c906d93f72a85e7356effe1b8f5af097f9147f87e')
    s.sendall(magicword)
    data = s.recv(106)  # discard the first 106 bytes the quadcopter returns
    n = 0
    while n < 1000:  # replace with "while True" if you want this to not stop
        data = s.recv(1024)
        sys.stdout.buffer.write(data)  # raw bytes, so write to the binary stdout
        n = n + 1
    s.close()

The result -almost- worked straightaway. You will notice in the code above that I discard the first 106 bytes that the quadcopter returns. This seems to correspond to one of these magic messages that the app and the quadcopter exchange and I have no idea what they mean. The rest of the data that comes from the quadcopter seems to be raw h264 video stored as a sequence of NAL units. I dumped the output I got and managed to play it in vlc using the following commands:

    $ python test.py > video.h264
    $ vlc video.h264 --demux h264

VLC successfully plays the video but complains about some errors in the headers.

My next step is to find a proper way to read the TCP stream and make some video player play it with the lowest possible lag. I'll keep you posted.
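One detail worth keeping in mind when reading the TCP stream "properly": `recv(106)` may return fewer than 106 bytes, in which case part of the reply would leak into the video dump. A minimal sketch of a helper that keeps reading until the requested count is reached follows; `recv_exact` is a hypothetical name, and the demonstration uses a local socket pair instead of the quadcopter:

```python
import socket


def recv_exact(sock, n):
    """Read exactly n bytes from a stream socket, or raise if it closes early."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed with %d bytes still missing" % remaining)
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


# Local demonstration: 106 bytes of fake "reply" followed by fake "video".
a, b = socket.socketpair()
a.sendall(b"x" * 106 + b"video")
header = recv_exact(b, 106)
rest = recv_exact(b, 5)
a.close()
b.close()
print(len(header), rest)
```

In the dump script this would replace the single `s.recv(106)` call; whether the reply is always exactly 106 bytes is still an assumption taken from the capture.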

-

[OLD REPOST] First attempt at "deciphering" the UDP comms

02/20/2017 at 11:19 • 0 comments

NOTE: this text was originally included in the description of the project and perhaps should have been a log update instead. I reposted it here even though it is outdated and the problems described here were solved later on.

I've only played with this for one or two hours and I haven't been able to decode the 8 byte message; however, a few bits seem to change when one enables things such as the "altitude hold" or the "headless mode" and a few of the bytes also change whenever the control levers in the screen (thrust, yaw, etc) move.

I tried following my normal startup sequence in the quadcopter, where I first enable the altitude hold, then I enable the on-screen controllers and then I "arm" the propellers by pressing the up arrow button in the screen. This leaves the quadcopter ready to take off. This sequence generates the following messages:

66 80 80 01 80 00 81 99 "idle state" (on-screen controllers are enabled but the quad is idle and the altitude hold is disabled)

66 80 80 80 80 00 00 99 "idle state with altitude hold" (the previous message changes to this message as soon as the altitude hold is enabled)

66 80 80 80 80 01 01 99 "arm propellers" (the previous message becomes this when the altitude hold is on, the on-screen controllers are on and the "up arrow" button is pressed in order to arm the motors. The message is sent some 20-22 times, for about a second).

66 80 80 80 80 04 04 99 "emergency stop" (this is the message that is sent starting from the idle state with altitude hold as soon as the emergency stop button is pressed. The message is sent some 20-22 times -for about a second-).
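With the benefit of the later logs, where byte 6 turned out to be the XOR of the five bytes before it and the field order was read as roll, pitch, throttle, yaw, the four messages above can be rebuilt from their fields. A minimal sketch, with `build_packet` as a hypothetical helper name:

```python
def build_packet(roll, pitch, throttle, yaw, commands=0):
    """Build the 8-byte control message: 0x66, four axes, commands, XOR, 0x99."""
    checksum = roll ^ pitch ^ throttle ^ yaw ^ commands
    # bytes() raises ValueError if any field falls outside 0-255.
    return bytes([0x66, roll, pitch, throttle, yaw, commands, checksum, 0x99])


print(build_packet(0x80, 0x80, 0x01, 0x80).hex())        # 6680800180008199 (idle)
print(build_packet(0x80, 0x80, 0x80, 0x80).hex())        # 6680808080000099 (altitude hold)
print(build_packet(0x80, 0x80, 0x80, 0x80, 0x01).hex())  # 6680808080010199 (arm)
print(build_packet(0x80, 0x80, 0x80, 0x80, 0x04).hex())  # 6680808080040499 (emergency stop)
```

All four captured messages come out byte for byte, which at least shows that the XOR reading is consistent with this startup sequence.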

Using the information described above, I put together a small Python script that opens a UDP connection to port 8895 in the quadcopter and writes the series of commands that I describe above. The sequence sends a message every 50 milliseconds and holds each message for a certain amount of time, replicating more or less the sequence I follow when I want to get the quadcopter ready to fly.

If you want to give the demo a try, take your laptop, make sure you don't have any browser/software running that is internet-hungry and will flood the quadcopter with useless requests, connect your laptop to the wifi of the quadcopter and run the application doing "python demo.py". What you should see is the quadcopter arming the motors and the propellers speeding up some 10 seconds after starting the app. The propellers will spin for a few seconds and then the program will turn them off and disconnect.

THE DEMO CODE

    import socket
    from time import sleep

    # The IP of the quadcopter plus the UDP port it listens to for control commands
    IPADDR = '172.16.10.1'
    PORTNUM = 8895

    # "idle" message, this is the first message the app sends to the quadcopter when you enable the controls
    PIDLE = bytes.fromhex('6680800180008199')
    # "altitude hold", this is what the app starts sending to the quadcopter once you enable the altitude hold
    PHOLD = bytes.fromhex('6680808080000099')
    # "arm", this is what the app sends to the quadcopter when you press the "up" arrow that makes the motors start to spin before taking off
    PSPIN = bytes.fromhex('6680808080010199')
    # this is the message the app sends to the quadcopter when you press the "emergency stop" button
    PSTOP = bytes.fromhex('6680808080040499')

    # initialize a socket
    # SOCK_DGRAM specifies that this is UDP
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, 0)

    # connect the socket
    s.connect((IPADDR, PORTNUM))

    # send a series of commands
    for n in range(0, 100):  # send the idle command for some 5 seconds
        s.send(PIDLE)
        sleep(0.05)  # the app sends a packet of 8 bytes every 50 milliseconds

    for n in range(0, 100):  # send the idle + altitude hold command for some 5 seconds
        s.send(PHOLD)
        sleep(0.05)

    for n in range(0, 21):  # send the "arm" command for 1 second (this is what the app seems to do when you press the up arrow to arm the quadcopter)
        s.send(PSPIN)
        sleep(0.05)

    for n in range(0, 100):  # send the idle + altitude hold command for some 5 seconds
        s.send(PHOLD)
        sleep(0.05)

    for n in range(0, 21):  # send the "emergency stop" command for 1 second
        s.send(PSTOP)
        sleep(0.05)

    for n in range(0, 200):  # send the idle + altitude hold command for some 10 seconds
        s.send(PHOLD)
        sleep(0.05)

    # close the socket
    s.close()
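The demo is essentially a timetable of "send this packet N times", so it can also be written as data plus one loop. The sketch below is only an illustration of that design choice; `run_sequence` and `STEPS` are hypothetical names, and the send and sleep functions are passed in so the sequence can be dry-run without a quadcopter:

```python
import time

PIDLE = bytes.fromhex("6680800180008199")
PHOLD = bytes.fromhex("6680808080000099")
PSPIN = bytes.fromhex("6680808080010199")
PSTOP = bytes.fromhex("6680808080040499")

# (packet, number of repeats), in the same order as the demo
STEPS = [(PIDLE, 100), (PHOLD, 100), (PSPIN, 21), (PHOLD, 100), (PSTOP, 21), (PHOLD, 200)]


def run_sequence(send, steps, period=0.05, sleep=time.sleep):
    """Send each packet `repeats` times, one per period; return the total time in seconds."""
    sent = 0
    for packet, repeats in steps:
        for _ in range(repeats):
            send(packet)
            sleep(period)
            sent += 1
    return sent * period


# Dry run: collect the packets in a list and skip the real waiting.
log = []
total = run_sequence(log.append, STEPS, sleep=lambda seconds: None)
print(len(log), round(total, 1))  # 542 27.1
```

For a real run, `send` would be the `send` method of the connected UDP socket and the default `time.sleep` would be kept.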

The first working app that enables joystick control from the PC

02/19/2017 at 21:55 • 0 comments

I've finally put together the first working app that reads the input from a USB joystick and sends the right commands to control the quadcopter. The application requires the pygame library and other basic Python dependencies, and I've configured it to use the axes and buttons that were convenient for my USB gamepad (a Logitech dual action). Basically, it uses the two analogue joysticks for the throttle+yaw and pitch+roll respectively, plus two buttons (7 and 8) for the "arm" and the "emergency stop" commands.

The procedure to make this work is quite simple: connect the computer to the wifi network of the quadcopter, connect the USB joystick and run the app (running with regular user permissions is ok).

    import socket
    import time
    from math import floor

    import pygame

    # The IP of the quadcopter plus the UDP port it listens to for control commands
    IPADDR = '172.16.10.1'
    PORTNUM = 8895

    def get():
        # pygame gives the analogue joystick position as a float that goes from -1 to 1;
        # the code below takes the readings and scales them so that they fit in the
        # 0-255 range the quadcopter expects.
        # Remove the *0.3 below to get 100% control gain rather than 30%.
        a = int(floor((j.get_axis(2) * 0.3 + 1) * 127.5))
        b = int(floor((-j.get_axis(3) * 0.3 + 1) * 127.5))
        c = int(floor((-j.get_axis(1) + 1) * 127.5))
        d = int(floor((j.get_axis(0) + 1) * 127.5))
        # only two buttons are used so far: "arm" and emergency stop
        commands = (j.get_button(6) << 2) | j.get_button(8)
        # header 0x66, four axes, commands, XOR checksum, trailer 0x99
        out = bytes([0x66, a, b, c, d, commands, a ^ b ^ c ^ d ^ commands, 0x99])
        pygame.event.pump()
        return out

    pygame.init()
    j = pygame.joystick.Joystick(0)
    j.init()

    # initialize a socket
    # SOCK_DGRAM specifies that this is UDP
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, 0)

    # connect the socket
    s.connect((IPADDR, PORTNUM))

    try:
        while True:
            s.send(get())
            time.sleep(0.05)
    finally:
        # close the socket when the loop is interrupted
        s.close()
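The scaling from joystick position to byte value is the part most worth testing away from the hardware. Below is a minimal sketch of that one step as a standalone function; `axis_to_byte` and its `gain` parameter are hypothetical names, and the clamping is an addition that the script above does not have:

```python
from math import floor


def axis_to_byte(value, gain=1.0):
    """Map a joystick reading in [-1, 1] to the 0-255 range, with an optional gain."""
    value = max(-1.0, min(1.0, value))  # clamp, in case a driver overshoots
    return int(floor((value * gain + 1) * 127.5))


print(axis_to_byte(-1.0), axis_to_byte(0.0), axis_to_byte(1.0))  # 0 127 255
print(axis_to_byte(-1.0, gain=0.3), axis_to_byte(1.0, gain=0.3))  # 89 165
```

Note that a centred stick maps to 127 here, while the phone app rests at 128 (0x80) in the captures; whether that one-count offset matters to the quadcopter is not something the logs settle.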

A basic script to monitor the controller input sent to the quadcopter by UDP

02/19/2017 at 20:03 • 0 comments

I'm in the process of making a small program to read the control input from a USB gamepad and send the commands to the quadcopter but first I wanted to make sure I got the UDP protocol right. Therefore, I wrote a small Python script that can be combined with airodump-ng and tcpdump in order to read what the phone app is sending to the quadcopter by UDP and translate the controller axis readings into something a human can read. The source code is attached below:

    import sys

    state = 0
    message = ""
    yaw = 0
    roll = 0
    pitch = 0
    throttle = 0
    commands = 0
    err = 0
    n = 0
    for line in sys.stdin:
        if state == 0:
            # first hex line of the packet: the UDP payload starts in the 7th group
            pos = line.find("0x0010:")
            if pos != -1:
                message = line[(pos+39):(pos+43)] + line[(pos+44):(pos+48)]
                roll = int(line[(pos+41):(pos+43)], 16)
                pitch = int(line[(pos+44):(pos+46)], 16)
                throttle = int(line[(pos+46):(pos+48)], 16)
                state = 1
        else:
            # second hex line of the packet: the rest of the payload
            state = 0
            pos = line.find("0x0020:")
            if pos != -1:
                message = message + line[(pos+9):(pos+13)] + line[(pos+14):(pos+18)]
                yaw = int(line[(pos+9):(pos+11)], 16)
                commands = int(line[(pos+11):(pos+13)], 16)
                err = int(line[(pos+14):(pos+16)], 16)  # checksum byte as sent by the app
                n = n + 1
                if n == 10:  # this is a little trick that only displays 1 out of 10 messages
                    sys.stdout.write(message+" roll:"+str(roll)+" pitch:"+str(pitch)+" throttle:"+str(throttle)+" yaw:"+str(yaw)+" commands:"+format(commands,"08b")+" err:"+format(roll^pitch^throttle^yaw^commands,'x')+'\n')
                    n = 0

In order for this to work, the following commands have to be executed first:

    # airmon-ng start wlan0
    # airodump-ng -c 2 mon0

The first command puts the wifi adapter into monitor mode and creates the monitor interface. I'm not sure if this can work while the network-manager is running, so I stopped the network-manager beforehand using /etc/init.d/network-manager stop (under Debian). The second command above starts sniffing the traffic in the wifi channel #2. At this point I would turn on the quadcopter, connect my phone to the quadcopter's wifi network and start the JJRC app, and the BSSID of the quadcopter should appear in the terminal where airodump is running. It will look like this:

    CH 2 ][ Elapsed: 1 min ][ 2017-02-19 18:58 ][ WPA handshake: 42:D1:A4:C1:E5:A2

    BSSID              PWR RXQ  Beacons    #Data, #/s  CH  MB    ENC   CIPHER  AUTH  ESSID
    01:C0:06:40:B2:32  -37   0      554     4901    0   2  54e.  OPN                 JJRC-A21494F
    A4:E7:22:14:5B:CD  -48  26      610        2    0   1  54e   WPA2  CCMP    PSK   BTHub6-RFPM

The next thing to do is to start tcpdump and pass the UDP traffic between the phone and the quadcopter to my Python script:

    # tcpdump -i 2 udp dst port 8895 -x -l | python ./my_script.py

This should produce an output that looks like this:

    66807f0180007e99 roll:128 pitch:127 throttle:1 yaw:128 commands:00000000 err:7e
    6667640180008299 roll:103 pitch:100 throttle:1 yaw:128 commands:00000000 err:82
    6665630180008799 roll:101 pitch:99 throttle:1 yaw:128 commands:00000000 err:87
    6665630180008799 roll:101 pitch:99 throttle:1 yaw:128 commands:00000000 err:87
    66807f0080007f99 roll:128 pitch:127 throttle:0 yaw:128 commands:00000000 err:7f
    66807f0080007f99 roll:128 pitch:127 throttle:0 yaw:128 commands:00000000 err:7f
    66807f0080007f99 roll:128 pitch:127 throttle:0 yaw:128 commands:00000000 err:7f
    66a6770080005199 roll:166 pitch:119 throttle:0 yaw:128 commands:00000000 err:51
    66a6770080005199 roll:166 pitch:119 throttle:0 yaw:128 commands:00000000 err:51
    6671620080009399 roll:113 pitch:98 throttle:0 yaw:128 commands:00000000 err:93
    666b840080006f99 roll:107 pitch:132 throttle:0 yaw:128 commands:00000000 err:6f
    66978e0080009999 roll:151 pitch:142 throttle:0 yaw:128 commands:00000000 err:99
    66807f0080007f99 roll:128 pitch:127 throttle:0 yaw:128 commands:00000000 err:7f
    66807f0080007f99 roll:128 pitch:127 throttle:0 yaw:128 commands:00000000 err:7f

By the way, I found out that the difference between the 30%, 60% and 100% settings in the app is the range of the roll and pitch control inputs one gets: they go from 1 to 255 in 100% mode, from 68 to 187 in 60% mode and from 88 to 167 in 30% mode. The throttle and yaw controllers are unaffected by the 30/60/100 mode setting.
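The same decoding can be done on the 8-byte payload directly, which also makes it easy to confirm that the sixth field is the XOR of the previous five. A minimal sketch, assuming the payload has already been extracted as bytes (`parse_packet` is a hypothetical name):

```python
def parse_packet(msg: bytes) -> dict:
    """Decode an 8-byte control message and check its XOR checksum."""
    if len(msg) != 8 or msg[0] != 0x66 or msg[7] != 0x99:
        raise ValueError("not a control message: %s" % msg.hex())
    roll, pitch, throttle, yaw, commands, checksum = msg[1:7]
    return {
        "roll": roll,
        "pitch": pitch,
        "throttle": throttle,
        "yaw": yaw,
        "commands": commands,
        "checksum_ok": checksum == (roll ^ pitch ^ throttle ^ yaw ^ commands),
    }


# One of the messages from the output listed above.
fields = parse_packet(bytes.fromhex("66a6770080005199"))
print(fields)
```

For that message the fields match the listing (roll 166, pitch 119, throttle 0, yaw 128) and the checksum test passes.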

A Debian-based Wi-Fi repeater

02/13/2017 at 23:07 • 0 comments

One of my first concerns as soon as I played with the Elfie for the first time was the limited range one could achieve due to the weak Wi-Fi connection between the quadcopter and the phone. I read somewhere that people had successfully used Wi-Fi repeaters to extend the range of the Parrot drones and I assumed there wasn't any reason why it wouldn't be possible to do the same with the Elfie (SPOILER: I was right).

I bought a random-branded USB Wi-Fi adapter + antenna combo with an alleged gain of 14 dBi (I haven't really checked whether the specifications are realistic) and I thought I'd use an old laptop running Debian to act as a Wi-Fi repeater between the toy and my phone.

![]()

After a lot of tries and plenty of frustration trying to use NetworkManager to create a Wi-Fi my phone could see and connect to ("Access Point" or "AP" mode rather than "Ad-Hoc") I found the following procedure that is more or less easy to set up without breaking my normal configuration:

[EDIT] the credit for the procedure goes to this excellent thread: http://askubuntu.com/questions/180733/how-to-setup-an-access-point-mode-wi-fi-hotspot

- First I shut down the network manager by doing

# /etc/init.d/network-manager stop

- Then I replaced the contents of /etc/network/interfaces with the following

    source /etc/network/interfaces.d/*

    auto lo
    iface lo inet loopback

    auto wlan1
    iface wlan1 inet dhcp
        # SSID of the Wi-Fi of the quadcopter
        wireless-essid JJRC-A3194E

- Then, I can make my laptop connect to the quadcopter using ifup:

    # ifup wlan1

- The next step is to create an access point using the second Wi-Fi card in the laptop. This can be done using hostapd. This wasn't installed in my computer by default, so I had to apt-get install it. Afterwards, I created a config file in /etc/hostapd/hostapd.conf containing the following self-explanatory definitions:

    interface=wlan0
    ssid=ElfieRepeater
    hw_mode=g
    channel=1
    macaddr_acl=0
    auth_algs=1
    ignore_broadcast_ssid=0
    wpa=3
    wpa_passphrase=1234567890
    wpa_key_mgmt=WPA-PSK
    wpa_pairwise=TKIP
    rsn_pairwise=CCMP

- The config file didn't exist at all and I had to modify another config file in order to make hostapd load my configuration. This was done by editing the file /etc/default/hostapd to include the following line:

DAEMON_CONF="/etc/hostapd/hostapd.conf"

- Once that was done, it was possible to start the "repeater" Wi-Fi network by starting the hostapd daemon the Debian way:

    # /etc/init.d/hostapd start

- The status of hostapd can be checked by using the command "status" rather than "start" with the same init.d script. The expected output should say that the service is active with no errors. Once this is active, the Wi-Fi network "ElfieRepeater" becomes visible to the mobile phone and one can connect to it. However, no DHCP server has been set up, so the phone won't get an IP address assigned and will show an error. This can be fixed by installing a DHCP server (I've read some people use isc-dhcp-server), but I didn't want to go through the process of setting that up, so I simply configured the IP of the Wi-Fi adapter on the laptop and manually configured the IP of the mobile phone on the phone itself. The first step, on the laptop, is:

    # ifconfig wlan0 10.10.0.1

Then in the mobile phone I chose "static" rather than "dhcp" in the advanced settings when connecting to the Wi-Fi and chose 10.10.0.2 as the IP of the phone and 10.10.0.1 as the gateway IP.

- The last step is to configure iptables in the laptop to forward the traffic it receives in wlan0 so that the phone can connect to the quadcopter through the repeater. This can be done using the following commands:

    # echo 1 | tee /proc/sys/net/ipv4/ip_forward
    # iptables -t nat -A POSTROUTING -s 10.10.0.0/16 -o wlan1 -j MASQUERADE

- Voilà! Now the JJRC application can be run from the phone and it should be able to connect to the Elfie as if the phone had been connected straight to the Elfie.
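Since the phone's address is typed in by hand, it is worth double-checking that it falls inside the source range of the MASQUERADE rule; otherwise its traffic would be forwarded without being rewritten. A small sanity check using the addresses from the steps above (the dictionary and its labels are just for illustration):

```python
import ipaddress

# Source range from the iptables rule and the addresses configured by hand.
nat_source = ipaddress.ip_network("10.10.0.0/16")
hosts = {"laptop wlan0": "10.10.0.1", "phone": "10.10.0.2"}

for name, ip in hosts.items():
    covered = ipaddress.ip_address(ip) in nat_source
    print(name, ip, "covered by NAT rule" if covered else "NOT covered")
```

Both addresses fall inside 10.10.0.0/16, so the rule as written covers the phone.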

If the aforementioned procedure fails because the forwarding doesn't work, the whole setup can be debugged by having the laptop connect to the Wi-Fi hotspot of the broadband modem instead and trying to load webpages from the mobile phone. It didn't give me much trouble, frankly.

The way to go back to the original network setup in the laptop is relatively easy to follow: stop hostapd, switch /etc/network/interfaces to its original content and start the network-manager as if nothing had happened.

Below are some pictures from the test I did: I put the quadcopter in a separate room and brought the antenna nearby while I connected the phone through the repeater Wi-Fi from a different room. I did some clumsy FPV flying trying to get the quadcopter to exit the room but ended up crashing it against a wall.

This is the Wi-Fi antenna+repeater thing mounted on a tripod. The USB cable is several metres long, which was convenient for the test:

![]()

Here's the laptop that acts as the Wi-Fi repeater and the mobile phone connected to the quadcopter through the laptop:

![]()

Here's a video capture from the quadcopter trying to leave the room through the door:

![]()

I was afraid that the repeater trick would add terrible latency to the communication, which would make this setup totally impractical. However, the latency didn't seem to be too bad. I haven't made any measurements but it feels as if the latency was lower than the update cycle of the controls (UDP packets are sent every 50 ms from the mobile phone to the quadcopter).

Final words:

The reason why I did all this was because I was concerned about the weak Wi-Fi connection curtailing the distance I am able to reach with the quadcopter. However, the trick allows a range of other things to be done, including:

- capturing the traffic between the phone and the quadcopter (since the Elfie's Wi-Fi is an open network, this was already possible using a Wi-Fi adapter in monitor mode).

- controlling the Elfie through the interwebs / lan (subject to latency, of course).

I wish it was possible to configure more things in the Elfie. If, for example, we had control over its Wi-Fi settings, we could make it connect to a Wi-Fi infrastructure rather than host its own network. This would allow the quadcopter to roam around a building or an entire campus, seamlessly jumping from one Wi-Fi hotspot to the next. I bet someone is doing that with small wheeled rovers; doing it with a cheap quadcopter is of course more challenging because of the lack of stability for autonomous flight.


-

First attempts at understanding how the video link works

02/04/2017 at 22:37 • 0 comments

I haven't done any further tests with the UDP controls, but I captured some more traffic in order to try to figure out how the video is transmitted between the quadcopter and the mobile phone. The communication seems to be established through port 8888 of the quadcopter; however, getting the video is not as simple as opening a stream with VLC. The traffic in Wireshark shows multiple connections being established. These always start with the mobile phone sending a message of 106 bytes to the quadcopter, and these messages seem to have a common header:

    0000  49 54 64 00 00 00 52 00 00 00 7a 34 b1 04 99 c6  ITd...R...z4....
    0010  b1 36 81 b7 be 83 83 4d de 4e 57 aa be 10 36 eb  .6.....M.NW...6.
    0020  d8 94 9b a8 5e 59 54 92 1f e9 3e 37 fa 70 d6 52  ....^YT...>7.p.R
    0030  e2 ae e0 91 10 9c 34 cf ea ff 57 aa be 10 36 eb  ......4...W...6.
    0040  d8 94 9b a8 5e 59 54 92 1f e9 ad ce 18 b0 c3 10  ....^YT.........
    0050  67 a8 36 f3 0e 06 d3 4b 60 bf 0a 78 6b bc b0 82  g.6....K`..xk...
    0060  cf 8e a2 0b 26 f8 31 52 8f ab                    ....&.1R..

    0000  49 54 64 00 00 00 52 00 00 00 05 a7 a9 0f b3 6e  ITd...R........n
    0010  cd 3f a2 ca 7e c4 8c a3 60 04 ac ef 63 f7 71 57  .?..~...`...c.qW
    0020  ab 2f 53 e3 f7 68 ec d9 e1 85 47 b8 c2 2e 21 d0  ./S..h....G...!.
    0030  1b fb 6b 3d e3 25 a2 7b 8f b3 ac ef 63 f7 71 57  ..k=.%.{....c.qW
    0040  ab 2f 53 e3 f7 68 ec d9 e1 85 b7 33 0f b7 c9 57  ./S..h.....3...W
    0050  82 fc 3d 67 e7 c3 a6 67 28 da d8 b5 98 48 c7 67  ..=g...g(....H.g
    0060  0c 94 b2 9b 54 d2 37 9e 2e 7a                    ....T.7..z

    0000  49 54 64 00 00 00 52 00 00 00 72 98 c0 38 9b c3  ITd...R...r..8..
    0010  72 a7 1a 17 4b d1 b5 14 b3 ad ac ef 63 f7 71 57  r...K.......c.qW
    0020  ab 2f 53 e3 f7 68 ec d9 e1 85 47 b8 c2 2e 21 d0  ./S..h....G...!.
    0030  1b fb 6b 3d e3 25 a2 7b 8f b3 ac ef 63 f7 71 57  ..k=.%.{....c.qW
    0040  ab 2f 53 e3 f7 68 ec d9 e1 85 b7 33 0f b7 c9 57  ./S..h.....3...W
    0050  82 fc 3d 67 e7 c3 a6 67 28 da d8 b5 98 48 c7 67  ..=g...g(....H.g
    0060  0c 94 b2 9b 54 d2 37 9e 2e 7a                    ....T.7..z

    0000  49 54 64 00 00 00 52 00 00 00 0c 76 da 94 66 a3  ITd...R....v..f.
    0010  54 68 65 15 92 8c fd 4d 70 c7 ac ef 63 f7 71 57  The....Mp...c.qW
    0020  ab 2f 53 e3 f7 68 ec d9 e1 85 47 b8 c2 2e 21 d0  ./S..h....G...!.
    0030  1b fb 6b 3d e3 25 a2 7b 8f b3 ac ef 63 f7 71 57  ..k=.%.{....c.qW
    0040  ab 2f 53 e3 f7 68 ec d9 e1 85 b7 33 0f b7 c9 57  ./S..h.....3...W
    0050  82 fc 3d 67 e7 c3 a6 67 28 da d8 b5 98 48 c7 67  ..=g...g(....H.g
    0060  0c 94 b2 9b 54 d2 37 9e 2e 7a                    ....T.7..z

    0000  49 54 64 00 00 00 58 00 00 00 9b f8 90 49 c9 26  ITd...X......I.&
    0010  88 4d 4f 92 2b 3b 33 ba 7e ce ac ef 63 f7 71 57  .MO.+;3.~...c.qW
    0020  ab 2f 53 e3 f7 68 ec d9 e1 85 47 b8 c2 2e 21 d0  ./S..h....G...!.
    0030  1b fb 6b 3d e3 25 a2 7b 8f b3 ac ef 63 f7 71 57  ..k=.%.{....c.qW
    0040  ab 2f 53 e3 f7 68 ec d9 e1 85 eb 20 be 38 3a ab  ./S..h..... .8:.
    0050  05 a8 c2 a7 1f 2c 90 6d 93 f7 2a 85 e7 35 6e ff  .....,.m..*..5n.
    0060  e1 b8 f5 af 09 7f 91 47 f8 7e                    .......G.~

Searching online, I found a Reddit thread from 10 months ago where some people discussed how to control a quadcopter from the PC. The model of the quadcopter was different but it also used port 8888 and it also used 106-byte messages. One of the redditors in the thread eventually published a Chrome app that was able to control the quadcopter.

I've had a look at the source code of the Chrome app. It doesn't have any comments, but it seems to blindly send the messages its authors saw the mobile app send to the quadcopter in order to establish the connection and get the video stream. The messages their application sends seem to be different from the ones the JJRC Elfie expects.
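One way to look for structure in these 106-byte messages is to cut them into a header plus fixed-size blocks and see which blocks repeat. The sketch below does that for the last message in the dump above; the 10-byte header and 16-byte block sizes are guesses read off the hex dump, and `split_message` is a hypothetical name:

```python
# The last of the five messages shown above, as one hex string.
MESSAGE = bytes.fromhex(
    "49546400000058000000"
    "9bf89049c926884d4f922b3b33ba7ece"
    "acef63f77157ab2f53e3f768ecd9e185"
    "47b8c22e21d01bfb6b3de325a27b8fb3"
    "acef63f77157ab2f53e3f768ecd9e185"
    "eb20be383aab05a8c2a71f2c906d93f7"
    "2a85e7356effe1b8f5af097f9147f87e"
)


def split_message(msg, header_len=10, block_len=16):
    """Split a message into a header and equal-sized blocks (sizes are assumptions)."""
    header, body = msg[:header_len], msg[header_len:]
    blocks = [body[i:i + block_len] for i in range(0, len(body), block_len)]
    return header, blocks


header, blocks = split_message(MESSAGE)
print("header:", header.hex())
for i, block in enumerate(blocks):
    twins = [j for j, other in enumerate(blocks) if j != i and other == block]
    note = " (same as block %s)" % twins if twins else ""
    print(i, block.hex() + note)
```

The second and fourth blocks come out identical, and the same pair of repeated 16-byte runs is visible at the same offsets in the other four messages. Identical 16-byte blocks can be a hint of a block cipher used without chaining, but that is speculation and nothing in the capture confirms it.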

I may try to identify all messages the JJRC app sends and see if I can get the video to open in VLC somehow but this is certainly not going to happen today. I will keep you posted.

Controlling a JJRC H37 Elfie quad from a PC

The JJRC Elfie Quadcopter comes with an Android/iOS app to control it from the phone. Can we control it from our own software?

adria.junyent-ferre
