An updated version of this project is available.

Why do we need a rotating camera for vegetation monitoring?

Whether you're a scientist like me, a farmer, or someone managing a park, you've probably noticed that extreme weather events are hitting us harder and more often, thanks to climate change. These events can seriously affect crops and vegetation: heavy rains can cause flooding, and droughts bring water stress and crop failures.

We have a few tools to track these impacts. Satellite imagery is the usual choice, but it has some big drawbacks: high-resolution satellite data is expensive, and cloud cover can limit its availability, especially during bad weather. Bad weather is also a problem for drones (UAVs), and even in good conditions it is very time-consuming to charge the batteries, plan and fly a mission, and compute an orthomosaic. Field cameras could be a great alternative, but they are usually designed to be installed in a fixed place with a restricted field of view.

Is it possible to build a rotating field camera that produces a daily map of the vegetation automatically and independently of the weather?

Building a first prototype:

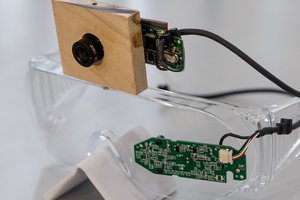

To test this concept, I built a simple first prototype with a Jetson Nano Developer Kit and a Raspberry Pi Camera Module 2. One servo rotates the platform (in blue PLA below) through a full 360 degrees, while a second servo tilts the camera up and down. A Python script controls the camera, takes the pictures, and stores them on a USB pen drive.
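The capture routine boils down to a simple loop: move the platform one step, wait for it to settle, take a picture, save it. The sketch below shows that structure. It is not the author's script; `rotate_to` and `capture` are hypothetical hooks standing in for whatever servo and camera drivers are actually used, and the output directory (for example a folder on the mounted pen drive) is an assumption.

```python
import time
from pathlib import Path
from typing import Callable


def capture_sweep(
    rotate_to: Callable[[float], None],  # hypothetical hook: move the pan servo to an angle
    capture: Callable[[], bytes],        # hypothetical hook: return one encoded JPEG frame
    out_dir: Path,                       # e.g. a folder on the mounted USB pen drive (assumed)
    n_shots: int = 72,
    settle_s: float = 0.5,
) -> list[Path]:
    """Rotate through a full circle in equal steps and save one frame per step."""
    out_dir.mkdir(parents=True, exist_ok=True)
    step = 360.0 / n_shots
    saved = []
    for i in range(n_shots):
        pan_deg = i * step
        rotate_to(pan_deg)
        time.sleep(settle_s)  # let the platform stop wobbling before the exposure
        # Encode index and nominal angle in the file name for the stitching step.
        path = out_dir / f"img_{i:03d}_{pan_deg:06.1f}.jpg"
        path.write_bytes(capture())
        saved.append(path)
    return saved
```

Keeping the hardware behind two small callables makes the loop easy to test on a laptop with fake functions before running it on the Jetson.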

An Arduino Nano clone, connected to the Jetson over USB, controls both servos. The Jetson Nano board is fitted with a Wi-Fi module that connects to these massive antennas. The camera is powered by a USB power bank and has a cooling fan, because without it the Jetson Nano tends to send out overheating alarms.
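Over USB the Arduino shows up as a serial port, so the Jetson only needs to send short text commands. The exact protocol is not described here; the sketch below assumes a hypothetical one-line ASCII format, `P<value>` for the pan servo and `T<value>` for the tilt servo, with a value from 0 to 180. A matching Arduino sketch (not shown) would read each line and pass the number to `Servo.write()`, which treats it as an angle for a standard servo and as speed and direction for a continuous-rotation servo.

```python
from typing import BinaryIO


def encode_command(axis: str, value: int) -> bytes:
    """Encode one servo command as an ASCII line (hypothetical format, e.g. T45 + newline)."""
    if axis not in ("P", "T"):
        raise ValueError(f"unknown axis {axis!r}; expected 'P' (pan) or 'T' (tilt)")
    if not 0 <= value <= 180:
        raise ValueError("Servo.write() expects a value between 0 and 180")
    return f"{axis}{value}\n".encode("ascii")


def send_command(port: BinaryIO, axis: str, value: int) -> None:
    """Write one command to an open serial port (or any binary file-like object)."""
    port.write(encode_command(axis, value))
    port.flush()
```

With pyserial the port could be opened as `serial.Serial("/dev/ttyUSB0", 9600)` (device name and baud rate are assumptions). Opening the port usually resets an Arduino Nano, so it is best to open it once, wait briefly, and keep it open for the whole sweep.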

Taking pictures:

The camera can connect to a Wi-Fi hotspot created with a smartphone or a laptop. It then hosts a dashboard that can be accessed from a browser. For now the dashboard is very simple, with a single magic button: "Start taking pictures!"
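A one-button dashboard does not need a web framework. The following is a minimal sketch using only Python's standard `http.server` module; it is not necessarily how the author's dashboard works. `start_capture` is a hypothetical callback (for example a function that runs the picture sweep), and it is started in a background thread so the browser gets an answer immediately.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

PAGE = b"""<!doctype html>
<html><body>
<form method="post" action="/start">
  <button type="submit">Start taking pictures!</button>
</form>
</body></html>"""


def make_handler(start_capture):
    """Build a handler that serves the page and runs start_capture on POST /start."""

    class DashboardHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            self._send(200, PAGE)

        def do_POST(self):
            if self.path != "/start":
                self._send(404, b"not found")
                return
            # Run the sweep in the background so the HTTP request returns at once.
            threading.Thread(target=start_capture, daemon=True).start()
            self._send(200, b"Capture started")

        def _send(self, status, body):
            self.send_response(status)
            self.send_header("Content-Type", "text/html; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the console quiet

    return DashboardHandler
```

On the camera this could be started with `ThreadingHTTPServer(("0.0.0.0", 8080), make_handler(run_sweep)).serve_forever()`, where port 8080 and `run_sweep` are placeholders; binding to `0.0.0.0` makes the page reachable from the phone or laptop on the same hotspot.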

When the "Start taking pictures!" button is pressed, the camera takes 72 pictures while it rotates 360 degrees around its own axis. The rotation control doesn't work perfectly yet, mainly because there is no position feedback at all. The next version will include a rotary encoder so that the camera knows its own position and can be positioned precisely.
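72 pictures over a full turn means one picture every 5 degrees. A quick check of how much neighbouring frames overlap, using the Camera Module 2's specified horizontal field of view of 62.2° and assuming the camera is mounted in landscape orientation, is sketched below, together with how a rotary encoder reading could be turned into a platform angle. The encoder resolution in the example is hypothetical.

```python
CAMERA_HFOV_DEG = 62.2  # horizontal field of view of the Raspberry Pi Camera Module 2


def sweep_geometry(n_shots: int = 72, hfov_deg: float = CAMERA_HFOV_DEG) -> tuple[float, float]:
    """Return (step between shots in degrees, horizontal overlap of neighbouring frames).

    Simple approximation: ignores lens distortion and the effect of tilting the camera.
    """
    step = 360.0 / n_shots
    overlap = 1.0 - step / hfov_deg
    return step, overlap


def encoder_angle(counts: int, counts_per_rev: int) -> float:
    """Convert an accumulated encoder count into a platform angle in [0, 360)."""
    # Python's % always returns a non-negative result here, so negative counts wrap correctly.
    return (counts % counts_per_rev) * 360.0 / counts_per_rev
```

`sweep_geometry()` gives a 5° step and roughly 92 % overlap, which is far more than stitching usually needs; that generous overlap is what makes the imprecise, feedback-free rotation tolerable. With an encoder of, say, 2400 counts per revolution, `encoder_angle(1200, 2400)` would report 180°.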

Once the images are recorded, another Python script takes over and stitches them into a 360-degree panorama. This is quite computationally intensive, which is also why this project uses a Jetson Nano rather than a Raspberry Pi.
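To put "computationally intensive" into numbers, here is a back-of-the-envelope sketch. It estimates how wide a full 360° panorama becomes at the frames' native angular resolution (using the Camera Module 2's 62.2° horizontal field of view) and how much memory the 72 decoded 8-bit RGB frames alone occupy. The resolutions are the sensor's full 3280 × 2464 mode and its half-size 1640 × 1232 mode; which one the author actually used is not stated.

```python
def panorama_width_px(frame_width_px: int, hfov_deg: float = 62.2) -> int:
    """Width of a 360-degree panorama that keeps the frames' pixels-per-degree."""
    return round(frame_width_px * 360.0 / hfov_deg)


def raw_frames_bytes(n_frames: int, width: int, height: int, channels: int = 3) -> int:
    """Memory for holding all decoded 8-bit frames at once (a lower bound for stitching)."""
    return n_frames * width * height * channels


full = raw_frames_bytes(72, 3280, 2464)  # about 1.75 GB
half = raw_frames_bytes(72, 1640, 1232)  # about 0.44 GB
```

Feature detection, matching, warping, and blending all need extra working memory on top of these figures, and the Jetson Nano Developer Kit has 4 GB (2 GB on the 2GB variant) shared between CPU and GPU. That makes it plausible why full-resolution frames caused memory trouble. At half resolution the panorama is still about 9,500 pixels wide.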

Finally, the 360-degree panorama is re-projected to a nadir-view map, comparable to a map generated from drone images.
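The idea behind the nadir re-projection is plain geometry: if the camera sits at a known height above flat ground, every ground point at distance r and compass direction θ is seen at azimuth θ and at an angle of atan(height / r) below the horizon. Walking over every cell of the output map, computing that viewing direction, and looking up the matching panorama pixel gives a top-down map. The sketch below does this with NumPy and nearest-neighbour sampling. It is not the author's implementation, and it assumes an equirectangular panorama whose columns span 0 to 360° clockwise from north and whose rows span elevation linearly from `elev_top_deg` (first row) to `elev_bottom_deg` (last row); camera height, map size, and ground resolution are illustrative parameters.

```python
import numpy as np


def panorama_to_nadir(
    pano: np.ndarray,
    cam_height_m: float,
    elev_top_deg: float,
    elev_bottom_deg: float,
    map_size_px: int = 501,
    gsd_m: float = 0.05,
) -> np.ndarray:
    """Re-project an equirectangular 360-degree panorama onto a flat ground plane.

    Assumes a flat ground and a camera cam_height_m above it at the map centre.
    Map cells outside the panorama's elevation range stay 0.
    """
    h, w = pano.shape[:2]
    half = map_size_px // 2
    idx = np.arange(map_size_px) - half
    x = idx[np.newaxis, :] * gsd_m   # east, increasing to the right
    y = -idx[:, np.newaxis] * gsd_m  # north, increasing upwards
    r = np.hypot(x, y)               # horizontal distance from the camera

    # Viewing direction of each ground cell as seen from the camera.
    azimuth = np.degrees(np.arctan2(x, y)) % 360.0        # 0 = north, clockwise
    elevation = -np.degrees(np.arctan2(cam_height_m, r))  # negative = below horizon

    # Inverse mapping into panorama pixel coordinates (nearest neighbour).
    col = np.floor(azimuth / 360.0 * w).astype(int) % w
    row_f = (elev_top_deg - elevation) / (elev_top_deg - elev_bottom_deg) * (h - 1)
    visible = (row_f >= 0) & (row_f <= h - 1)
    row = np.clip(np.rint(row_f), 0, h - 1).astype(int)

    nadir = np.zeros((map_size_px, map_size_px) + pano.shape[2:], dtype=pano.dtype)
    nadir[visible] = pano[row[visible], col[visible]]
    return nadir
```

Because ground resolution falls off quickly with distance (a fixed angular step covers more ground the flatter the viewing angle), the far edge of such a map is much blurrier than the area near the camera, which is one reason these maps are hard to match against a drone orthomosaic.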

Testing the prototype in the field:

Even with this simple first prototype, I could take a first set of images outdoors. I also made sure to collect some reference data with a drone: I use a DJI Mavic 3 Enterprise with an RGB and a thermal camera, and I mosaic the images with WebODM to produce a reference map. The orange vest is not a fashion choice but a requirement if I want to fly drones on campus ;)

Here are some first results:

The images recorded with the Raspberry Pi camera are OK but can be improved. I didn't use the highest resolution, to avoid memory issues when stitching the panorama later. For some reason the images were saved upside down; I fixed this problem after the field test.
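Both issues can also be handled in post-processing. How the author fixed the orientation is not stated; the sketch below shows one way with NumPy: an upside-down image is a 180° rotation, which simply reverses both the row and the column order, and halving the resolution by averaging 2 × 2 pixel blocks reduces memory use by a factor of four. OpenCV users can get the same rotation with `cv2.rotate(img, cv2.ROTATE_180)`.

```python
import numpy as np


def fix_orientation(frame: np.ndarray) -> np.ndarray:
    """Undo an upside-down image: a 180-degree rotation reverses rows and columns."""
    return frame[::-1, ::-1]


def bin2x2(frame: np.ndarray) -> np.ndarray:
    """Halve width and height by averaging 2x2 pixel blocks, keeping the input dtype."""
    h, w = frame.shape[:2]
    h2, w2 = h // 2 * 2, w // 2 * 2  # drop an odd last row/column
    blocks = frame[:h2, :w2].reshape(h2 // 2, 2, w2 // 2, 2, *frame.shape[2:])
    return blocks.mean(axis=(1, 3)).round().astype(frame.dtype)
```

Averaging, rather than just taking every second pixel, also reduces sensor noise slightly, which can help feature matching during stitching.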

Next, the panorama was created. It still has a lot of room for improvement, but it works.

Finally, we re-project this panorama to a nadir-view map that we can later compare to the orthomosaic generated from the drone images. This map is far from perfect, and I will keep updating this page with, hopefully, much better nadir-view maps. There is still a long way to go, but the principle works.

Florian Ellsäßer