Introduction

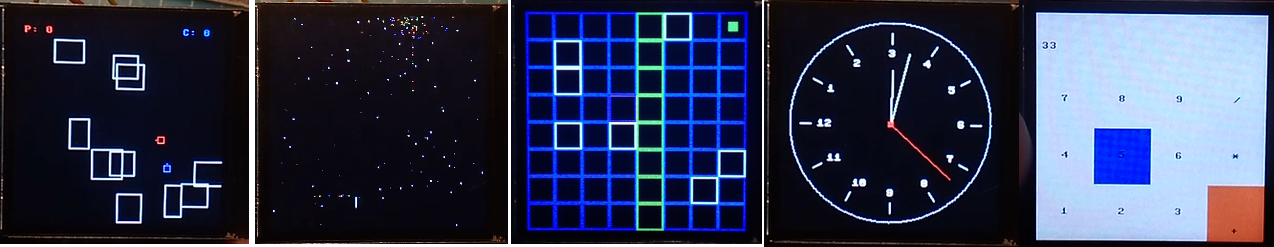

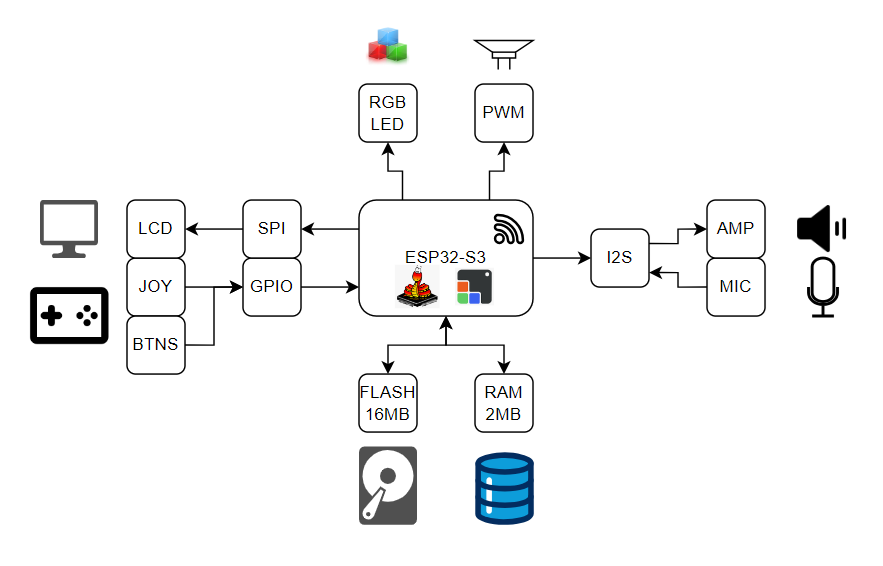

This project implements a chat interface with voice input and output, running on a microcontroller with a display. It uses separate modules for Wi-Fi connectivity, audio processing, and UI rendering. The script sends the conversation to Anthropic's Claude model to generate responses and can execute tools when the model requests them.

Main Components

Imports and Initializations

- Imports necessary modules and initializes hardware and UI components.

- Sets up Wi-Fi connection using provided credentials.

User Interface

- Utilizes the LVGL library for creating a graphical user interface.

- Includes a chat container, status label, and a record button.

Audio Processing

- Implements recording and playback functionalities.

- Uses OpenAI's API for speech-to-text conversion.

AI Interaction

- Communicates with Anthropic's Claude model through Anthropic's API.

- Supports tool execution based on AI responses.

Asynchronous Operations

- Uses `asyncio` for managing concurrent tasks.

Key Functionalities

1. UI Initialization (init_ui())

- Creates the main screen with a chat container, status label, and record button.

- Sets up joystick controls for scrolling.

2. Message Handling

- `add_message()`: Adds messages to the chat container.

- `remove_accents()`: Removes accents from text for display purposes.
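The source does not show how `remove_accents()` works. A minimal sketch, assuming the display font lacks accented glyphs and that a fixed lookup table for common Spanish characters is enough (`ACCENT_MAP` is a hypothetical name). MicroPython does not include `unicodedata`, so Unicode normalization is not an option on the device:

```python
# Hypothetical table mapping accented characters to plain ASCII equivalents.
ACCENT_MAP = {
    "á": "a", "é": "e", "í": "i", "ó": "o", "ú": "u", "ü": "u", "ñ": "n",
    "Á": "A", "É": "E", "Í": "I", "Ó": "O", "Ú": "U", "Ü": "U", "Ñ": "N",
}


def remove_accents(text):
    """Replace accented characters; characters not in the table pass through."""
    return "".join(ACCENT_MAP.get(ch, ch) for ch in text)


print(remove_accents("Canción de acción"))  # → Cancion de accion
```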

3. Input Processing

- Monitors button presses for recording and UI interaction.

- Converts speech to text using OpenAI's API.

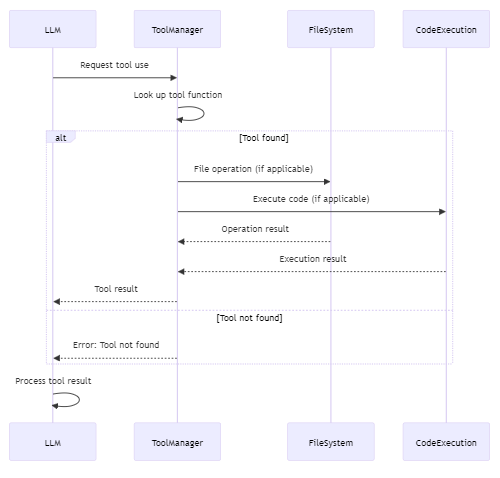

4. AI Interaction

- Sends user messages to the AI model.

- Processes AI responses, including tool use requests.

5. Tool Execution

- Supports file reading, writing, and execution tools.

- Handles tool results and feeds them back to the AI.
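One way this round trip could look, assuming responses follow the Anthropic Messages API format (`tool_use` content blocks answered by `tool_result` blocks in a user turn). `run_tool_calls` and the name-to-function registry are hypothetical, not the project's actual code:

```python
def run_tool_calls(response, tools, messages):
    """Run every tool_use block in a Messages API response.

    tools: hypothetical registry mapping tool names to Python functions.
    messages: conversation history, extended in place.
    Returns True if any tool ran, meaning the model should be called again.
    """
    content = response.get("content", [])
    # Keep the assistant turn, including its tool_use blocks, in the history.
    messages.append({"role": "assistant", "content": content})

    results = []
    for block in content:
        if block.get("type") != "tool_use":
            continue
        func = tools.get(block["name"])
        try:
            output = func(**block["input"]) if func else "Error: unknown tool"
        except Exception as e:
            output = "Error: %s" % e
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],  # ties the result to its request
            "content": str(output),
        })

    if results:
        # All tool results go back to the model in a single user turn.
        messages.append({"role": "user", "content": results})
    return bool(results)
```

Returning `True` lets the caller loop: call the model again with the updated `messages` until a reply contains no `tool_use` blocks.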

6. Audio Playback

- Converts AI responses to speech and plays them back.

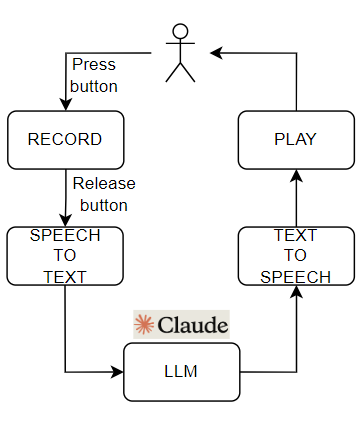

7. Main Loop (main())

- Orchestrates the entire conversation flow:
  - Records user input
  - Converts speech to text
  - Sends text to AI
  - Processes AI response (including tool use)
  - Converts AI response to speech
  - Plays back the response
  - Repeats the process
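The flow above can be sketched as a loop over injected async helpers. The names `record`, `transcribe`, `ask_llm`, and `speak` are placeholders for the project's own functions, and `turns` is a hypothetical parameter added so the loop can be exercised off-device:

```python
import asyncio


async def conversation_loop(record, transcribe, ask_llm, speak, turns=None):
    """Run record -> transcribe -> LLM -> speak, repeating.

    Each argument is an async callable standing in for the project's own
    helpers. `turns` caps the number of cycles; None loops forever.
    """
    messages = []
    count = 0
    while turns is None or count < turns:
        audio = await record()              # record user input
        text = await transcribe(audio)      # speech to text
        messages.append({"role": "user", "content": text})
        reply = await ask_llm(messages)     # LLM call, including any tool use
        messages.append({"role": "assistant", "content": reply})
        await speak(reply)                  # text to speech and playback
        count += 1
    return messages
```

On the device, `main()` would correspond to running this with `turns=None` under `asyncio.run`.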

Usage

The script is designed to run on a microcontroller with the necessary hardware components (display, buttons, microphone, speaker). It creates an interactive chat interface where users can have voice conversations with an AI assistant.

Dependencies

- `wifi`, `secrets`, `asyncio`

- Custom modules: `hal_utils`, `api_utils`, `i2s_utils`, `prompt_utils`, `lvgl_utils`

- LVGL library for UI

- OpenAI and Anthropic APIs for AI and speech processing

LLM and audio APIs

Overview

The api_utils.py file contains utility functions for interacting with various APIs, primarily focused on AI language models and speech processing. It provides asynchronous functions for tasks such as communicating with AI models, text-to-speech conversion, and speech-to-text transcription.

Functions

1. llm(api_key, messages, max_tokens=8192, temp=0, system_prompt=None, tools=None)

Interacts with Anthropic's Claude language model to generate responses.

Parameters:

- `api_key` (str): The API key for authentication.
- `messages` (list): The conversation history.
- `max_tokens` (int, optional): Maximum number of tokens in the response. Default is 8192.
- `temp` (float, optional): Temperature for response generation. Default is 0.
- `system_prompt` (str, optional): A system prompt to guide the AI's behavior.
- `tools` (list, optional): A list of tools the AI can use.

Returns: JSON response from the API or None if an error occurs.
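For orientation, this is roughly the request such a function sends, assuming it targets Anthropic's Messages endpoint with `claude-3-5-sonnet-20240620` (the model named in the usage notes). `build_llm_request` is a hypothetical helper; it only assembles the pieces and performs no network call:

```python
import json

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"


def build_llm_request(api_key, messages, max_tokens=8192, temp=0,
                      system_prompt=None, tools=None):
    """Return (url, headers, body) for a Messages API call (sketch)."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    payload = {
        "model": "claude-3-5-sonnet-20240620",
        "max_tokens": max_tokens,
        "temperature": temp,
        "messages": messages,
    }
    # Optional fields are only sent when provided.
    if system_prompt:
        payload["system"] = system_prompt
    if tools:
        payload["tools"] = tools
    return ANTHROPIC_URL, headers, json.dumps(payload)
```

The three values would then go to an `aiohttp` `ClientSession.post(url, headers=headers, data=body)` call, and `await resp.json()` yields the JSON response described above.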

2. text_to_speech(api_key, text, acallback=None)

Converts text to speech using OpenAI's API.

Parameters:

- `api_key` (str): The API key for authentication.
- `text` (str): The text to convert to speech.
- `acallback` (function, optional): An asynchronous callback function to handle the response.

Returns: Audio data as bytes or None if an error occurs. If a callback is provided, it returns None.
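The `acallback` contract can be illustrated independently of the HTTP layer. In this sketch `deliver_audio` is hypothetical and `chunks` stands in for the streamed response body: without a callback the chunks are concatenated and returned, with one each chunk is forwarded and the function returns `None`:

```python
import asyncio


async def deliver_audio(chunks, acallback=None):
    """Return all audio bytes, or stream each chunk to `acallback`.

    chunks: async iterator of bytes, standing in for the streamed HTTP body.
    """
    if acallback is None:
        data = bytearray()
        async for chunk in chunks:
            data.extend(chunk)
        return bytes(data)
    # Streaming mode: the callback consumes audio as it arrives.
    async for chunk in chunks:
        await acallback(chunk)
    return None
```

Streaming presumably lets playback start before the whole clip has downloaded, which also avoids holding the complete audio in RAM.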

3. speech_to_text(api_key, bytes_io)

Transcribes speech to text using OpenAI's API.

Parameters:

- `api_key` (str): The API key for authentication.
- `bytes_io` (io.BytesIO): Audio data as a BytesIO object.

Returns: Transcribed text as a string.

Raises: Exception if the API request fails.

4. atranscribe(api_key, bytes_io) (Deprecated)

A deprecated function for transcribing speech to text. It's recommended to use speech_to_text instead.

Helper Class

FormData

A utility class for constructing multipart form-data for API requests.

- Methods:
  - `__init__()`: Initializes the FormData object.
  - `add_field(name, value, filename=None, content_type=None)`: Adds a field to the form data.
  - `encode()`: Encodes the form data into bytes.
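A sketch of an encoder with the interface listed above, not the project's actual class. The fixed boundary string and the extra `content_type` attribute are assumptions. The usage lines show how `speech_to_text` might build its request, assuming the `whisper-1` model (the source does not name the model):

```python
class FormData:
    """Minimal multipart/form-data encoder (sketch of the interface above)."""

    def __init__(self, boundary="----micropython-form-boundary"):
        self.boundary = boundary
        self.fields = []
        # Value for the request's Content-Type header (assumed attribute).
        self.content_type = "multipart/form-data; boundary=" + boundary

    def add_field(self, name, value, filename=None, content_type=None):
        self.fields.append((name, value, filename, content_type))

    def encode(self):
        parts = []
        for name, value, filename, content_type in self.fields:
            disposition = 'form-data; name="%s"' % name
            if filename:
                disposition += '; filename="%s"' % filename
            head = "--%s\r\nContent-Disposition: %s\r\n" % (self.boundary, disposition)
            if content_type:
                head += "Content-Type: %s\r\n" % content_type
            head += "\r\n"  # blank line separates part headers from the body
            if isinstance(value, str):
                value = value.encode()
            parts.append(head.encode() + bytes(value) + b"\r\n")
        # Closing delimiter: boundary followed by two dashes.
        parts.append(("--%s--\r\n" % self.boundary).encode())
        return b"".join(parts)


# Possible use in speech_to_text (model name is an assumption):
form = FormData()
form.add_field("model", "whisper-1")
# In practice the value would be bytes_io.getvalue(); placeholder bytes here.
form.add_field("file", b"RIFF....", filename="audio.wav", content_type="audio/wav")
body = form.encode()
```

The encoded `body` would be posted to OpenAI's `https://api.openai.com/v1/audio/transcriptions` endpoint with `Content-Type` set to `form.content_type` and an `Authorization: Bearer <api_key>` header.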

Usage Notes

- All main functions (`llm`, `text_to_speech`, `speech_to_text`) are asynchronous and should be used with `await` in an asynchronous context.

- The `llm` function is designed to work with a specific version of Claude (`claude-3-5-sonnet-20240620`) and includes support for tool use.

- The `text_to_speech` function is set to generate Spanish speech by default. Modify the `language` parameter in the payload if a different language is needed.

- The `FormData` class is used internally by `speech_to_text` to prepare the audio data for the API request.

- Error handling is implemented in each function, with errors printed to the console. In production, you might want to implement more robust error handling and logging.

- The deprecated `atranscribe` function uses a lower-level networking approach. It's kept for reference but should not be used in new code.

Dependencies

- `aiohttp`: For making asynchronous HTTP requests.
- `json`: For JSON encoding and decoding.
- `io`: For working with byte streams.

Ensure these dependencies are installed and available in your environment when using this module.

LLM Tools

Overview

The tools_default.py file contains utility functions and their descriptions for performing various file operations and running tests. These tools are designed to be used within a larger system, possibly as part of an AI-assisted development environment.

Tool Descriptions and Functions

1. Read File

- Description variable: `read_file_tool_desc`

- Function: `read_file_tool(file_path)`

Reads the contents of a specified file.

- Input: `file_path` (str): The path to the file to be read, including the file name and extension.

- Output: The content of the file as a string, or an error message if the operation fails.
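A minimal sketch of the read tool, assuming errors are returned as strings (as the Output description says) rather than raised; the exact error wording is an assumption:

```python
def read_file_tool(file_path):
    """Return the file's text, or an error message instead of raising."""
    try:
        with open(file_path, "r") as f:
            return f.read()
    except Exception as e:
        # Errors come back as text so the model can see what went wrong.
        return "Error reading %s: %s" % (file_path, e)
```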

2. Write File

- Description variable: `write_file_tool_desc`

- Function: `write_file_tool(file_path, data)`

Writes data to a specified file.

- Input:
  - `file_path` (str): The path to the file to be written to, including the file name and extension.
  - `data` (str): The data to be written to the file.

- Output: A success message with the file path, or an error message if the operation fails.
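A matching sketch of the write tool, under the same assumption that failures are reported as text; the success message wording is hypothetical:

```python
def write_file_tool(file_path, data):
    """Overwrite file_path with data; report success or failure as text."""
    try:
        with open(file_path, "w") as f:
            f.write(data)
        return "File written successfully: " + file_path
    except Exception as e:
        return "Error writing %s: %s" % (file_path, e)
```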

3. Run File

- Description variable: `run_file_tool_desc`

- Function: `run_file_tool(file_path)`

Executes a specified Python file and returns its output.

- Input: `file_path` (str): The path to the Python file to be executed, including the file name and extension.

- Output: The last 1024 characters of the file's execution output, or an error message if the operation fails.
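The original captures output with MicroPython's `os.dupterm`. As a CPython analogue, this sketch uses `contextlib.redirect_stdout` instead, and it runs the file in a fresh namespace dictionary rather than the global one (a deliberate difference that sidesteps the global-state risk noted under Usage Notes). `OUTPUT_LIMIT` is a hypothetical name for the 1024-character cap:

```python
import contextlib
import io

OUTPUT_LIMIT = 1024  # keep only the tail of the output to save tokens


def run_file_tool(file_path):
    """Execute a Python file; return the last OUTPUT_LIMIT chars of its output."""
    buf = io.StringIO()
    try:
        with open(file_path) as f:
            source = f.read()
        with contextlib.redirect_stdout(buf):
            # Fresh namespace: the script cannot overwrite this module's globals.
            exec(compile(source, file_path, "exec"), {"__name__": "__main__"})
    except Exception as e:
        # Output printed before the failure is kept, followed by the error.
        buf.write("Error running %s: %s" % (file_path, e))
    return buf.getvalue()[-OUTPUT_LIMIT:]
```

Keeping the tail rather than the head means tracebacks and final results, which usually appear last, survive the truncation.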

4. Run Unit Test

- Description variable: `run_unittest_tool_desc`

- Function: `run_unittest_tool(test_file_path)`

Runs unittest on a specified test file and returns the results.

- Input: `test_file_path` (str): The path to the test file to be executed, including the file name and extension.

- Output: The unittest results as a string, or an error message if the operation fails.

Key Features

- Error Handling: All functions include try-except blocks to catch and report errors.

- Output Capture: The `run_file_tool` and `run_unittest_tool` functions use `os.dupterm` to capture stdout, allowing them to return the output of executed files.

- Output Limitation: The `run_file_tool` function limits the returned output to the last 1024 characters to reduce token usage.

- Dynamic Import: The `run_unittest_tool` function dynamically imports the test module based on the file path.

Usage Notes

- These tools are designed to be used programmatically, likely as part of a larger system that can interpret the tool descriptions and call the appropriate functions.

- The tool descriptions (`*_tool_desc`) include an `input_schema` that defines the expected input format. This can be used for validation or documentation purposes.

- File paths are assumed to be either absolute or relative to the current working directory.

- The `run_file_tool` function executes Python code in the global namespace, which could modify global state. Use with caution.
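To illustrate the `input_schema` point, here is a hypothetical `read_file_tool_desc` in the tool format Anthropic's API accepts (`name`, `description`, and a JSON Schema `input_schema`), plus a small check of a tool call against its `required` list. The tool name and wording are assumptions:

```python
# Hypothetical tool description in the format Anthropic's tools parameter uses.
read_file_tool_desc = {
    "name": "read_file",
    "description": "Reads a file and returns its contents as text.",
    "input_schema": {
        "type": "object",
        "properties": {
            "file_path": {
                "type": "string",
                "description": "Path to the file, including name and extension.",
            },
        },
        "required": ["file_path"],
    },
}


def missing_inputs(tool_desc, tool_input):
    """List required input fields that a tool call did not supply."""
    required = tool_desc["input_schema"].get("required", [])
    return [key for key in required if key not in tool_input]


print(missing_inputs(read_file_tool_desc, {}))  # → ['file_path']
```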

Dependencies

- `os`: For file operations and terminal manipulation.
- `io`: For creating string buffers.
- `unittest`: For running unit tests.

Security Considerations

- The `run_file_tool` function executes arbitrary Python code, which could be a security risk if used with untrusted input.

- File operations are performed without explicit path sanitization, which could allow access to sensitive files if not properly controlled.

Potential Improvements

- Add path sanitization to prevent unauthorized file access.

- Implement more robust error handling and logging.

- Consider adding a configuration option for the output character limit in `run_file_tool`.

- Add support for passing arguments to the executed Python files in `run_file_tool`.
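For path sanitization, one possible approach (a sketch, not part of the project) is to resolve every path against a sandbox directory and reject results that escape it. `safe_path` is a hypothetical helper built on CPython's `os.path`; MicroPython may not provide `os.path.realpath`, so an on-device version would need its own normalization:

```python
import os


def safe_path(base_dir, file_path):
    """Resolve file_path inside base_dir; raise ValueError if it escapes."""
    base = os.path.realpath(base_dir)
    # join() discards base for absolute paths, so those are checked too.
    target = os.path.realpath(os.path.join(base, file_path))
    if os.path.commonpath([base, target]) != base:
        raise ValueError("Path outside sandbox: " + file_path)
    return target
```

Each file tool would call `safe_path` before opening anything; `realpath` also resolves symlinks, so a link pointing outside the sandbox is rejected as well.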

Prompt contents

Overview

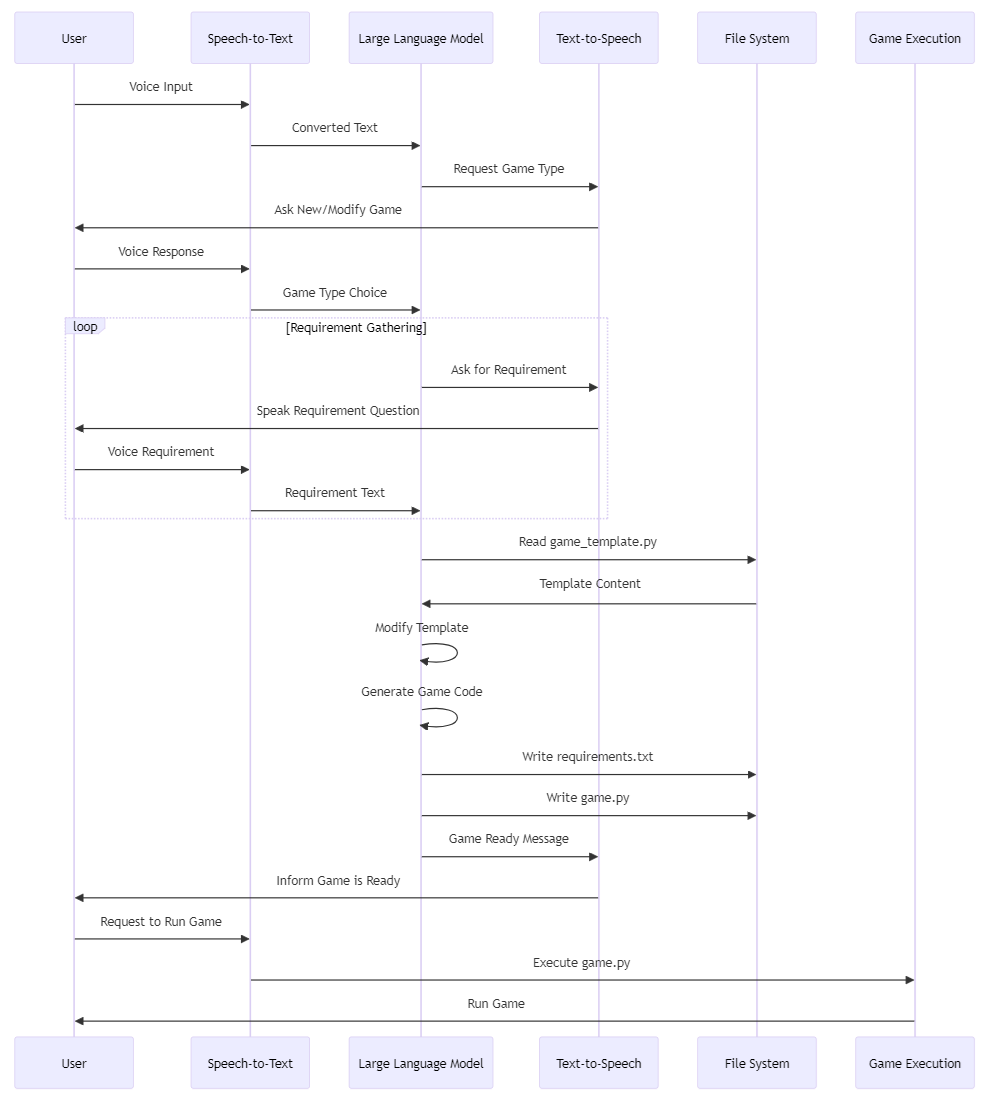

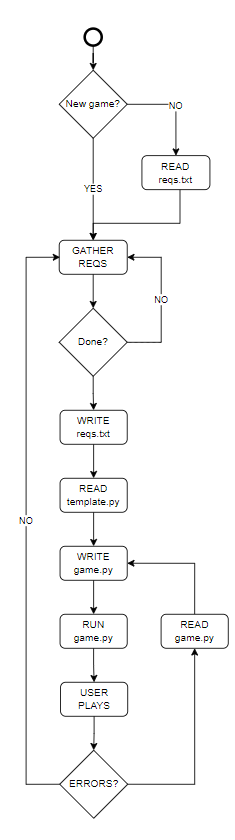

The prompt_utils.py file contains a system prompt (system_prompt) designed to guide an AI assistant in creating or modifying games for the MicroPython 1.23.0 platform. This prompt sets the context and behavior for the AI, ensuring it follows a specific process and considers important factors in MicroPython game development.

Contents

system_prompt

A string variable containing detailed instructions for the AI assistant. The prompt covers the following main areas:

Role Definition: The AI is positioned as an expert game developer specializing in MicroPython 1.23.0.

Process Steps:

- Determine if the user wants to create a new game or modify an existing one.

- Guide the user through requirement gathering or modification.

- Manage requirement documentation in `requirements.txt`.

- Read and modify game code (either from `game_template.py` or an existing `game.py`).

- Consider `game_logs.txt` when modifying existing games.

Development Considerations:

- Optimize code for speed and limited resources.

- Utilize MicroPython-specific libraries and functions.

- Implement sprite usage.

- For classic games, aim to match NES version features.

Hardware Specifications:

- Describes the user interface layout, including joystick, display, buttons, speaker, and RGB LED.

Communication Guidelines:

- Instructs the AI to respond briefly in Spanish, except when thinking or using tools.

- Advises against showing full requirement lists, preferring brief summaries.

Key Features

- Structured Development Process: Provides a clear, step-by-step approach for game development or modification.

- MicroPython Optimization: Emphasizes the importance of optimizing for the MicroPython 1.23.0 environment.

- User Interaction: Guides the AI to engage with the user for requirement gathering and confirmation.

- File Management: Specifies how to handle various files (`requirements.txt`, `game_template.py`, `game.py`, `game_logs.txt`).

- Hardware Awareness: Includes information about the target hardware's interface.

Usage

This system prompt is intended to be used as input for an AI model, setting the context and behavior for game development interactions. It should be provided to the AI at the beginning of a conversation or task related to MicroPython game development.

Important Notes

- The prompt is in English, but instructs the AI to respond in Spanish for most interactions.

- The AI is instructed not to start coding until requirements are gathered and confirmed.

- The prompt assumes the existence of certain files (`game_template.py`, `requirements.txt`, `game.py`, `game_logs.txt`) in the development environment.

Audio input and output

Overview

The i2s_utils.py file contains utility functions for audio input and output using the I2S (Inter-IC Sound) protocol. It provides asynchronous functions for playing audio (text-to-speech) and recording audio, designed to work with a microcontroller that supports I2S.

Functions

1. play(key, text, cb_complete=None)

Converts text to speech and plays it through an I2S audio output.

Parameters:

- `key` (str): API key for the text-to-speech service.
- `text` (str): The text to be converted to speech and played.
- `cb_complete` (function, optional): A callback function to check if playback should be stopped.

Behavior:

- Initializes I2S for audio output.

- Splits the text into parts and processes each part separately.

- Uses a queue system to handle audio data streaming.

- Supports interruption of playback through the callback function.
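A simplified model of this pipeline: one task synthesizes audio for each text part while the main loop plays the previous one. It uses CPython's `asyncio.Queue` in place of the module's own `asyncio_Queue`. `split_text`, `synthesize`, `write_audio`, and `should_stop` are hypothetical stand-ins, and `should_stop()` returning `True` to interrupt is an assumed reading of `cb_complete`:

```python
import asyncio


def split_text(text, max_len=200):
    """Split text at sentence ends (or every max_len chars) for TTS requests."""
    parts, current = [], ""
    for ch in text:
        current += ch
        if ch in ".!?" or len(current) >= max_len:
            if current.strip():
                parts.append(current.strip())
            current = ""
    if current.strip():
        parts.append(current.strip())
    return parts


async def play_parts(text, synthesize, write_audio, should_stop=None):
    """Synthesize the next part while the current one is being played.

    synthesize: async str -> bytes (stands in for api_utils.text_to_speech)
    write_audio: async bytes -> None (stands in for the I2S write)
    should_stop: returns True to interrupt (assumed cb_complete semantics)
    """
    queue = asyncio.Queue()

    async def producer():
        for part in split_text(text):
            await queue.put(await synthesize(part))
        await queue.put(None)  # end-of-stream marker

    task = asyncio.create_task(producer())
    while True:
        audio = await queue.get()
        if audio is None or (should_stop and should_stop()):
            break
        await write_audio(audio)
    task.cancel()  # stops synthesis early if playback was interrupted
```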

2. record(cb_complete=None, timeout=5)

Records audio from an I2S microphone input.

Parameters:

- `cb_complete` (function, optional): A callback function to check if recording should be stopped.
- `timeout` (int, optional): Maximum recording duration in seconds. Default is 5 seconds.

Returns: A `BytesIO` object containing the recorded audio in WAV format.

Behavior:

- Initializes I2S for audio input.

- Records audio until the timeout is reached or the callback function returns False.

- Ensures a minimum recording duration of 1 second.
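The module builds its WAV file with the `wave` module. To show what ends up in the returned `BytesIO`, this sketch instead writes the 44-byte PCM WAV header by hand with `struct` and pads short clips with silence up to one second (8000 Hz × 2 bytes × 1 channel = 16,000 bytes per second). `wav_bytesio` is a hypothetical helper:

```python
import io
import struct


def wav_bytesio(pcm, rate=8000, bits=16, channels=1, min_seconds=1):
    """Wrap raw PCM samples in a 44-byte WAV header and return a BytesIO.

    Pads with silence so the clip lasts at least `min_seconds`.
    """
    byte_rate = rate * channels * bits // 8
    block_align = channels * bits // 8
    min_len = byte_rate * min_seconds
    if len(pcm) < min_len:
        pcm = bytes(pcm) + bytes(min_len - len(pcm))  # zero samples = silence
    header = struct.pack(
        "<4sI4s4sIHHIIHH4sI",
        b"RIFF", 36 + len(pcm), b"WAVE",
        b"fmt ", 16, 1, channels, rate, byte_rate, block_align, bits,
        b"data", len(pcm),
    )
    return io.BytesIO(header + bytes(pcm))
```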

Helper Classes

1. asyncio_Queue

A simple asynchronous queue implementation used in the play function.

- Methods:
  - `put(item)`: Adds an item to the queue.
  - `get()`: Retrieves and removes an item from the queue.
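MicroPython's built-in `asyncio` does not provide `asyncio.Queue`, which is presumably why the module defines its own. A minimal sketch of such a queue built on `asyncio.Event`; the class name `AsyncQueue` is hypothetical, and since the source does not say whether `put` is a coroutine, making it `async` here is an assumption:

```python
import asyncio


class AsyncQueue:
    """Unbounded FIFO queue for asyncio builds without asyncio.Queue (sketch)."""

    def __init__(self):
        self._items = []
        self._event = asyncio.Event()

    async def put(self, item):
        self._items.append(item)
        self._event.set()  # wake any task waiting in get()

    async def get(self):
        # Sleep until at least one item is available, then pop the oldest.
        while not self._items:
            self._event.clear()
            await self._event.wait()
        return self._items.pop(0)
```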

Constants and Configuration

- I2S Configuration:
  - `PIN_SCK`: 15 (Serial Clock)
  - `PIN_WS`: 16 (Word Select)
  - `PIN_SD`: 12 (Serial Data) for output, 17 for input
  - `I2S_ID`: 0
  - `I2S_BITS`: 16 (bit depth)
  - `I2S_CH`: 1 (mono)
  - `I2S_RATE`: 24000 Hz for playback, 8000 Hz for recording
  - `I2S_LEN`: buffer length (8192 bytes for playback, 4096 bytes for recording)

Usage Notes

- The `play` function uses the `api_utils.text_to_speech` function to convert text to speech. Ensure that the necessary API key and network connectivity are available.

- Both `play` and `record` are asynchronous and should be used with `await` in an asynchronous context.

- The `cb_complete` callback in both functions can be used to implement interrupt logic (e.g., stop playback or recording based on a button press).

- The recording function creates a WAV file in memory. Be mindful of memory constraints when recording for extended periods: at 8000 Hz, 16-bit mono, each second takes 16,000 bytes, so the default 5-second timeout needs about 80 KB.

- The I2S configuration is hardcoded. Modify the constants if different pin assignments or audio settings are needed.

Dependencies

- `machine`: For low-level hardware control (I2S interface).
- `wave`: For WAV file creation.
- `asyncio`: For asynchronous operations.
- `api_utils`: Custom module for API interactions (used in text-to-speech conversion).

Ensure these dependencies are available in your MicroPython environment when using this module.

Error Handling

The functions in this module do not include explicit error handling. In a production environment, you might want to add try-except blocks to handle potential errors, such as I2S initialization failures or API communication issues.