Now the current plan will proceed in the following stages:

- Finish testing the stereo camera and make any needed improvements

- Prototype a Stewart platform using 6 micro servos and test its response to the Vive

- Use an FPGA to analyze the traffic between the Raspberry Pi and the Pi Camera V2.1 so I can emulate it and hopefully get 4K 30 fps video feeding into the FPGA; currently planning to use an Artix-based Zynq

- Reverse engineer the drivers for the WiGig module for streaming low-latency video from the robot to the Vive, and rewrite the drivers for the Zynq

- Come up with the robot's drive system, with stair climbing/jumping if possible

- Finalize all hardware on the robot, with polished dedicated PCBs for the robot and the Vive
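For the Stewart platform stage, the core calculation is inverse kinematics: given the pose reported by the Vive (a translation plus roll, pitch and yaw), find the angle each of the six servos must turn to. Below is a minimal Python sketch of the commonly used closed-form solution for rotary-servo Stewart platforms. It assumes hypothetical geometry (anchor radii, horn length, rod length and horn directions are all made up for illustration), Z-Y-X Euler angles in radians, and servo angles measured from the horizontal; none of these names or values come from the actual build.

```python
import math

# Hypothetical geometry in millimetres; real values depend on the printed parts.
BASE_RADIUS = 50.0
PLATFORM_RADIUS = 40.0
HORN = 15.0   # servo horn length (a)
ROD = 100.0   # connecting rod length (s)


def _anchors(radius, spread_deg):
    """Six anchor points, paired around 0, 120 and 240 degrees, in the z = 0 plane."""
    pts = []
    for centre in (0.0, 120.0, 240.0):
        for offset in (-spread_deg, spread_deg):
            ang = math.radians(centre + offset)
            pts.append((radius * math.cos(ang), radius * math.sin(ang), 0.0))
    return pts


BASE = _anchors(BASE_RADIUS, 15.0)          # servo shaft positions on the base
PLATFORM = _anchors(PLATFORM_RADIUS, 30.0)  # rod ends, in the platform's own frame

# Horn direction of each servo in the base plane: tangential, alternating so
# that paired servos mirror each other (hypothetical choice).
BETA = [math.atan2(y, x) + (math.pi / 2 if i % 2 else -math.pi / 2)
        for i, (x, y, _) in enumerate(BASE)]


def rotation(roll, pitch, yaw):
    """Rotation matrix Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def servo_angles(translation, roll=0.0, pitch=0.0, yaw=0.0):
    """Return the six servo angles (radians) that place the platform at the given pose.

    The horn tip of servo i sits at b + a*(cos(alpha)cos(beta), cos(alpha)sin(beta), sin(alpha)),
    and the rod from that tip to the platform anchor q must have length s.
    Solving |q - tip| = s gives M*sin(alpha) + N*cos(alpha) = L.
    """
    rot = rotation(roll, pitch, yaw)
    angles = []
    for b, p, beta in zip(BASE, PLATFORM, BETA):
        # Platform anchor expressed in the base frame: q = T + R p
        q = [translation[r] + sum(rot[r][c] * p[c] for c in range(3)) for r in range(3)]
        leg = [q[k] - b[k] for k in range(3)]  # vector from servo shaft to anchor
        big_l = sum(v * v for v in leg) - (ROD ** 2 - HORN ** 2)
        m = 2.0 * HORN * leg[2]
        n = 2.0 * HORN * (math.cos(beta) * leg[0] + math.sin(beta) * leg[1])
        ratio = big_l / math.hypot(m, n)
        if abs(ratio) > 1.0:
            raise ValueError("pose is out of reach for this geometry")
        angles.append(math.asin(ratio) - math.atan2(n, m))
    return angles
```

For example, `servo_angles((0.0, 0.0, 100.0), roll=0.1)` returns six angles in radians, which would then be mapped onto each servo's pulse-width range; poses the geometry cannot reach raise `ValueError` rather than returning a meaningless angle.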

It’s going to be quite a challenge getting the FPGA side sorted out, so if anyone knows about communicating with the Pi Camera V2 or about the WiGig drivers and wants to provide some code or advice, or link me to resources, I would appreciate it.
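For a sense of scale on the video side, a back-of-envelope estimate of the uncompressed data rate helps. The sketch below assumes 10-bit raw Bayer output (RAW10) at 3840x2160 and 30 fps, and ignores blanking intervals and CSI-2 packet overhead, so real link rates will be somewhat higher; the function name is purely illustrative.

```python
def raw_video_bitrate(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video data rate in bits per second.

    Ignores blanking and protocol overhead, so treat it as a lower bound.
    """
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    # Assumption: 10-bit raw Bayer (RAW10), 3840x2160 at 30 fps.
    single = raw_video_bitrate(3840, 2160, 30, 10)
    print(f"one camera:  {single / 1e9:.2f} Gbit/s")      # 2.49 Gbit/s
    print(f"stereo pair: {2 * single / 1e9:.2f} Gbit/s")  # 4.98 Gbit/s
```

At roughly 2.5 Gbit/s per camera (about 5 Gbit/s for a stereo pair) before any compression, these figures are worth comparing against the rate the WiGig link actually sustains.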

DrYerzinia

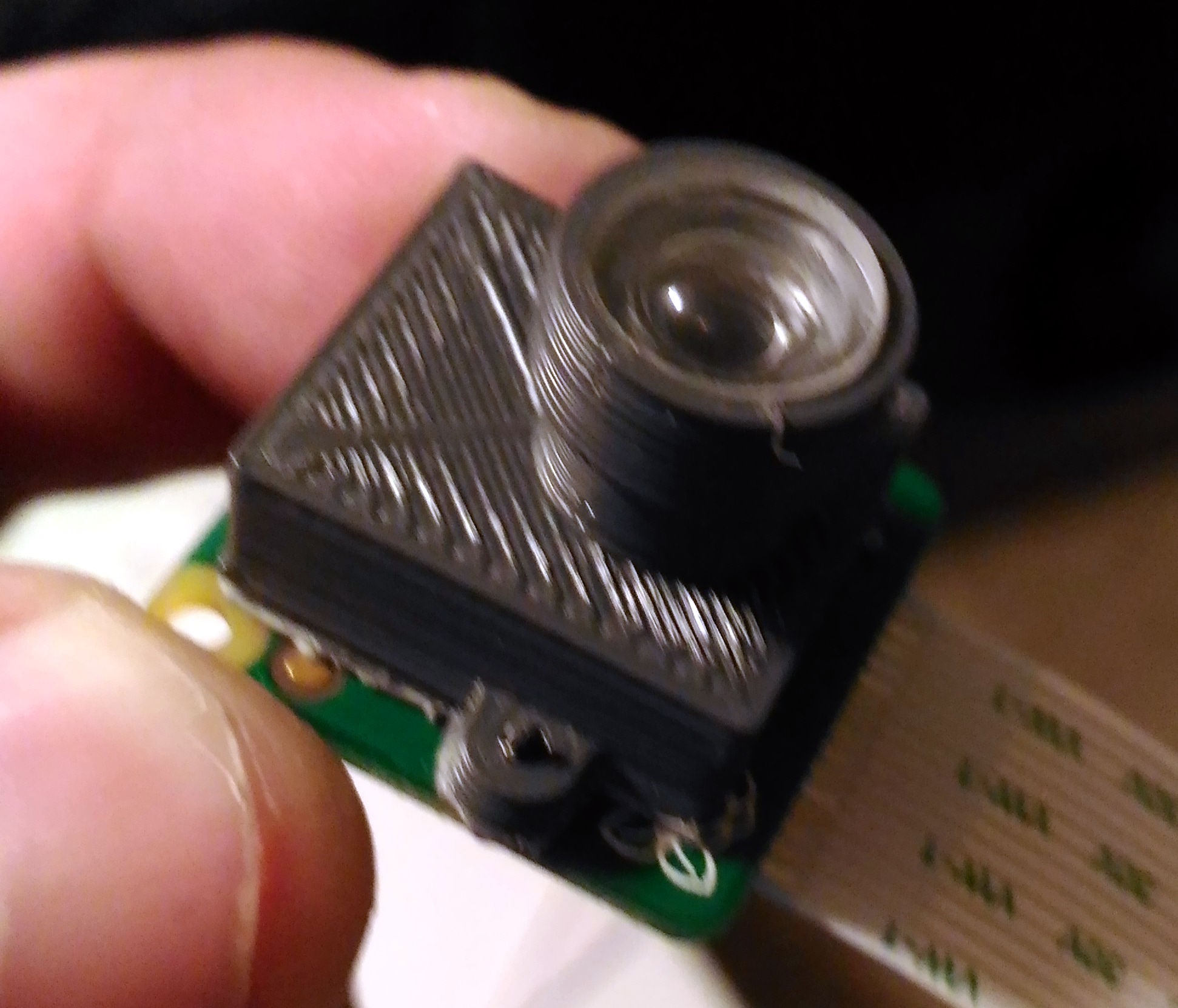

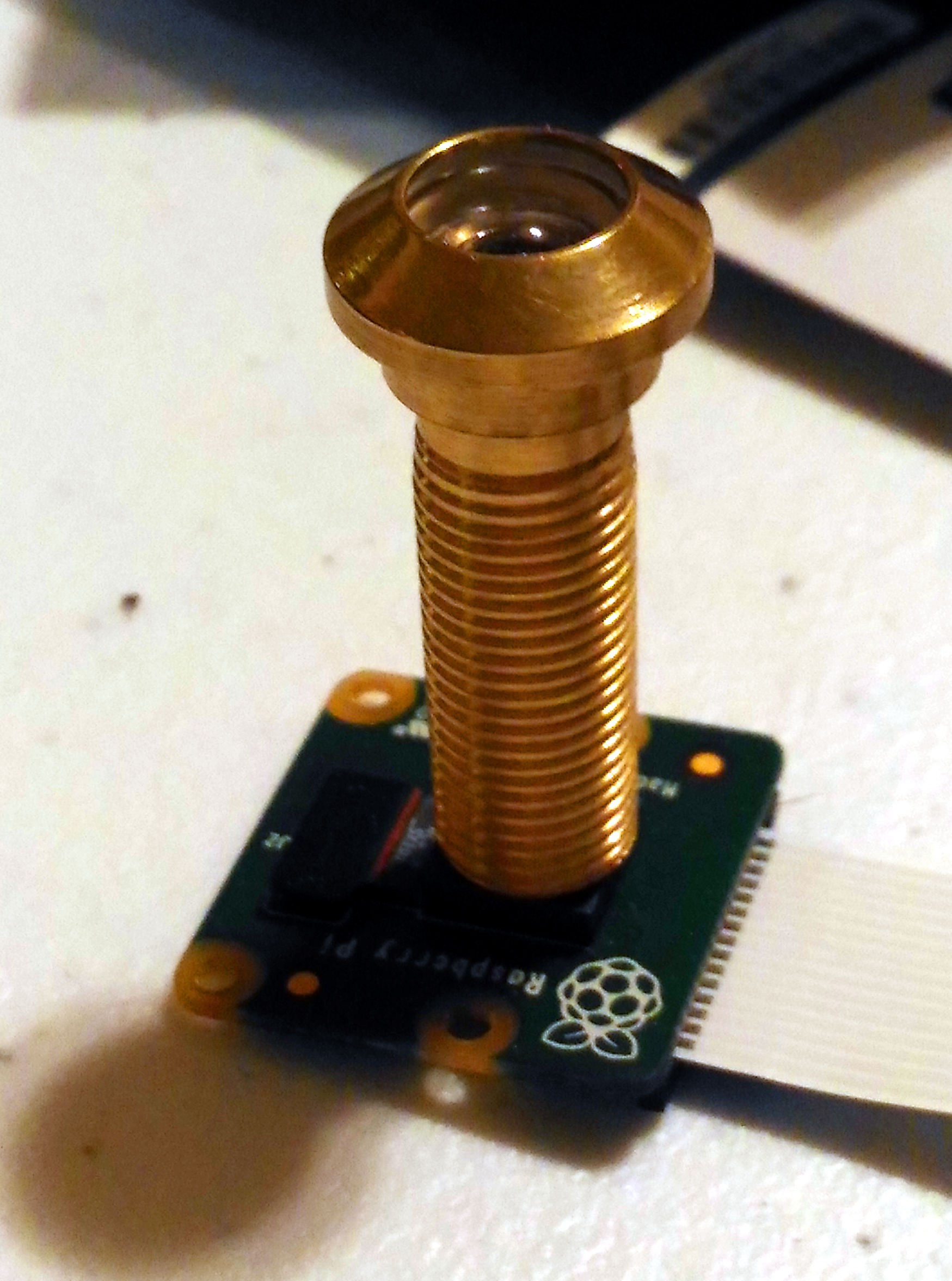

Yes, Home Depot to the rescue with a peephole lens; why didn't I think of it sooner? The peephole fit perfectly on the end of the camera, but its barrel took up most of the camera's field of view and the actual scene filled only a small fraction of the frame, and I want all the pixels. What followed was disassembling the peephole and a lot of experiments: tweaking the distance of the lens, and trying the Pi Camera at different focus settings or with no lens of its own at all. Eventually I settled on this solution: