Alright, here goes. In an earlier series of projects, I was chatting with classic AI bots like ELIZA, MegaHAL, and my own home-grown Algernon. Hopefully I am on the right track here, assuming that I am not missing something really mean lurking in these shark-infested waters, and here is why I think so. Take a look at what you see here:

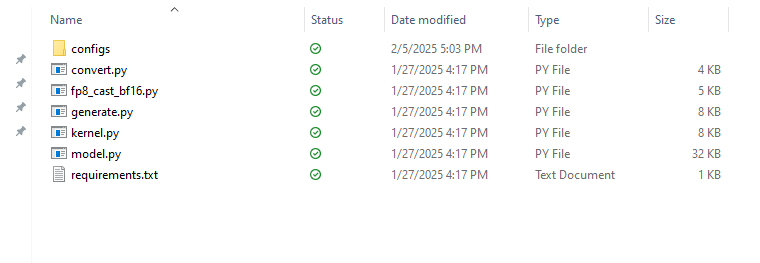

This is what I got on January 27, when I downloaded the DeepSeek source files from GitHub. Other than some config files, it looks like all of the Python source fits quite nicely in 58K or less. Of course, that doesn't include dependencies like torch and whatever else it might need. But model.py is just 805 lines. I checked. Now let's look at something different.

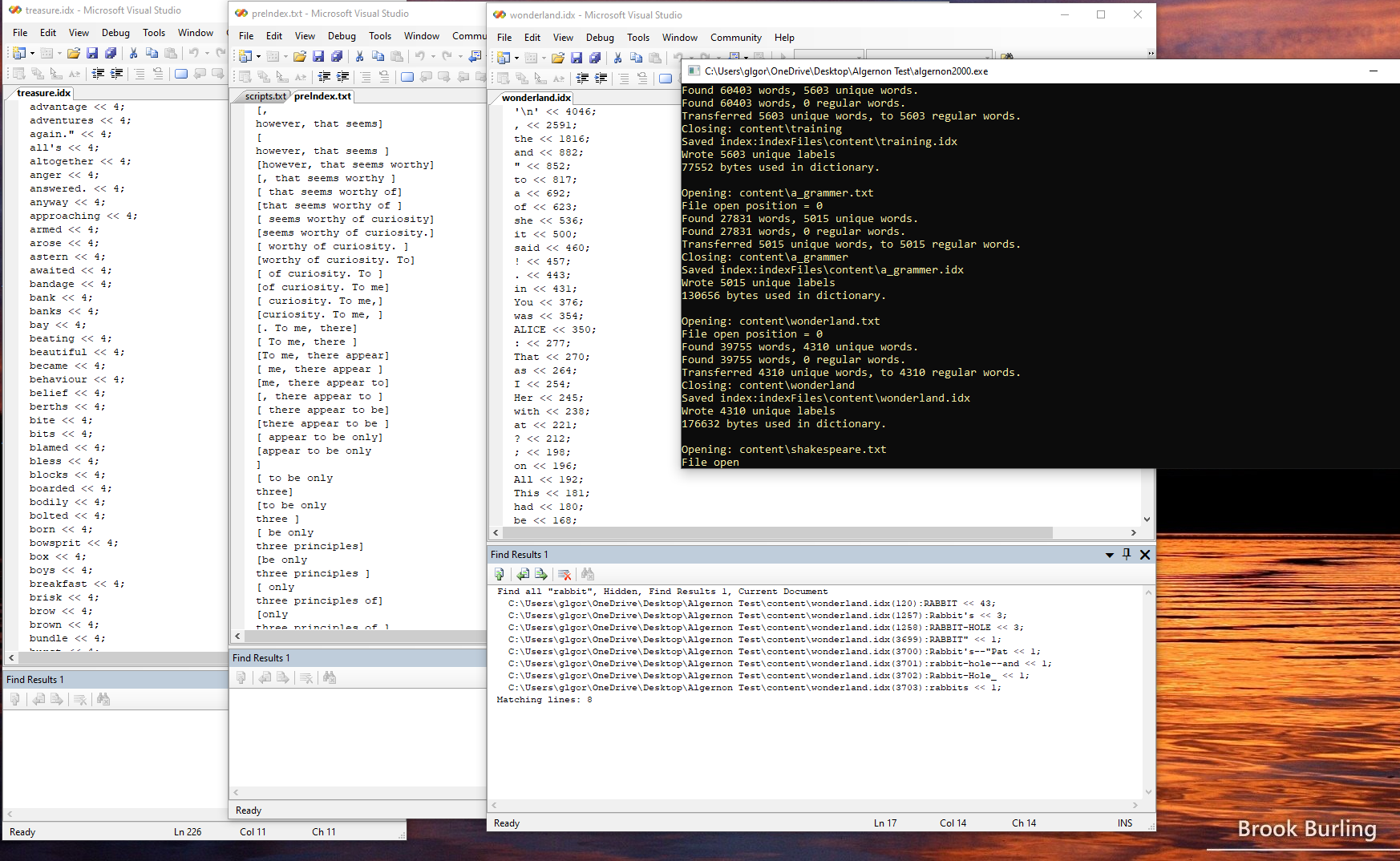

This is, of course, a screenshot of a debugging session where I was doing things like counting the number of times that the word "rabbit" occurs in Alice in Wonderland, and so on. Maybe one approach to having a Mixture of Experts would require that we have some kind of framework. It is as if Porsche or Ferrari were to start giving away free engines to anyone, just for the asking, except that you have to bring your existing Porsche or Ferrari in to the dealer for installation, which would of course be "not free", unless you could convince the dealership that you have your own mechanics, your own garage, etc., and don't really need help with much of anything - just give me the stupid engine!

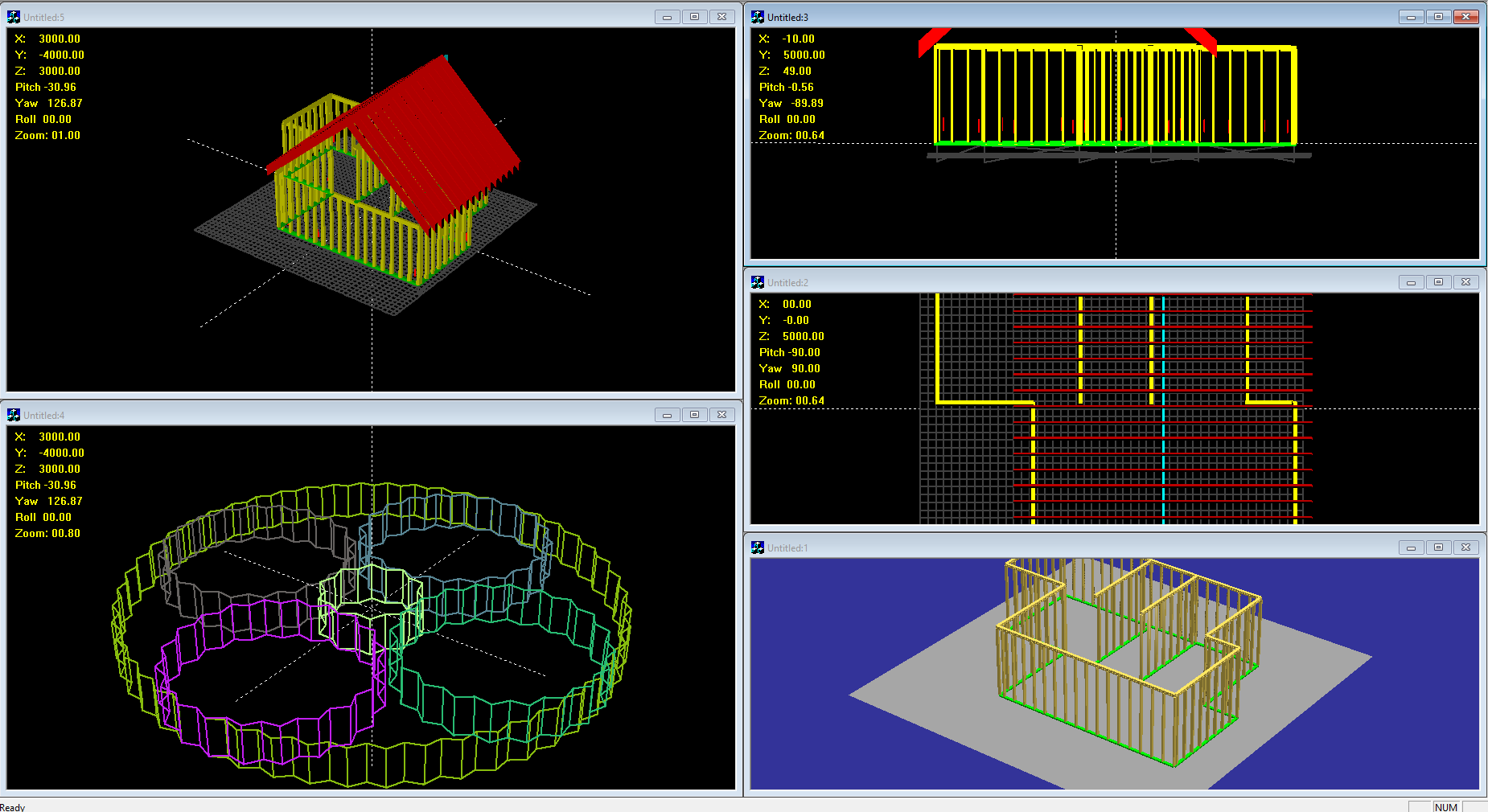

Well, in the software biz, what I am saying is that I have built my own framework, and that it is just a matter of getting to the point where I can, more or less, drop in a different engine. That assumes that I can capture and display images, tokenize text files, etc., all based on some sort of "make system", whether it is a bespoke Windows-based application, like what you see here, or whether it is based on things like Bash and make under Linux. Thus, what seems to be lacking in the worlds of Llama and DeepSeek is a content management system: something that can handle text and graphics, like this:

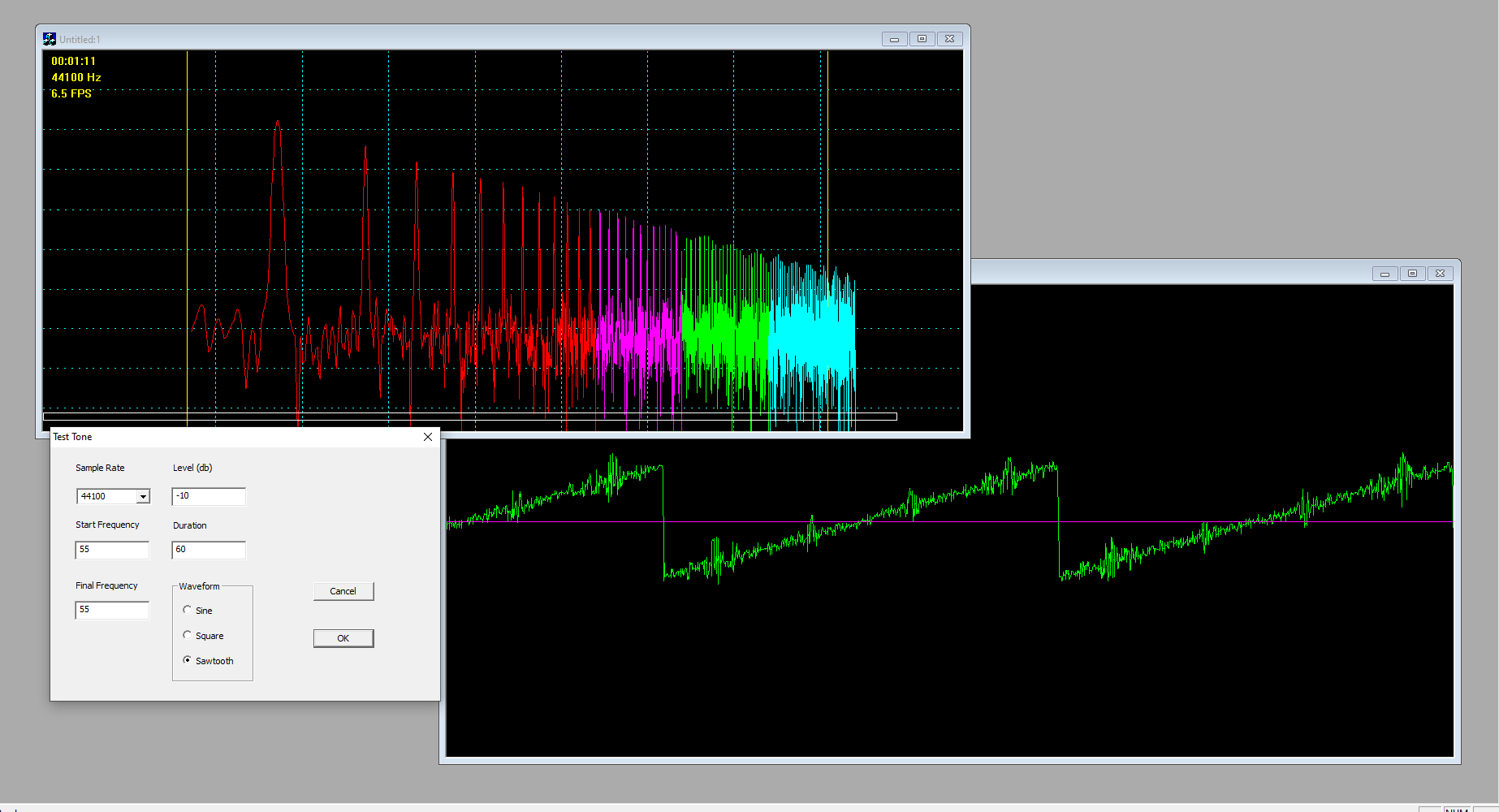

Yet, we also need to be able to do stuff like handle wavelet data, when processing speech or music, or when experimenting with spectral properties of different kinds of noise for example:

Of course, if you have ever tried writing your own DOOM WAD editor from scratch, you might be on the right track to creating your own AI ... one which can either learn to play DOOM, or else might be able to create an infinite number of DOOM-like worlds. Of course, we all want so much more, don't we?

Alright then, first let's take a peek at some of the source code for DeepSeek and see if we can figure out just what exactly it is doing, just in case we want a noise expert, or a gear expert, or something else altogether!

Are you ready - silly rabbit?

    class MoE(nn.Module):
        """
        Mixture-of-Experts (MoE) module.

        Attributes:
            dim (int): Dimensionality of input features.
            n_routed_experts (int): Total number of experts in the model.
            n_local_experts (int): Number of experts handled locally in distributed systems.
            n_activated_experts (int): Number of experts activated for each input.
            gate (nn.Module): Gating mechanism to route inputs to experts.
            experts (nn.ModuleList): List of expert modules.
            shared_experts (nn.Module): Shared experts applied to all inputs.
        """
        def __init__(self, args: ModelArgs):
            """
            Initializes the MoE module.

            Args:
                args (ModelArgs): Model arguments containing MoE parameters.
            """
            super().__init__()
            self.dim = args.dim
            assert args.n_routed_experts % world_size == 0
            self.n_routed_experts = args.n_routed_experts
            self.n_local_experts = args.n_routed_experts // world_size
            self.n_activated_experts = args.n_activated_experts
            self.experts_start_idx = rank * self.n_local_experts
            self.experts_end_idx = self.experts_start_idx + self.n_local_experts
            self.gate = Gate(args)
            self.experts = nn.ModuleList([Expert(args.dim, args.moe_inter_dim)
                                          if self.experts_start_idx <= i < self.experts_end_idx else None
                                          for i in range(self.n_routed_experts)])
            self.shared_experts = MLP(args.dim, args.n_shared_experts * args.moe_inter_dim)

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            """
            Forward pass for the MoE module.

            Args:
                x (torch.Tensor): Input tensor.

            Returns:
                torch.Tensor: Output tensor after expert routing and computation.
            """
            shape = x.size()
            x = x.view(-1, self.dim)
            weights, indices = self.gate(x)
            y = torch.zeros_like(x)
            counts = torch.bincount(indices.flatten(), minlength=self.n_routed_experts).tolist()
            for i in range(self.experts_start_idx, self.experts_end_idx):
                if counts[i] == 0:
                    continue
                expert = self.experts[i]
                idx, top = torch.where(indices == i)
                y[idx] += expert(x[idx]) * weights[idx, top, None]
            z = self.shared_experts(x)
            if world_size > 1:
                dist.all_reduce(y)
            return (y + z).view(shape)

Well, for whatever it is worth, I must say that it certainly appears to make at least some sense. Like, OK, I sort of get the idea. What now? Install the latest Python on my Windows machine, and see how I can feed this thing some noise to train on? Or how about some metadata from an audio-in, sheet-music-out application from years ago?
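
To check that reading of the routing logic, here is a minimal sketch using plain Python lists, so it runs without torch. The names `local_expert_range`, `top_k_gate`, and `moe_forward` are made up for illustration. The gate is a simple softmax followed by top-k, which is an assumption, since the real `Gate` class is not shown above. Everything runs in one process, so there is no `all_reduce` step.

```python
import math


def local_expert_range(n_routed_experts, world_size, rank):
    """Return the [start, end) range of expert indices owned by one rank."""
    assert n_routed_experts % world_size == 0
    n_local = n_routed_experts // world_size
    start = rank * n_local
    return start, start + n_local


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(scores, k):
    """ASSUMED gate: softmax, then keep the k most probable experts."""
    probs = softmax(scores)
    indices = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    weights = [probs[i] for i in indices]
    return weights, indices


def moe_forward(x, gate_scores, experts, shared_expert, k):
    """x: list of token vectors; gate_scores: one list of expert scores per token."""
    y = [[0.0] * len(tok) for tok in x]
    routed = [top_k_gate(s, k) for s in gate_scores]
    for e, expert in enumerate(experts):
        for t, (weights, indices) in enumerate(routed):
            if e not in indices:
                continue  # this token was not routed to expert e
            w = weights[indices.index(e)]
            out = expert(x[t])
            y[t] = [a + w * b for a, b in zip(y[t], out)]
    # The shared expert sees every token, regardless of routing.
    return [[a + b for a, b in zip(y[t], shared_expert(x[t]))]
            for t in range(len(x))]


if __name__ == "__main__":
    toy_experts = [lambda v: [2.0 * a for a in v], lambda v: [-a for a in v]]
    print(local_expert_range(8, 4, 1))
    print(moe_forward([[1.0, 2.0]], [[0.0, 0.0]], toy_experts, lambda v: list(v), 2))
```

With two experts that score equally and k = 2, each expert contributes half of its output, and the shared expert's output is added on top. That is the same shape of computation as `y[idx] += expert(x[idx]) * weights[idx, top, None]` followed by `y + z`, just without the tensors.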

Of course, personally, I don't particularly like Python - but maybe that's OK, because I decided to try a free online demo of a Python to C++ converter at some place that calls itself codeconvert.com. So, while I don't know how good this C++ code is, at least it looks like C++. Now if I can also find a torch/torch.h library - wouldn't that be nice - that is if such a thing already exists?

    #include <vector>
    #include <cassert>
    #include <torch/torch.h>

    class MoE : public torch::nn::Module {
    public:
        MoE(const ModelArgs& args) {
            dim = args.dim;
            assert(args.n_routed_experts % world_size == 0);
            n_routed_experts = args.n_routed_experts;
            n_local_experts = args.n_routed_experts / world_size;
            n_activated_experts = args.n_activated_experts;
            experts_start_idx = rank * n_local_experts;
            experts_end_idx = experts_start_idx + n_local_experts;
            gate = register_module("gate", Gate(args));
            for (int i = 0; i < n_routed_experts; ++i) {
                if (experts_start_idx <= i && i < experts_end_idx) {
                    experts.push_back(register_module("expert" + std::to_string(i),
                        Expert(args.dim, args.moe_inter_dim)));
                } else {
                    experts.push_back(nullptr);
                }
            }
            shared_experts = register_module("shared_experts", MLP(args.dim,
                args.n_shared_experts * args.moe_inter_dim));
        }

        torch::Tensor forward(torch::Tensor x) {
            auto shape = x.sizes();
            x = x.view({-1, dim});
            auto [weights, indices] = gate->forward(x);
            auto y = torch::zeros_like(x);
            auto counts = torch::bincount(indices.flatten(), n_routed_experts);
            for (int i = experts_start_idx; i < experts_end_idx; ++i) {
                if (counts[i].item<int>() == 0) {
                    continue;
                }
                auto expert = experts[i];
                auto [idx, top] = torch::where(indices == i);
                y.index_add_(0, idx, expert->forward(x.index_select(0, idx)) *
                    weights.index_select(0, idx).index_select(0, top).view({-1, 1}));
            }
            auto z = shared_experts->forward(x);
            if (world_size > 1) {
                torch::distributed::all_reduce(y);
            }
            return (y + z).view(shape);
        }

    private:
        int dim;
        int n_routed_experts;
        int n_local_experts;
        int n_activated_experts;
        int experts_start_idx;
        int experts_end_idx;
        torch::nn::Module gate;
        std::vector<torch::nn::Module> experts;
        torch::nn::Module shared_experts;
    };

So now we just need to convert more code, and see if it will work on bare metal? The mind boggles at the possibility. However, if we can so easily convert Python to C++, then why not go back to raw C, so that we can possibly identify parts that might be readily adaptable to synthesizable Verilog? Like I said earlier, this is an ambitious project.

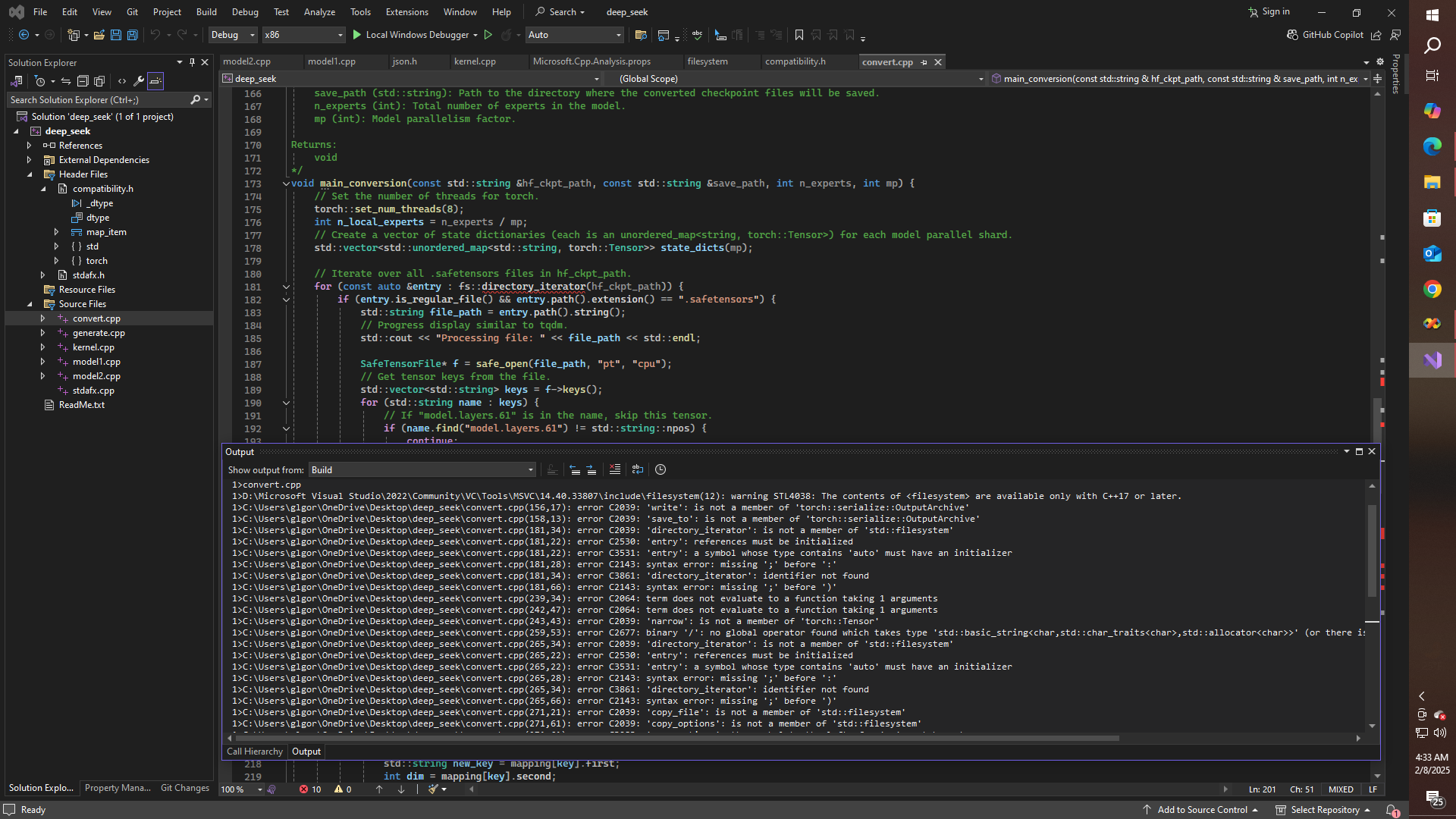

Alright then, I decided to try an experiment, and thus I am working on two different code branches at the same time. In Visual Studio 2022, I have started a Deep Seek Win32 library based on the converted Python code, even though I haven't downloaded, installed, and configured the C++ version of PyTorch yet. So I have started on a file called "compatibility.h", which for now is just a placeholder for some torch stuff that I am trying to figure out as I go. So, yeah - this is very messy - but why not?

    #include <string>

    using namespace std;

    #define _dtype enum { undef,kFloat, kFloat32,}
    typedef _dtype dtype;

    namespace torch
    {
        _dtype;

        class Tensor
        {
        protected:
            int m_id;
        public:
            size_t size;
        };

        class TensorOptions
        {
        public:
            dtype dtype (dtype typ)
            {
                return typ;
            };
        };

        void set_num_threads(size_t);
        Tensor ones(size_t sz);
        Tensor ones(size_t sz, dtype typ);
        Tensor ones(int sz1, int sz2, dtype typ);
        Tensor empty (size_t sz);
        Tensor empty (dtype);

        namespace nn
        {
            class Module
            {
            public:
            };
        };

        namespace serialize
        {
            class InputArchive
            {
            public:
                void load_from(std::string filename);
            };

            class OutputArchive
            {
            public:
            };
        };
    };

    #ifdef ARDUINO_ETC
    struct map_item
    {
        char *key1, *key2;
        int dim;
        // map_item(std::string str1, std::string str2, int n);
        map_item(const char *str1, const char *str2, int n)
        {
            key1 = (char*)(str1);
            key2 = (char*)(str2);
            dim = n;
        }
    };
    #endif

    namespace std
    {
    #ifdef ARDUINO_ETC
        template <class X, class Y>
        class unordered_map
        {
        public:
            map_item *m_map;
            // unordered_map ();
            ~unordered_map ();
            void *unordered_map<X,Y>::operator new (size_t,void*);
            unordered_map<X,Y> *find_node (X &arg);
            char *get_data ()
            {
                char *result = NULL;
                return result;
            }
            unordered_map<X,Y> *add_node (X &arg);
        };
    #endif

        namespace filesystem
        {
            void create_directories(std::string);
            void path(std::string);
        };
    };

Yes, this is a very messy way of doing things, but I am not aware of any tool that will autogenerate namespaces, class names, and function prototypes for the missing declarations. It is way too early, of course, to think that this will actually run anytime soon. But then again, why not? Especially if we also want to be able to run it on a Propeller or a Pi! Maybe it can be trained to play checkers, or ping pong, for that matter. Well, you get the idea. Figuring out how to get a working algorithm that doesn't need all of the modern sugar coating would be really nice - especially if it can be taken even further back, like to C99, or SystemC, so that we can also contemplate the implications of implementing it in synthesizable Verilog - naturally!!
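
On the question of autogenerating the missing declarations, here is a rough sketch of a starting point. It scans converted C++ source for qualified names such as `torch::nn::Module` and emits nested namespace skeletons with TODO comments. The function names `collect_names` and `emit_stubs` are hypothetical, and a regular expression cannot tell a class from a function or recover a signature, so the output is only a checklist of what still needs declaring, not a working header.

```python
import re

# One or more "name::" prefixes followed by a final identifier,
# e.g. "torch::nn::" + "Module".
QUALIFIED = re.compile(r"\b((?:[A-Za-z_]\w*::)+)([A-Za-z_]\w*)")


def collect_names(source):
    """Map each namespace path (a tuple) to the set of names used inside it."""
    tree = {}
    for match in QUALIFIED.finditer(source):
        path = tuple(match.group(1).rstrip(":").split("::"))
        tree.setdefault(path, set()).add(match.group(2))
    return tree


def emit_stubs(tree, root):
    """Emit namespace skeletons for every path that starts with `root`."""
    lines = []
    for path in sorted(p for p in tree if p[0] == root):
        lines.append(" ".join(f"namespace {part} {{" for part in path))
        for name in sorted(tree[path]):
            # Only the name is known; the kind and signature are not.
            lines.append(f"    // TODO: declare {name}")
        lines.append("}" * len(path) + "  // " + "::".join(path))
    return "\n".join(lines)


if __name__ == "__main__":
    sample = "torch::Tensor x; torch::nn::Module m; auto y = torch::zeros_like(x);"
    print(emit_stubs(collect_names(sample), "torch"))
```

Passing `"torch"` as the root keeps names like `std::to_string` out of the generated skeleton, since those already come from the standard library.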

Well, in any case - here it is 7:40 PM PST, and I can hardly believe that the Chiefs got smoked by the Eagles in the last few hours, that is to say, in Super Bowl 59. I really need a new chat-bot to have some conversation with about this, or else I will have to start rambling about Shakespeare. Or maybe I have something else in mind? Getting back on topic then, take a look at this:

Alright - so I am now able to create an otherwise presently useless .obj file for the Deep Seek component convert.py, which I have of course converted to C++ and managed to get to compile, but not yet link against anything. So, I think that the next logical step should be to create multiple projects within the Deep Seek workspace and make a "convert" project that will eventually become a standalone executable. In the meantime, since I don't think that this project is quite ready for GitHub, I will go ahead and post the current versions of "compatibility.h" and "convert.cpp" to the files section of this project, i.e., right here on Hackaday. Just in case anyone wants to have a look. Enjoy!

glgorman
