Alright, here goes. In an earlier series of projects, I was chatting with classic AI bots like ELIZA, MegaHAL, and my own home-grown Algernon. Hopefully I am on the right track, provided I am not missing something really nasty in these shark-infested waters, and here is why I think so. Take a look at what you see here:

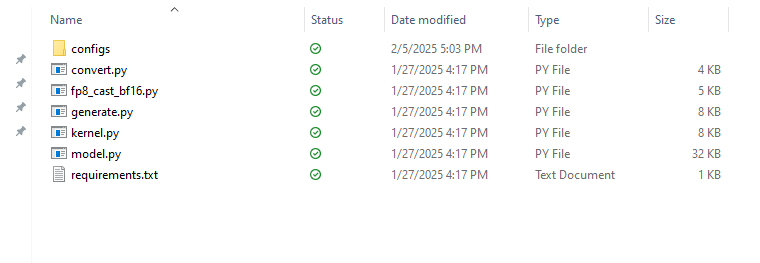

This is what I got on January 27 when I downloaded the DeepSeek source files from GitHub. Other than some config files, it looks like all of the Python source fits quite nicely in 58K or less. Of course, that doesn't include dependencies such as torch and whatever else it might need. But model.py is just 805 lines. I checked. Now let's look at something different.

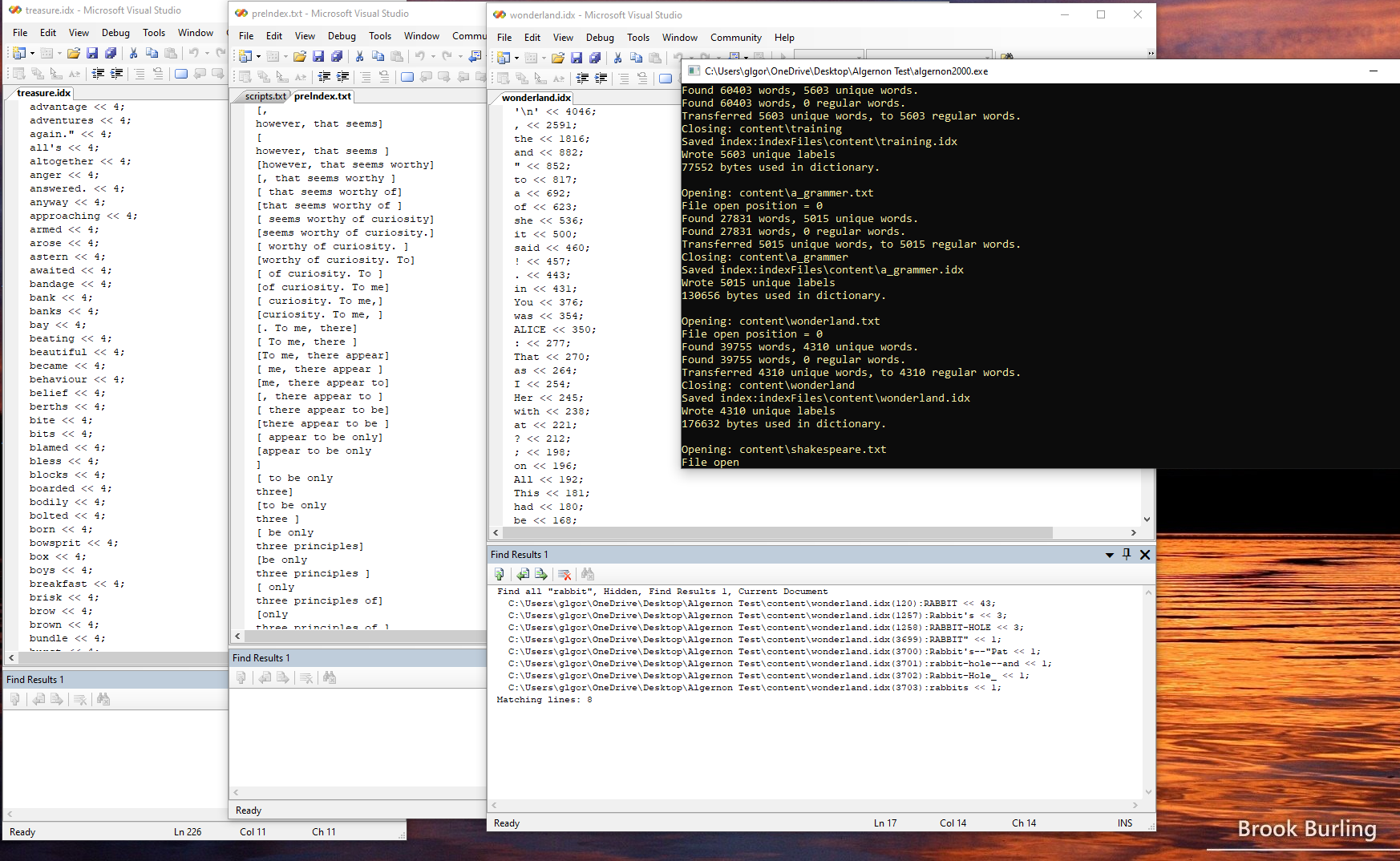

This is, of course, a screenshot of a debugging session where I was doing things like counting the number of times the word "rabbit" occurs in Alice in Wonderland, and so on. Maybe one approach to having a Mixture of Experts requires some kind of framework. It is as if Porsche or Ferrari were to start giving away free engines to anyone, just for the asking, except that you have to bring your existing Porsche or Ferrari to the dealer for installation, which would of course be "not free". Unless, that is, you could convince the dealership that you have your own mechanics and your own garage, and don't really need help with much of anything: just give me the stupid engine!
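For anyone who wants to try the rabbit count without a debugger, here is a minimal Python sketch. It is not the code from the screenshot; `count_word` and the sample sentence are made up for illustration, and it assumes that "a word" means a run of ASCII letters, so "rabbit-hole" counts as one rabbit and "rabbits" counts as none.

```python
import re
from collections import Counter


def count_word(text: str, word: str) -> int:
    """Count case-insensitive, whole-word occurrences of `word` in `text`.

    Assumption: a word is a run of ASCII letters, so punctuation and
    hyphens act as separators.
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(tokens)[word.lower()]


# Hypothetical sample text; swap in the full book read from a file.
sample = (
    "The White Rabbit ran past. 'Oh dear!' said the Rabbit, "
    "and down the rabbit-hole went Alice."
)
print(count_word(sample, "rabbit"))
```

To run it on the whole book, read the text file into a string first and pass that in place of `sample`.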

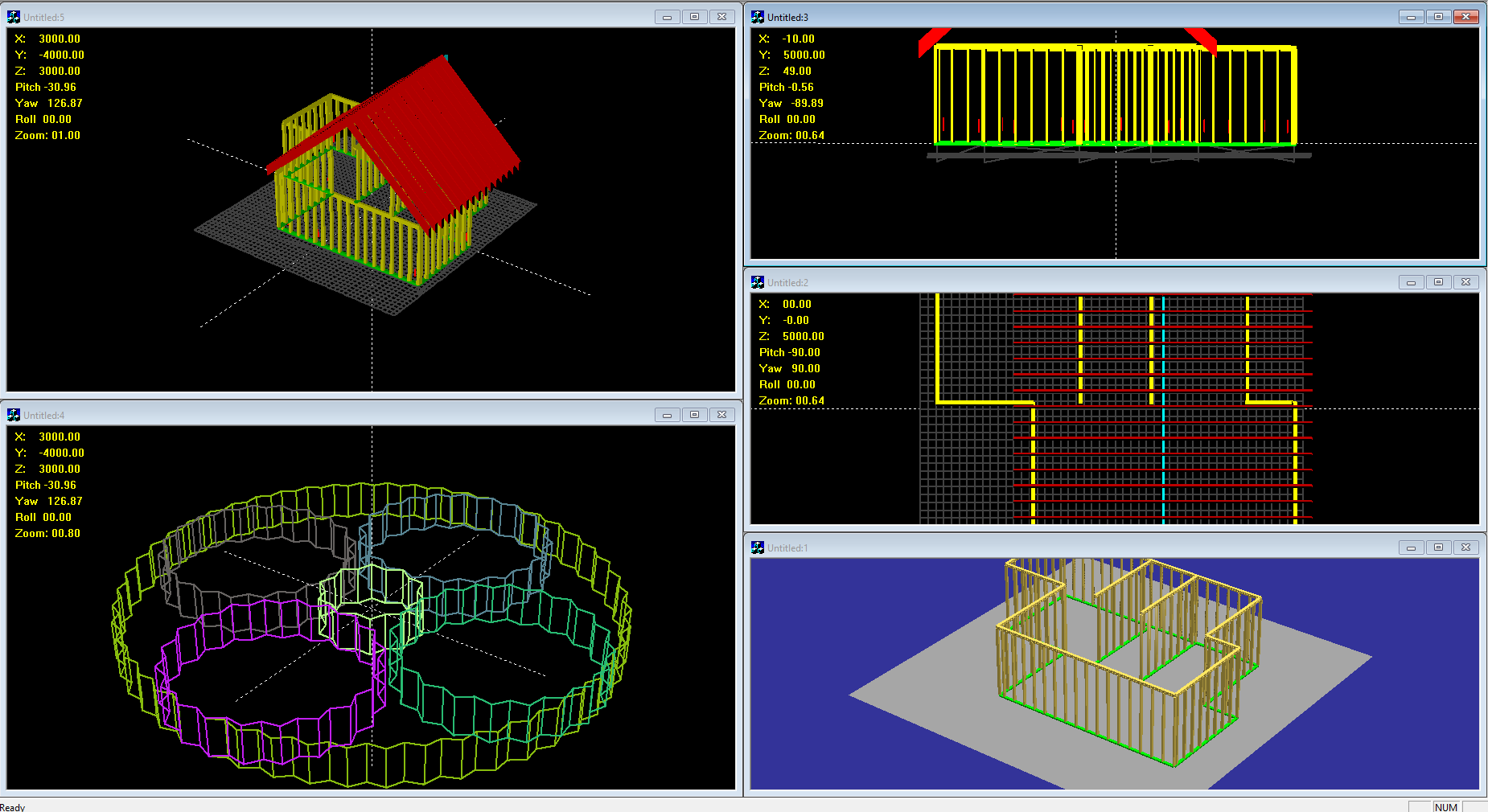

Well, in the software biz, what I am saying is that I have built my own framework, and that it is just a matter of getting to the point where I can, more or less, drop in a different engine. That assumes I can capture and display images, tokenize text files, and so on, all driven by some sort of "make system", whether that is a bespoke Windows application like what you see here, or Bash and make under Linux. Thus, what seems to be lacking in the worlds of LLaMA and DeepSeek is a content management system, something that can handle text and graphics, like this:

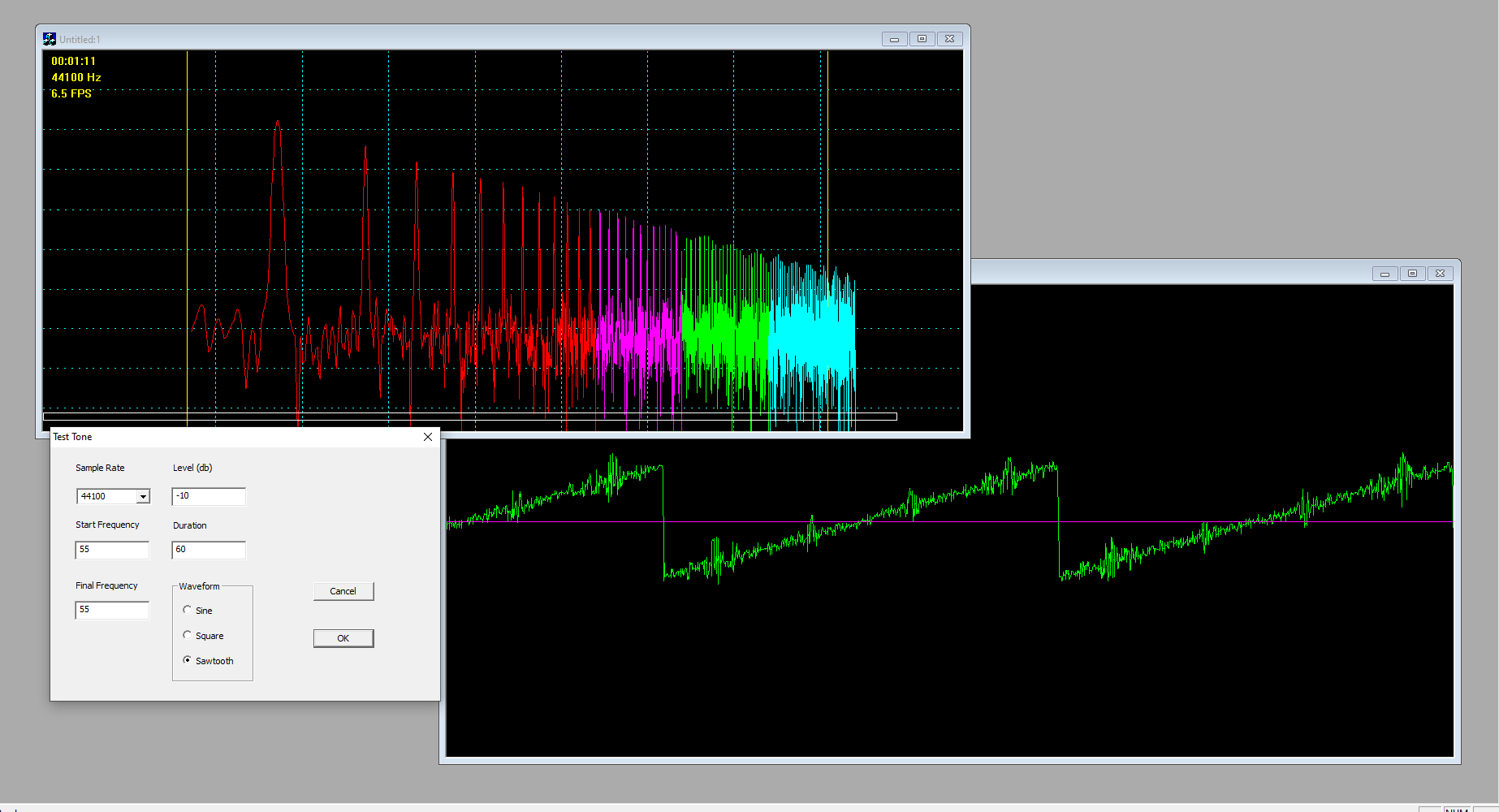

Yet we also need to be able to handle wavelet data when processing speech or music, or when experimenting with the spectral properties of different kinds of noise, for example.
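To make the wavelet idea concrete, here is a small pure-Python sketch. The choice of the Haar wavelet is my assumption, picked only because it is the simplest one, and the names `haar_step`, `haar_inverse`, and `detail_fraction` are invented for this example. It splits a signal into a smooth half and a detail half, then compares how much energy lands in the detail half for white noise versus its running sum (a rough stand-in for brown noise).

```python
import math
import random


def haar_step(signal):
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    pairs = [(signal[i], signal[i + 1]) for i in range(0, len(signal), 2)]
    approx = [(a + b) / s for a, b in pairs]
    detail = [(a - b) / s for a, b in pairs]
    return approx, detail


def haar_inverse(approx, detail):
    """Rebuild the original samples from one level of Haar coefficients."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out


def detail_fraction(signal):
    """Share of the signal energy that ends up in the detail coefficients."""
    approx, detail = haar_step(signal)
    e_approx = sum(a * a for a in approx)
    e_detail = sum(d * d for d in detail)
    return e_detail / (e_approx + e_detail)


rng = random.Random(0)  # fixed seed so runs are repeatable
white = [rng.gauss(0.0, 1.0) for _ in range(1024)]

# Running sum of white noise: a crude brown-noise approximation.
brown, total = [], 0.0
for w in white:
    total += w
    brown.append(total)

print(f"white: {detail_fraction(white):.3f}  brown: {detail_fraction(brown):.3f}")
```

White noise spreads its energy about evenly between the two halves, while the running sum keeps most of it in the smooth half. Applying `haar_step` again to the approximation gives the next coarser level.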

Of course, if you have ever tried writing your own DOOM WAD editor from scratch, you might be on the right track to creating your own AI, one which can either learn to play DOOM, or else just might be able to create an infinite number of DOOM-like worlds. Of course, we all want so much more, don't we?

Alright then, first let's take a peek at some of the DeepSeek source code and see for ourselves if we can figure out just what exactly it is doing, just in case we want a noise expert, or a gear expert, or something else altogether!

Are you ready, silly rabbit?

```python
class MoE(nn.Module):
    """
    Mixture-of-Experts (MoE) module.

    Attributes:
        dim (int): Dimensionality of input features.
        n_routed_experts (int): Total number of experts in the model.
        n_local_experts (int): Number of experts handled locally in distributed systems.
        n_activated_experts (int): Number of experts activated for each input.
        gate (nn.Module): Gating mechanism to route inputs to experts.
        experts (nn.ModuleList): List of expert modules.
        shared_experts (nn.Module): Shared experts applied to all inputs.
    """
    def __init__(self, args: ModelArgs):
        """
        Initializes the MoE module.

        Args:
            args (ModelArgs): Model arguments containing MoE parameters.
        """
        super().__init__()
        self.dim = args.dim
        assert args.n_routed_experts % world_size == 0
        self.n_routed_experts = args.n_routed_experts
        self.n_local_experts = args.n_routed_experts // world_size
        self.n_activated_experts = args.n_activated_experts
        self.experts_start_idx = rank * self.n_local_experts
        self.experts_end_idx = self.experts_start_idx + self.n_local_experts
        self.gate = Gate(args)
        self.experts = nn.ModuleList([Expert(args.dim, args.moe_inter_dim) if self.experts_start_idx <= i < self.experts_end_idx else None
                                      for i in range(self.n_routed_experts)])
        self.shared_experts = MLP(args.dim, args.n_shared_experts * args.moe_inter_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        """
        Forward pass for the MoE module.

        Args:
            x (torch.Tensor): Input tensor.

        Returns:
            torch.Tensor: Output tensor after expert routing and computation.
        """
        shape = x.size()
        x = x.view(-1, self.dim)
        weights, indices = self.gate(x)
        y = torch.zeros_like(x)
        counts = torch.bincount(indices.flatten(), minlength=self.n_routed_experts).tolist()
        for i in range(self.experts_start_idx, self.experts_end_idx):
            if counts[i] == 0:
                continue
            expert = self.experts[i]
            idx, top = torch.where(indices == i)
            y[idx] += expert(x[idx]) * weights[idx, top, None]
        z = self.shared_experts(x)
        if world_size > 1:
            dist.all_reduce(y)
        return (y + z).view(shape)
```
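To check my own reading of that forward pass, here is a toy version in plain Python with no torch at all. It is only a sketch under loud assumptions: the `Gate` class is not shown above, so I am pretending it is a plain softmax followed by a top-k pick, and the "experts" are just hypothetical functions that scale a vector. The names `top_k_gate`, `moe_forward`, and `local_expert_range` are mine, not DeepSeek's.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(scores, k):
    """Assumed gate: softmax, then keep the k most probable experts."""
    probs = softmax(scores)
    indices = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [probs[i] for i in indices], indices


def local_expert_range(rank, world_size, n_routed_experts):
    """Expert indices owned by one rank, mirroring experts_start_idx/end_idx."""
    assert n_routed_experts % world_size == 0
    n_local = n_routed_experts // world_size
    return range(rank * n_local, (rank + 1) * n_local)


def moe_forward(tokens, gate_scores, experts, shared_expert, k):
    """Weighted sum of each token's top-k experts, plus the shared expert."""
    routed = [top_k_gate(scores, k) for scores in gate_scores]
    y = [[0.0] * len(tok) for tok in tokens]
    # Loop over experts, as the original does, and visit only tokens routed to each.
    for i, expert in enumerate(experts):
        for t, (weights, indices) in enumerate(routed):
            if i not in indices:
                continue
            w = weights[indices.index(i)]
            out = expert(tokens[t])
            y[t] = [a + w * b for a, b in zip(y[t], out)]
    z = [shared_expert(tok) for tok in tokens]
    return [[a + b for a, b in zip(yt, zt)] for yt, zt in zip(y, z)]


# Hypothetical experts: expert i multiplies its input by (i + 1).
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
shared = lambda v: list(v)  # shared expert: identity

tokens = [[1.0, -2.0]]
gate_scores = [[math.log(1), math.log(1), math.log(2), math.log(4)]]
print(moe_forward(tokens, gate_scores, experts, shared, k=2))
print(list(local_expert_range(rank=1, world_size=4, n_routed_experts=8)))
```

With these scores the gate probabilities come out to 1/8, 1/8, 1/4, and 1/2, so the token goes to the last two experts, and the result is their weighted outputs plus the shared expert's copy of the input. In the real module each rank computes only its own slice of experts, and `dist.all_reduce(y)` adds the partial sums together.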

Well, for whatever it is worth, I must say that it certainly appears to make at least some sense. OK, I sort of get the idea. What now? Install the latest Python on my Windows machine and see how I can feed this thing some noise to train on? Or how about some metadata from an audio-in, sheet-music-out application from years ago?

glgorman
