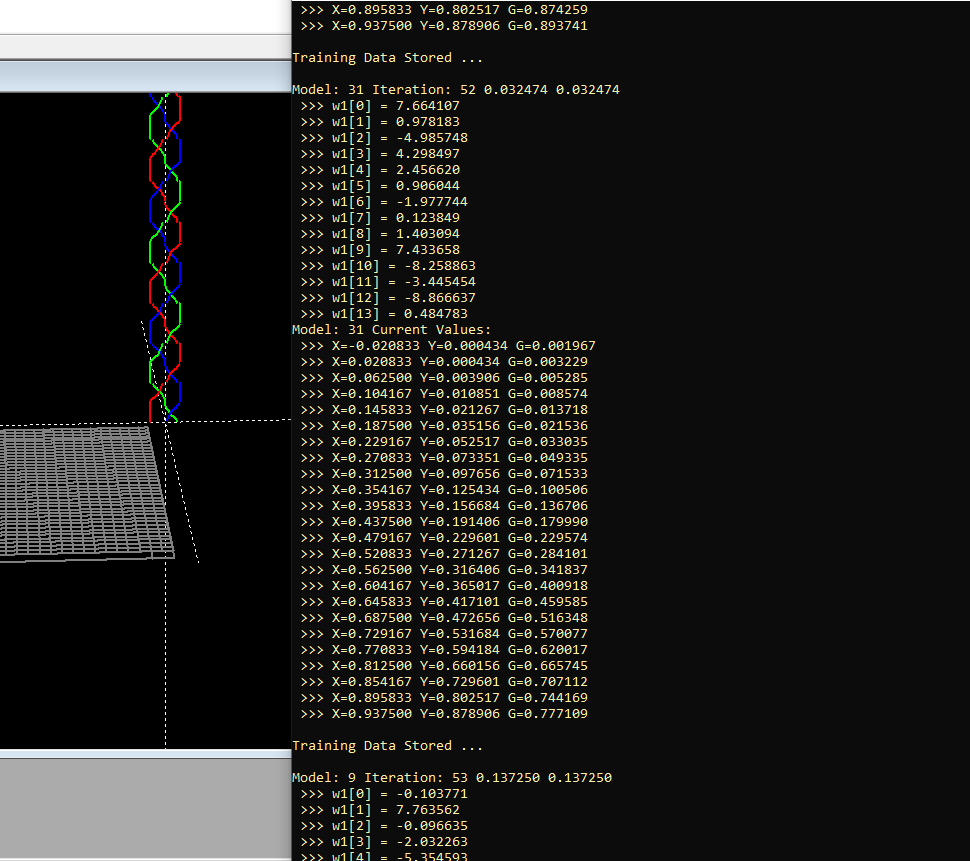

Well, for whatever it is worth, I have started work on parallelizing the genetic algorithm that trains a neural network to approximate a mathematical function. Even though I haven't yet run it as a multi-threaded process, the results are already looking quite promising!

An interesting question therefore arises: is it better to train a single genetic neural network for, let's say, 2048 iterations, or to run, let's say, 32 models side by side while iterating over each model 64 times? Either way, that is 2048 training steps in total. So far it appears that allowing multiple genetic models to compete against each other, as in an evolutionary metaphor, is the better way to go, at least for the time being. Interestingly enough, since none of this runs on multiple threads yet, I think that if memory constraints permit, this code just might be able to be back-ported to whatever legacy hardware might be of interest.
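
To make the budget question concrete, here is a rough sketch of how the two setups could be compared on equal terms. Everything in it is an assumption made for illustration: `toy_model`, the cubic polynomial, the `sin(x)` target and the mutation size are stand-ins, not the actual `neural::ann` code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

// Hypothetical stand-in for one genetic model: a cubic polynomial whose
// four coefficients are mutated at random, keeping a mutation only if
// it lowers the squared error against sin(x) on [0, 1].
struct toy_model {
    std::vector<double> w = std::vector<double>(4, 0.0);
    double best_error = 0.0;
    std::mt19937 rng;

    explicit toy_model(unsigned seed) : rng(seed) { best_error = error(w); }

    static double error(const std::vector<double>& c) {
        double sum = 0.0;
        for (int i = 0; i <= 16; i++) {
            double x = i / 16.0;
            double y = c[0] + x * (c[1] + x * (c[2] + x * c[3]));
            double d = y - std::sin(x);
            sum += d * d;
        }
        return sum;
    }

    // One training step: mutate a copy, keep it only if it improves.
    bool train() {
        std::normal_distribution<double> noise(0.0, 0.1);
        std::vector<double> trial = w;
        for (double& c : trial) c += noise(rng);
        double e = error(trial);
        if (e >= best_error) return false;
        w = trial;
        best_error = e;
        return true;
    }
};

// Spend models * iterations training steps and return the lowest error
// reached by any model in the population.
double best_of_population(int models, int iterations, unsigned seed) {
    assert(models > 0 && iterations >= 0);
    std::vector<toy_model> pop;
    for (int m = 0; m < models; m++) pop.emplace_back(seed + m);
    for (int n = 0; n < iterations; n++)
        for (auto& p : pop) p.train();
    double best = pop[0].best_error;
    for (const auto& p : pop) best = std::min(best, p.best_error);
    return best;
}
```

Calling `best_of_population(1, 2048, seed)` and `best_of_population(32, 64, seed)` spends the same 2048 training steps both ways, so the two returned errors can be compared directly over a range of seeds. Which one comes out ahead on a toy like this says nothing definite about the real networks.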

O.K., then - for now the code is starting to look like this, and soon I will most likely be putting some of this up on GitHub, just as soon as I iron out a few kinks.

    int atari::main()
    {
        int t1,t2;
        int iter = ITERATIONS;
        int m,n;

        // first initialize the datasets, one per model
        vector<neural::dataset> datasets;
        datasets.resize (NUMBER_OF_MODELS);
        for (n=0;n<(int)datasets.size();n++)
            datasets[n].initialize (NP);

        // now construct the neural networks, each bound to its own dataset
        vector<neural::ann> models;
        models.resize (NUMBER_OF_MODELS);
        for (n=0;n<(int)models.size();n++)
        {
            models[n].bind (&datasets[n]);
            models[n].init (n,iter);
        }

        t1 = GetTickCount();
        bool result;
        models[0].report0(false);

        // one generation at a time, give every model a single training step
        for (n=0;n<iter;n++)
        for (m=0;m<(int)models.size();m++)
        {
            result = models[m].train (n);
            // a true result is what triggers storing and reporting a solution
            if (result==true)
            {
                models[m].store_solution(n);
                models[m].report(n);
            }
        }

        t2 = GetTickCount();
        writeln(output);
        writeln (output,"Training took ",(t2-t1)," msec.");
        models[0].report1();
        models[0].report0(true);
        return 0;
    }
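
Since each model in the listing is bound to its own dataset, the eventual multi-threaded version could plausibly hand each model to its own worker. The following is only a sketch of that idea under that assumption: `stub_model` is a made-up placeholder, and only the shape of the `train (n)` call is borrowed from the listing.

```cpp
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical stand-in for neural::ann; only the train(n) call shape
// is taken from the listing.
struct stub_model {
    int improvements = 0;
    // Pretend every fourth generation yields an improvement.
    bool train(int n) { return n % 4 == 0; }
};

// Run every model for `iter` generations, one worker thread per model.
// This is safe only because each worker touches nothing but its own model.
void train_all(std::vector<stub_model>& models, int iter) {
    assert(iter >= 0);
    std::vector<std::thread> workers;
    workers.reserve(models.size());
    for (auto& model : models) {
        workers.emplace_back([&model, iter] {
            for (int n = 0; n < iter; n++)
                if (model.train(n)) model.improvements++;
        });
    }
    // Wait for every worker before reading any results.
    for (auto& w : workers) w.join();
}
```

Note that this swaps the loop order: each model runs through all of its generations on its own, instead of all models advancing one generation at a time. That only works while the models do not interact, and any reporting that writes to a shared output would need to be serialized, for example with a `std::mutex`.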

It's really not all that much more complicated than the code that DeepSeek generated: mainly some heavy refactoring, breaking things out into separate classes, etc. Yet a whole bunch of other stuff comes to mind. On the one hand, it would almost be a one-liner at this point to try this on CUDA. On the other hand, there is the idea of getting under the hood of how DeepSeek works, since the question always comes to mind of how one might train an actual DOOM expert, or a hair expert for that matter, that could run in the DeepSeek environment.

Stay Tuned! Obviously, there should be some profound implications as to what might be accomplished next. Here are some ideas that I am thinking of right now:

- While evolving multiple models in parallel, I could perhaps make some kind of "leader board" that looks like the current rank table for gainers or losers on the NASDAQ, or the current leaders in the Indy 500. Easy to do Linux style, if I make a view that looks like what you see when you do a SHOW PROCESSLIST in MySQL or ps in Linux, for example.

- What if, whenever a model shows an "improvement" over its previous best result, I not only store the results but also make a clone of that particular model, so that there would now be two copies of that improved model evolving side by side?

- If there were a quota on the maximum number of fish that could be in the pond, so to speak, then some weaker models could be terminated - obviously, leading to a "survival of the fittest" metaphor.

- Likewise, what if I try combining multiple models, by taking two models that each have, let's say, 8 neurons and combining them into a single network that might have 16 neurons? Or we could try deleting neurons from a model, so as to do some damage, and then give those mutations a short period of time to adapt or die.
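
The leader board, cloning and quota ideas from the list above could be sketched roughly as follows. The `entry` struct and all three function names are hypothetical, and the sketch assumes each model can be summarized by a single error value where lower is better.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical summary of one model; `error` is lower-is-better.
struct entry {
    int id;
    double error;
};

// Leader board: order the pond from best (lowest error) to worst.
// stable_sort keeps models with equal error in their existing order.
void rank_pond(std::vector<entry>& pond) {
    std::stable_sort(pond.begin(), pond.end(),
        [](const entry& a, const entry& b) { return a.error < b.error; });
}

// Clone an improved model so that two copies can evolve side by side.
// Returns the id given to the clone.
int clone_improved(std::vector<entry>& pond, std::size_t index, int next_id) {
    assert(index < pond.size());
    entry copy = pond[index];
    copy.id = next_id;
    pond.push_back(copy);
    return copy.id;
}

// Enforce the quota: rank the pond, then drop the weakest models.
void cull(std::vector<entry>& pond, std::size_t quota) {
    rank_pond(pond);
    if (pond.size() > quota) pond.resize(quota);
}
```

In a real run the clone would also need its own copy of the network weights and a different random seed, otherwise the two copies would evolve identically. Printing the pond after `rank_pond` gives the leader board view.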

What seems surprising, nonetheless, is how much can be accomplished with so little code. I think that what I have just described should work on any of a number of common micro-controllers, in addition to being able to run on some of the more massively parallel and distributed super-computing environments that we usually think of when we think about AI.

glgorman
