Problems

- We've seen in the main details of this project that the same source code produces different build sizes depending on the build environment.

- When the build no longer fits into the Flash, the whole concept of dev kit selection breaks down and we have to opt for a more expensive device; in the case of the Bluepill, that can really hurt.

Solution

- Start by looking inside the build result, beginning with a size summary:

`arm-none-eabi-size -B -d -t .\Bluepill_hello.elf`

| text | data | bss | dec | hex | filename |
|---|---|---|---|---|---|
| 55240 | 2496 | 872 | 58608 | e4f0 | .\Bluepill_hello.elf |
| 55240 | 2496 | 872 | 58608 | e4f0 | (TOTALS) |

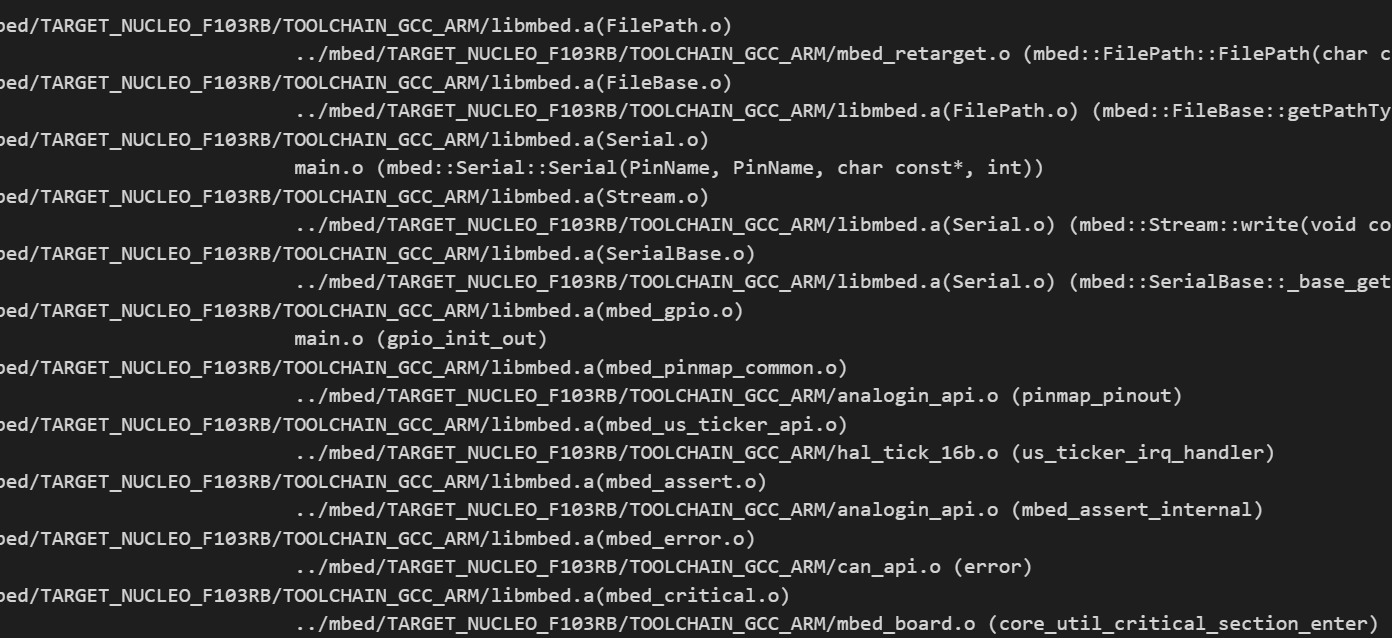
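The totals mean more when compared with the chip. As a rough sketch, assuming the Bluepill's STM32F103C8 with 64 KiB of Flash and 20 KiB of SRAM (check your own part), Flash has to hold `text + data` (the initial values of `.data` are stored in Flash and copied to RAM at startup) while RAM holds `data + bss`. The helper names here are illustrative only:

```python
# Budget check for the arm-none-eabi-size figures (Berkeley format, decimal).
# Assumption: STM32F103C8 on the Bluepill, 64 KiB Flash and 20 KiB SRAM.
FLASH_BYTES = 64 * 1024
RAM_BYTES = 20 * 1024


def parse_size_line(line):
    """Parse one Berkeley-format row: text data bss dec hex filename."""
    text, data, bss, dec, _hex, name = line.split(maxsplit=5)
    return {"text": int(text), "data": int(data), "bss": int(bss),
            "dec": int(dec), "file": name}


def budget(row):
    """Return (flash_bytes, ram_bytes) used by one size row."""
    # .data initializers live in Flash and are copied to RAM at startup,
    # so Flash holds text + data while RAM holds data + bss.
    flash = row["text"] + row["data"]
    ram = row["data"] + row["bss"]
    return flash, ram


row = parse_size_line(r"55240 2496 872 58608 e4f0 .\Bluepill_hello.elf")
flash, ram = budget(row)
print(f"Flash: {flash} B ({100 * flash / FLASH_BYTES:.1f} % of 64 KiB)")
print(f"RAM:   {ram} B ({100 * ram / RAM_BYTES:.1f} % of 20 KiB)")
```

With the figures above this gives 57736 bytes of Flash, roughly 88 % of 64 KiB, which leaves little headroom for the next feature.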

Yes, but that does not help much: it gives totals, not where the bytes come from. The next level of detail is the map file.

- First, you have to generate it by passing the right option to the linker: the `-Map` below.

LD_FLAGS :=-Wl,-Map=output.map -Wl,--gc-sections -Wl,--wrap,main -Wl,--wrap,exit -Wl,--wrap,atexit -mcpu=cortex-m3 -mthumb
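The `-Wl,` prefix is what carries `-Map` to the linker: the GCC driver forwards the text after `-Wl,` to `ld`, splitting it at commas. The Python sketch below (purely illustrative, not part of any build tool; `linker_args` is a hypothetical helper) mimics that split on the `LD_FLAGS` line, to show which options actually reach `ld`:

```python
import shlex

# The linker flags from the LD_FLAGS line.
LD_FLAGS = ("-Wl,-Map=output.map -Wl,--gc-sections -Wl,--wrap,main "
            "-Wl,--wrap,exit -Wl,--wrap,atexit -mcpu=cortex-m3 -mthumb")


def linker_args(flags):
    """Mimic how the gcc driver forwards -Wl, options: split at commas."""
    forwarded = []
    for flag in shlex.split(flags):
        if flag.startswith("-Wl,"):
            forwarded.extend(flag[len("-Wl,"):].split(","))
    return forwarded


print(linker_args(LD_FLAGS))
```

`-mcpu=cortex-m3 -mthumb` are not `-Wl,` options, so they are handled by the driver itself. GNU ld's `--wrap symbol` makes undefined references to `symbol` resolve to `__wrap_symbol`, while references to `__real_symbol` resolve to the original `symbol`; this is how these flags let the build hook `main`, `exit` and `atexit`.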

- Basically, we're done, but the result is so ugly: how could any human understand anything?

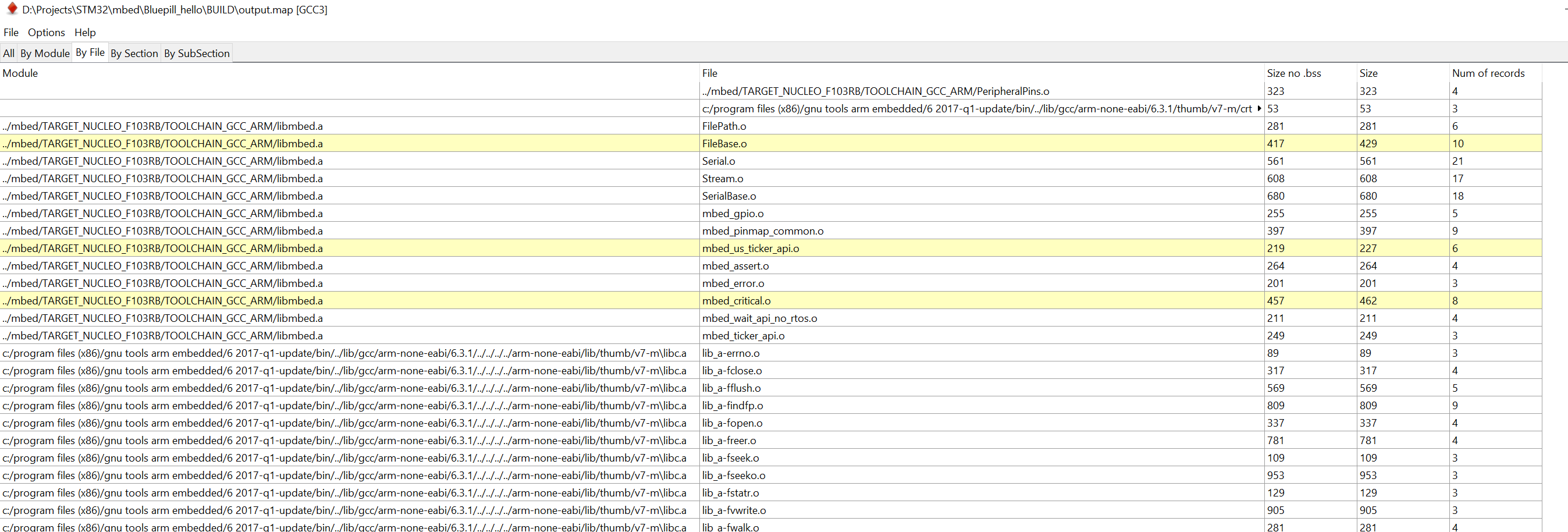

- Fortunately, some smart people thought about this problem and provided an out-of-the-box, easy-to-use solution that displays the details at every level (libraries, modules, ...): the magic AMAP tool from Sikorskiy.

I just had to drag and drop the map file. It lives up to the "intuitive" label, and also to "flexibility", since it can be driven from the command line. It shows sizes by module, by file, and by section.
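AMAP's internals are not described here, but the core idea of a per-module breakdown can be sketched in a few lines of Python. The sketch assumes a GNU ld map file, where each input section appears as ` .section  0xADDRESS  0xSIZE  path`, and groups sizes by archive (`libc.a(member.o)` counts toward `libc.a`) or by object file. It skips the "Discarded input sections" list at the top of the map (sections the linker dropped, for example with `--gc-sections`) and by default counts only sections that occupy Flash. `SAMPLE_MAP` is a made-up excerpt, not taken from this project's `output.map`:

```python
import re
from collections import defaultdict

# One input-section line of a GNU ld map file:
#   " .section  0xADDRESS  0xSIZE  path/to/file.o"  or  "...lib.a(member.o)"
ENTRY = re.compile(r"^\s*(\.\S+)\s+0x([0-9a-fA-F]+)\s+0x([0-9a-fA-F]+)\s+(\S.*)$")

# Sections that occupy Flash: code, constants, and the initial values of .data
FLASH_PREFIXES = (".text", ".rodata", ".data")


def module_of(path):
    """Attribute 'dir/libc.a(member.o)' to 'libc.a', 'dir/main.o' to 'main.o'."""
    archive = path.strip().replace("\\", "/").split("(", 1)[0]
    return archive.rsplit("/", 1)[-1]


def sizes_by_module(lines, prefixes=FLASH_PREFIXES):
    """Sum input-section sizes per archive or object file."""
    totals = defaultdict(int)
    started = False   # skip "Discarded input sections" at the top of the map
    pending = None    # long section names push address/size onto the next line
    for raw in lines:
        line = raw.rstrip()
        if not started:
            started = line.startswith("Linker script and memory map")
            continue
        if pending is not None:
            line = pending + " " + line.strip()
            pending = None
        match = ENTRY.match(line)
        if match is None:
            stripped = line.strip()
            if stripped.startswith(".") and len(stripped.split()) == 1:
                pending = " " + stripped
            continue
        section, _address, size, path = match.groups()
        if section.startswith(prefixes):
            totals[module_of(path)] += int(size, 16)
    return dict(totals)


# Hypothetical excerpt, for illustration only (not from this project's map).
SAMPLE_MAP = """\
Discarded input sections
 .text          0x00000000       0x40 main.o
Linker script and memory map
.text           0x08000000     0x1000
 .text          0x08000000       0x80 main.o
 .text.memcpy   0x08000080      0x120 /opt/arm/lib/thumb/v7-m/libc.a(lib_a-memcpy.o)
 .text._ZN4mbed6TickerD2Ev
                0x080001a0       0x20 BUILD/mbed/Ticker.o
 .rodata.str1.4 0x080001c0       0x40 /opt/arm/lib/thumb/v7-m/libc.a(lib_a-vfprintf.o)
 .bss           0x20000000       0x10 main.o
"""

sizes = sizes_by_module(SAMPLE_MAP.splitlines())
for module, size in sorted(sizes.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{size:8d}  {module}")
```

To try it on a real build, feed it `open("output.map").read().splitlines()`; long (for example C++ mangled) section names that push the address onto the next line are joined back before matching.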

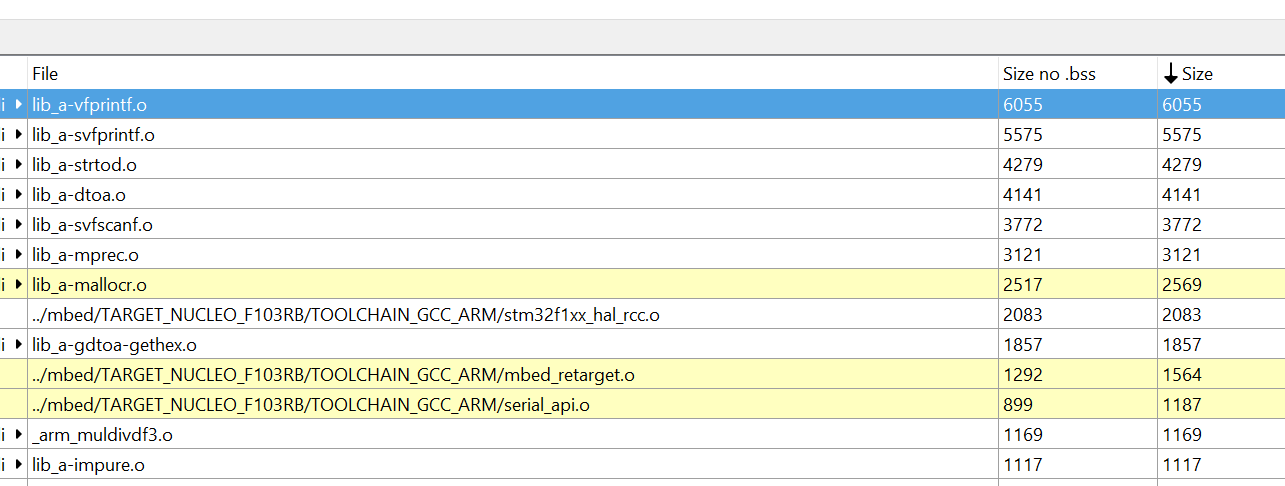

It can also sort the results by size.

Conclusion

- Contrary to my expectations, the memory was not eaten by mbed but by libc.a.

- AMAP is a great tool to figure out what went wrong in the build chain.

- Bad news here: if you were expecting optimization flags alone to do all the magic for you, that does not seem to be the case, and it raises many questions:

- Do we rebuild the library or do we link it as is? And that leads to more questions:

- Which "magical" build toolchain are we actually using?

- What does PlatformIO do behind the scenes, and how does the mbed online compiler generate its makefile?

- Finally, to resolve all of these questions, I'll try to stick with more standard build chains that I can understand, rather than easy-to-use ones that are much harder to modify than the well-documented standard ones.

Wassim


Discussions


Did you figure this out? I'm digging into compiler and linker flags; it seems `mbed` wraps some memory management functions and links *a lot* more files ...


If you're interested in reducing the size, I obtained the largest reductions with -Os and nano.specs; see this develop_nano.json from my repo: https://github.com/HomeSmartMesh/IoT_Frameworks/blob/master/stm32_rf_dongle/rf_uart_interface/develop_nano.json
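One way to confirm which C library actually ended up in the image is to look for the archive names in the map file: with `--specs=nano.specs`, the toolchain links newlib-nano's `libc_nano.a` instead of the full `libc.a`. The helper below is a minimal sketch under that assumption; `libc_variants` is a hypothetical name and the sample lines are made up for illustration:

```python
import re

# Matches archive members such as ".../libc.a(lib_a-memcpy.o)"
# or ".../libc_nano.a(lib_a-memcpy.o)" in a GNU ld map file.
LIBC_MEMBER = re.compile(r"\b(libc(?:_nano)?\.a)\(")


def libc_variants(map_text):
    """Return the set of libc archives that contributed members to the link."""
    return set(LIBC_MEMBER.findall(map_text))


full = " .text.memcpy 0x08000080 0x120 /opt/arm/lib/libc.a(lib_a-memcpy.o)"
nano = " .text.memcpy 0x08000080 0x20 /opt/arm/lib/libc_nano.a(lib_a-memcpy.o)"
print(libc_variants(full), libc_variants(nano))
```

If the full `libc.a` still shows up after adding nano.specs, the option most likely did not reach the link step.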

It is true that mbed, as an OS, wraps all of the functions heavily. I did not look into the memory management, but the PWM, for example, was at least 1000 times slower than it could be.

Here is the example using the default mbed PWM functions:

https://github.com/STM32Libs/rfpio-board/blob/master/rfpwm.cpp

and here is the version with direct register access:

https://github.com/STM32Libs/light-dimmer/blob/master/dimm.cpp

So why would someone use an OS, then? Well, if you are interested in extreme optimization, you probably should not use an OS. The OS must wrap all functions so that they are hardware-independent and thread safe (callable from different tasks), and that is where the price is paid: much slower and bigger wrapped functions.
