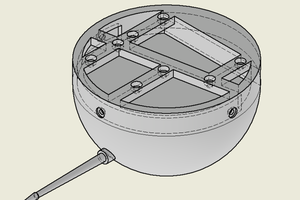

This project takes distance readings from ultrasonic sensors and translates them into MIDI notes, which are then played by FluidSynth, a software synthesizer running on the Raspberry Pi.
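Ultrasonic sensors don't report distance directly. They report how long an echo took to come back. The sketch below assumes HC-SR04-style sensors, where the width of the echo pulse (in microseconds) is measured and converted to centimetres. The sensor model and the function name `echo_to_distance_cm` are illustrative assumptions, not taken from the project's source.

```rust
/// Speed of sound in air at roughly 20 °C, in centimetres per microsecond.
const SPEED_OF_SOUND_CM_PER_US: f64 = 0.0343;

/// Convert the width of an ultrasonic echo pulse (in microseconds) into a
/// distance in centimetres. The pulse covers the round trip to the obstacle
/// and back, so the one-way distance is half of the total path.
fn echo_to_distance_cm(echo_us: f64) -> f64 {
    echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0
}

fn main() {
    // A 1166 µs echo corresponds to roughly 20 cm.
    let d = echo_to_distance_cm(1166.0);
    println!("{:.1} cm", d);
}
```

The halving step is easy to forget. Without it, every reading comes out twice as far away as the hand actually is.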

Here's a demo of the Ultrasonic Pi Piano configured to play a different instrument for each sensor. The distance from the sensor determines the note that gets played.
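One simple way to turn a distance into a note is to split a playable range into equal-width bands and assign one scale degree to each band. This is a minimal sketch that assumes a hypothetical 5 to 45 cm range and a C major scale starting at middle C (MIDI note 60). The project's actual range, scale, and mapping may differ.

```rust
/// Hypothetical playable range in centimetres; readings outside it are ignored.
const MIN_CM: f64 = 5.0;
const MAX_CM: f64 = 45.0;

/// One octave of the C major scale starting at middle C (MIDI note 60).
const SCALE: [u8; 8] = [60, 62, 64, 65, 67, 69, 71, 72];

/// Map a distance reading to a MIDI note number by splitting the playable
/// range into equal-width bands, one band per scale degree.
/// Returns `None` (silence) for readings outside the range, including NaN.
fn distance_to_note(distance_cm: f64) -> Option<u8> {
    if !(MIN_CM..MAX_CM).contains(&distance_cm) {
        return None;
    }
    let band_width = (MAX_CM - MIN_CM) / SCALE.len() as f64;
    let index = ((distance_cm - MIN_CM) / band_width) as usize;
    SCALE.get(index).copied()
}

fn main() {
    for d in [4.0, 7.5, 22.0, 44.9, 60.0] {
        match distance_to_note(d) {
            Some(note) => println!("{d} cm -> note {note}"),
            None => println!("{d} cm -> silent"),
        }
    }
}
```

Quantizing to a scale rather than mapping distance continuously onto pitch keeps small wobbles in the sensor readings from producing out-of-tune or constantly changing notes.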

Here's another video showing a single instrument being played:

Since the Pi runs headless (no keyboard or monitor attached), I wanted a way to change instruments without adding extra inputs. So I implemented a very basic form of gesture control: covering two of the sensors for a few seconds switches to a different instrument. Here is a full demonstration of the completed version:
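The gesture can be modelled as a small state machine. The detector remembers when both sensors first became covered, fires once the hold time has elapsed, and then waits for a release before it can fire again. When it fires, it emits a standard MIDI Program Change message (status byte `0xC0` for channel 1, followed by the program number). The "covered" threshold (3 cm), the 2-second hold, and the `GestureDetector` type are hypothetical choices for illustration, not values from the project.

```rust
use std::time::Duration;

/// Readings closer than this (in cm) count as "covered". Hypothetical value.
const COVERED_CM: f64 = 3.0;
/// How long both sensors must stay covered before the instrument changes. Hypothetical.
const HOLD: Duration = Duration::from_secs(2);

/// Detects the "cover two sensors" gesture and cycles through MIDI programs 0..=127.
struct GestureDetector {
    covered_since: Option<Duration>, // when both sensors first became covered
    fired: bool,                     // already switched during this hold?
    program: u8,                     // current MIDI program number
}

impl GestureDetector {
    fn new() -> Self {
        Self { covered_since: None, fired: false, program: 0 }
    }

    /// Feed one pair of readings taken at time `now` (time since start).
    /// Returns a MIDI Program Change message when the gesture completes.
    fn update(&mut self, now: Duration, a_cm: f64, b_cm: f64) -> Option<[u8; 2]> {
        let covered = a_cm < COVERED_CM && b_cm < COVERED_CM;
        if !covered {
            // Gesture released: reset so the next hold can trigger again.
            self.covered_since = None;
            self.fired = false;
            return None;
        }
        let start = *self.covered_since.get_or_insert(now);
        if !self.fired && now - start >= HOLD {
            self.fired = true;
            self.program = (self.program + 1) % 128;
            // Program Change on MIDI channel 1: status 0xC0, then the program number.
            return Some([0xC0, self.program]);
        }
        None
    }
}

fn main() {
    let mut g = GestureDetector::new();
    let ms = Duration::from_millis;
    // Both sensors covered from t = 0 until t = 2500 ms, then released.
    for t in (0..=3000).step_by(500) {
        let d = if t <= 2500 { 1.0 } else { 30.0 };
        if let Some(msg) = g.update(ms(t), d, d) {
            println!("t = {t} ms: program change {:02X?}", msg);
        }
    }
}
```

In this run, the detector fires once, at the 2000 ms sample. Requiring a release before the next change stops a long hold from racing through every instrument.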

Detailed instructions for this project are available on Instructables, and the source code (written in Rust) is available at https://github.com/TheGizmoDojo/UltrasonicPiPiano

Andy Grove
