Good sound from bad

Let's use machine learning to make a bad speaker make good sound.


It's going well... Prize here I come!

So lots of people have asked me this...

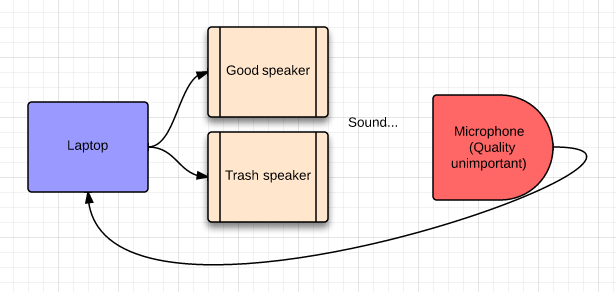

It turns out in my diagram in the previous update that the microphone quality doesn't matter. Here's why:

I play a sound x through the good speaker (G), record it with the shitty microphone (M). I then do the same with the modified sound y and bad speaker (B), and analyse the difference.

Turns out I'm analysing:

M(G(x)) - M(B(y))

And since M is present in both terms, the eventual result after many repeats will be:

M(G(x)) ~= M(B(y))

G(x) ~= B(y)

So the microphone got cancelled out! (Note this is only strictly true if function M is invertible, but we'll assume it approximately is...)
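Here's a toy numerical check of that argument. It's only a sketch: the three functions are made-up stand-ins (an ideal G, a slightly nonlinear B, and a compressing but strictly increasing, hence invertible, M), and `find_corrected_sample` is a hypothetical helper that nudges y using nothing but the difference between the two microphone readings.

```python
import math

def good_speaker(v):
    # G: assumed ideal for this sketch, passes the sample through unchanged
    return v

def bad_speaker(v):
    # B: hypothetical bad speaker, too quiet and slightly nonlinear
    return 0.5 * v + 0.05 * v ** 3

def microphone(v):
    # M: hypothetical cheap microphone; it compresses loud sounds but is
    # strictly increasing, so it is invertible
    return math.tanh(0.8 * v) + 0.1 * v

def find_corrected_sample(x, steps=200, rate=1.0):
    # Adjust y using only what the microphone hears: M(G(x)) - M(B(y))
    y = 0.0
    for _ in range(steps):
        error = microphone(good_speaker(x)) - microphone(bad_speaker(y))
        y += rate * error
    return y

def worst_mismatch(samples):
    # Compare the true sounds G(x) and B(y), which the loop never saw directly
    return max(
        abs(good_speaker(x) - bad_speaker(find_corrected_sample(x)))
        for x in samples
    )

print(worst_mismatch([-1.0, -0.3, 0.0, 0.4, 1.0]))
```

The loop only ever sees microphone readings, yet the printed mismatch between G(x) and B(y) comes out tiny, which is the cancellation described above.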

So I've had a few questions about my idea involving "feedback", which is normally a bad thing in the audio world.

That's not always the case, and in fact it's very well researched. If you want to learn more, look here:

http://en.wikipedia.org/wiki/Nyquist_stability_criterion

In fact... Don't... Because that's one of those Wikipedia pages that's only good if you already have a PhD in the topic... Also, all that maths only applies to linear systems, which is the very assumption we don't want to make about our speaker!

The summary is this, though: once you think you've figured out what the speaker's doing (i.e. for a given input signal, you know what sound it should make and what sound it really makes, and hence the 'error'), you apply the inverse signal to cancel the error out. If the problem gets worse instead of better, you've fallen foul of the Nyquist stability criterion.

Luckily, *for a given frequency*, if this happens and you add the error instead of subtracting it, you're guaranteed to end up with a stable system... Magic...
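To see the sign flip in action, here's a minimal single-frequency sketch. Everything in it is an assumption for illustration: the speaker is reduced to one complex number (`response`, its gain and phase at that frequency), and `run_loop` is a hypothetical helper. It also assumes the step size is small and the response isn't exactly 90° out of phase, in which case neither sign helps in this toy model.

```python
def run_loop(response, correction_sign, rate=0.2, steps=50):
    # response: hypothetical complex gain of the speaker at one frequency
    # correction_sign: +1 for the usual correction, -1 for the flipped one
    target = 1 + 0j   # the phasor we want to hear
    drive = 0j        # the phasor we feed to the speaker
    for _ in range(steps):
        error = target - response * drive
        drive += correction_sign * rate * error
    # Size of the error that is left after all the corrections
    return abs(target - response * drive)

# A speaker whose phase shift at this frequency is more than 90 degrees
response = -0.8 + 0.3j

print("usual sign:  ", run_loop(response, +1))   # error keeps growing
print("flipped sign:", run_loop(response, -1))   # error shrinks
```

With this particular response the usual correction blows up and the flipped one settles down, all without knowing the phase shift in advance: just try one sign and swap if things get worse.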

I found my rubbish speaker... I found a microphone... I found a good speaker...

The goal is to play a sound with the good speaker, then play one with the rubbish speaker, record both, and take an FFT of each recording. Comparing the two spectra shows which frequencies differ the most.

Next, calculate a signal which will cancel the differences, and repeat the whole process with the new signal added in for the trash speaker only. Over time, the differences will diminish towards zero.
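The loop above can be sketched in simulation before touching any hardware. This is a rough sketch under loud assumptions: `bad_speaker` is a made-up linear filter (a real speaker is nonlinear, which is the whole point of the project), the microphone is left out, a naive DFT stands in for a proper FFT to keep it dependency-free, and `refine` is a hypothetical helper that cancels only the single worst frequency each round.

```python
import cmath
import math

N = 64  # samples per test burst, kept small so the naive DFT stays fast

def dft(signal):
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n)) for k in range(n)]

def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)).real / n for t in range(n)]

def good_speaker(signal):
    # Assumed ideal: reproduces the signal exactly
    return list(signal)

def bad_speaker(signal):
    # Hypothetical stand-in: quieter, with a one-sample smear (circular)
    return [0.6 * signal[t] + 0.3 * signal[t - 1] for t in range(len(signal))]

def residual(x, y):
    # Largest sample-by-sample difference between the two "recordings"
    return max(abs(g - b) for g, b in zip(good_speaker(x), bad_speaker(y)))

def refine(x, y):
    # Compare the spectra and find the frequency bin that differs the most
    diff = [g - b for g, b in zip(dft(good_speaker(x)), dft(bad_speaker(y)))]
    k = max(range(N // 2 + 1), key=lambda i: abs(diff[i]))
    # Build a cancelling signal at that frequency only (bin k and its mirror)
    correction = [0j] * N
    correction[k] = diff[k]
    correction[-k % N] = diff[k].conjugate()
    # Add it to the signal sent to the bad speaker
    return [s + c for s, c in zip(y, idft(correction))]

# Test sound: two tones that fit a whole number of cycles into the burst
x = [math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.sin(2 * math.pi * 7 * t / N)
     for t in range(N)]
y = list(x)                 # start by sending the unmodified sound
before = residual(x, y)
for _ in range(30):
    y = refine(x, y)
after = residual(x, y)
print(before, after)
```

In this simulated setup the difference shrinks with every round. Real hardware adds noise, delay, and nonlinearity, so expect to need averaging and a smaller correction step.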

Get the components, connect them together, and fire up MATLAB or Audacity to get going!
